Elke Hergenroether

Automated Bioacoustic Monitoring for South African Bird Species on Unlabeled Data

Jun 19, 2024

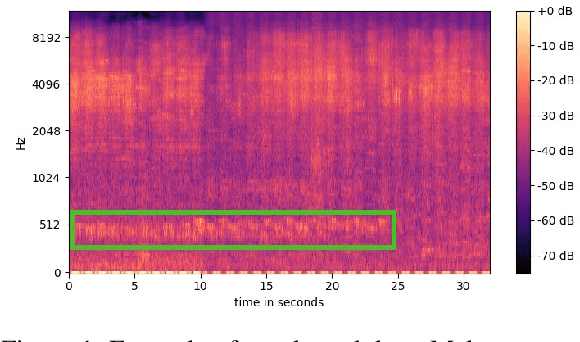

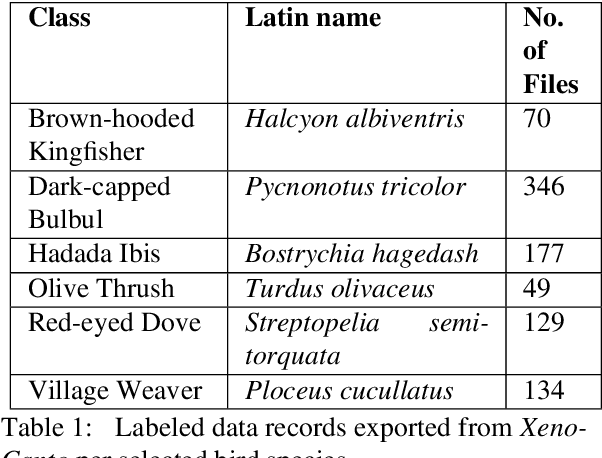

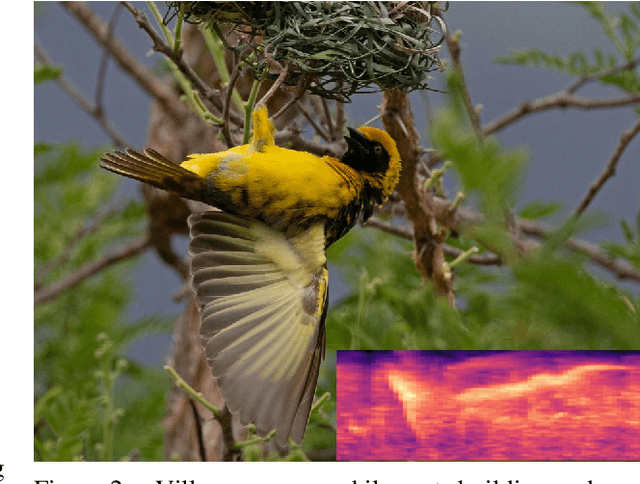

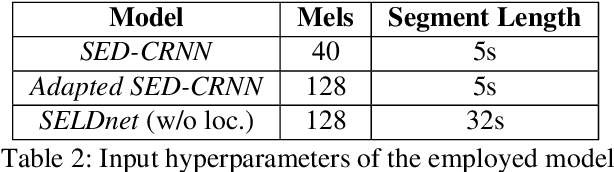

Abstract: Analysis of passive acoustic monitoring (PAM) recordings for biodiversity monitoring is time-consuming and challenged by the presence of background noise in the recordings. Existing models for sound event detection (SED) worked only on certain avian species, and the development of further models required labeled data. The developed framework automatically extracted labeled data from available platforms for selected avian species. The labeled data were embedded into recordings, including environmental sounds and noise, and were used to train convolutional recurrent neural network (CRNN) models. The models were evaluated on unprocessed real-world data recorded in urban KwaZulu-Natal habitats. The Adapted SED-CRNN model reached an F1 score of 0.73, demonstrating its efficiency under noisy, real-world conditions. The proposed approach to automatically extracting labeled data for chosen avian species enables easy adaptation of PAM to other species and habitats for future conservation projects.
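The embedding step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not specify how clips were mixed, so everything here is an assumption: the function names `rms` and `embed_call`, the RMS-based signal-to-noise definition, the synthetic noise and tone, and the label string are all hypothetical stand-ins rather than the authors' implementation.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def embed_call(background, call, onset, snr_db, sample_rate, label):
    """Mix `call` into `background` starting at sample index `onset`.

    Assumption: the call is scaled so that its RMS relative to the RMS of the
    whole background matches `snr_db`. Returns the mixture and one
    (start_s, end_s, label) annotation in seconds.
    """
    if onset < 0 or onset + len(call) > len(background):
        raise ValueError("call does not fit into the background recording")
    gain = rms(background) * 10 ** (snr_db / 20) / rms(call)
    mixture = list(background)
    for i, sample in enumerate(call):
        mixture[onset + i] += gain * sample
    annotation = (onset / sample_rate, (onset + len(call)) / sample_rate, label)
    return mixture, annotation


# Synthetic stand-ins: 2 s of low-level noise and a 0.5 s, 2 kHz tone as the "call".
sample_rate = 8000
rng = random.Random(0)
background = [rng.gauss(0.0, 0.05) for _ in range(2 * sample_rate)]
call = [math.sin(2 * math.pi * 2000 * n / sample_rate) for n in range(sample_rate // 2)]

mixture, annotation = embed_call(background, call, onset=4000, snr_db=6.0,
                                 sample_rate=sample_rate, label="hypothetical_species")
```

Because the onset and clip length are known at mixing time, the annotation comes for free, which is what makes this kind of synthetic training data attractive when no hand-labeled field recordings exist.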

* preprint

CNN Based Flank Predictor for Quadruped Animal Species

Jun 19, 2024

Abstract: The bilateral asymmetry of the flanks of animals whose visual body marks uniquely identify an individual complicates tasks such as population estimation. Automatically generated additional information on the visible side of the animal would improve the accuracy of individual identification. In this study, we used transfer learning on popular CNN image classification architectures to train a flank predictor that predicts the visible flank of quadruped mammalian species in images. We automatically derived the data labels from existing datasets originally labeled for animal pose estimation. We trained the models in two phases with different degrees of retraining. The developed models were evaluated in several scenarios with unknown quadruped species in known and unknown environments. As a real-world scenario, we used a dataset of manually labeled Eurasian lynx (Lynx lynx) from camera traps in the Bavarian Forest National Park to evaluate the model. The best model, trained on an EfficientNetV2 backbone, achieved an accuracy of 88.70% for the unknown species lynx in a complex habitat.
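The abstract does not describe how flank labels were derived from pose annotations. One plausible heuristic, shown purely as an illustration, compares the horizontal positions of two keypoints: for an upright animal in an unmirrored image, a head that lies to the left of the tail base means the left flank faces the camera. The keypoint names `nose` and `tail_base`, the function `visible_flank`, and the `min_offset` parameter are hypothetical and not taken from the paper.

```python
def visible_flank(keypoints, min_offset=0.0):
    """Guess the visible flank from 2D keypoints in image coordinates.

    `keypoints` maps hypothetical names to (x, y) pixels, with x growing to
    the right. Assumes an upright animal and an unmirrored image. Returns
    "left", "right", or None when the horizontal head-to-tail offset is too
    small to treat the view as a profile.
    """
    nose_x = keypoints["nose"][0]
    tail_x = keypoints["tail_base"][0]
    offset = nose_x - tail_x
    if abs(offset) <= min_offset:
        return None
    # Head on the image's left side: the animal's left flank faces the camera.
    return "left" if offset < 0 else "right"


facing_left = {"nose": (100, 220), "tail_base": (400, 230)}
facing_right = {"nose": (400, 220), "tail_base": (100, 230)}
frontal = {"nose": (250, 220), "tail_base": (255, 230)}

labels = [
    visible_flank(facing_left, min_offset=20),
    visible_flank(facing_right, min_offset=20),
    visible_flank(frontal, min_offset=20),
]
```

Returning `None` for near-frontal views keeps ambiguous images out of the training set instead of forcing them into one of the two classes.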

Automatic Individual Identification of Patterned Solitary Species Based on Unlabeled Video Data

Apr 19, 2023

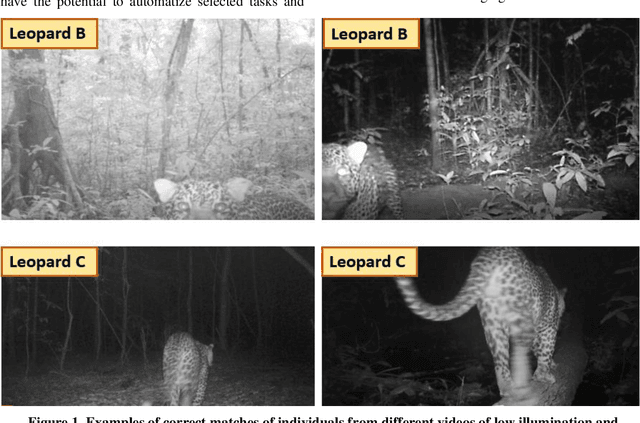

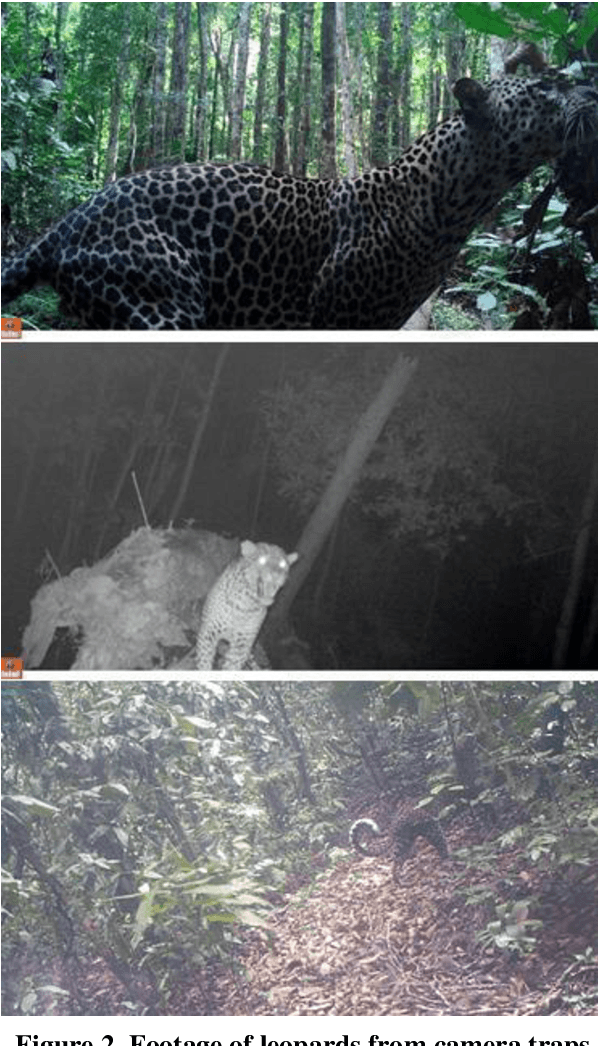

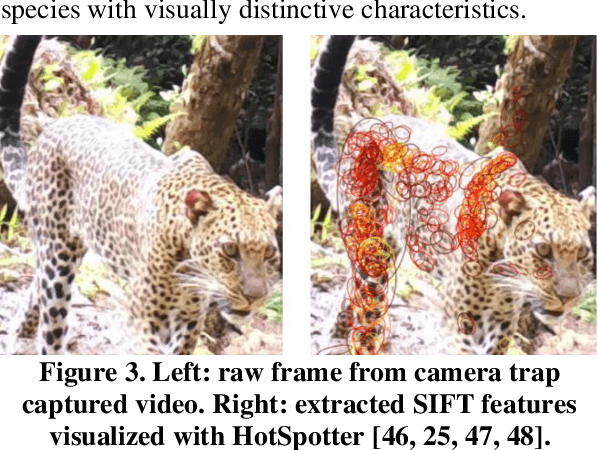

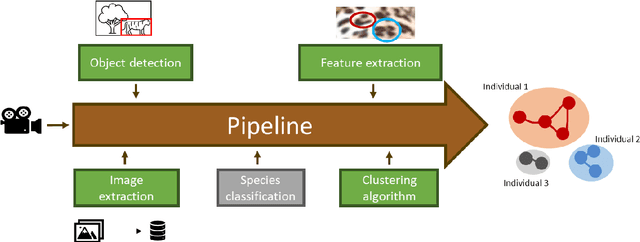

Abstract: The manual processing and analysis of videos from camera traps is time-consuming and includes several steps, ranging from the filtering of falsely triggered footage to identifying and re-identifying individuals. In this study, we developed a pipeline to automatically analyze videos from camera traps to identify individuals without requiring manual interaction. This pipeline applies to animal species with uniquely identifiable fur patterns and solitary behavior, such as leopards (Panthera pardus). We assumed that the same individual was seen throughout one triggered video sequence. With this assumption, multiple images could be assigned to an individual for the initial database filling without pre-labeling. The pipeline was based on well-established components from computer vision and deep learning, particularly convolutional neural networks (CNNs) and scale-invariant feature transform (SIFT) features. We augmented this basis by implementing additional components to substitute for otherwise required human interactions. Based on the similarity between frames from the video material, clusters were formed that represented individuals, bypassing the open set problem of the unknown total population. The pipeline was tested on a dataset of leopard videos collected by the Pan African Programme: The Cultured Chimpanzee (PanAf) and achieved a success rate of over 83% for correct matches between previously unknown individuals. The proposed pipeline can become a valuable tool for future conservation projects based on camera trap data, reducing the work of manual analysis for individual identification when labeled data is unavailable.
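The idea of forming clusters without knowing the population size in advance can be sketched as follows. This is an illustration under stated assumptions, not the pipeline from the paper: each video sequence is treated as one unit (following the one-individual-per-sequence assumption), the `similarity` matrix and `threshold` are hypothetical inputs, and connected components via union-find stand in for whatever clustering rule the authors used.

```python
def cluster_sequences(similarity, threshold):
    """Group sequence indices into clusters via connected components.

    `similarity[i][j]` is a hypothetical symmetric match score between video
    sequences i and j (for example, a count of matched local features).
    Sequences whose score reaches `threshold` are linked; every connected
    group is treated as one individual, so the number of individuals is an
    output rather than an input.
    """
    n = len(similarity)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if similarity[i][j] >= threshold:
                parent[find(i)] = find(j)

    clusters = {}
    for i in range(n):
        clusters.setdefault(find(i), []).append(i)
    return sorted(clusters.values())


# Four sequences; only sequences 0 and 2 match strongly.
similarity = [
    [0, 3, 42, 1],
    [3, 0, 2, 5],
    [42, 2, 0, 4],
    [1, 5, 4, 0],
]
individuals = cluster_sequences(similarity, threshold=20)
# individuals == [[0, 2], [1], [3]]
```

A sequence that matches nothing simply becomes its own cluster, which is how a threshold-based scheme like this can absorb previously unseen individuals.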

Challenges of the Creation of a Dataset for Vision Based Human Hand Action Recognition in Industrial Assembly

Mar 07, 2023

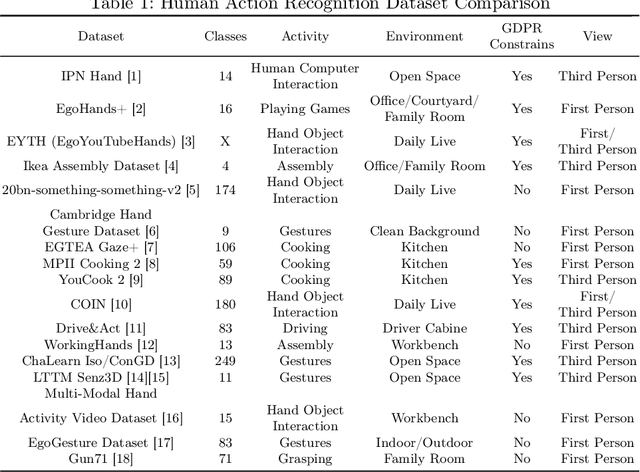

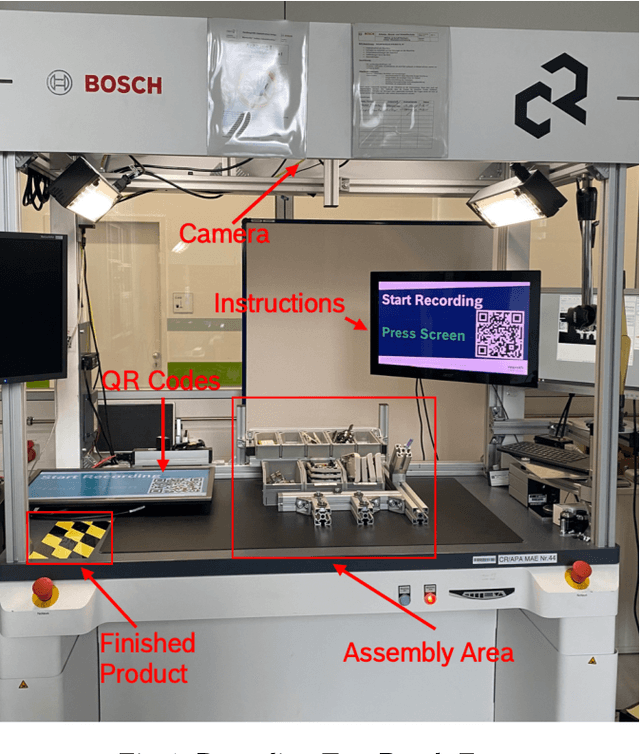

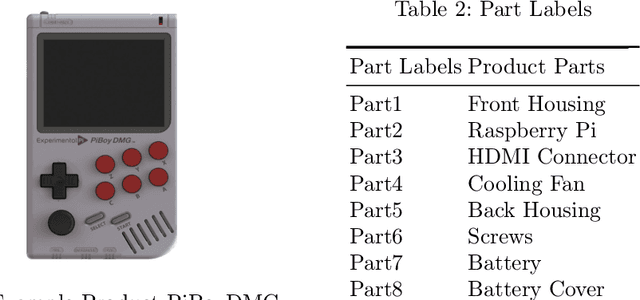

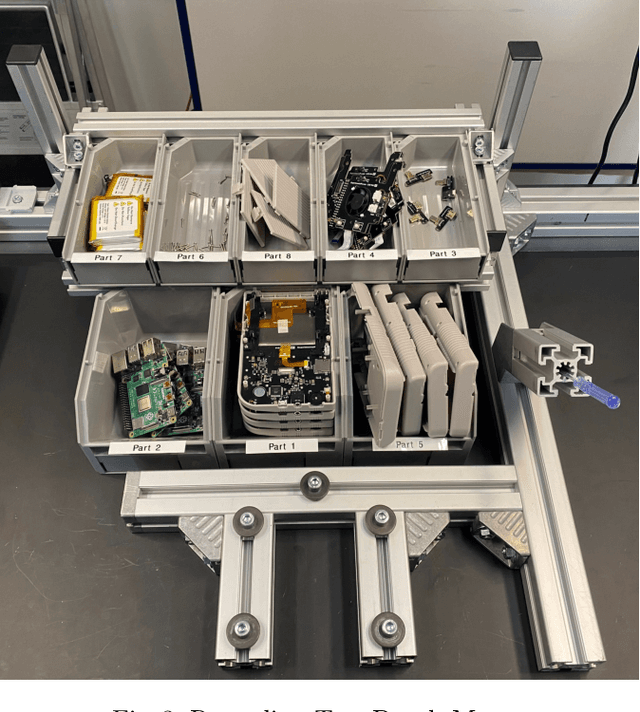

Abstract: This work presents the Industrial Hand Action Dataset V1, an industrial assembly dataset consisting of 12 classes with 459,180 images in the basic version and 2,295,900 images after spatial augmentation. Compared to other freely available datasets tested, it has an above-average duration and, in addition, meets the technical and legal requirements for industrial assembly lines. Furthermore, the dataset contains occlusions, hand-object interaction, and various fine-grained human hand actions for industrial assembly tasks that were not found in combination in the examined datasets. The recorded ground truth assembly classes were selected after extensive observation of real-world use cases. A Gated Transformer Network, a state-of-the-art model from the transformer domain, was adapted. With 18,269,959 trainable parameters, it reached a test accuracy of 86.25% before hyperparameter tuning, showing that it is possible to train sequential deep learning models with this dataset.
