Ammie K. Kalan

Automatic Individual Identification of Patterned Solitary Species Based on Unlabeled Video Data

Apr 19, 2023

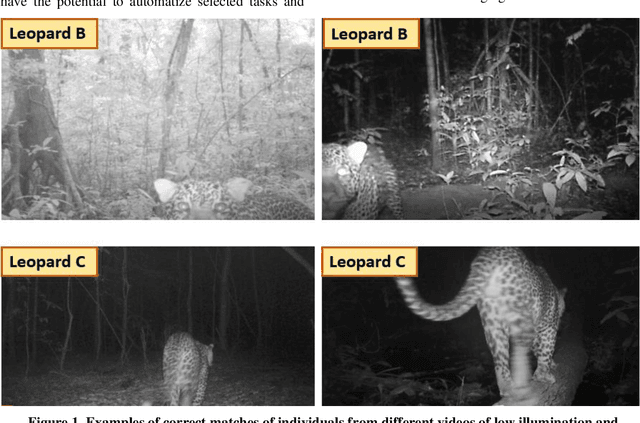

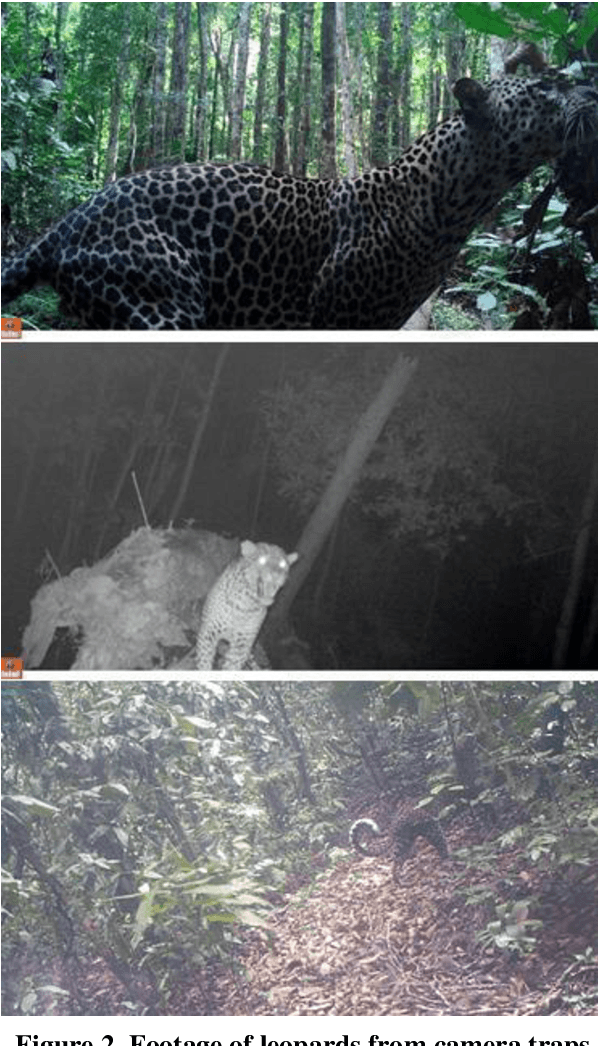

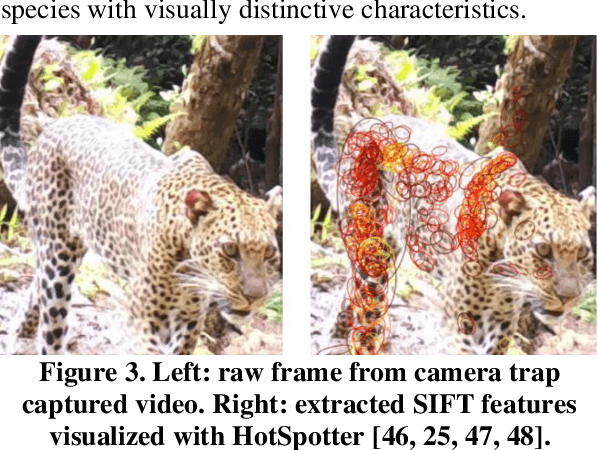

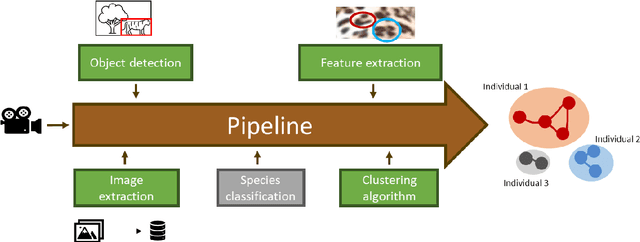

Abstract: The manual processing and analysis of videos from camera traps is time-consuming and includes several steps, ranging from filtering falsely triggered footage to identifying and re-identifying individuals. In this study, we developed a pipeline to automatically analyze videos from camera traps to identify individuals without requiring manual interaction. This pipeline applies to animal species with uniquely identifiable fur patterns and solitary behavior, such as leopards (Panthera pardus). We assumed that the same individual was seen throughout one triggered video sequence. With this assumption, multiple images could be assigned to an individual for the initial database filling without pre-labeling. The pipeline was based on well-established components from computer vision and deep learning, particularly convolutional neural networks (CNNs) and scale-invariant feature transform (SIFT) features. We augmented this basis by implementing additional components to replace the human interactions that would otherwise be required. Based on the similarity between frames from the video material, clusters were formed that represented individuals, bypassing the open-set problem of the unknown total population. The pipeline was tested on a dataset of leopard videos collected by the Pan African Programme: The Cultured Chimpanzee (PanAf) and achieved a success rate of over 83% for correct matches between previously unknown individuals. The proposed pipeline can become a valuable tool for future conservation projects based on camera trap data, reducing the work of manual analysis for individual identification when labeled data is unavailable.
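The clustering idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes each video sequence has already been reduced to one hypothetical descriptor vector, and it uses cosine similarity with an arbitrary threshold as a stand-in for the SIFT-based frame similarity the abstract mentions. Videos that are similar enough are linked, and each connected group is treated as one individual, so the number of individuals does not have to be known in advance.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sqrt(sum(x * x for x in a))
    nb = sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def cluster_videos(descriptors, threshold=0.9):
    """Group video indices whose descriptors are linked by similarity.

    `descriptors` and `threshold` are illustrative assumptions, not
    values taken from the paper. Clusters are the connected components
    of the graph with an edge wherever similarity >= threshold.
    """
    parent = list(range(len(descriptors)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(descriptors)):
        for j in range(i + 1, len(descriptors)):
            if cosine(descriptors[i], descriptors[j]) >= threshold:
                parent[find(j)] = find(i)

    clusters = {}
    for i in range(len(descriptors)):
        clusters.setdefault(find(i), []).append(i)
    return sorted(clusters.values())


# Four made-up video descriptors: videos 0 and 1 look alike, as do 2 and 3.
videos = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0], [0.02, 1.0]]
print(cluster_videos(videos))
```

Because a new video either joins an existing group or starts its own, this kind of grouping accommodates previously unseen individuals, which is the property the abstract refers to as bypassing the open-set problem.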

Compensating class imbalance for acoustic chimpanzee detection with convolutional recurrent neural networks

May 26, 2021

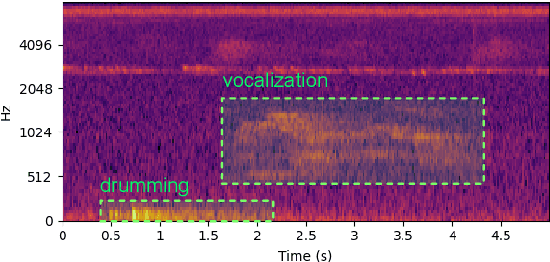

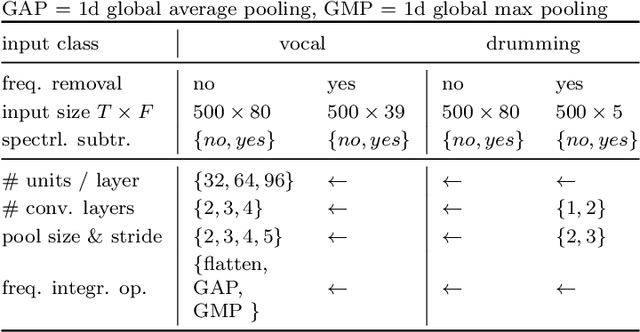

Abstract: Automatic detection systems are important in passive acoustic monitoring (PAM), as PAM systems record large amounts of audio data that are infeasible for humans to evaluate manually. In this paper we evaluated methods for compensating class imbalance for deep-learning-based automatic detection of acoustic chimpanzee calls. Chimpanzee calls are very rare in natural habitats, i.e. databases feature a heavy imbalance between background and target calls. Such imbalances can have negative effects on classifier performance. We employed a state-of-the-art detection approach based on convolutional recurrent neural networks (CRNNs). We extended the detection pipeline through various stages for compensating class imbalance. These included (1) spectrogram denoising, (2) alternative loss functions, and (3) resampling. Our key findings are: (1) spectrogram denoising operations significantly improved performance for both target classes, (2) standard binary cross entropy reached the highest performance, and (3) manipulating relative class imbalance through resampling either decreased or maintained performance depending on the target class. Finally, we reached detection performances of 33% for drumming and 5% for vocalization, which is a more than 7-fold increase compared to previously published results. We conclude that supporting the network in learning to decouple noise conditions from foreground classes is of primary importance for increasing performance.
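To make the "alternative loss functions" stage concrete, here is a minimal sketch of one common option, a positively weighted binary cross entropy. The abstract does not say which alternatives were evaluated, so this particular loss and the inverse-frequency weighting are assumptions for illustration only. With `pos_weight=1.0` the function reduces to standard binary cross entropy, which the abstract reports as the best-performing loss.

```python
from math import log


def binary_cross_entropy(y_true, y_prob, pos_weight=1.0, eps=1e-7):
    """Mean binary cross entropy with an optional weight on positives.

    `pos_weight` is an illustrative parameter; 1.0 gives the standard loss.
    """
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(pos_weight * y * log(p) + (1 - y) * log(1.0 - p))
    return total / len(y_true)


def inverse_frequency_weight(y_true):
    """Ratio of negative to positive labels (1.0 if there are no positives)."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    return neg / pos if pos else 1.0


# Made-up frame labels: three background frames, one call frame.
labels = [0, 0, 0, 1]
probs = [0.1, 0.1, 0.1, 0.5]

weight = inverse_frequency_weight(labels)
print("standard BCE:", binary_cross_entropy(labels, probs))
print("weighted BCE:", binary_cross_entropy(labels, probs, pos_weight=weight))
```

The weighted variant penalizes a missed call more heavily than a false alarm, which is the usual motivation for such losses on rare-event data.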
