Franz Anders

Investigation of the Assessment of Infant Vocalizations by Laypersons

Aug 20, 2021

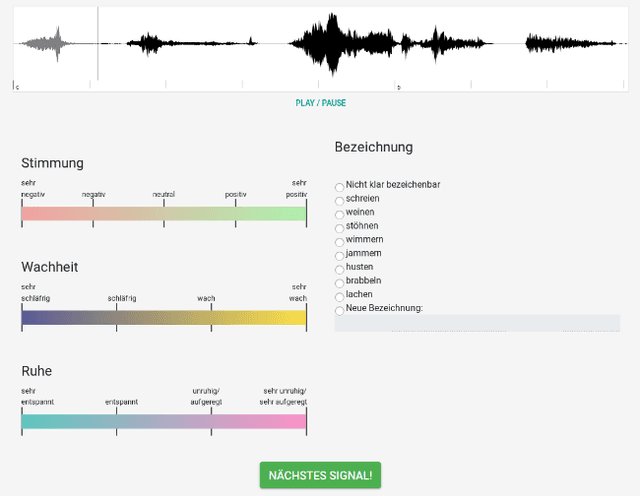

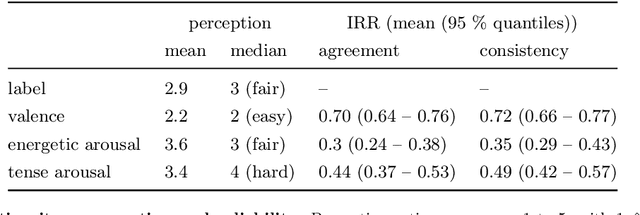

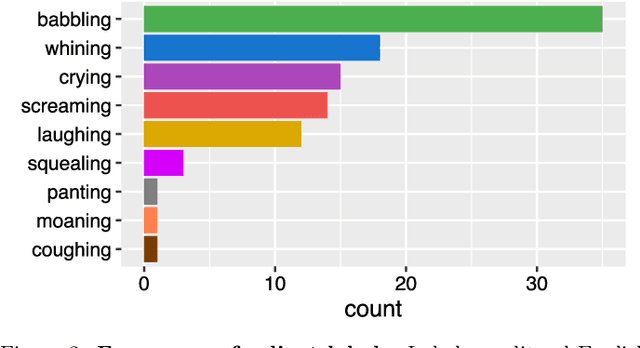

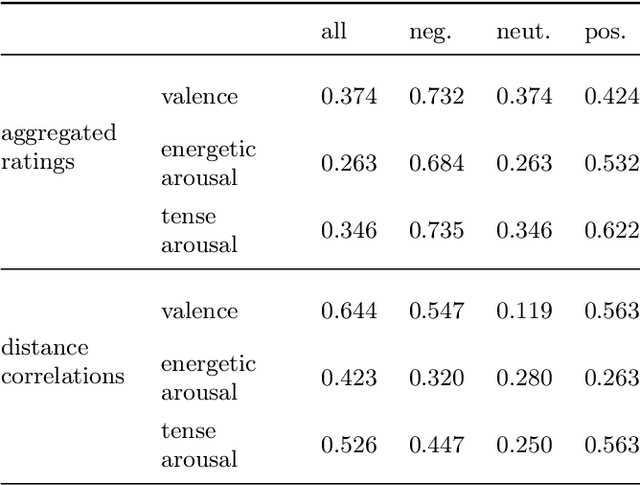

Abstract: The goal of this investigation was to study how laypersons assess acoustic infant vocalizations. More specifically, we aimed to identify (1) the set of most salient classes for infant vocalizations, (2) their relationships to each other and to affective ratings, and (3) classification schemes based on these labels and relationships. The assessment behavior of laypersons has not yet been investigated, as current infant vocalization classification schemes have been aimed at professional and scientific applications. The study methodology was based on the Nijmegen protocol: participants rated vocalization recordings with respect to acoustic class labels and the continuous affective scales valence, tense arousal, and energetic arousal. From the participant ratings we determined consensus stimulus ratings as well as stimulus similarities. Our main findings are: (1) we identified 9 salient labels, (2) valence shows the strongest overall association with label ratings, (3) there is a strong association between label and valence ratings in the negative valence space, but a low association for neutral labels, and (4) stimulus separability is highest when labels are grouped into 3 to 5 classes. Finally, we propose two classification schemes based on these findings.

Compensating class imbalance for acoustic chimpanzee detection with convolutional recurrent neural networks

May 26, 2021

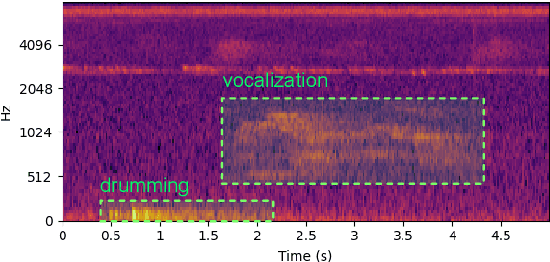

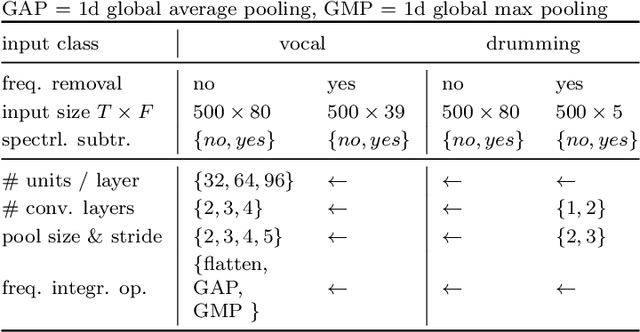

Abstract: Automatic detection is important in passive acoustic monitoring (PAM) systems, as these record amounts of audio data too large for humans to evaluate manually. In this paper we evaluated methods for compensating class imbalance in deep-learning-based automatic detection of acoustic chimpanzee calls. Chimpanzee calls are very rare in natural habitats, i.e. databases feature a heavy imbalance between background and target calls. Such imbalances can have negative effects on classifier performance. We employed a state-of-the-art detection approach based on convolutional recurrent neural networks (CRNNs) and extended the detection pipeline with several stages for compensating class imbalance: (1) spectrogram denoising, (2) alternative loss functions, and (3) resampling. Our key findings are: (1) spectrogram denoising operations significantly improved performance for both target classes, (2) standard binary cross entropy reached the highest performance, and (3) manipulating relative class imbalance through resampling either decreased or maintained performance, depending on the target class. Finally, we reached detection performances of 33% for drumming and 5% for vocalization, which is a more than 7-fold increase compared to previously published results. We conclude that helping the network learn to decouple noise conditions from foreground classes is of primary importance for increasing performance.
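To make two of the compensation stages named in the abstract concrete, the following is a minimal sketch of a class-weighted binary cross entropy (one possible "alternative loss function") and random oversampling of the rare class (one possible form of "resampling"). It is not the paper's implementation: the function names `weighted_bce` and `oversample_minority`, the `pos_weight` and `target_ratio` parameters, and the toy labels are all assumptions chosen for illustration. The abstract reports that standard binary cross entropy (here `pos_weight=1.0`) performed best, so the weighted variant is shown only for comparison.

```python
import math
import random


def weighted_bce(y_true, y_prob, pos_weight=1.0, eps=1e-7):
    """Mean binary cross entropy over paired labels and probabilities.

    pos_weight > 1 up-weights errors on the rare positive class;
    pos_weight == 1 gives standard binary cross entropy.
    """
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def oversample_minority(labels, target_ratio, seed=0):
    """Return shuffled sample indices with positives repeated.

    Positives are drawn with replacement until the ratio
    positives / negatives reaches at least target_ratio.
    """
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    if not pos or not neg:
        return list(range(len(labels)))  # nothing to rebalance
    needed = max(0, math.ceil(target_ratio * len(neg)) - len(pos))
    extra = [rng.choice(pos) for _ in range(needed)]
    indices = pos + neg + extra
    rng.shuffle(indices)
    return indices


# Toy data (hypothetical): 1 target segment among 9 background segments.
labels = [1] + [0] * 9
indices = oversample_minority(labels, target_ratio=0.5)
standard_loss = weighted_bce([1, 0], [0.5, 0.5])
weighted_loss = weighted_bce([1, 0], [0.5, 0.5], pos_weight=2.0)
```

In this sketch, oversampling changes only which training examples are drawn, while the weighted loss changes how strongly each error counts; the abstract's findings suggest that neither kind of rebalancing is guaranteed to help, which is why such stages are best evaluated per target class.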
