Immanuel Weber

BlessemFlood21: Advancing Flood Analysis with a High-Resolution Georeferenced Dataset for Humanitarian Aid Support

Jul 06, 2024

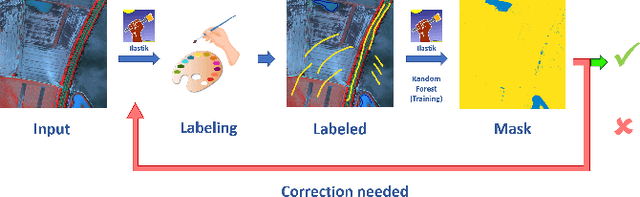

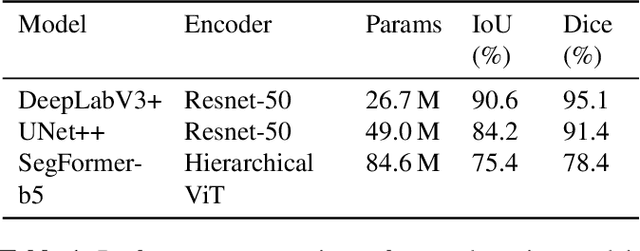

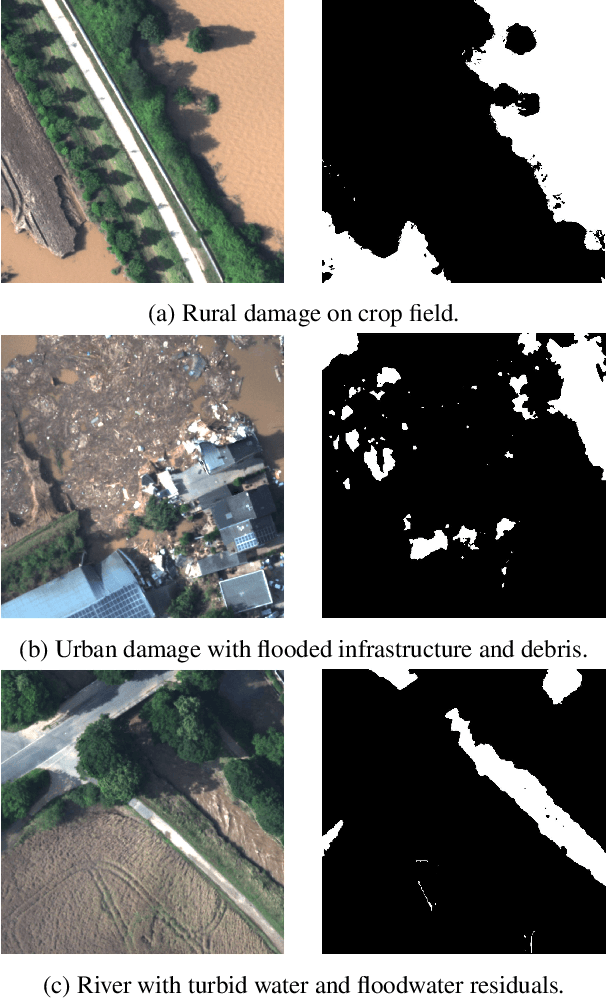

Abstract: Floods are an increasingly common global threat, causing emergencies and severe damage to infrastructure. During crises, organisations such as the World Food Programme use remotely sensed imagery, typically obtained through drones, for rapid situational analysis to plan life-saving actions. Computer Vision tools are needed to support task force experts on-site in the evaluation of the imagery to improve their efficiency and to allocate resources strategically. We introduce the BlessemFlood21 dataset to stimulate research on efficient flood detection tools. The imagery was acquired during the 2021 Erftstadt-Blessem flooding event and consists of high-resolution and georeferenced RGB-NIR images. In the resulting RGB dataset, the images are supplemented with detailed water masks, obtained via a semi-supervised human-in-the-loop technique, where in particular the NIR information is leveraged to classify pixels as either water or non-water. We evaluate our dataset by training and testing established Deep Learning models for semantic segmentation. With BlessemFlood21 we provide labeled high-resolution RGB data and a baseline for further development of algorithmic solutions tailored to flood detection in RGB imagery.
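As a rough illustration of how NIR information can separate water from non-water pixels, the sketch below thresholds the Normalized Difference Water Index (NDWI), which compares green and NIR reflectance. This is a simple stand-in, not the semi-supervised human-in-the-loop procedure used to label BlessemFlood21; the function names, the toy reflectance values, and the threshold of 0.0 are assumptions chosen for illustration.

```python
# Illustrative sketch only: NDWI thresholding, NOT the dataset's labeling method.
# All names and values here are hypothetical.

def ndwi(green, nir, eps=1e-9):
    """NDWI = (green - nir) / (green + nir); eps avoids division by zero."""
    return (green - nir) / (green + nir + eps)


def water_mask(green_band, nir_band, threshold=0.0):
    """Return a 2D list of booleans: True where NDWI exceeds the threshold.

    Water absorbs NIR strongly, so water pixels tend to have green > nir
    and therefore a positive NDWI.
    """
    return [
        [ndwi(g, n) > threshold for g, n in zip(green_row, nir_row)]
        for green_row, nir_row in zip(green_band, nir_band)
    ]


# Toy 2x2 reflectance tiles (assumed values in [0, 1]).
green = [[0.30, 0.10],
         [0.25, 0.12]]
nir = [[0.05, 0.40],
       [0.04, 0.45]]

mask = water_mask(green, nir)
print(mask)
```

In practice the threshold would need tuning per scene, and a thresholded index is best treated as an initial proposal that a human annotator then corrects.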

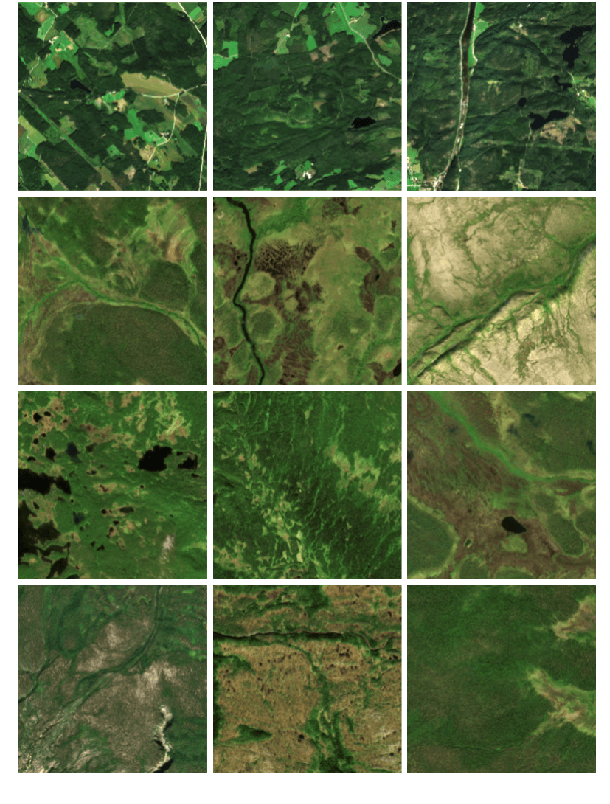

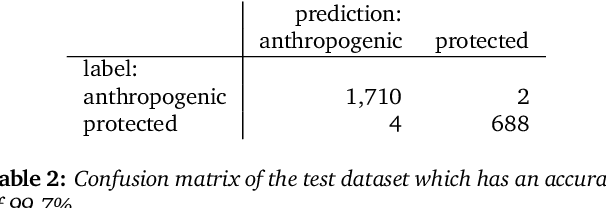

Exploring Wilderness Using Explainable Machine Learning in Satellite Imagery

Apr 11, 2022

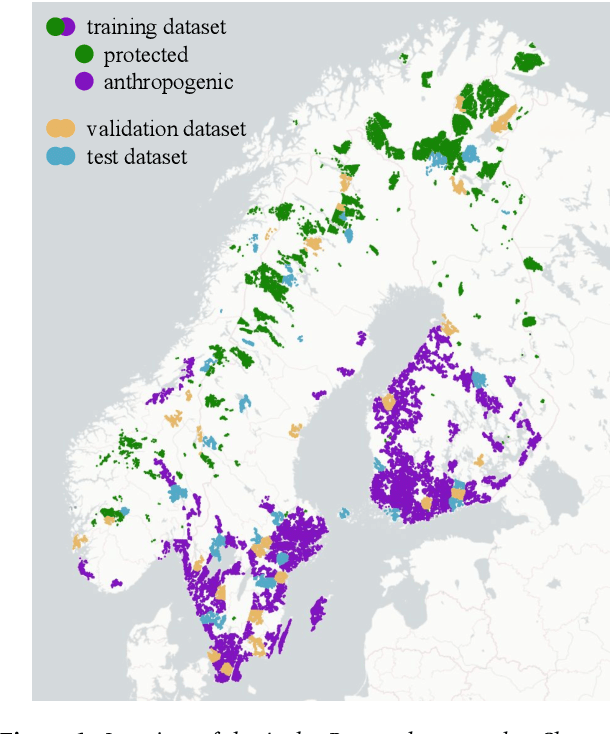

Abstract: Wilderness areas offer important ecological and social benefits and therefore warrant monitoring and preservation. Yet, the characteristics of wilderness are little known, making the detection and monitoring of wilderness areas via remote sensing techniques a challenging task. We explore the appearance and characteristics of the vague concept of wilderness via multispectral satellite imagery. For this, we apply a novel explainable machine learning technique to a dataset consisting of wild and anthropogenic areas in Fennoscandia. With our technique, we predict continuous, detailed, and high-resolution sensitivity maps of unseen remote sensing data for wild and anthropogenic characteristics. Our neural network provides an interpretable activation space in which regions are semantically arranged regarding these characteristics and certain land cover classes. Interpretability increases confidence in the method and allows for new explanations of the investigated concept. Our model advances explainable machine learning for remote sensing, offers opportunities for comprehensive analyses of existing wilderness, and has practical relevance for conservation efforts.

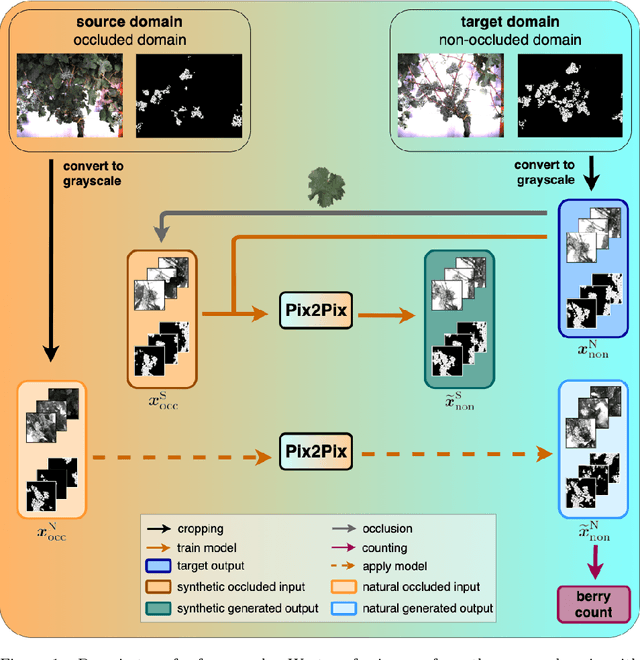

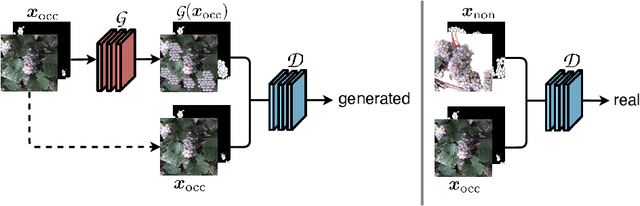

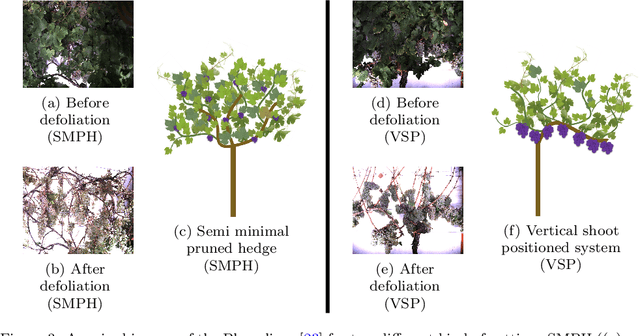

Behind the leaves -- Estimation of occluded grapevine berries with conditional generative adversarial networks

May 21, 2021

Abstract: The need for accurate yield estimates for viticulture is becoming more important due to increasing competition in the wine market worldwide. One of the most promising methods to estimate the harvest is berry counting, as it can be approached non-destructively, and its process can be automated. In this article, we present a method that addresses the challenge of berries occluded by leaves to obtain a more accurate estimate of the number of berries, which in turn enables a better estimate of the harvest. We use generative adversarial networks, a deep learning-based approach that generates a likely scenario behind the leaves by exploiting learned patterns from images with non-occluded berries. Our experiments show that the estimate of the number of berries after applying our method is closer to the manually counted reference. In contrast to applying a factor to the berry count, our approach better adapts to local conditions by directly involving the appearance of the visible berries. Furthermore, we show that our approach can identify which areas in the image should be changed by adding new berries without explicitly requiring information about hidden areas.

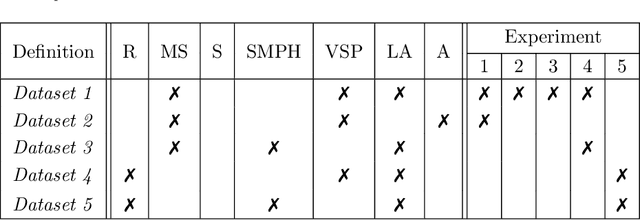

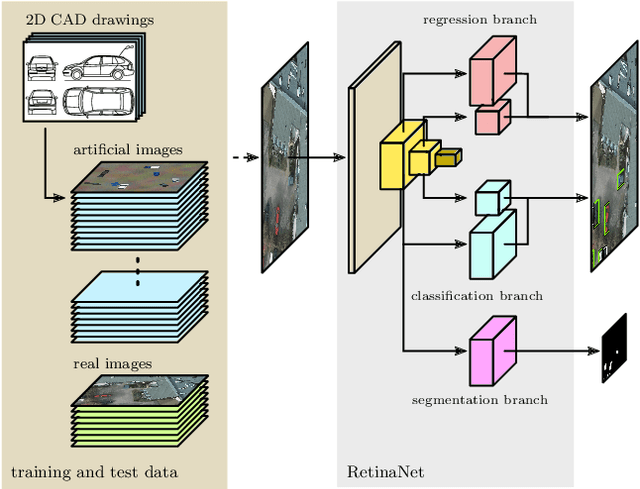

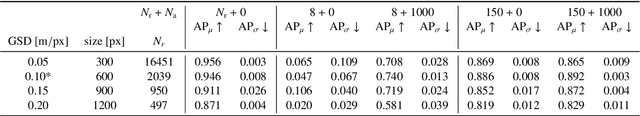

Artificial and beneficial -- Exploiting artificial images for aerial vehicle detection

Apr 07, 2021

Abstract: Object detection in aerial images is an important task in environmental, economic, and infrastructure-related applications. One of the most prominent applications is the detection of vehicles, for which deep learning approaches are increasingly used. A major challenge in such approaches is the limited amount of data that arises, for example, when more specialized and rarer vehicles such as agricultural machinery or construction vehicles are to be detected. This lack of data contrasts with the enormous data hunger of deep learning methods in general and object recognition in particular. In this article, we address this issue in the context of the detection of road vehicles in aerial images. To overcome the lack of annotated data, we propose a generative approach that generates top-down images by overlaying artificial vehicles created from 2D CAD drawings on artificial or real backgrounds. Our experiments with a modified RetinaNet object detection network show that adding these images to small real-world datasets significantly improves detection performance. In cases of very limited or even no real-world images, we observe an improvement in average precision of up to 0.70 points. We address the remaining performance gap to real-world datasets by analyzing the effect of the image composition of background and objects and give insights into the importance of background.

* 14 pages, 13 figures, 4 tables
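The overlay idea described in the abstract above, placing an artificial vehicle on a background and obtaining its annotation for free, can be sketched in a few lines. This is a minimal toy under stated assumptions, not the authors' pipeline: images are plain 2D lists of grey values, the vehicle "sprite" and its binary mask are invented by hand rather than rendered from CAD drawings, and the function name `paste_vehicle` is hypothetical.

```python
# Illustrative sketch only: compositing a masked sprite onto a background
# and deriving the bounding-box label. All names and values are hypothetical.

def paste_vehicle(background, sprite, mask, top, left):
    """Copy sprite pixels onto a copy of background where mask is truthy.

    Returns the composited image and the bounding box as
    (x_min, y_min, x_max, y_max), with x_max and y_max exclusive.
    """
    height, width = len(sprite), len(sprite[0])
    if top < 0 or left < 0:
        raise ValueError("sprite position must be non-negative")
    if top + height > len(background) or left + width > len(background[0]):
        raise ValueError("sprite does not fit inside the background")

    composite = [row[:] for row in background]  # leave the input untouched
    for r in range(height):
        for c in range(width):
            if mask[r][c]:
                composite[top + r][left + c] = sprite[r][c]
    return composite, (left, top, left + width, top + height)


background = [[0] * 4 for _ in range(4)]  # 4x4 empty "road" tile
sprite = [[9, 9],
          [9, 9]]                         # 2x2 "vehicle"
mask = [[1, 0],
        [1, 1]]                           # 0 = transparent pixel

image, bbox = paste_vehicle(background, sprite, mask, top=1, left=2)
print(image)
print(bbox)
```

Because the position of the pasted object is known, the bounding-box label comes directly from the compositing step, which is what makes this kind of synthetic data cheap to annotate.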
