Andreas Brandstätter

Multi-Agent Spatial Predictive Control with Application to Drone Flocking (Extended Version)

Mar 31, 2022

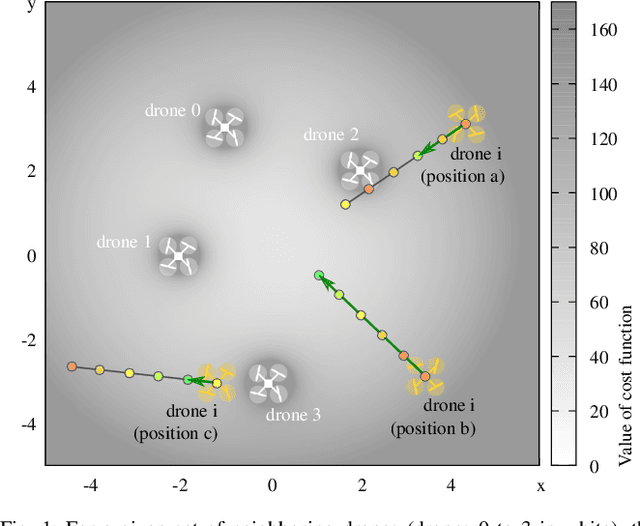

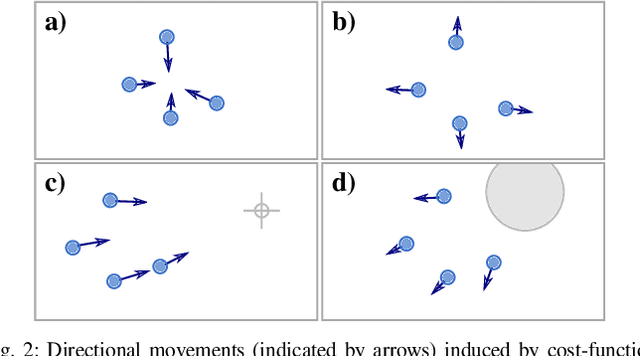

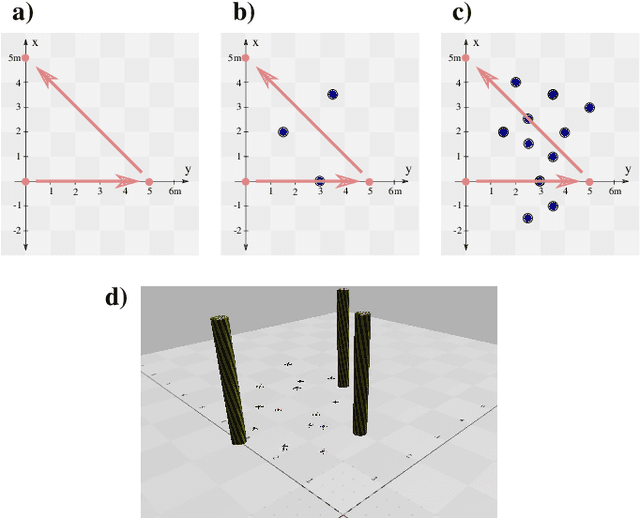

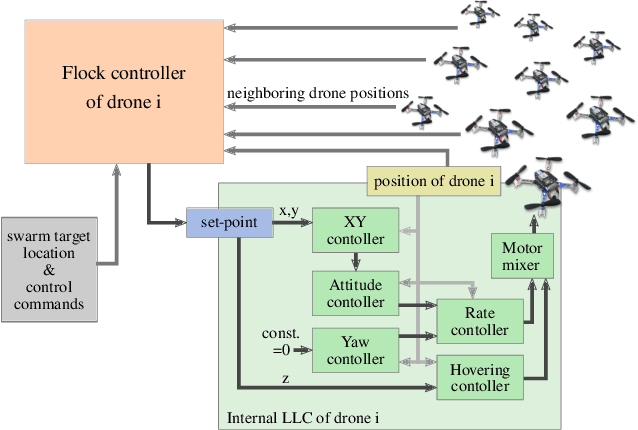

Abstract: We introduce the novel concept of Spatial Predictive Control (SPC) to solve the following problem: given a collection of agents (e.g., drones) with positional low-level controllers (LLCs) and a mission-specific distributed cost function, how can a distributed controller achieve and maintain cost-function minimization without a plant model and only positional observations of the environment? Our fully distributed SPC controller is based strictly on the position of the agent itself and on those of its neighboring agents. This information is used in every time-step to compute the gradient of the cost function and to perform a spatial look-ahead to predict the best next target position for the LLC. Using a high-fidelity simulation environment, we show that SPC outperforms the most closely related class of controllers, Potential Field Controllers, on the drone flocking problem. We also show that SPC is able to cope with a potential sim-to-real transfer gap by demonstrating its performance on real hardware, namely our implementation of flocking using nine Crazyflie 2.1 drones.
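The per-time-step loop described in the abstract (compute the cost gradient from positions only, then look ahead in space to pick the next target for the LLC) can be illustrated with a minimal sketch. This is not the authors' implementation: the cost function, the reference distance `d_ref`, the look-ahead range `max_step`, and the candidate count `n_candidates` are all hypothetical choices made for illustration.

```python
import math

def cost(pos, neighbors, d_ref=1.0):
    """Hypothetical flocking cost: squared deviation of each
    neighbor distance from a reference distance d_ref."""
    return sum((math.dist(pos, n) - d_ref) ** 2 for n in neighbors)

def numerical_gradient(pos, neighbors, eps=1e-5):
    """Central-difference gradient of the cost w.r.t. the agent's own position."""
    grad = []
    for i in range(len(pos)):
        hi, lo = list(pos), list(pos)
        hi[i] += eps
        lo[i] -= eps
        grad.append((cost(hi, neighbors) - cost(lo, neighbors)) / (2 * eps))
    return grad

def next_target(pos, neighbors, max_step=0.5, n_candidates=10):
    """Evaluate candidate positions along the negative gradient direction
    (the assumed 'spatial look-ahead') and return the lowest-cost one."""
    grad = numerical_gradient(pos, neighbors)
    norm = math.hypot(*grad)
    if norm < 1e-9:
        return list(pos)  # already at a stationary point of the cost
    direction = [-g / norm for g in grad]
    best, best_cost = list(pos), cost(pos, neighbors)
    for k in range(1, n_candidates + 1):
        step = max_step * k / n_candidates
        cand = [p + step * d for p, d in zip(pos, direction)]
        c = cost(cand, neighbors)
        if c < best_cost:
            best, best_cost = cand, c
    return best

# One agent at the origin with a single neighbor 3 units away.
target = next_target([0.0, 0.0], [[3.0, 0.0]])
```

In this sketch only positions enter the computation, matching the abstract's claim that no plant model is needed; the returned `target` would be handed to the positional low-level controller as its next setpoint.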

Model-based versus Model-free Deep Reinforcement Learning for Autonomous Racing Cars

Mar 08, 2021

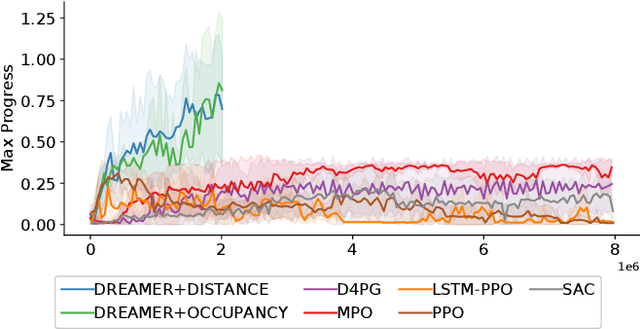

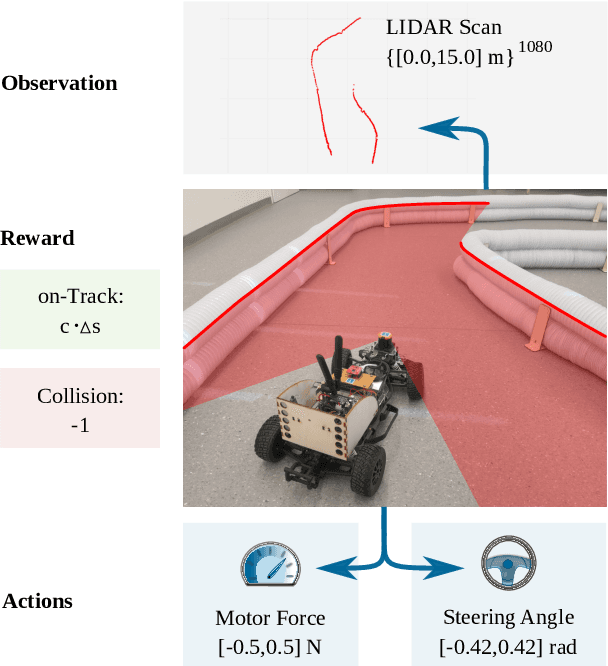

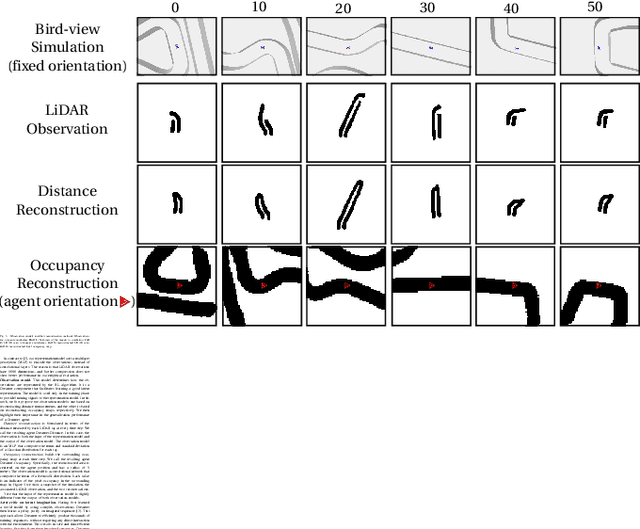

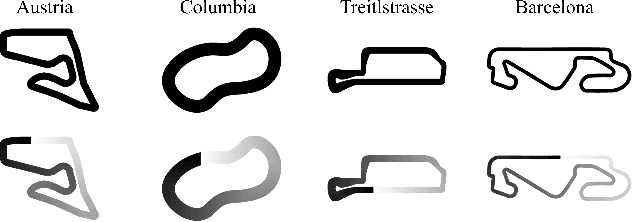

Abstract: Despite the rich theoretical foundation of model-based deep reinforcement learning (RL) agents, their effectiveness in real-world robotics applications is less studied and understood. In this paper, we therefore investigate how such agents generalize to real-world autonomous-vehicle control tasks, where advanced model-free deep RL algorithms fail. In particular, we set up a series of time-lap tasks for an F1TENTH racing robot, equipped with high-dimensional LiDAR sensors, on a set of test tracks with a gradual increase in their complexity. In this continuous-control setting, we show that model-based agents capable of learning in imagination substantially outperform model-free agents with respect to performance, sample efficiency, successful task completion, and generalization. Moreover, we show that the generalization ability of model-based agents strongly depends on the observation-model choice. Finally, we provide extensive empirical evidence for the effectiveness of model-based agents provided with long enough memory horizons in sim2real tasks.
