Amparo Alonso-Betanzos

University of A Coruña - Research Center on Information and Communication Technologies

A robust methodology for long-term sustainability evaluation of Machine Learning models

Nov 11, 2025

Abstract:Sustainability and efficiency have become essential considerations in the development and deployment of Artificial Intelligence systems, yet existing regulatory and reporting practices lack standardized, model-agnostic evaluation protocols. Current assessments often measure only short-term experimental resource usage and disproportionately emphasize batch learning settings, failing to reflect real-world, long-term AI lifecycles. In this work, we propose a comprehensive evaluation protocol for assessing the long-term sustainability of ML models, applicable to both batch and streaming learning scenarios. Through experiments on diverse classification tasks using a range of model types, we demonstrate that traditional static train-test evaluations do not reliably capture sustainability under evolving data and repeated model updates. Our results show that long-term sustainability varies significantly across models, and in many cases, higher environmental cost yields little performance benefit.

Positive-Unlabelled Learning for Improving Image-based Recommender System Explainability

Jul 09, 2024

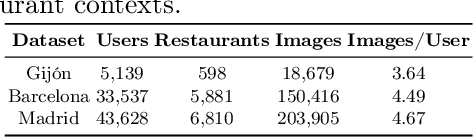

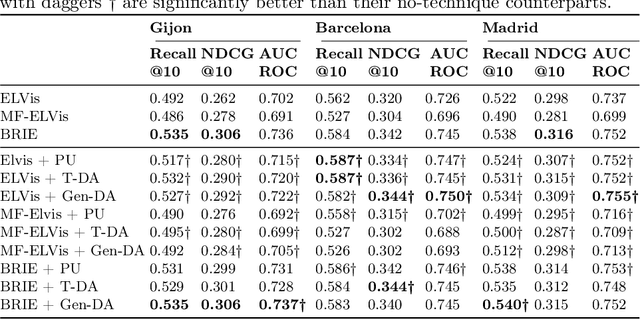

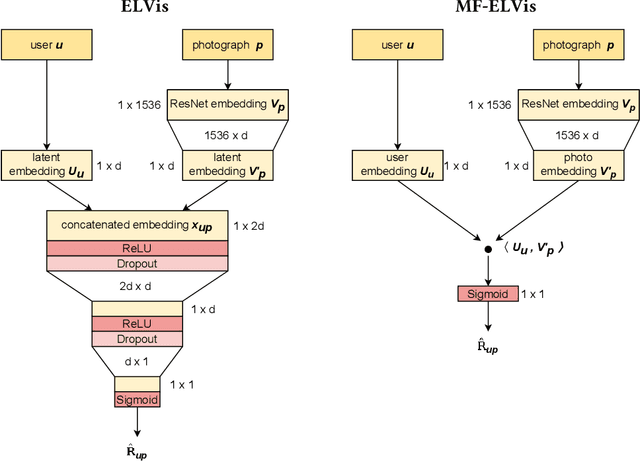

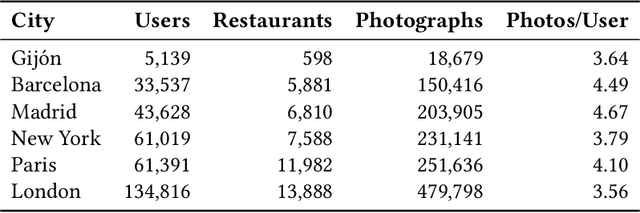

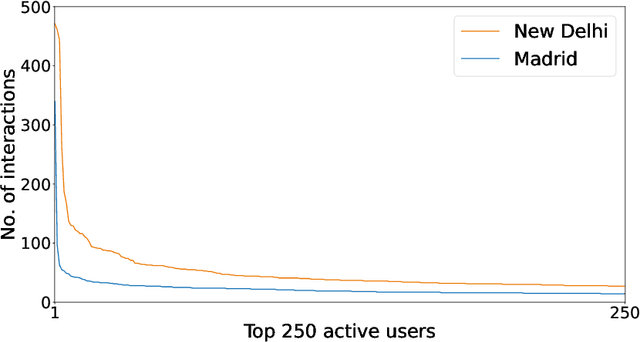

Abstract:Among the existing approaches for visual-based Recommender System (RS) explainability, utilizing user-uploaded item images as efficient, trustable explanations is a promising option. However, current models following this paradigm assume that, for any user, all images uploaded by other users can be considered negative training examples (i.e. bad explanatory images), an inadvertently naive labelling assumption that contradicts the rationale of the approach. This work proposes a new explainer training pipeline that leverages Positive-Unlabelled (PU) Learning techniques to train image-based explainers with refined subsets of reliable negative examples for each user, selected through a novel user-personalized, two-step, similarity-based PU Learning algorithm. Computational experiments show this PU-based approach outperforms the state-of-the-art non-PU method in six popular real-world datasets, proving that an improvement of visual-based RS explainability can be achieved by maximizing training data quality rather than increasing model complexity.

Positive-Unlabelled Learning for Identifying New Candidate Dietary Restriction-related Genes among Ageing-related Genes

Jun 14, 2024

Abstract:Dietary Restriction (DR) is one of the most popular anti-ageing interventions, prompting exhaustive research into genes associated with its mechanisms. Recently, Machine Learning (ML) has been explored to identify potential DR-related genes among ageing-related genes, aiming to minimize costly wet lab experiments needed to expand our knowledge on DR. However, to train a model from positive (DR-related) and negative (non-DR-related) examples, existing ML methods naively label genes without known DR relation as negative examples, assuming that lack of DR-related annotation for a gene represents evidence of absence of DR-relatedness, rather than absence of evidence; this hinders the reliability of the negative examples (non-DR-related genes) and the method's ability to identify novel DR-related genes. This work introduces a novel gene prioritization method based on the two-step Positive-Unlabelled (PU) Learning paradigm: using a similarity-based, KNN-inspired approach, our method first selects reliable negative examples among the genes without known DR associations. Then, these reliable negatives and all known positives are used to train a classifier that effectively differentiates DR-related and non-DR-related genes, which is finally employed to generate a more reliable ranking of promising genes for novel DR-relatedness. Our method significantly outperforms the existing state-of-the-art non-PU approach for DR-relatedness prediction in three relevant performance metrics. In addition, curation of existing literature finds support for the top-ranked candidate DR-related genes identified by our model.
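
The two-step PU Learning idea summarized in this abstract can be illustrated with a minimal sketch. This is not the authors' algorithm: the Euclidean distance, the values of `k` and `fraction`, the nearest-centroid classifier, and all function names are hypothetical choices made only to keep the example short and runnable.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def select_reliable_negatives(positives, unlabelled, k=2, fraction=0.5):
    """Step 1: keep the unlabelled points farthest from their k nearest positives."""
    scored = []
    for idx, u in enumerate(unlabelled):
        nearest = sorted(euclidean(u, p) for p in positives)[:k]
        scored.append((sum(nearest) / len(nearest), idx))
    scored.sort(reverse=True)  # largest mean distance first
    n_keep = max(1, int(len(unlabelled) * fraction))
    return [unlabelled[idx] for _, idx in scored[:n_keep]]

def centroid(points):
    n = len(points)
    return [sum(col) / n for col in zip(*points)]

def train_centroid_classifier(positives, negatives):
    """Step 2: a deliberately simple stand-in classifier (nearest centroid)."""
    c_pos, c_neg = centroid(positives), centroid(negatives)

    def score(x):
        # Higher score: closer to the positive centroid than to the negative one.
        return euclidean(x, c_neg) - euclidean(x, c_pos)

    return score

# Toy data (assumed): three known positives and four unlabelled examples.
positives = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.1]]
unlabelled = [[1.1, 1.0], [5.0, 5.0], [4.8, 5.2], [1.0, 0.8]]

reliable_negatives = select_reliable_negatives(positives, unlabelled)
score = train_centroid_classifier(positives, reliable_negatives)

# Rank unlabelled examples from most to least likely positive.
ranking = sorted(unlabelled, key=score, reverse=True)
```

In this toy setup the two unlabelled points far from every positive are kept as reliable negatives, and the remaining two rise to the top of the ranking as candidate positives.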

Beyond RMSE and MAE: Introducing EAUC to unmask hidden bias and unfairness in dyadic regression models

Jan 19, 2024

Abstract:Dyadic regression models, which predict real-valued outcomes for pairs of entities, are fundamental in many domains (e.g. predicting the rating of a user to a product in Recommender Systems) and promising and under exploration in many others (e.g. approximating the adequate dosage of a drug for a patient in personalized pharmacology). In this work, we demonstrate that non-uniformity in the observed value distributions of individual entities leads to severely biased predictions in state-of-the-art models, skewing predictions towards the average of observed past values for the entity and providing worse-than-random predictive power in eccentric yet equally important cases. We show that the usage of global error metrics like Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) is insufficient to capture this phenomenon, which we name eccentricity bias, and we introduce Eccentricity-Area Under the Curve (EAUC) as a new complementary metric that can quantify it in all studied models and datasets. We also prove the adequacy of EAUC by using naive de-biasing corrections to demonstrate that a lower model bias correlates with a lower EAUC and vice versa. This work contributes a bias-aware evaluation of dyadic regression models to avoid potential unfairness and risks in critical real-world applications of such systems.

Agent-Based Model: Simulating a Virus Expansion Based on the Acceptance of Containment Measures

Jul 28, 2023

Abstract:Compartmental epidemiological models categorize individuals based on their disease status, such as the SEIRD model (Susceptible-Exposed-Infected-Recovered-Dead). These models determine the parameters that influence the magnitude of an outbreak, such as contagion and recovery rates. However, they don't account for individual characteristics or population actions, which are crucial for assessing mitigation strategies like mask usage in COVID-19 or condom distribution in HIV. Additionally, studies highlight the role of citizen solidarity, interpersonal trust, and government credibility in explaining differences in contagion rates between countries. Agent-Based Modeling (ABM) offers a valuable approach to study complex systems by simulating individual components, their actions, and interactions within an environment. ABM provides a useful tool for analyzing social phenomena. In this paper, we propose an ABM architecture that allows us to analyze the evolution of virus infections in a society based on two components: 1) an adaptation of the SEIRD model and 2) a decision-making model for citizens. In this way, the evolution of infections is affected, in addition to the spread of the virus itself, by individual behavior when accepting or rejecting public health measures. We illustrate the designed model by examining the progression of SARS-CoV-2 infections in A Coruña, Spain. This approach makes it possible to analyze the effect of the individual actions of citizens during an epidemic on the spread of the virus.
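
As a rough illustration of how acceptance of containment measures can feed into SEIRD dynamics, the sketch below advances a population-level SEIRD model one day at a time. It is not the agent-based architecture of the paper: the `acceptance` and `reduction` parameters, the rate values, and the function names are all assumptions introduced for this example.

```python
def seird_step(state, beta, sigma, gamma, mu,
               acceptance=0.0, reduction=0.5, dt=1.0):
    """One forward-Euler step of a SEIRD model.

    acceptance: assumed share of citizens following containment measures (0..1).
    reduction:  assumed relative drop in contagion rate when measures are followed.
    """
    s, e, i, r, d = state
    n_alive = s + e + i + r
    effective_beta = beta * (1.0 - acceptance * reduction)

    new_exposed = effective_beta * s * i / n_alive * dt   # S -> E
    new_infected = sigma * e * dt                         # E -> I
    new_recovered = gamma * i * dt                        # I -> R
    new_dead = mu * i * dt                                # I -> D

    return (
        s - new_exposed,
        e + new_exposed - new_infected,
        i + new_infected - new_recovered - new_dead,
        r + new_recovered,
        d + new_dead,
    )

def simulate(state, days, **params):
    history = [state]
    for _ in range(days):
        state = seird_step(state, **params)
        history.append(state)
    return history

# Hypothetical population and rates, chosen only for illustration.
initial = (9990.0, 0.0, 10.0, 0.0, 0.0)
params = dict(beta=0.4, sigma=0.2, gamma=0.1, mu=0.01)

baseline = simulate(initial, 60, **params)
compliant = simulate(initial, 60, acceptance=1.0, **params)
```

Comparing the last element of `baseline` and `compliant` shows how a lower effective contagion rate changes the cumulative number of deaths over the same period. An agent-based version would replace the single `acceptance` value with per-citizen decisions.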

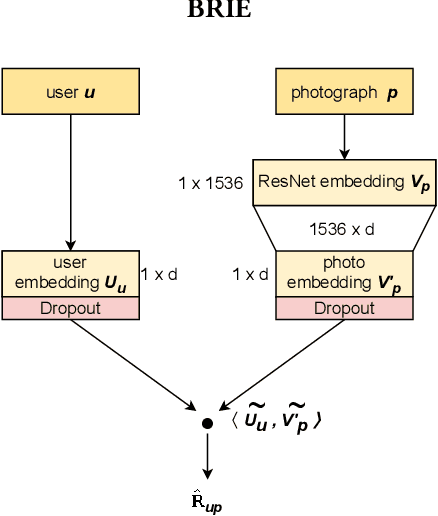

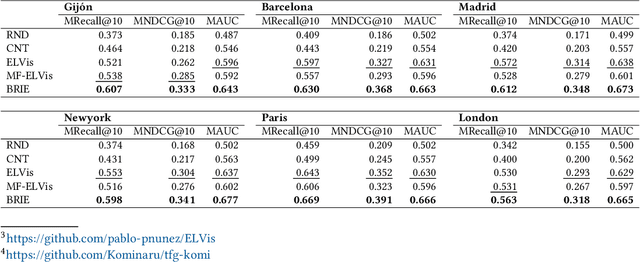

Sustainable Transparency in Recommender Systems: Bayesian Ranking of Images for Explainability

Jul 27, 2023

Abstract:Recommender Systems have become crucial in the modern world, commonly guiding users towards relevant content or products, and having a large influence over the decisions of users and citizens. However, ensuring transparency and user trust in these systems remains a challenge; personalized explanations have emerged as a solution, offering justifications for recommendations. Among the existing approaches for generating personalized explanations, using visual content created by the users is one particularly promising option, showing a potential to maximize transparency and user trust. Existing models for explaining recommendations in this context face limitations: sustainability has been a critical concern, as they often require substantial computational resources, leading to significant carbon emissions comparable to the Recommender Systems where they would be integrated. Moreover, most models employ surrogate learning goals that do not align with the objective of ranking the most effective personalized explanations for a given recommendation, leading to a suboptimal learning process and larger model sizes. To address these limitations, we present BRIE, a novel model designed to tackle the existing challenges by adopting a more adequate learning goal based on Bayesian Pairwise Ranking, enabling it to achieve consistently better performance than state-of-the-art models in six real-world datasets, while exhibiting remarkable efficiency, emitting up to 75% less CO$_2$ during training and inference with a model up to 64 times smaller than previous approaches.
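
The pairwise ranking objective mentioned in this abstract can be sketched generically as follows. This shows only the standard Bayesian pairwise ranking loss for one (user, preferred item, non-preferred item) triple; the dot-product scoring, the toy embeddings, and the function names are assumptions, and nothing here reproduces BRIE's actual architecture or training setup.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def bpr_loss(user_vec, pos_vec, neg_vec):
    """Pairwise ranking loss: -log(sigmoid(score_pos - score_neg)).

    Scores are assumed to be dot products between a user embedding and
    an image embedding.
    """
    diff = dot(user_vec, pos_vec) - dot(user_vec, neg_vec)
    # Numerically stable form of log(1 + exp(-diff)).
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# Hypothetical embeddings for one user and two candidate explanation images.
user = [0.5, 1.0]
good_image = [1.0, 1.0]
bad_image = [-1.0, 0.0]

loss_correct_order = bpr_loss(user, good_image, bad_image)
loss_wrong_order = bpr_loss(user, bad_image, good_image)
```

The loss is small when the preferred image already scores higher than the other one and grows when the order is inverted, so minimizing it directly optimizes the ranking rather than a surrogate classification target.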

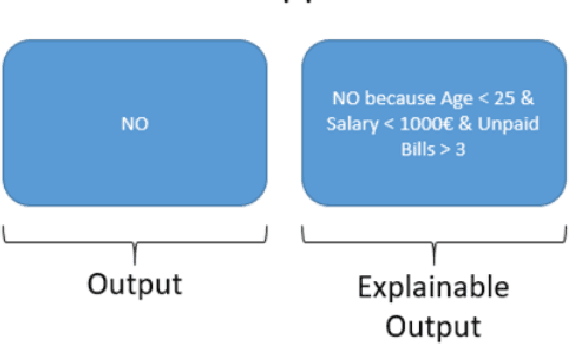

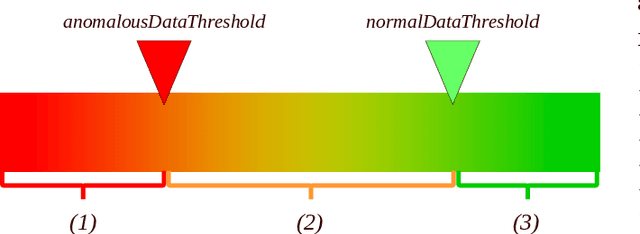

Explanation Method for Anomaly Detection on Mixed Numerical and Categorical Spaces

Sep 09, 2022

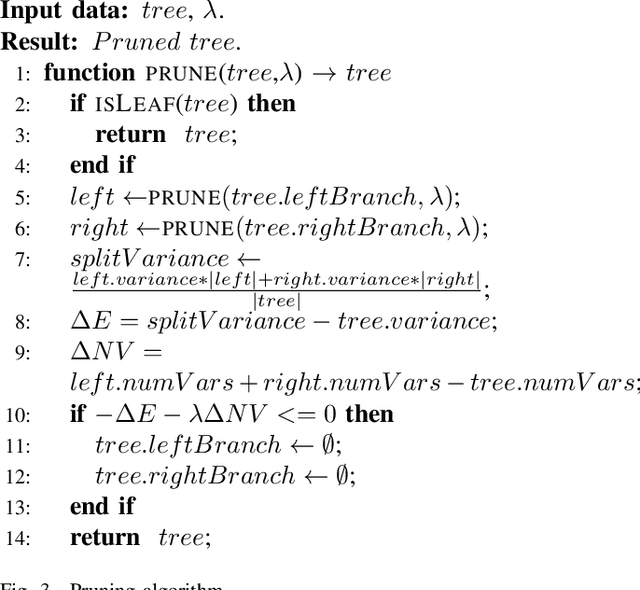

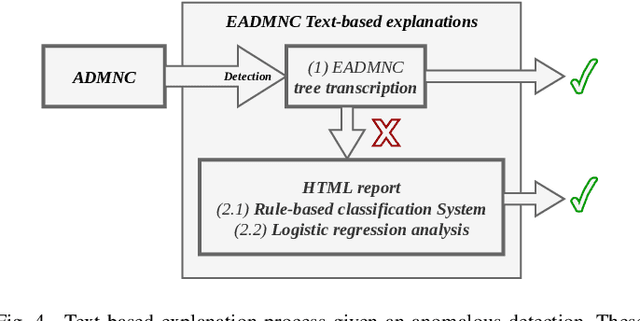

Abstract:Most proposals in the anomaly detection field focus exclusively on the detection stage, especially in the recent deep learning approaches. While providing highly accurate predictions, these models often lack transparency, acting as "black boxes". This criticism has grown to the point that explanation is now considered very relevant in terms of acceptability and reliability. In this paper, we address this issue by inspecting the ADMNC (Anomaly Detection on Mixed Numerical and Categorical Spaces) model, an existing, very accurate but opaque anomaly detector capable of operating with both numerical and categorical inputs. This work presents the extension EADMNC (Explainable Anomaly Detection on Mixed Numerical and Categorical spaces), which adds explainability to the predictions obtained with the original model. We preserved the scalability of the original method thanks to the Apache Spark framework. EADMNC leverages the formulation of the previous ADMNC model to offer pre hoc and post hoc explainability, while maintaining the accuracy of the original architecture. We present a pre hoc model that globally explains the outputs by segmenting input data into homogeneous groups, described with only a few variables. We designed a graphical representation based on regression trees, which supervisors can inspect to understand the differences between normal and anomalous data. Our post hoc explanations consist of a text-based template method that locally provides textual arguments supporting each detection. We report experimental results on extensive real-world data, particularly in the domain of network intrusion detection. The usefulness of the explanations is assessed by theory analysis using expert knowledge in the network intrusion domain.

Explain and Conquer: Personalised Text-based Reviews to Achieve Transparency

May 03, 2022

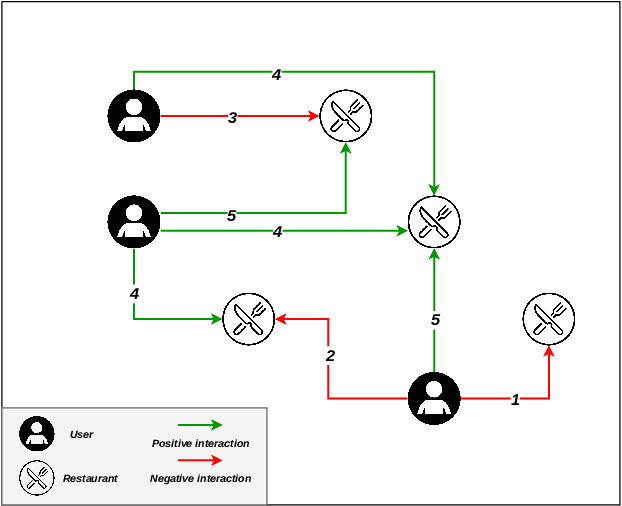

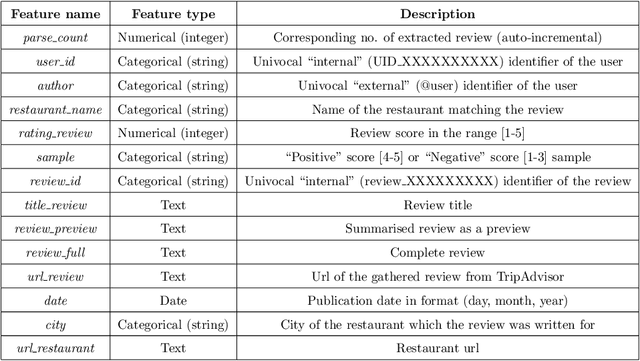

Abstract:There are many contexts where dyadic data is present. Social networking is a well-known example, where transparency has grown in importance. In these contexts, pairs of items are linked, building a network where interactions play a crucial role. Explaining why these relationships are established is core to addressing transparency. These explanations are often presented using text, thanks to the spread of natural language understanding tasks. We have focused on the TripAdvisor platform, considering the applicability to other dyadic data contexts. The items are a subset of users and restaurants, and the interactions are the reviews posted by these users. Our aim is to represent and explain pairs (user, restaurant) established by agents (e.g., a recommender system or a paid promotion mechanism), so that personalisation is taken into account. We propose the PTER (Personalised TExt-based Reviews) model. We predict, from the available reviews for a given restaurant, those that fit the specific user interactions. PTER leverages the BERT (Bidirectional Encoder Representations from Transformers) language model. We customised a deep neural network following the feature-based approach. The performance metrics show the validity of our labelling proposal. We defined an evaluation framework based on a clustering process to assess our personalised representation. PTER clearly outperforms the proposed adversary in 5 of the 6 datasets, with a minimum ratio improvement of 4%.

E2E-FS: An End-to-End Feature Selection Method for Neural Networks

Dec 14, 2020

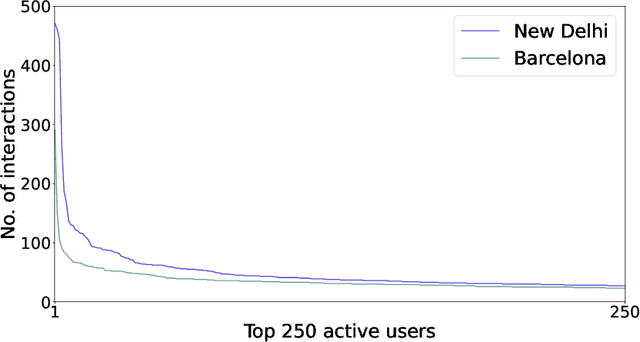

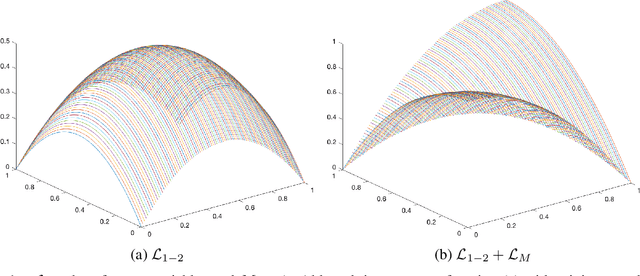

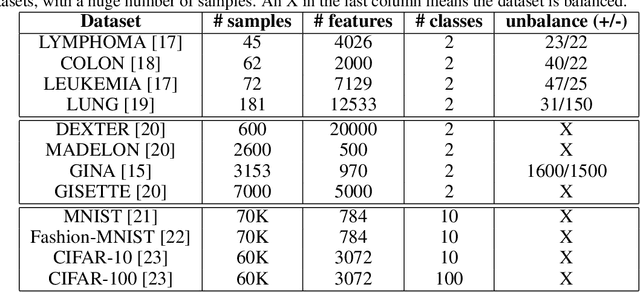

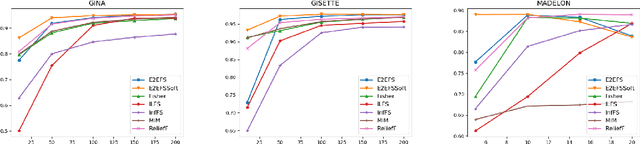

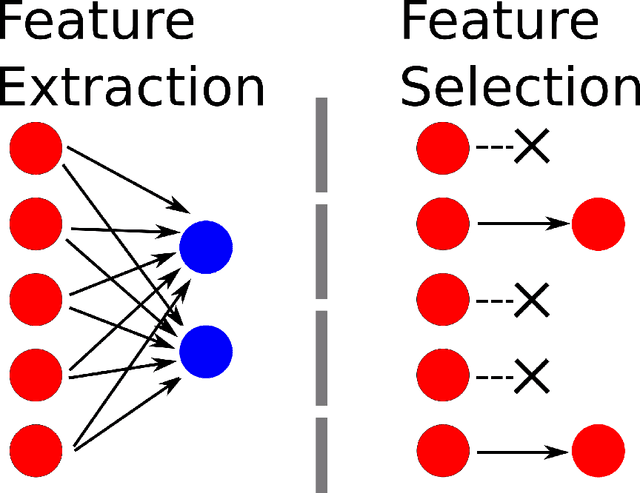

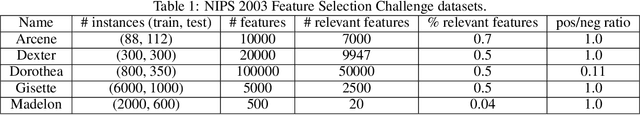

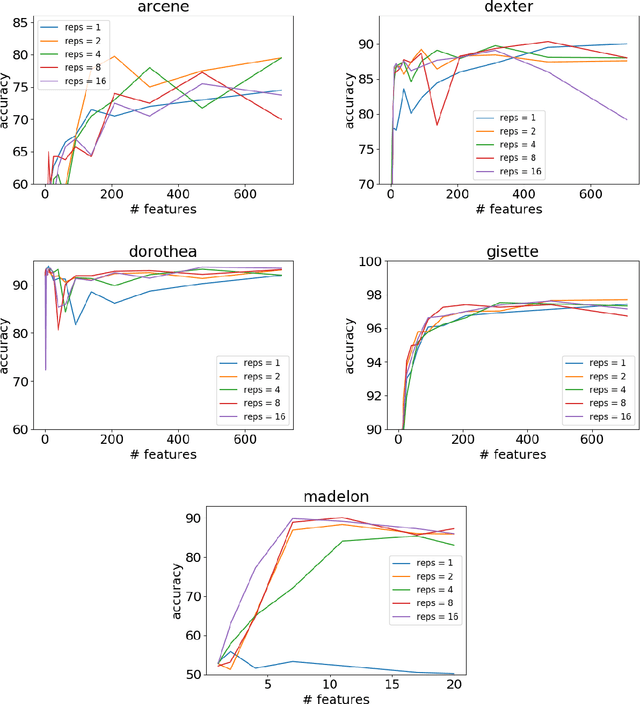

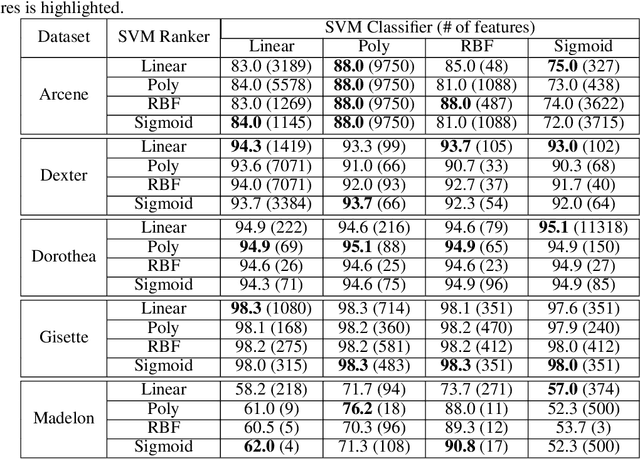

Abstract:Classic embedded feature selection algorithms are often divided into two large groups: tree-based algorithms and lasso variants. The two approaches focus on different aspects: while the tree-based algorithms provide a clear explanation about which variables are being used to trigger a certain output, lasso-like approaches sacrifice a detailed explanation in favor of increasing their accuracy. In this paper, we present a novel embedded feature selection algorithm, called End-to-End Feature Selection (E2E-FS), that aims to provide both accuracy and explainability in a clever way. Despite having non-convex regularization terms, our algorithm, similar to the lasso approach, is solved with gradient descent techniques, introducing some restrictions that force the model to specifically select a maximum number of features that are going to be used subsequently by the classifier. Although these are hard restrictions, the experimental results obtained show that this algorithm can be used with any learning model that is trained using a gradient descent algorithm.

A scalable saliency-based Feature selection method with instance level information

Apr 30, 2019

Abstract:Classic feature selection techniques remove those features that are either irrelevant or redundant, achieving a subset of relevant features that help to provide a better knowledge extraction. This allows the creation of compact models that are easier to interpret. Most of these techniques work over the whole dataset, but they are unable to provide the user with useful information when only instance-level information is needed. In short, given any example, classic feature selection algorithms do not give any information about which features are most relevant for that sample. This work aims to overcome this handicap by developing a novel feature selection method, called Saliency-based Feature Selection (SFS), based on deep-learning saliency techniques. Our experimental results show that this algorithm can be successfully used not only in Neural Networks, but also under any given architecture trained by using Gradient Descent techniques.
