Luis de-Marcos

Distributed Correlation-Based Feature Selection in Spark

Jan 31, 2019

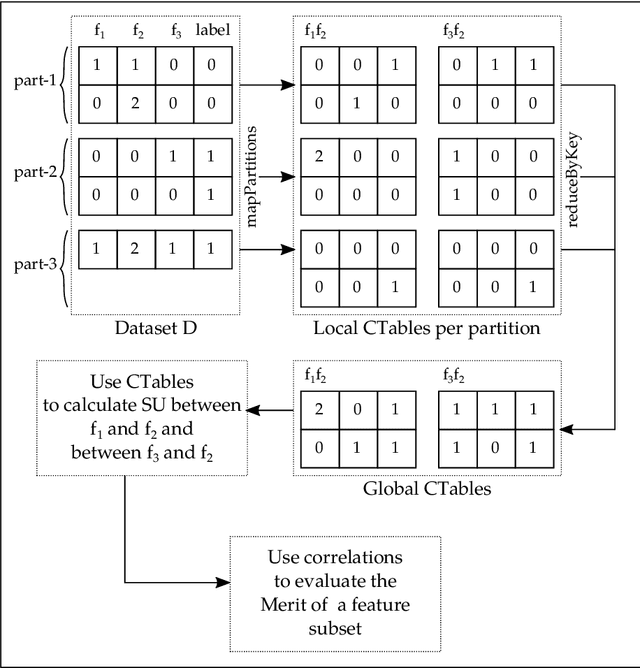

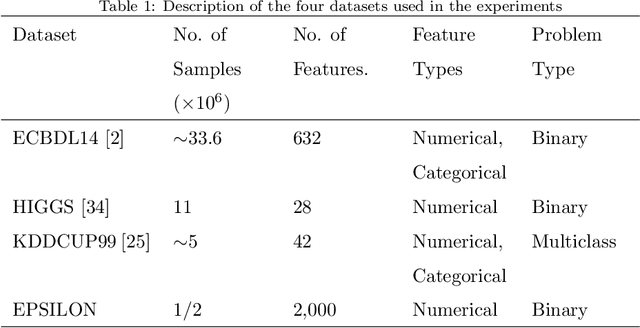

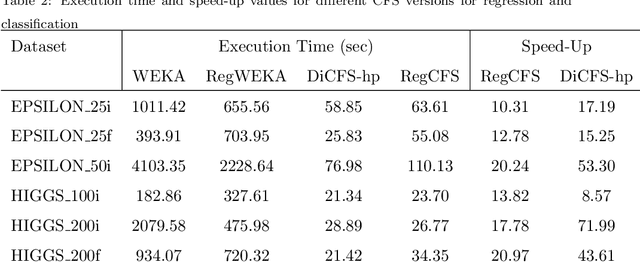

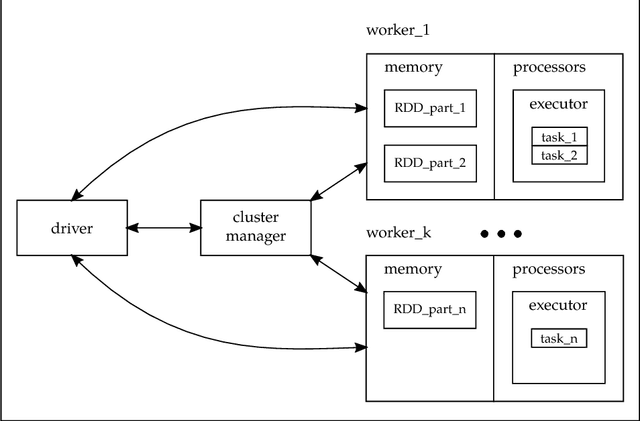

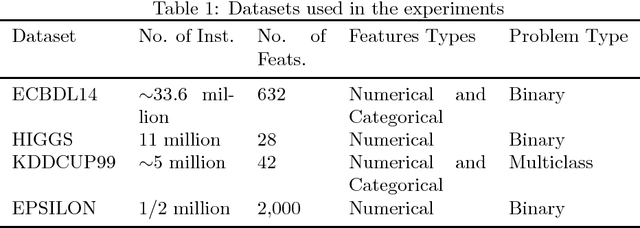

Abstract: CFS (Correlation-Based Feature Selection) is a feature selection (FS) algorithm that has been successfully applied to classification problems in many domains. We describe Distributed CFS (DiCFS), a completely redesigned, scalable, parallel and distributed version of the CFS algorithm that can handle the large volumes of data typical of big data applications. Two versions of the algorithm were implemented and compared using the Apache Spark cluster computing model, which is gaining popularity due to its much faster processing times compared with Hadoop's MapReduce model. We tested our algorithms on four publicly available datasets, each consisting of a large number of instances and two also consisting of a large number of features. The results show that our algorithms were superior in terms of both time efficiency and scalability. By leveraging a computer cluster, they were able to handle larger datasets than the non-distributed WEKA version while maintaining the quality of the results: our algorithms returned exactly the same features as the original algorithm available in WEKA.

Distributed ReliefF based Feature Selection in Spark

Nov 01, 2018

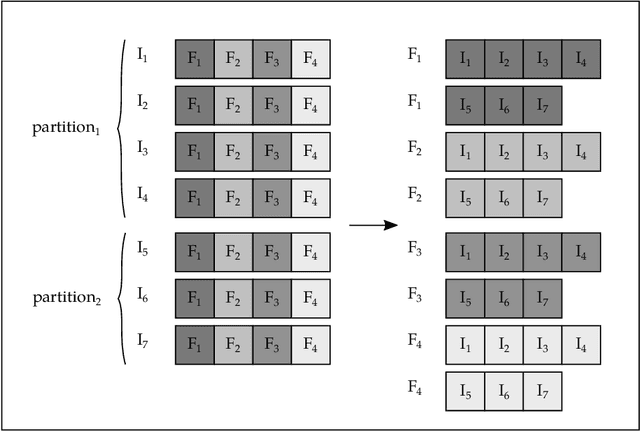

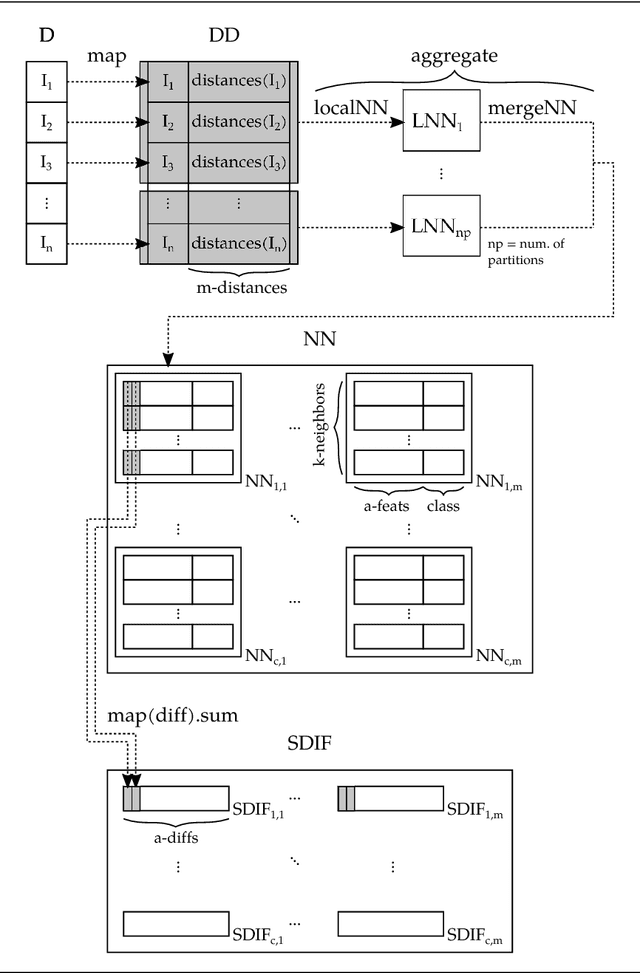

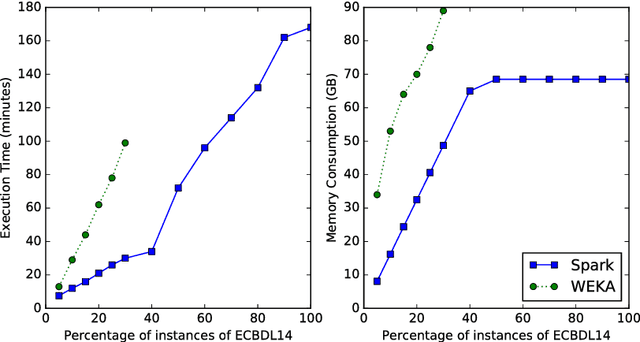

Abstract: Feature selection (FS) is a key research area in the machine learning and data mining fields. Removing irrelevant and redundant features usually helps to reduce the effort required to process a dataset while maintaining or even improving the processing algorithm's accuracy. However, traditional algorithms designed to run on a single machine lack the scalability to deal with the increasing amount of data that has become available in the current Big Data era. ReliefF is one of the most important algorithms successfully implemented in many FS applications. In this paper, we present DiReliefF, a completely redesigned distributed version of the popular ReliefF algorithm based on the novel Spark cluster computing model. Spark is growing in popularity due to its much faster processing times compared with Hadoop's MapReduce model implementation. The effectiveness of our proposal is tested on four publicly available datasets, all of them with a large number of instances and two of them also with a large number of features. Subsets of these datasets were also used to compare the results to a non-distributed implementation of the algorithm. The results show that the non-distributed implementation is unable to handle such large volumes of data without specialized hardware, while our design can process them in a scalable way with much better processing times and memory usage.
