Amir Samadi

Good Data Is All Imitation Learning Needs

Sep 26, 2024

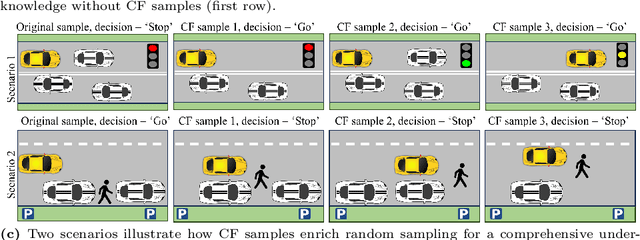

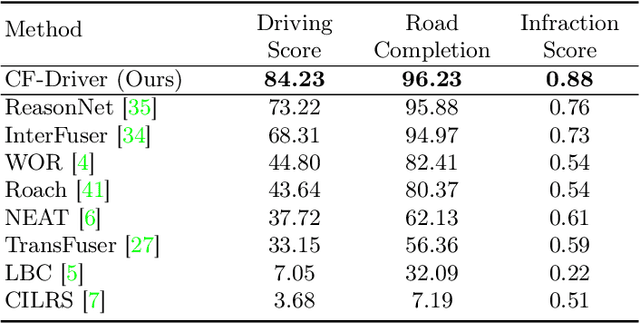

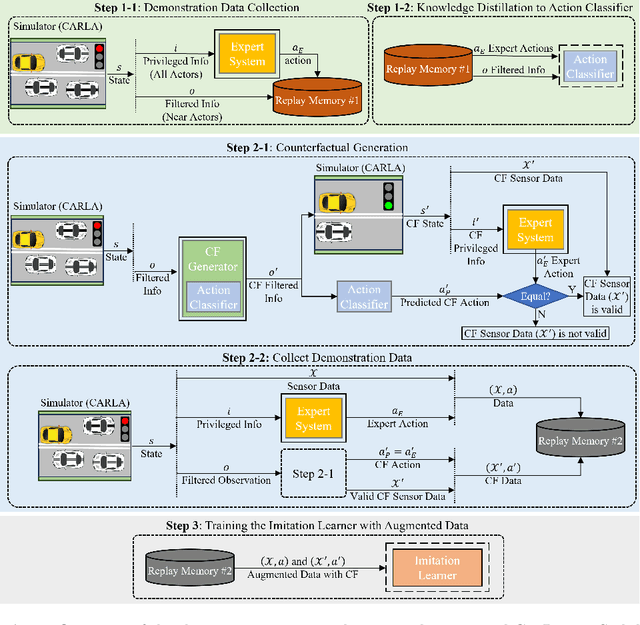

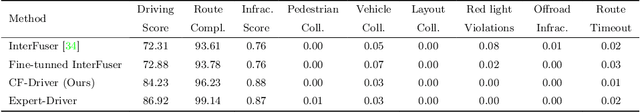

Abstract: In this paper, we address the limitations of traditional teacher-student models, imitation learning, and behaviour cloning in the context of Autonomous/Automated Driving Systems (ADS), where these methods often struggle with incomplete coverage of real-world scenarios. To enhance the robustness of such models, we introduce the use of Counterfactual Explanations (CFEs) as a novel data augmentation technique for end-to-end ADS. By generating training samples near decision boundaries through minimal input modifications, CFEs lead to a more comprehensive representation of expert driver strategies, particularly in safety-critical scenarios. This approach can therefore help improve the model's ability to handle rare and challenging driving events, such as anticipating pedestrians darting out, ultimately leading to safer and more trustworthy decision-making for ADS. Our experiments in the CARLA simulator demonstrate that CF-Driver outperforms the current state-of-the-art method, achieving a higher driving score and lower infraction rates. Specifically, CF-Driver attains a driving score of 84.2, surpassing the previous best model by 15.02 percentage points. These results highlight the effectiveness of incorporating CFEs in training end-to-end ADS. To foster further research, the CF-Driver code is made publicly available.

SAFE-RL: Saliency-Aware Counterfactual Explainer for Deep Reinforcement Learning Policies

Apr 28, 2024

Abstract: While Deep Reinforcement Learning (DRL) has emerged as a promising solution for intricate control tasks, the lack of explainability of the learned policies impedes its uptake in safety-critical applications, such as automated driving systems (ADS). Counterfactual (CF) explanations have recently gained prominence for their ability to interpret black-box Deep Learning (DL) models. CF examples are associated with minimal changes in the input, resulting in a complementary output by the DL model. Finding such alterations, particularly for high-dimensional visual inputs, poses significant challenges. Besides, the temporal dependency introduced by the reliance of the DRL agent action on a history of past state observations further complicates the generation of CF examples. To address these challenges, we propose using a saliency map to identify the most influential input pixels across the sequence of past states observed by the agent. Then, we feed this map to a deep generative model, enabling the generation of plausible CFs with constrained modifications centred on the salient regions. We evaluate the effectiveness of our framework in diverse domains, including ADS and the Atari Pong, Pacman, and Space Invaders games, using traditional performance metrics such as validity, proximity, and sparsity. Experimental results demonstrate that this framework generates more informative and plausible CFs than the state-of-the-art for a wide range of environments and DRL agents. To foster research in this area, we have made our datasets and code publicly available at https://github.com/Amir-Samadi/SAFE-RL.
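
The abstract above names validity, proximity, and sparsity as evaluation metrics without defining them. The sketch below shows one common way to compute them for a flat feature vector. The exact formulas are assumptions (definitions vary between papers and may differ from those used in SAFE-RL), and `toy_policy`, `original`, and `counterfactual` are hypothetical names used only for illustration.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def validity(model: Callable[[Vector], int],
             counterfactuals: Sequence[Vector],
             targets: Sequence[int]) -> float:
    """Fraction of counterfactuals that the model assigns to the target label."""
    hits = sum(1 for cf, t in zip(counterfactuals, targets) if model(cf) == t)
    return hits / len(counterfactuals)


def proximity(original: Vector, counterfactual: Vector) -> float:
    """Mean absolute (L1) difference per feature; lower means closer."""
    total = sum(abs(a - b) for a, b in zip(original, counterfactual))
    return total / len(original)


def sparsity(original: Vector, counterfactual: Vector, tol: float = 1e-6) -> float:
    """Fraction of features that were changed; lower means sparser edits."""
    changed = sum(1 for a, b in zip(original, counterfactual) if abs(a - b) > tol)
    return changed / len(original)


def toy_policy(x: Vector) -> int:
    """Hypothetical stand-in for a black-box policy: action 1 if the sum exceeds 1."""
    return 1 if sum(x) > 1.0 else 0


# A hypothetical input and a counterfactual that edits a single feature.
original = [0.2, 0.3, 0.1, 0.0]
counterfactual = [0.2, 0.3, 0.1, 0.5]

val = validity(toy_policy, [counterfactual], [1])
prox = proximity(original, counterfactual)
spars = sparsity(original, counterfactual)
```

Under these definitions, a good counterfactual flips the model's output (high validity) while staying close to the original input (low proximity) and touching few features (low sparsity).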

Taming Transformers for Realistic Lidar Point Cloud Generation

Apr 08, 2024

Abstract: Diffusion Models (DMs) have achieved State-Of-The-Art (SOTA) results in the Lidar point cloud generation task, benefiting from their stable training and iterative refinement during sampling. However, DMs often fail to realistically model Lidar raydrop noise due to their inherent denoising process. To retain the strength of iterative sampling while enhancing the generation of raydrop noise, we introduce LidarGRIT, a generative model that uses auto-regressive transformers to iteratively sample the range images in the latent space rather than image space. Furthermore, LidarGRIT utilises VQ-VAE to separately decode range images and raydrop masks. Our results show that LidarGRIT achieves superior performance compared to SOTA models on KITTI-360 and KITTI odometry datasets. Code available at: https://github.com/hamedhaghighi/LidarGRIT.

A Novel Deep Neural Network for Trajectory Prediction in Automated Vehicles Using Velocity Vector Field

Sep 19, 2023

Abstract: Anticipating the motion of other road users is crucial for automated driving systems (ADS), as it enables safe and informed downstream decision-making and motion planning. Unfortunately, contemporary learning-based approaches for motion prediction exhibit significant performance degradation as the prediction horizon increases or the observation window decreases. This paper proposes a novel technique for trajectory prediction that combines a data-driven learning-based method with a velocity vector field (VVF) generated from a nature-inspired concept, i.e., fluid flow dynamics. In this work, the vector field is incorporated as an additional input to a convolutional-recurrent deep neural network to help predict the most likely future trajectories given a sequence of bird's eye view scene representations. The performance of the proposed model is compared with state-of-the-art methods on the HighD dataset, demonstrating that the VVF inclusion improves the prediction accuracy for both short and long-term (5 s) time horizons. It is also shown that the accuracy remains consistent with decreasing observation windows, which alleviates the requirement of a long history of past observations for accurate trajectory prediction. Source code is available at: https://github.com/Amir-Samadi/VVF-TP.

SAFE: Saliency-Aware Counterfactual Explanations for DNN-based Automated Driving Systems

Jul 28, 2023

Abstract: A CF explainer identifies the minimum modifications in the input that would alter the model's output to its complement. In other words, a CF explainer computes the minimum modifications required to cross the model's decision boundary. Current deep generative CF models often work with user-selected features rather than focusing on the discriminative features of the black-box model. Consequently, such CF examples may not necessarily lie near the decision boundary, thereby contradicting the definition of CFs. To address this issue, we propose in this paper a novel approach that leverages saliency maps to generate more informative CF explanations. Source code is available at: https://github.com/Amir-Samadi//Saliency_Aware_CF.
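
To make the idea of restricting counterfactual edits to salient features concrete, here is a toy sketch. It is not the paper's method: the abstract describes a deep generative model guided by saliency maps, whereas this example swaps in a simple line search over a masked perturbation. The names `top_k_mask`, `masked_counterfactual`, and `linear_model`, along with the weights and step size, are all hypothetical.

```python
from typing import Callable, List, Optional, Sequence


def top_k_mask(saliency: Sequence[float], k: int) -> List[int]:
    """Binary mask that keeps only the k most salient feature indices."""
    ranked = sorted(range(len(saliency)), key=lambda i: saliency[i], reverse=True)
    keep = set(ranked[:k])
    return [1 if i in keep else 0 for i in range(len(saliency))]


def masked_counterfactual(model: Callable[[Sequence[float]], int],
                          x: Sequence[float],
                          saliency: Sequence[float],
                          k: int,
                          step: float = 0.05,
                          max_steps: int = 100) -> Optional[List[float]]:
    """Search for the smallest masked shift that changes the model's label.

    Only features selected by the saliency mask are modified; the others
    are copied unchanged. Returns None if no label flip is found.
    """
    mask = top_k_mask(saliency, k)
    original_label = model(x)
    for n in range(1, max_steps + 1):
        for sign in (1.0, -1.0):
            candidate = [xi + sign * n * step * m for xi, m in zip(x, mask)]
            if model(candidate) != original_label:
                return candidate
    return None


# Hypothetical linear classifier; its absolute weights serve as a saliency map.
WEIGHTS = [2.0, 0.0, 0.1, 0.0]


def linear_model(x: Sequence[float]) -> int:
    score = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1 if score > 1.0 else 0


x = [0.3, 0.5, 0.2, 0.9]
saliency = [abs(w) for w in WEIGHTS]
cf = masked_counterfactual(linear_model, x, saliency, k=1)
```

Because the mask selects only the most influential feature, the resulting counterfactual changes that feature just enough to cross the decision boundary and leaves the remaining inputs untouched. Editing a user-selected but non-discriminative feature (for example, index 1 here, whose weight is zero) could never flip the label, which illustrates the issue the abstract raises.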

Counterfactual Explainer Framework for Deep Reinforcement Learning Models Using Policy Distillation

Jun 01, 2023

Abstract: Deep Reinforcement Learning (DRL) has demonstrated promising capability in solving complex control problems. However, DRL applications in safety-critical systems are hindered by the inherent lack of robust verification techniques to assure their performance in such applications. One of the key requirements of the verification process is the development of effective techniques to explain the system functionality, i.e., why the system produces specific results in given circumstances. Recently, interpretation methods based on the Counterfactual (CF) explanation approach have been proposed to address the problem of explanation in DRLs. This paper proposes a novel CF explanation framework to explain the decisions made by a black-box DRL. To evaluate the efficacy of the proposed explanation framework, we carried out several experiments in the domains of automated driving systems and the Atari Pong game. Our analysis demonstrates that the proposed framework generates plausible and meaningful explanations for various decisions made by the underlying DRL models. Source code is available at: https://github.com/Amir-Samadi/Counterfactual-Explanation
