Roger Woodman

Towards A General-Purpose Motion Planning for Autonomous Vehicles Using Fluid Dynamics

Jun 09, 2024

Abstract:General-purpose motion planners for automated/autonomous vehicles promise to handle the task of motion planning (including tactical decision-making and trajectory generation) for various automated driving functions (ADF) in a diverse range of operational design domains (ODDs). The challenges of designing a general-purpose motion planner arise from several factors: a) A plethora of scenarios with different semantic information in each driving scene should be addressed, b) a strong coupling between long-term decision-making and short-term trajectory generation shall be taken into account, c) the nonholonomic constraints of the vehicle dynamics must be considered, and d) the motion planner must be computationally efficient to run in real-time. The existing methods in the literature are either limited to specific scenarios (logic-based) or are data-driven (learning-based) and therefore lack explainability, which is important for safety-critical automated driving systems (ADS). This paper proposes a novel general-purpose motion planning solution for ADS inspired by the theory of fluid mechanics. A computationally efficient technique, i.e., the lattice Boltzmann method, is then adopted to generate a spatiotemporal vector field, which in accordance with the nonholonomic dynamic model of the Ego vehicle is employed to generate feasible candidate trajectories. The trajectory optimising ride quality, efficiency and safety is finally selected to calculate the imminent control signals, i.e., throttle/brake and steering angle. The performance of the proposed approach is evaluated by simulations in highway driving, on-ramp merging, and intersection crossing scenarios, and it is found to outperform traditional motion planning solutions based on model predictive control (MPC).

A Survey on Hybrid Motion Planning Methods for Automated Driving Systems

Jun 08, 2024

Abstract:Motion planning is an essential element of the modular architecture of autonomous vehicles, serving as a bridge between upstream perception modules and downstream low-level control signals. Traditional motion planners were initially designed for specific Automated Driving Functions (ADFs), yet the evolving landscape of highly automated driving systems (ADS) requires motion planning for a wide range of ADFs, including unforeseen ones. This need has motivated the development of the "hybrid" approach in the literature, seeking to enhance motion planning performance by combining diverse techniques, such as data-driven (learning-based) and logic-driven (analytic) methodologies. Recent research endeavours have significantly contributed to the development of more efficient, accurate, and safe hybrid methods for Tactical Decision Making (TDM) and Trajectory Generation (TG), as well as integrating these algorithms into the motion planning module. Owing to the extensive variety and potential of hybrid methods, a timely and comprehensive review of the current literature is undertaken in this survey article. We classify the hybrid motion planners based on the types of components they incorporate, such as combinations of sampling-based with optimization-based/learning-based motion planners. The comparison of different classes is conducted by evaluating the addressed challenges and limitations, as well as assessing whether they focus on TG and/or TDM. We hope this approach will enable researchers in this field to gain in-depth insights into the identification of current trends in hybrid motion planning and shed light on promising areas for future research.

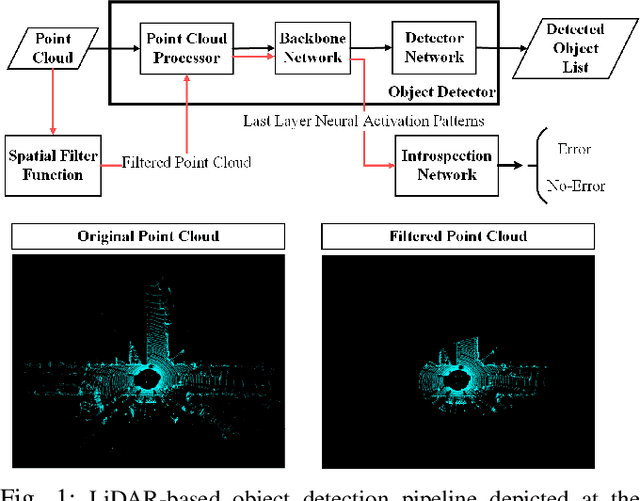

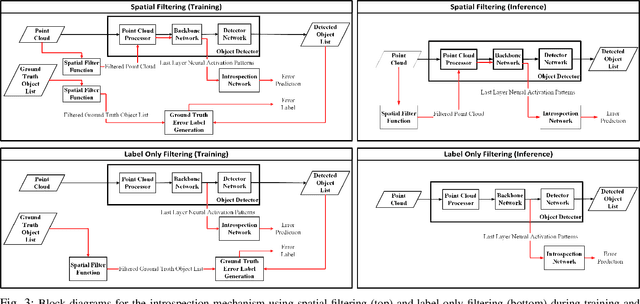

Integrity Monitoring of 3D Object Detection in Automated Driving Systems using Raw Activation Patterns and Spatial Filtering

May 13, 2024

Abstract:Deep neural network (DNN) models are widely used for object detection in automated driving systems (ADS). Yet, such models are prone to errors which can have serious safety implications. Introspection and self-assessment models that aim to detect such errors are therefore of paramount importance for the safe deployment of ADS. Current research on this topic has focused on techniques to monitor the integrity of the perception mechanism in ADS. Existing introspection models in the literature, however, largely concentrate on detecting perception errors by assigning equal importance to all parts of the input data frame to the perception module. This generic approach overlooks the varying safety significance of different objects within a scene, which obscures the recognition of safety-critical errors and makes it difficult to assess the reliability of perception in specific, crucial instances. Motivated by this shortcoming of the state of the art, this paper proposes a novel method that integrates analysis of the raw activation patterns of the underlying DNNs, employed by the perception module, with spatial filtering techniques. This novel approach enhances the accuracy of runtime introspection of the DNN-based 3D object detections by selectively focusing on an area of interest in the data, thereby contributing to the safety and efficacy of ADS perception self-assessment processes.

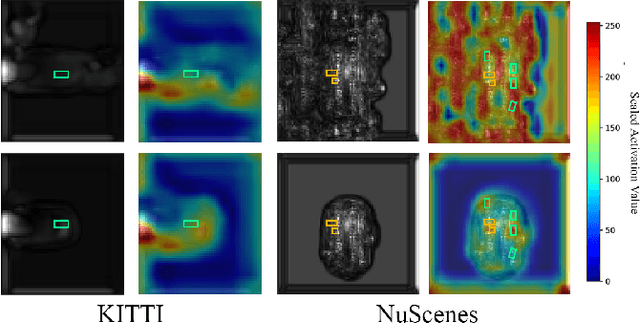

Run-time Monitoring of 3D Object Detection in Automated Driving Systems Using Early Layer Neural Activation Patterns

Apr 11, 2024

Abstract:Monitoring the integrity of object detection for errors within the perception module of automated driving systems (ADS) is paramount for ensuring safety. Despite recent advancements in deep neural network (DNN)-based object detectors, their susceptibility to detection errors, particularly in the less-explored realm of 3D object detection, remains a significant concern. State-of-the-art integrity monitoring (also known as introspection) mechanisms in 2D object detection mainly utilise the activation patterns in the final layer of the DNN-based detector's backbone. However, this may not sufficiently address the complexities and sparsity of data in 3D object detection. To this end, we conduct, in this article, an extensive investigation into the effects of activation patterns extracted from various layers of the backbone network for introspecting the operation of 3D object detectors. Through a comparative analysis using the KITTI and nuScenes datasets with PointPillars and CenterPoint detectors, we demonstrate that using earlier layers' activation patterns enhances the error detection performance of the integrity monitoring system, yet increases computational complexity. To address the real-time operation requirements in ADS, we also introduce a novel introspection method that combines activation patterns from multiple layers of the detector's backbone and report its performance.

Run-time Introspection of 2D Object Detection in Automated Driving Systems Using Learning Representations

Mar 02, 2024

Abstract:Reliable detection of various objects and road users in the surrounding environment is crucial for the safe operation of automated driving systems (ADS). Despite recent progress in developing highly accurate object detectors based on Deep Neural Networks (DNNs), they still remain prone to detection errors, which can lead to fatal consequences in safety-critical applications such as ADS. An effective remedy to this problem is to equip the system with run-time monitoring, known as introspection in the context of autonomous systems. Motivated by this, we introduce a novel introspection solution, which operates at the frame level for DNN-based 2D object detection and leverages neural network activation patterns. The proposed approach pre-processes the neural activation patterns of the object detector's backbone using several different modes. To provide extensive comparative analysis and fair comparison, we also adapt and implement several state-of-the-art (SOTA) introspection mechanisms for error detection in 2D object detection, using one-stage and two-stage object detectors evaluated on KITTI and BDD datasets. We compare the performance of the proposed solution in terms of error detection, adaptability to dataset shift, and computational and memory resource requirements. Our performance evaluation shows that the proposed introspection solution outperforms SOTA methods, achieving an absolute reduction in the missed error ratio of 9% to 17% in the BDD dataset.

A Novel Deep Neural Network for Trajectory Prediction in Automated Vehicles Using Velocity Vector Field

Sep 19, 2023

Abstract:Anticipating the motion of other road users is crucial for automated driving systems (ADS), as it enables safe and informed downstream decision-making and motion planning. Unfortunately, contemporary learning-based approaches for motion prediction exhibit significant performance degradation as the prediction horizon increases or the observation window decreases. This paper proposes a novel technique for trajectory prediction that combines a data-driven learning-based method with a velocity vector field (VVF) generated from a nature-inspired concept, i.e., fluid flow dynamics. In this work, the vector field is incorporated as an additional input to a convolutional-recurrent deep neural network to help predict the most likely future trajectories given a sequence of bird's eye view scene representations. The performance of the proposed model is compared with state-of-the-art methods on the HighD dataset demonstrating that the VVF inclusion improves the prediction accuracy for both short and long-term (5 sec) time horizons. It is also shown that the accuracy remains consistent with decreasing observation windows which alleviates the requirement of a long history of past observations for accurate trajectory prediction. Source codes are available at: https://github.com/Amir-Samadi/VVF-TP.