Amine Bohi

Improving Pain Classification using Spatio-Temporal Deep Learning Approaches with Facial Expressions

Jan 15, 2025

Abstract:Pain management and severity detection are crucial for effective treatment, yet traditional self-reporting methods are subjective and may be unsuitable for non-verbal individuals (people with limited speaking skills). To address this limitation, we explore automated pain detection using facial expressions. Our study leverages deep learning techniques to improve pain assessment by analyzing facial images from the Pain Emotion Faces Database (PEMF). We propose two novel approaches: (1) a hybrid ConvNeXt model combined with Long Short-Term Memory (LSTM) blocks to analyze video frames and predict pain presence, and (2) a Spatio-Temporal Graph Convolution Network (STGCN) integrated with LSTM to process landmarks from facial images for pain detection. Our work represents the first use of the PEMF dataset for binary pain classification and demonstrates the effectiveness of these models through extensive experimentation. The results highlight the potential of combining spatial and temporal features for enhanced pain detection, offering a promising advancement in objective pain assessment methodologies.

CG-MER: A Card Game-based Multimodal dataset for Emotion Recognition

Jan 14, 2025

Abstract:The field of affective computing has seen significant advancements in exploring the relationship between emotions and emerging technologies. This paper contributes to this field by introducing a comprehensive French multimodal dataset designed specifically for emotion recognition. The dataset encompasses three primary modalities: facial expressions, speech, and gestures, providing a holistic perspective on emotions. Moreover, the dataset has the potential to incorporate additional modalities, such as Natural Language Processing (NLP), to expand the scope of emotion recognition research. The dataset was collected by engaging participants in card game sessions, where they were prompted to express a range of emotions while responding to diverse questions. The study included 10 sessions with 20 participants (9 females and 11 males). The dataset serves as a valuable resource for furthering research in emotion recognition and provides an avenue for exploring the intricate connections between human emotions and digital technologies.

EmoNeXt: an Adapted ConvNeXt for Facial Emotion Recognition

Jan 14, 2025

Abstract:Facial expressions play a crucial role in human communication, serving as a powerful means to express a wide range of emotions. With advancements in artificial intelligence and computer vision, deep neural networks have emerged as effective tools for facial emotion recognition. In this paper, we propose EmoNeXt, a novel deep learning framework for facial expression recognition based on an adapted ConvNeXt architecture. We integrate a Spatial Transformer Network (STN) to focus on feature-rich regions of the face and Squeeze-and-Excitation blocks to capture channel-wise dependencies. Moreover, we introduce a self-attention regularization term, encouraging the model to generate compact feature vectors. We demonstrate the superiority of our model over existing state-of-the-art deep learning models on the FER2013 dataset regarding emotion classification accuracy.
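
To make the Squeeze-and-Excitation idea mentioned in the abstract concrete, here is a minimal sketch in plain Python. It is not the EmoNeXt implementation: the function name `squeeze_excite`, the toy weights `w1` and `w2`, and the reduction ratio of 2 are all assumptions chosen for illustration, and biases are omitted.

```python
import math

def squeeze_excite(feature_maps, w1, w2):
    """Reweight the channels of a C x H x W feature map given as nested lists.

    w1 is a (C // r) x C reduction matrix and w2 a C x (C // r) expansion
    matrix. Both are hypothetical toy weights; biases are omitted.
    """
    # Squeeze: global average pooling yields one scalar per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_maps
    ]
    # Excitation: bottleneck layer with ReLU, then sigmoid gates in (0, 1).
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every activation in a channel by that channel's gate.
    scaled = [
        [[gate * value for value in row] for row in channel]
        for channel, gate in zip(feature_maps, gates)
    ]
    return scaled, gates

# Toy input: 2 channels of 2x2 activations, reduction ratio r = 2.
x = [[[1.0, 1.0], [1.0, 1.0]], [[4.0, 4.0], [4.0, 4.0]]]
w1 = [[0.5, 0.5]]     # shape 1 x 2
w2 = [[1.0], [-1.0]]  # shape 2 x 1
y, gates = squeeze_excite(x, w1, w2)
print(gates)
```

With these toy weights the first channel is amplified relative to the second. In a trained network the gates are learned, so the reweighting depends on the input image.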

Multi-face emotion detection for effective Human-Robot Interaction

Jan 13, 2025

Abstract:The integration of dialogue interfaces in mobile devices has become ubiquitous, providing a wide array of services. As technology progresses, humanoid robots designed with human-like features to interact effectively with people are gaining prominence, and the use of advanced human-robot dialogue interfaces is continually expanding. In this context, emotion recognition plays a crucial role in enhancing human-robot interaction by enabling robots to understand human intentions. This research proposes a facial emotion detection interface integrated into a mobile humanoid robot, capable of displaying real-time emotions from multiple individuals on a user interface. To this end, various deep neural network models for facial expression recognition were developed and evaluated under consistent computer-based conditions, yielding promising results. Afterwards, a trade-off between accuracy and memory footprint was carefully considered to effectively implement this application on a mobile humanoid robot.

Comparing vector fields across surfaces: interest for characterizing the orientations of cortical folds

Jun 14, 2021

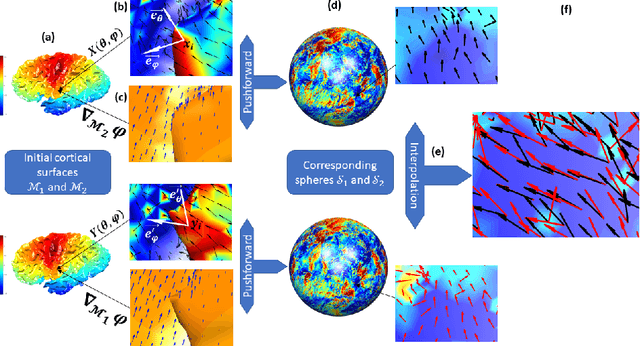

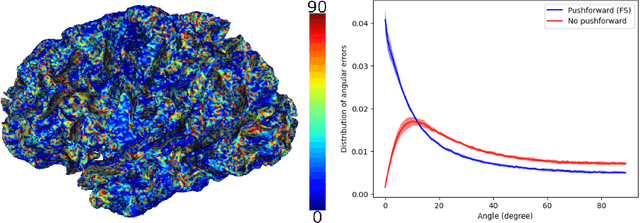

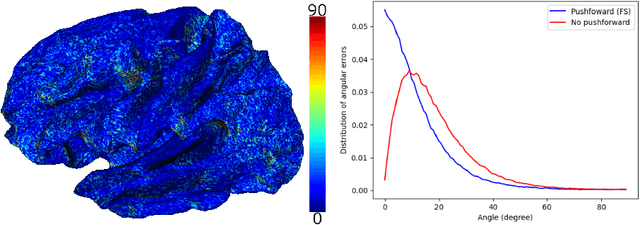

Abstract:Vector fields defined on surfaces constitute relevant and useful representations but are rarely used. One reason might be that comparing vector fields across two surfaces of the same genus is not trivial: it requires transporting the vector fields from the original surfaces onto a common domain. In this paper, we propose a framework to achieve this task by mapping the vector fields onto a common space, using some notions of differential geometry. The proposed framework enables the computation of statistics on vector fields. We demonstrate its practical interest with an application to real data, giving a quantitative assessment of the reproducibility of curvature directions that describe the complex geometry of cortical folding patterns. The proposed framework is general and can be applied to different types of vector fields and surfaces, allowing for a large number of high-potential applications in medical imaging.

Characterization of surface motion patterns in highly deformable soft tissue organs from dynamic Magnetic Resonance Imaging

Oct 09, 2020

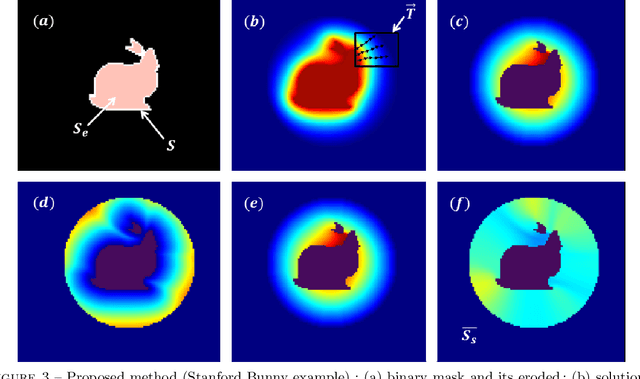

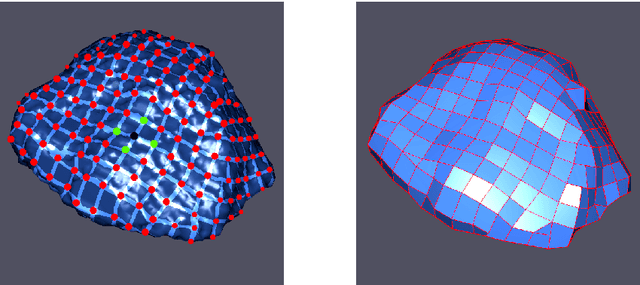

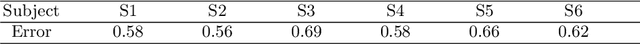

Abstract:In this work, we present a pipeline for characterization of bladder surface dynamics during deep respiratory movements from dynamic Magnetic Resonance Imaging (MRI). Dynamic MRI may capture temporal anatomical changes in soft tissue organs with high contrast, but the obtained sequences usually suffer from limited volume coverage, which makes the high-resolution reconstruction of organ shape trajectories a major challenge in temporal studies. For a compact shape representation, the reconstructed temporal data with full volume coverage are first used to establish subject-specific dynamical 4D mesh sequences using the large deformation diffeomorphic metric mapping (LDDMM) framework. Then, we perform a statistical characterization of organ shape changes from mechanical parameters such as mesh elongations and distortions. Since shape space is curved, we also use the intrinsic curvature changes as a metric to quantify surface evolution. However, the numerical computation of curvature is strongly dependent on the surface parameterization (i.e. the mesh resolution). To cope with this dependency, we propose a non-parametric level set method to evaluate spatio-temporal surface evolution. Independent of parameterization and minimizing the length of the geodesic curves, it smoothly shrinks the surface curves towards a sphere by minimizing a Dirichlet energy. An Eulerian PDE approach is used for evaluation of surface dynamics from the curve-shortening flow. Results demonstrate the numerical stability of the derived descriptor throughout smooth continuous-time organ trajectories. Intercorrelations between individuals' motion patterns from different geometric features are computed using the Laplace-Beltrami Operator (LBO) eigenfunctions for spherical mapping.
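
The abstract describes shrinking curves by minimizing a Dirichlet energy. As a rough intuition only, the sketch below applies explicit gradient descent to the discrete Dirichlet energy of a closed planar polygon. This is a simplified stand-in, not the level set or Eulerian PDE method of the paper; the names `dirichlet_energy` and `flow_step`, the step size `dt = 0.25`, and the wavy test curve are assumptions made for illustration.

```python
import math

def dirichlet_energy(points):
    """Discrete Dirichlet energy: sum of squared edge lengths of a closed polygon."""
    n = len(points)
    return sum(
        (points[(i + 1) % n][0] - points[i][0]) ** 2
        + (points[(i + 1) % n][1] - points[i][1]) ** 2
        for i in range(n)
    )

def flow_step(points, dt=0.25):
    """Move each vertex along the negative energy gradient.

    The gradient at vertex i is proportional to 2*p_i - p_(i-1) - p_(i+1),
    so the update pulls every vertex toward the midpoint of its neighbours.
    """
    n = len(points)
    new_points = []
    for i in range(n):
        px, py = points[i]
        ax, ay = points[i - 1]        # previous vertex (wraps around at i = 0)
        bx, by = points[(i + 1) % n]  # next vertex
        new_points.append(
            (px + dt * (ax + bx - 2 * px), py + dt * (ay + by - 2 * py))
        )
    return new_points

# Hypothetical test curve: a circle with a five-lobed ripple, 40 vertices.
n = 40
curve = []
for i in range(n):
    t = 2 * math.pi * i / n
    r = 1 + 0.3 * math.cos(5 * t)
    curve.append((r * math.cos(t), r * math.sin(t)))

energies = [dirichlet_energy(curve)]
for _ in range(50):
    curve = flow_step(curve)
    energies.append(dirichlet_energy(curve))

print(energies[0], energies[-1])
```

With `dt = 0.25` each new vertex is a convex combination of old vertices, so both the energy and the total polygon length are non-increasing from step to step. High-frequency ripples decay faster than the overall shape, which is the smoothing behaviour the abstract alludes to.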

A new geodesic-based feature for characterization of 3D shapes: application to soft tissue organ temporal deformations

Mar 18, 2020

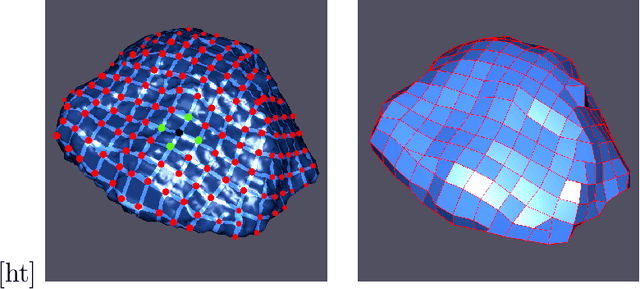

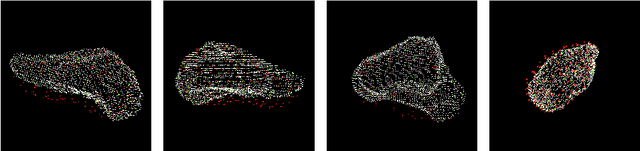

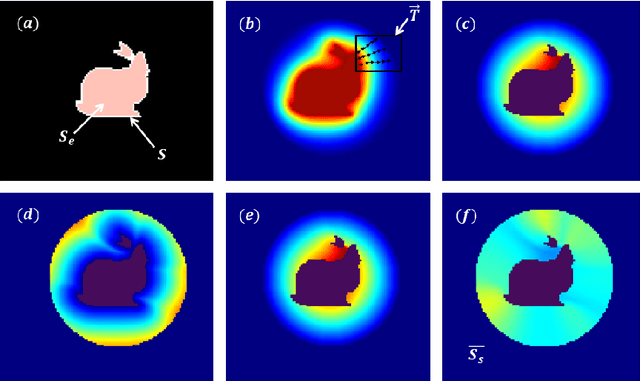

Abstract:In this paper, we propose a method for characterizing 3D shapes from point clouds and we show a direct application to a study of organ temporal deformations. As an example, we characterize the behavior of a bladder during a forced respiratory motion with a reduced number of 3D surface points. First, a set of equidistant points representing the vertices of a quadrilateral mesh for the surface in the first time frame is tracked throughout a long dynamic MRI sequence using a Large Deformation Diffeomorphic Metric Mapping (LDDMM) framework. Second, a novel geometric feature which is invariant to scaling and rotation is proposed for characterizing the temporal organ deformations by employing an Eulerian Partial Differential Equations (PDEs) methodology. We demonstrate the robustness of our feature on both synthetic 3D shapes and realistic dynamic MRI data portraying the bladder deformation during forced respiratory motions. Promising results are obtained, showing that the proposed feature may be useful for several computer vision applications such as medical imaging, aerodynamics and robotics.

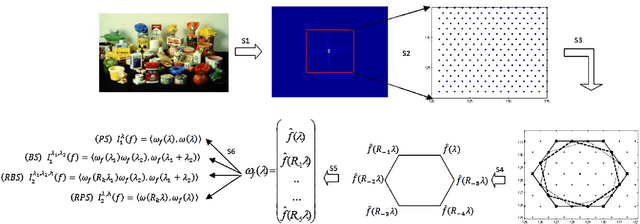

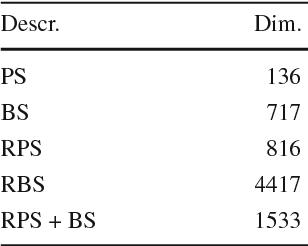

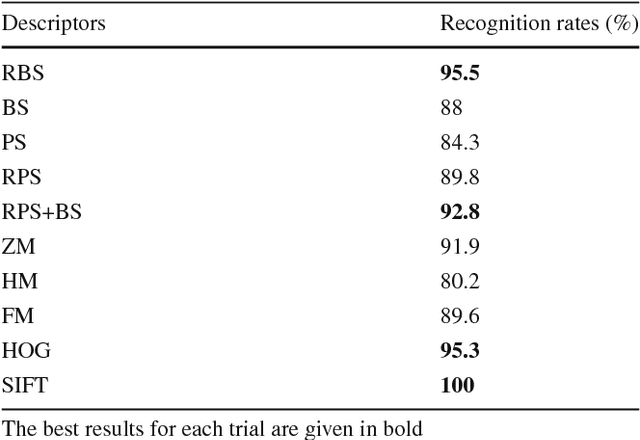

Fourier descriptors based on the structure of the human primary visual cortex with applications to object recognition

Jun 28, 2016

Abstract:In this paper, we propose a supervised object recognition method using new global features inspired by the model of the human primary visual cortex V1 as the semidiscrete roto-translation group $SE(2,N) = \mathbb Z_N\rtimes \mathbb R^2$. The proposed technique is based on generalized Fourier descriptors on this group, which are invariant to natural geometric transformations (rotations, translations). These descriptors are then used to feed an SVM classifier. We have tested our method on the COIL-100 image database and the ORL face database, and compared it with other techniques based on traditional global and local descriptors. The results show that our approach appears highly efficient and stable to noise, in the presence of which it outperforms the other techniques analyzed in the paper.
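
The invariance property behind Fourier descriptors can be illustrated in the simplest possible setting. The sketch below is not the generalized descriptor on $SE(2,N)$ from the paper. It only shows the classical one-dimensional analogue on the cyclic group $\mathbb Z_N$, where the power spectrum of a signal is unchanged by cyclic translations. The helper names `dft` and `power_spectrum` and the sample signal are assumptions made for this example.

```python
import cmath

def dft(signal):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def power_spectrum(signal):
    """Squared DFT magnitudes; a cyclic shift only changes the DFT phases."""
    return [abs(c) ** 2 for c in dft(signal)]

# Hypothetical sample signal and a cyclically translated copy of it.
signal = [0.0, 1.0, 3.0, 2.0, 0.5, 0.0, 0.0, 1.5]
shifted = signal[3:] + signal[:3]

ps_a = power_spectrum(signal)
ps_b = power_spectrum(shifted)
max_diff = max(abs(a - b) for a, b in zip(ps_a, ps_b))
print(max_diff)  # close to zero, up to floating-point error
```

The power spectrum discards all phase information, so distinct signals can share it. Richer invariants that retain more of this information are one motivation for generalized descriptors such as those the abstract describes.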
