Amin Azari

Ultra-Low-Power IoT Communications: A novel address decoding approach for wake-up receivers

Nov 15, 2021

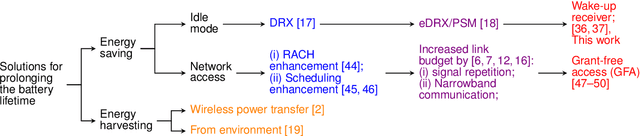

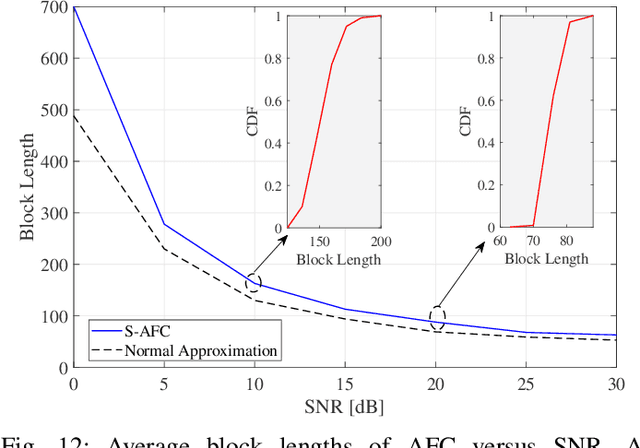

Abstract: Providing energy-efficient Internet of Things (IoT) connectivity has attracted significant attention in fifth-generation (5G) wireless networks and beyond. A potential solution for realizing a long-lasting network of IoT devices is to equip each IoT device with a wake-up receiver (WuR), so that devices are always accessible rather than always on. WuRs typically comprise a radio frequency demodulator, a sequence decoder, and a digital address decoder, and each is assigned a unique authentication address in the network. Although the literature on efficient demodulators is mature, it lacks research on fast, low-power, and reliable address decoders. As this module continuously monitors the received ambient energy for potential paging of the device, its contribution to the WuR's power consumption is crucial. Motivated by this need, a low-power, reliable address decoder is developed in this paper. We further investigate the integration of WuRs in low-power uplink/downlink communications and, using system-level energy analysis, characterize the operation regions in which a WuR can contribute significantly to energy saving. The device-level energy analysis confirms the superior performance of our decoder. The results show that the proposed decoder significantly outperforms the state of the art with a power consumption of 60 nW, at the cost of a negligible increase in decoding delay.

Cellular, Wide-Area, and Non-Terrestrial IoT: A Survey on 5G Advances and the Road Towards 6G

Jul 07, 2021

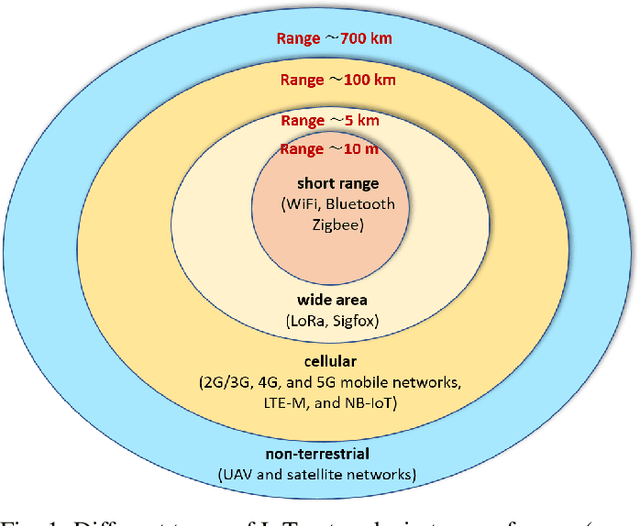

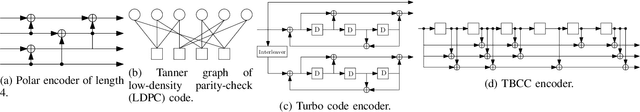

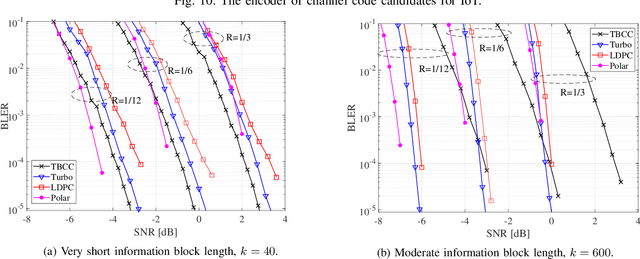

Abstract: The next wave of wireless technologies is proliferating in connecting things among themselves as well as to humans. In the era of the Internet of Things (IoT), billions of sensors, machines, vehicles, drones, and robots will be connected, making the world around us smarter. The IoT will encompass devices that must wirelessly communicate a diverse set of data gathered from the environment for myriad new applications. The ultimate goal is to extract insights from this data and develop solutions that improve quality of life and generate new revenue. Providing large-scale, long-lasting, reliable, and near real-time connectivity is the major challenge in enabling a smart connected world. This paper provides a comprehensive survey of existing and emerging communication solutions for serving IoT applications in the context of cellular, wide-area, and non-terrestrial networks. Specifically, it presents wireless technology enhancements for providing IoT access in fifth-generation (5G) and beyond cellular networks, as well as communication networks over the unlicensed spectrum. Aligned with the main key performance indicators of 5G and beyond-5G networks, we investigate solutions and standards that enable energy efficiency, reliability, low latency, and scalability (connection density) of current and future IoT networks. The solutions include grant-free access and channel coding for short-packet communications, non-orthogonal multiple access, and on-device intelligence. Further, a vision of new paradigm shifts in communication networks in the 2030s is provided, and the integration of the associated new technologies, such as artificial intelligence, non-terrestrial networks, and new spectra, is elaborated. Finally, future research directions toward beyond-5G IoT networks are pointed out.

* Submitted for review to IEEE CS&T

Machine Learning assisted Handover and Resource Management for Cellular Connected Drones

Jan 22, 2020

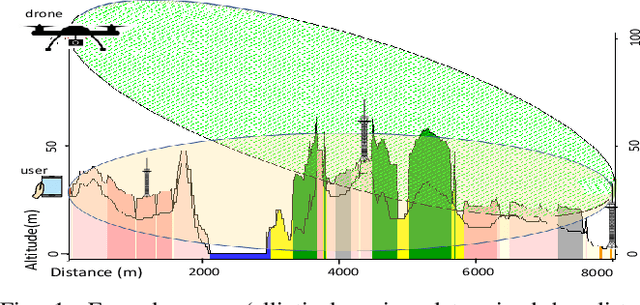

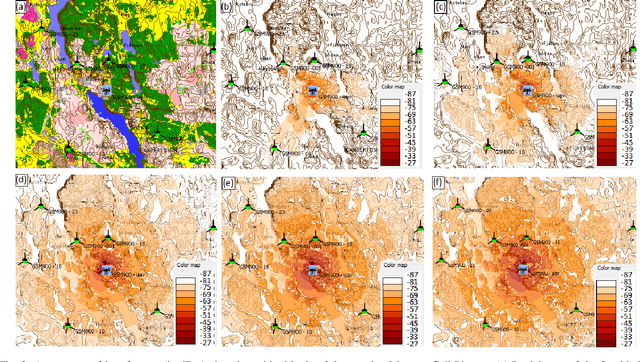

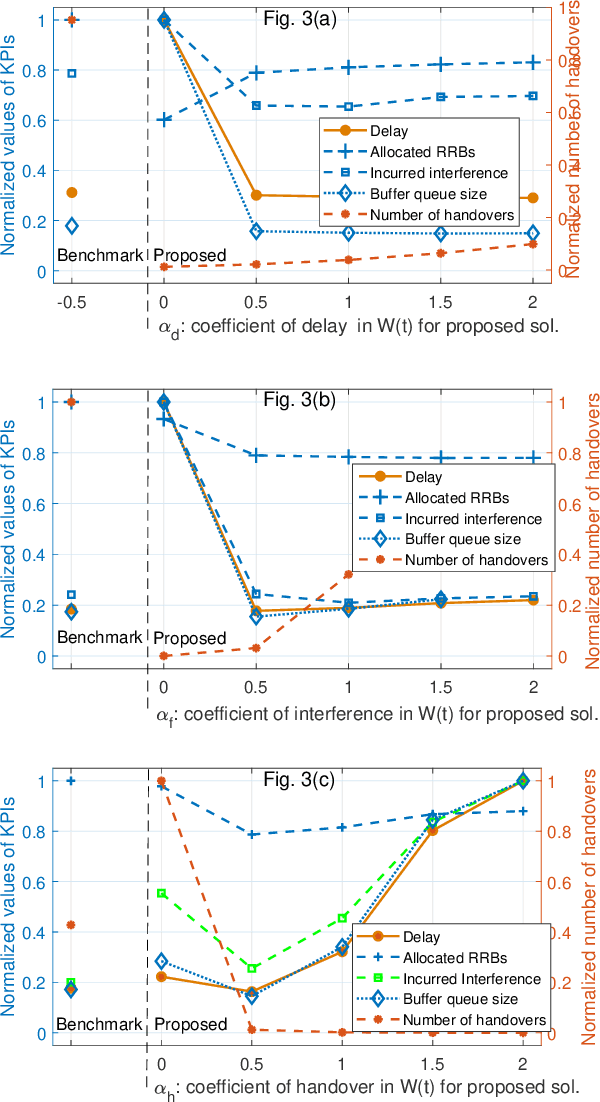

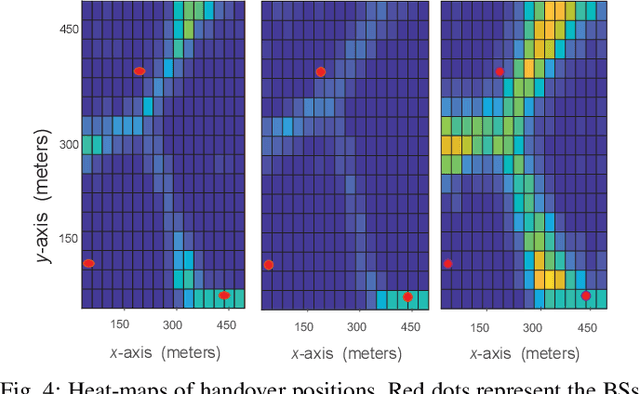

Abstract: Enabling cellular connectivity for drones introduces a wide set of challenges and opportunities. Communication of cellular-connected drones is influenced by 3-dimensional mobility and line-of-sight channel characteristics, which result in a higher number of handovers with increasing altitude. Our cell planning simulations for coexisting aerial and terrestrial users indicate that the severe interference from drones to base stations is a major challenge for uplink communications of terrestrial users. Here, we first present the major challenges in the coexistence of terrestrial and drone communications by considering real geographical network data for Stockholm. Then, we derive analytical models for the key performance indicators (KPIs), including communications delay and interference over cellular networks, and formulate the handover and radio resource management (H-RRM) optimization problem. Afterwards, we transform this problem into a machine learning problem and propose a deep reinforcement learning solution to the H-RRM problem. Finally, using simulation results, we show how the speed and altitude of drones, and the tolerable level of interference, shape the optimal H-RRM policy in the network. In particular, heat maps of handover decisions at different drone altitudes and speeds are presented, which motivate a revision of the legacy handover schemes and a redefinition of the boundaries of cells in the sky.

Cellular Traffic Prediction and Classification: a comparative evaluation of LSTM and ARIMA

Jun 03, 2019

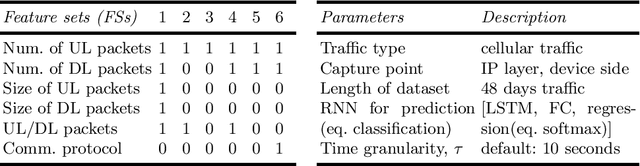

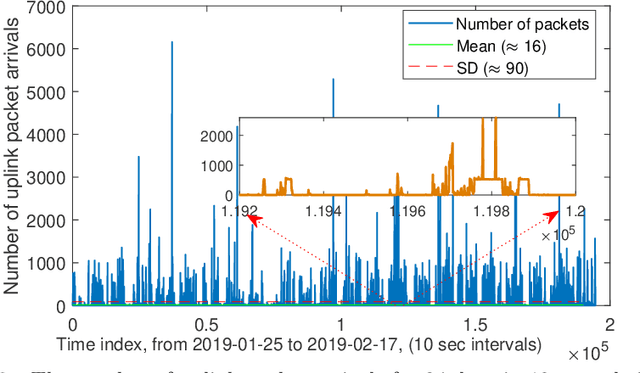

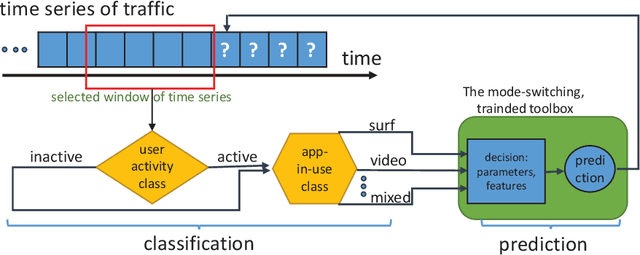

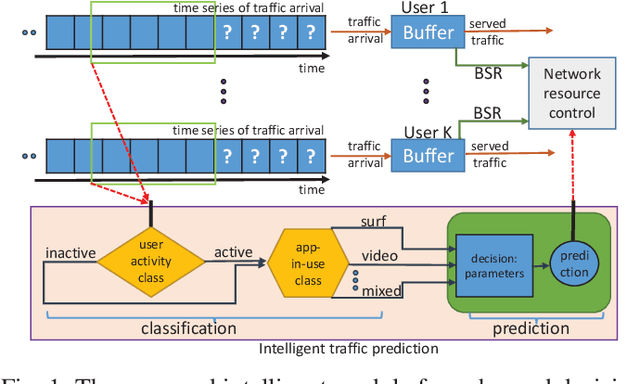

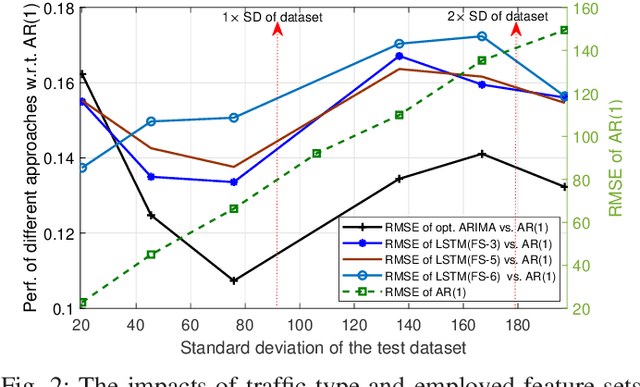

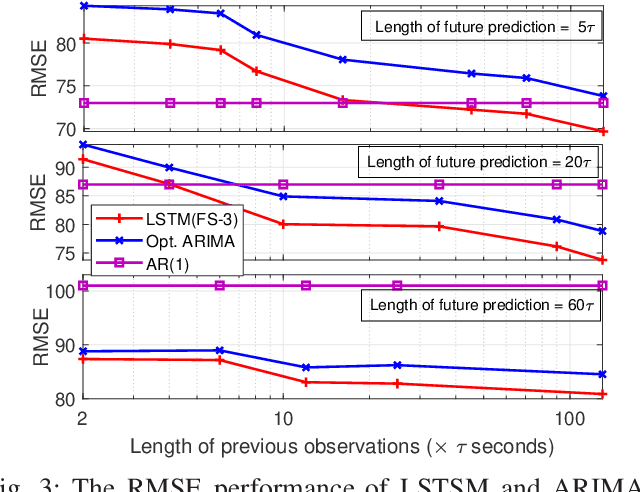

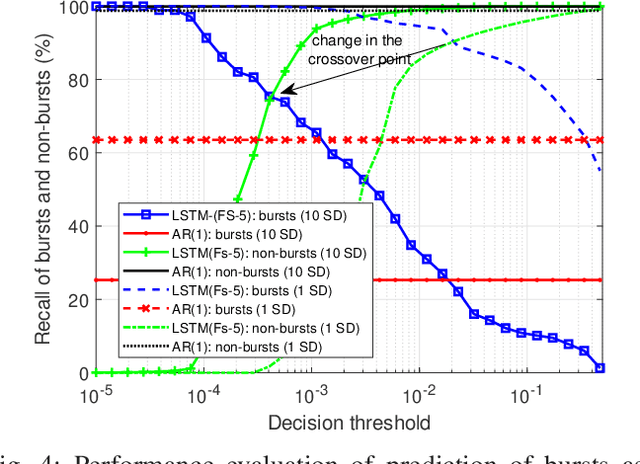

Abstract: Prediction of user traffic in cellular networks has attracted profound attention for improving resource utilization. In this paper, we study the problem of network traffic prediction and classification by employing standard machine learning and statistical learning time series prediction methods, including long short-term memory (LSTM) and autoregressive integrated moving average (ARIMA), respectively. We present an extensive experimental evaluation of the designed tools over a real network traffic dataset. Within this analysis, we explore the impact of different parameters on the effectiveness of the predictions. We further extend our analysis to the problem of network traffic classification and prediction of traffic bursts. The results, on the one hand, demonstrate the superior performance of LSTM over ARIMA in general, especially when the training time series is long enough and is augmented by a wisely selected set of features. On the other hand, the results shed light on the circumstances in which ARIMA performs close to the optimum with lower complexity.

User Traffic Prediction for Proactive Resource Management: Learning-Powered Approaches

May 09, 2019

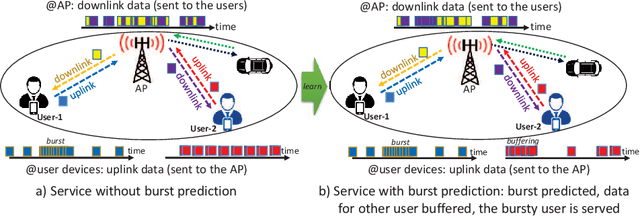

Abstract: Traffic prediction plays a vital role in efficient planning and usage of network resources in wireless networks. While traffic prediction in wired networks is an established field, there is a lack of research on the analysis of traffic in cellular networks, especially in a content-blind manner at the user level. Here, we shed light on this problem by designing traffic prediction tools that employ either statistical, rule-based, or deep machine learning methods. First, we present an extensive experimental evaluation of the designed tools over a real traffic dataset. Within this analysis, the impact of different parameters, such as the length of prediction, the feature set used in the analyses, and the granularity of data, on the accuracy of prediction is investigated. Second, given the coupling observed between the behavior of traffic and its generating application, we extend our analysis to the blind classification of applications generating the traffic based on the statistics of traffic arrival/departure. The results demonstrate the presence of a threshold number of previous observations, beyond which deep machine learning can outperform linear statistical learning, and below which statistical learning outperforms deep learning approaches. Further analysis reveals a strong coupling between this threshold, the length of future prediction, and the feature set in use. Finally, through a case study, we show how the experienced delay could be decreased by traffic arrival prediction.

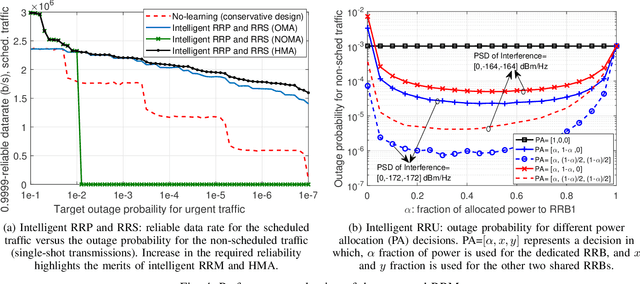

Risk-Aware Resource Allocation for URLLC: Challenges and Strategies with Machine Learning

Dec 22, 2018

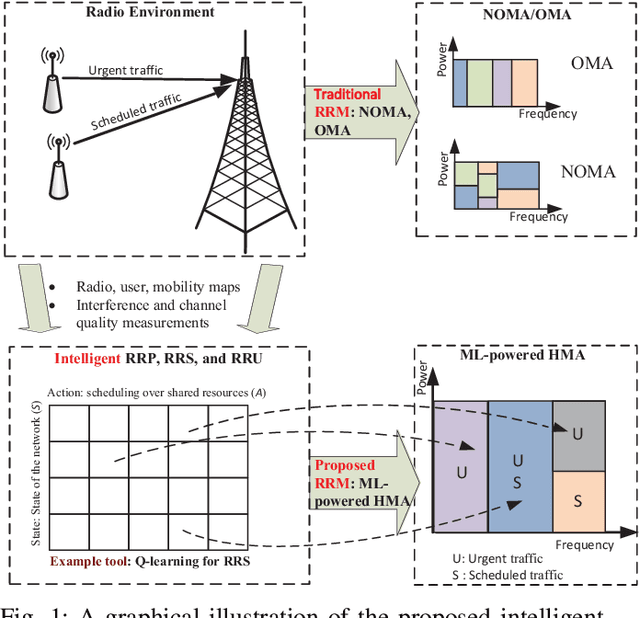

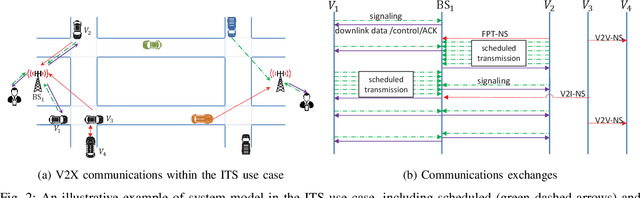

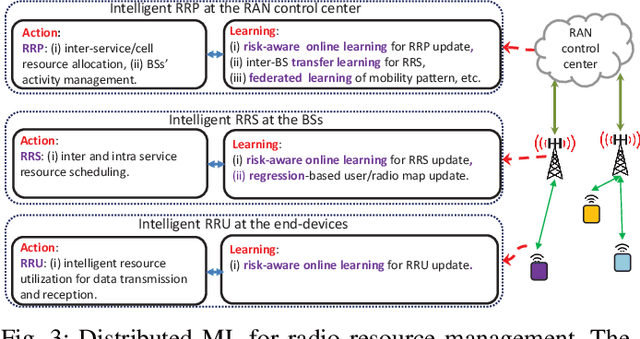

Abstract: Supporting ultra-reliable low-latency communications (URLLC) is a major challenge of 5G wireless networks. Stringent delay and reliability requirements need to be satisfied for both scheduled and non-scheduled URLLC traffic to enable a diverse set of 5G applications. Although physical and media access control layer solutions have been investigated for serving scheduled URLLC traffic alone, there is a lack of study on enabling transmission of non-scheduled URLLC traffic, especially in coexistence with the scheduled URLLC traffic. Machine learning (ML) is an important enabler for such a coexistence scenario due to its ability to exploit spatial/temporal correlation in user behaviors and in the use of radio resources. Hence, in this paper, we first study the coexistence design challenges, especially the radio resource management (RRM) problem, and propose a distributed risk-aware ML solution for RRM. The proposed solution benefits from hybrid orthogonal/non-orthogonal radio resource slicing, and proactively regulates the spectrum needed for satisfying the delay/reliability requirement of each URLLC traffic type. A case study is introduced to investigate the potential of the proposed RRM in serving coexisting URLLC traffic types. The results further provide insights into the benefits of leveraging intelligent RRM, e.g., a 75% increase in data rate with respect to the conservative design approach is achieved for the scheduled traffic, while the 99.99% reliability of both scheduled and non-scheduled traffic types is satisfied.
