Stojan Denic

Recurrent Neural Networks for Handover Management in Next-Generation Self-Organized Networks

Jun 11, 2020

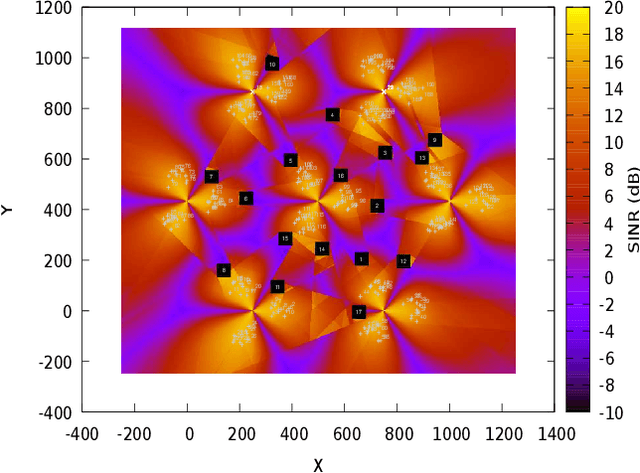

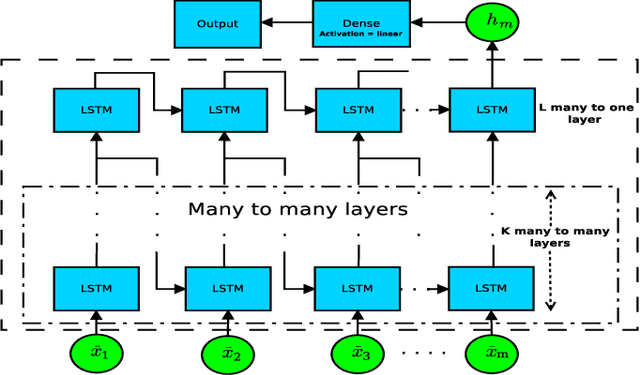

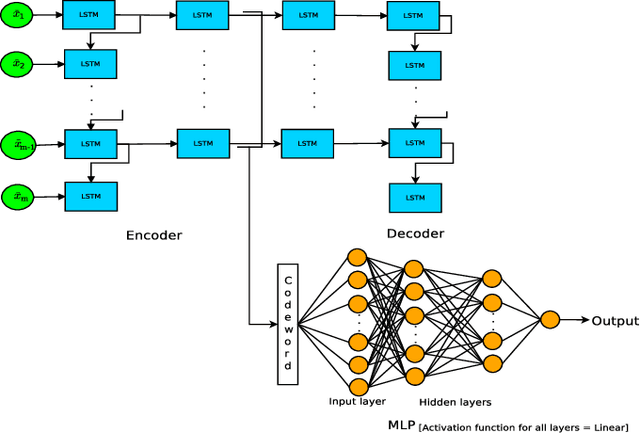

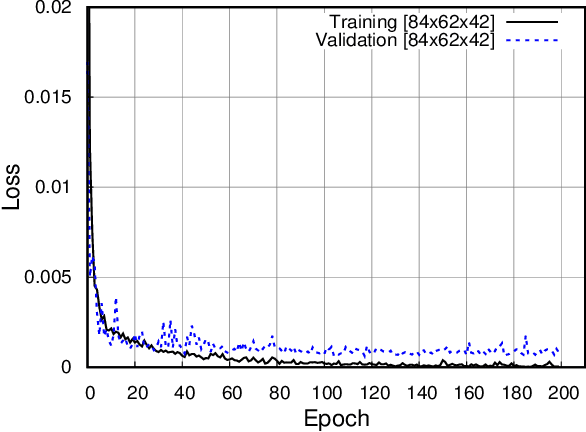

Abstract: In this paper, we discuss a handover management scheme for Next-Generation Self-Organized Networks. We propose to extract experience from full protocol stack data to make smart handover decisions in a multi-cell scenario, where users move and are challenged by deep outage zones. Traditional handover schemes have the drawback of taking into account only the signal strength from the serving and target cells before the handover. However, we believe that the expected Quality of Experience (QoE) resulting from the choice of target cell should be the driving principle of the handover decision. In particular, we propose two models: one based on a multi-layer many-to-one LSTM architecture, and one based on a multi-layer LSTM AutoEncoder (AE) in conjunction with a MultiLayer Perceptron (MLP) neural network. We show that, using experience extracted from data, we can improve the number of users finalizing the download by 18% and reduce the time to download with respect to a standard event-based handover benchmark scheme. Moreover, for the sake of generalization, we test the LSTM AutoEncoder in a different scenario, where it maintains its performance improvements with a slight degradation compared to the original scenario.

Cellular Traffic Prediction and Classification: a comparative evaluation of LSTM and ARIMA

Jun 03, 2019

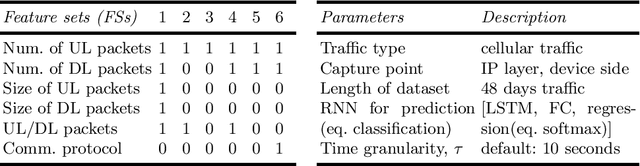

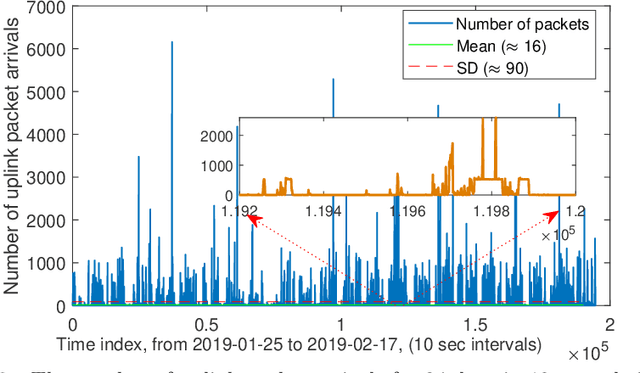

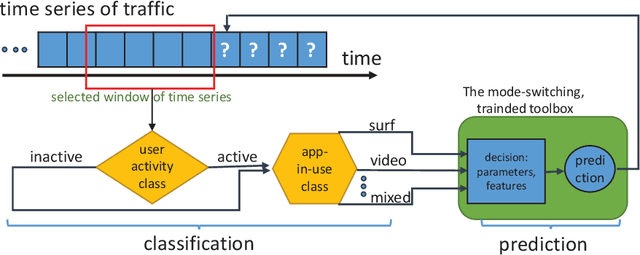

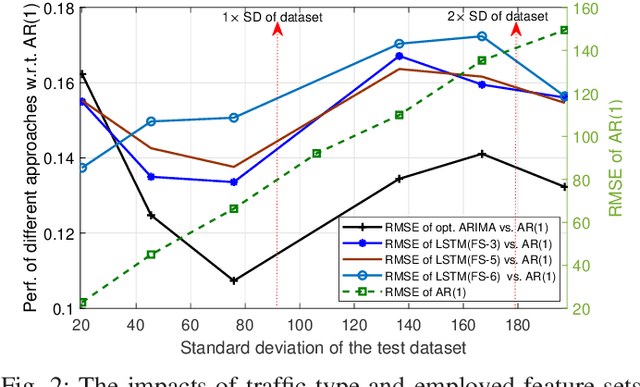

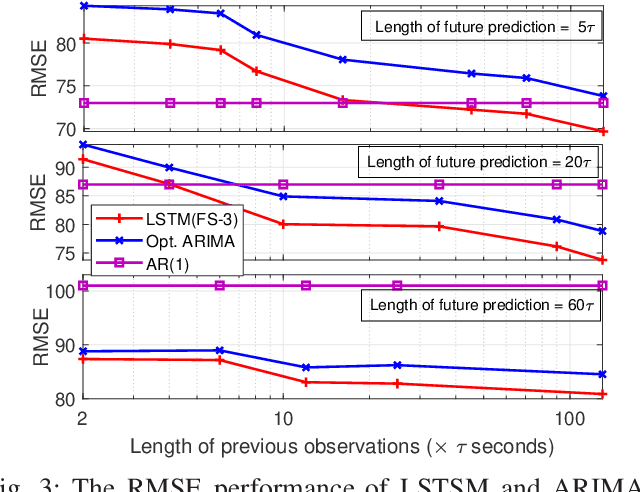

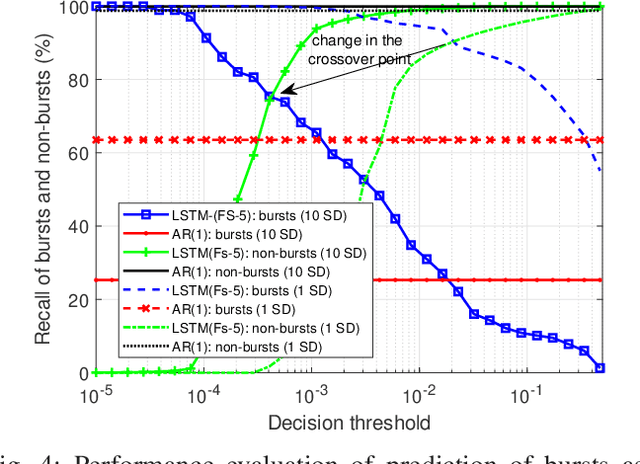

Abstract: Prediction of user traffic in cellular networks has attracted profound attention for improving resource utilization. In this paper, we study the problem of network traffic prediction and classification by employing standard machine learning and statistical learning time series prediction methods, including long short-term memory (LSTM) and autoregressive integrated moving average (ARIMA), respectively. We present an extensive experimental evaluation of the designed tools over a real network traffic dataset. Within this analysis, we explore the impact of different parameters on the effectiveness of the predictions. We further extend our analysis to the problem of network traffic classification and prediction of traffic bursts. The results, on the one hand, demonstrate superior performance of LSTM over ARIMA in general, especially when the training time series is long enough and is augmented by a wisely selected set of features. On the other hand, the results shed light on the circumstances in which ARIMA performs close to optimal with lower complexity.

User Traffic Prediction for Proactive Resource Management: Learning-Powered Approaches

May 09, 2019

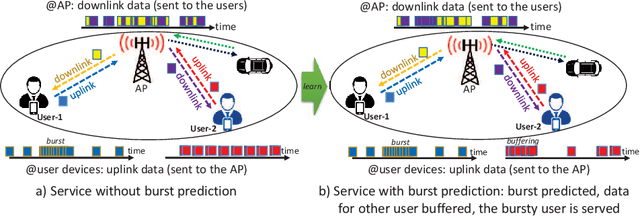

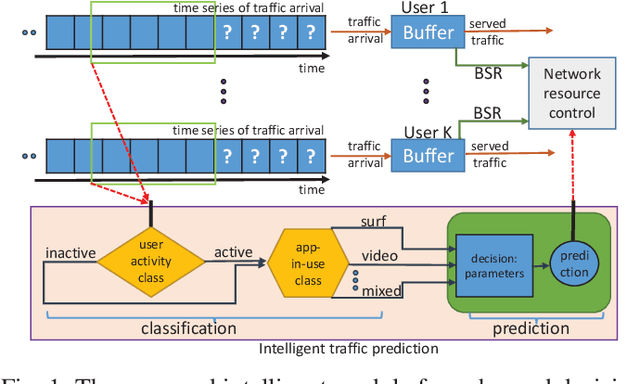

Abstract: Traffic prediction plays a vital role in efficient planning and usage of network resources in wireless networks. While traffic prediction in wired networks is an established field, there is a lack of research on the analysis of traffic in cellular networks, especially in a content-blind manner at the user level. Here, we shed light on this problem by designing traffic prediction tools that employ statistical, rule-based, or deep machine learning methods. First, we present an extensive experimental evaluation of the designed tools over a real traffic dataset. Within this analysis, we investigate the impact of different parameters, such as the length of prediction, the feature set used in the analysis, and the granularity of data, on the accuracy of prediction. Second, given the coupling observed between the behavior of traffic and its generating application, we extend our analysis to the blind classification of the applications generating the traffic, based on the statistics of traffic arrival/departure. The results demonstrate the presence of a threshold number of previous observations, beyond which deep machine learning outperforms linear statistical learning, and below which statistical learning outperforms deep learning approaches. Further analysis reveals a strong coupling between this threshold, the length of future prediction, and the feature set in use. Finally, through a case study, we show how the experienced delay can be decreased by traffic arrival prediction.
