Alireza Sadeghi

Interpretable graph-based models on multimodal biomedical data integration: A technical review and benchmarking

May 03, 2025

Abstract: Integrating heterogeneous biomedical data, including imaging, omics, and clinical records, supports accurate diagnosis and personalised care. Graph-based models fuse such non-Euclidean data by capturing spatial and relational structure, yet clinical uptake requires regulator-ready interpretability. We present the first technical survey of interpretable graph-based models for multimodal biomedical data, covering 26 studies published between Jan 2019 and Sep 2024. Most target disease classification, notably cancer, and rely on static graphs built from simple similarity measures. Graph-native explainers are rare; post-hoc methods adapted from non-graph domains, such as gradient saliency and SHAP, predominate. We group existing approaches into four interpretability families, outline trends such as graph-in-graph hierarchies, knowledge-graph edges, and dynamic topology learning, and perform a practical benchmark. Using an Alzheimer's disease (AD) cohort, we compare Sensitivity Analysis, Gradient Saliency, SHAP and Graph Masking. SHAP and Sensitivity Analysis recover the broadest set of known AD pathways and Gene Ontology terms, whereas Gradient Saliency and Graph Masking surface complementary metabolic and transport signatures. Permutation tests show that all four beat random gene sets, but with distinct trade-offs: SHAP and Graph Masking offer deeper biology at higher compute cost, while Gradient Saliency and Sensitivity Analysis are quicker though coarser. We also provide a step-by-step flowchart covering graph construction, explainer choice and resource budgeting to help researchers balance transparency and performance. This review synthesises the state of interpretable graph learning for multimodal medicine, benchmarks leading techniques, and charts future directions, from advanced XAI tools to under-studied diseases, serving as a concise reference for method developers and translational scientists.

Deep conv-attention model for diagnosing left bundle branch block from 12-lead electrocardiograms

Dec 07, 2022

Abstract: Cardiac resynchronization therapy (CRT) is a treatment used to compensate for irregularities in the heartbeat. Studies have shown that this treatment is more effective in heart patients with left bundle branch block (LBBB) arrhythmia. Therefore, identifying this arrhythmia is an important initial step in deciding whether to use CRT. However, traditional methods for detecting LBBB on electrocardiograms (ECGs) are often error-prone, so there is a need for an accurate method to diagnose this arrhythmia from ECG data. Machine learning has helped to improve system performance, and deep learning, a newer subfield of machine learning, offers more power to analyze data and increase system accuracy. This study presents a deep learning model for detecting LBBB arrhythmia from 12-lead ECG data. The model consists of 1D dilated convolutional layers. An attention mechanism is also used to identify important input features and classify inputs more accurately. The proposed model is trained and validated on a database containing 10344 12-lead ECG samples using 10-fold cross-validation. The final results obtained by the model on the 12-lead ECG data are as follows. Accuracy: 98.80 ± 0.08%, specificity: 99.33 ± 0.11%, F1 score: 73.97 ± 1.8%, and area under the receiver operating characteristic curve (AUC): 0.875 ± 0.0192. These results indicate that the proposed model can effectively diagnose LBBB and, if used in medical centers, could greatly help with diagnosing this arrhythmia and starting treatment early.
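The abstract reports very high accuracy and specificity alongside a noticeably lower F1 score, a pattern that typically appears when the positive class is rare. The following is a minimal sketch of how these metrics are computed from a binary confusion matrix. The function name `binary_metrics` and the counts in the usage note are hypothetical illustrations, not the study's code or data.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute standard binary-classification metrics from confusion counts.

    tp/fp/fn/tn are true positives, false positives, false negatives and
    true negatives. This sketch assumes every denominator is non-zero.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total          # fraction of all samples classified correctly
    specificity = tn / (tn + fp)          # true-negative rate
    precision = tp / (tp + fp)            # fraction of predicted positives that are correct
    recall = tp / (tp + fn)               # sensitivity / true-positive rate
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "specificity": specificity,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical, heavily imbalanced counts (28 positives out of 1000 samples).
example = binary_metrics(tp=20, fp=6, fn=8, tn=966)
```

With these made-up counts, accuracy and specificity are dominated by the large number of true negatives, while F1 depends only on the few positive cases, so a handful of misses or false alarms lowers it substantially.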

Robust, Deep, and Reinforcement Learning for Management of Communication and Power Networks

Feb 08, 2022

Abstract: This thesis develops data-driven machine learning algorithms for managing and optimizing next-generation, highly complex cyberphysical systems, which desperately need ground-breaking control, monitoring, and decision-making schemes that can guarantee robustness, scalability, and situational awareness. The thesis first develops principled methods to make generic machine learning models robust against distributional uncertainties and adversarial data. The focus is on parametric models, where training data are used to learn the model parameters. The developed framework is of particular interest when training and testing data are drawn from "slightly" different distributions. We then introduce distributionally robust learning frameworks that minimize the worst-case expected loss over a prescribed ambiguity set of training distributions, quantified via the Wasserstein distance. Later, we build on this robust framework to design robust methods for semi-supervised learning over graphs. The second part of this thesis aspires to fully unleash the potential of next-generation wired and wireless networks, where we design "smart" network entities using (deep) reinforcement learning approaches. Finally, this thesis enhances power system operation and control. Our contribution concerns sustainable distribution grids with high penetration of renewable sources and demand response programs. To account for unanticipated and rapidly changing renewable generation and load consumption scenarios, we delegate reactive power compensation both to utility-owned control devices (e.g., capacitor banks) and to smart inverters of distributed generation units with cyber-capabilities.

Distributionally Robust Semi-Supervised Learning Over Graphs

Oct 20, 2021

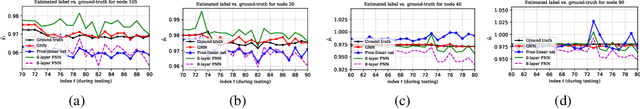

Abstract: Semi-supervised learning (SSL) over graph-structured data emerges in many network science applications. To efficiently manage learning over graphs, variants of graph neural networks (GNNs) have been developed recently. By succinctly encoding local graph structures and features of nodes, state-of-the-art GNNs can scale linearly with the size of the graph. Despite their success in practice, most existing methods are unable to handle graphs with uncertain nodal attributes. Specifically, whenever mismatches between training and testing data distributions exist, these models fail in practice. Challenges also arise due to distributional uncertainties associated with data acquired by noisy measurements. In this context, a distributionally robust learning framework is developed, where the objective is to train models that exhibit quantifiable robustness against perturbations. The data distribution is considered unknown, but lies within a Wasserstein ball centered around the empirical data distribution. A robust model is obtained by minimizing the worst expected loss over this ball. However, solving the emerging functional optimization problem is challenging, if not impossible. Advocating a strong duality condition, we develop a principled method that renders the problem tractable and efficiently solvable. Experiments assess the performance of the proposed method.

Heavy Ball Momentum for Conditional Gradient

Oct 08, 2021

Abstract: Conditional gradient algorithms, also known as Frank-Wolfe (FW) algorithms, have well-documented merits in machine learning and signal processing applications. Unlike projection-based methods, momentum cannot improve the convergence rate of FW, in general. This limitation motivates the present work, which deals with heavy ball momentum and its impact on FW. Specifically, it is established that heavy ball offers a unifying perspective on the primal-dual (PD) convergence, and enjoys a tighter per-iteration PD error rate for multiple choices of step sizes, where the PD error can serve as the stopping criterion in practice. In addition, it is asserted that restart, a scheme typically employed jointly with Nesterov's momentum, can further tighten this PD error bound. Numerical results demonstrate the usefulness of heavy ball momentum in FW iterations.
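To make the idea concrete, here is a minimal sketch of a Frank-Wolfe iteration in which the linear minimization step uses a running average of past gradients rather than the current gradient alone, which is one common way to introduce heavy-ball-style momentum. The feasible set (the probability simplex), the step-size rule `2 / (k + 2)` for both the gradient average and the iterate update, and all function names are assumptions made for illustration; the abstract does not specify them.

```python
def heavy_ball_frank_wolfe(grad, x0, num_iters=5000):
    """Frank-Wolfe over the probability simplex with averaged gradients.

    grad: callable returning the gradient (a list) at a point x.
    x0:   feasible starting point on the simplex.
    Assumed step sizes: delta_k = eta_k = 2 / (k + 2).
    """
    x = list(x0)
    g = [0.0] * len(x)  # momentum term: weighted average of past gradients
    for k in range(num_iters):
        delta = eta = 2.0 / (k + 2)
        grad_x = grad(x)
        # Heavy-ball-style averaging of gradients (delta = 1 at k = 0).
        g = [(1 - delta) * gi + delta * di for gi, di in zip(g, grad_x)]
        # Linear minimization over the simplex: pick the vertex e_j
        # whose coordinate of g is smallest.
        j = min(range(len(g)), key=g.__getitem__)
        # Convex combination keeps the iterate feasible without projection.
        x = [(1 - eta) * xi for xi in x]
        x[j] += eta
    return x


def make_quadratic(b):
    """Return objective and gradient of f(x) = 0.5 * ||x - b||^2."""
    def objective(x):
        return 0.5 * sum((xi - bi) ** 2 for xi, bi in zip(x, b))

    def grad(x):
        return [xi - bi for xi, bi in zip(x, b)]

    return objective, grad


# Toy problem: the target b lies inside the simplex, so the minimum is 0.
objective, grad = make_quadratic([0.2, 0.5, 0.3])
x_final = heavy_ball_frank_wolfe(grad, [1.0, 0.0, 0.0])
```

Each iterate is a convex combination of simplex vertices, so feasibility holds by construction. On this toy quadratic the iterates approach the target point, though the example says nothing about the primal-dual error rates analyzed in the paper.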

Learning while Respecting Privacy and Robustness to Distributional Uncertainties and Adversarial Data

Jul 07, 2020

Abstract: Data used to train machine learning models can be adversarial, i.e., maliciously constructed by adversaries to fool the model. Challenges also arise from privacy, confidentiality, or legal constraints when data are geographically gathered and stored across multiple learners, some of which may even hold an "anonymized" or unreliable dataset. In this context, the distributionally robust optimization framework is considered for training a parametric model, in both centralized and federated learning settings. The objective is to endow the trained model with robustness against adversarially manipulated input data, or against distributional uncertainties such as mismatches between training and testing data distributions, or among datasets stored at different workers. To this aim, the data distribution is assumed unknown but to lie within a Wasserstein ball centered around the empirical data distribution. This robust learning task entails an infinite-dimensional optimization problem, which is challenging. Leveraging a strong duality result, a surrogate is obtained, for which three stochastic primal-dual algorithms are developed: i) stochastic proximal gradient descent with an $\epsilon$-accurate oracle, which invokes an oracle to solve the convex sub-problems; ii) stochastic proximal gradient descent-ascent, which approximates the solution of the convex sub-problems via a single gradient ascent step; and iii) a distributionally robust federated learning algorithm, which solves the sub-problems locally at the different workers where data are stored. Compared to empirical risk minimization and federated learning methods, the proposed algorithms offer robustness with little computational overhead. Numerical tests using image datasets showcase the merits of the proposed algorithms under several existing adversarial attacks and distributional uncertainties.
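For orientation, the robust training problem described above is commonly written as follows. The notation is an assumption introduced here, not taken from the abstract: $\theta$ denotes the model parameters, $\ell$ the loss, $\hat{P}_N$ the empirical distribution of the $N$ training samples $z_n$, $c$ the transport cost defining the Wasserstein distance $W_c$, and $\rho$ the radius of the ball.

$$
\min_{\theta} \; \sup_{P \,:\, W_c(P, \hat{P}_N) \le \rho} \; \mathbb{E}_{z \sim P}\big[\ell(\theta; z)\big]
$$

Under mild regularity conditions, the standard strong duality result for Wasserstein-constrained problems replaces the supremum over distributions with a scalar dual variable $\gamma$ and one maximization per training sample:

$$
\sup_{P \,:\, W_c(P, \hat{P}_N) \le \rho} \mathbb{E}_{z \sim P}\big[\ell(\theta; z)\big]
= \inf_{\gamma \ge 0} \Big\{ \gamma \rho + \frac{1}{N} \sum_{n=1}^{N} \sup_{z} \big[ \ell(\theta; z) - \gamma\, c(z, z_n) \big] \Big\}
$$

The per-sample inner maximizations are presumably the sub-problems that the three algorithms solve with an oracle, approximate with a single gradient ascent step, or solve locally at each worker; the exact surrogate used in the paper may differ from this generic form.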

Reinforcement Learning for Caching with Space-Time Popularity Dynamics

May 19, 2020

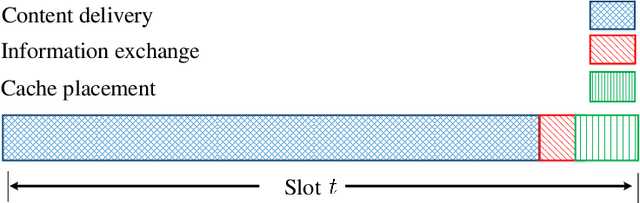

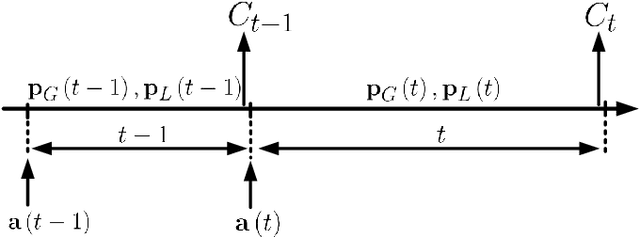

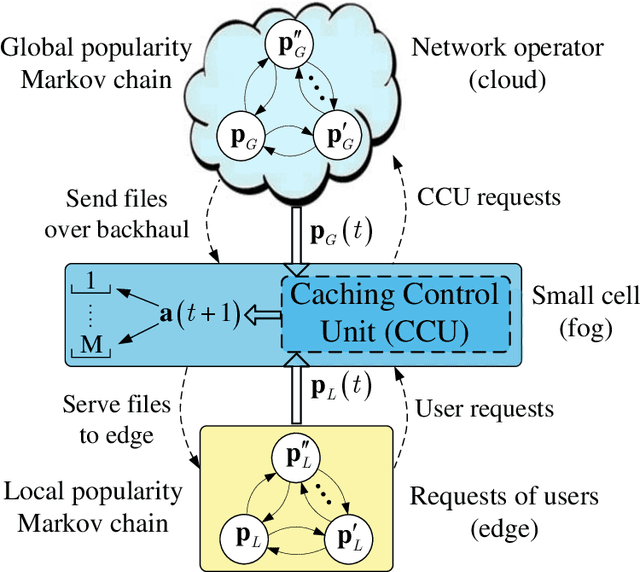

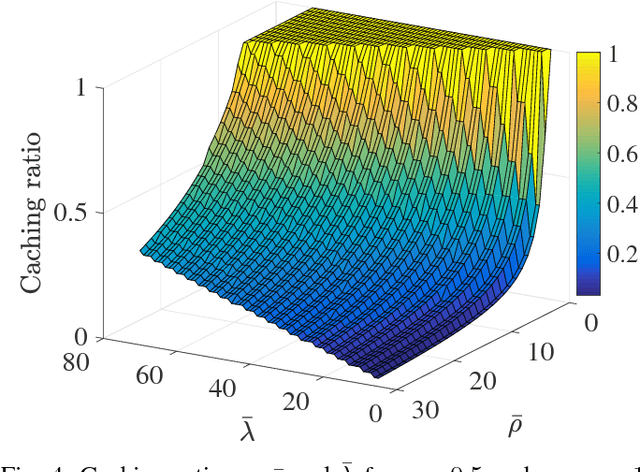

Abstract: With the tremendous growth of data traffic over wired and wireless networks along with the increasing number of rich-media applications, caching is envisioned to play a critical role in next-generation networks. To intelligently prefetch and store contents, a cache node should be able to learn what and when to cache. Considering the geographical and temporal content popularity dynamics, the limited available storage at cache nodes, as well as the interactive influence of caching decisions in networked caching settings, developing effective caching policies is practically challenging. In response to these challenges, this chapter presents a versatile reinforcement learning based approach for near-optimal caching policy design, in both single-node and network caching settings under dynamic space-time popularities. The herein presented policies are complemented using a set of numerical tests, which showcase the merits of the presented approach relative to several standard caching policies.

A Statistical Learning Approach to Reactive Power Control in Distribution Systems

Oct 25, 2019

Abstract: Pronounced variability due to the growth of renewable energy sources, flexible loads, and distributed generation is challenging residential distribution systems. This context motivates fast, efficient, and robust reactive power control. Real-time optimal reactive power control is possible in theory by solving a non-convex optimization problem based on the exact model of distribution flow. However, the lack of high-precision instrumentation and reliable communications, as well as the heavy computational burden of non-convex optimization solvers, render computing and implementing the optimal control challenging in practice. Taking a statistical learning viewpoint, the input-output relationship between each grid state and the corresponding optimal reactive power control is parameterized in the present work by a deep neural network, whose unknown weights are learned offline by minimizing the power loss over a number of historical and simulated training pairs. In the inference phase, one just feeds the real-time state vector into the learned neural network to obtain the "optimal" reactive power control with only several matrix-vector multiplications. The merits of this novel statistical learning approach are computational efficiency as well as robustness to random input perturbations. Numerical tests on a 47-bus distribution network using real data corroborate these practical merits.
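The claim that inference needs only a few matrix-vector multiplications can be illustrated with a minimal forward pass of a fully connected network. This is a sketch under stated assumptions: the ReLU activations, the layer layout, and the tiny weights in the usage note are hypothetical and do not come from the paper.

```python
def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in vector]


def predict_control(layers, state):
    """Map a grid-state vector to a control vector with a feed-forward pass.

    layers: list of (weights, bias) pairs; ReLU is applied after every
    layer except the last (an assumed architecture).
    """
    hidden = list(state)
    for index, (weights, bias) in enumerate(layers):
        # One matrix-vector product plus a bias per layer.
        hidden = [h + b for h, b in zip(matvec(weights, hidden), bias)]
        if index < len(layers) - 1:
            hidden = relu(hidden)
    return hidden


# Hypothetical two-layer network: 2 inputs -> 2 hidden units -> 1 output.
toy_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.1]),
]
toy_output = predict_control(toy_layers, [2.0, 3.0])
```

The cost of one prediction is one matrix-vector product per layer, which is why a trained network can be evaluated in real time even when the underlying optimization problem is expensive to solve.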

Adaptive Caching via Deep Reinforcement Learning

Feb 27, 2019

Abstract: Caching is envisioned to play a critical role in next-generation content delivery infrastructure, cellular networks, and Internet architectures. By smartly storing the most popular contents at the storage-enabled network entities during off-peak demand instances, caching can benefit both the network infrastructure and end users during on-peak periods. In this context, distributing the limited storage capacity across network entities calls for decentralized caching schemes. Many practical caching systems involve a parent caching node connected to multiple leaf nodes to serve user file requests. To model the two-way interactive influence between caching decisions at the parent and leaf nodes, a reinforcement learning framework is put forth. To handle the large continuous state space, a scalable deep reinforcement learning approach is pursued. The novel approach relies on a deep Q-network to learn the Q-function, and thus the optimal caching policy, in an online fashion. Reinforcing the parent node with the ability to learn and adapt to unknown policies of leaf nodes, as well as to the spatio-temporal dynamic evolution of file requests, results in remarkable caching performance, as corroborated through numerical tests.

Reinforcement Learning for Adaptive Caching with Dynamic Storage Pricing

Dec 21, 2018

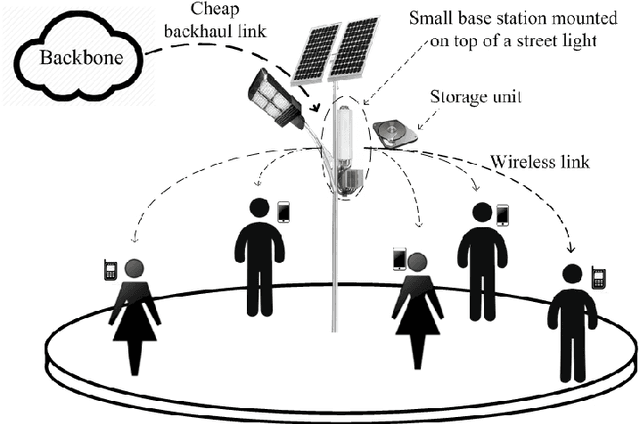

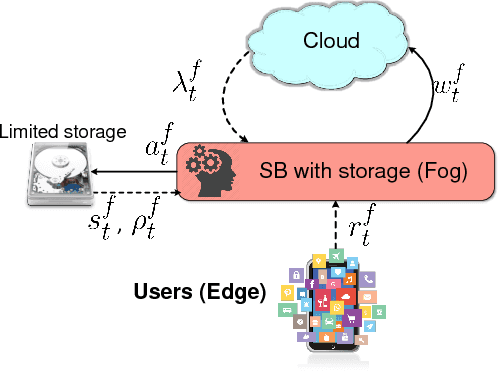

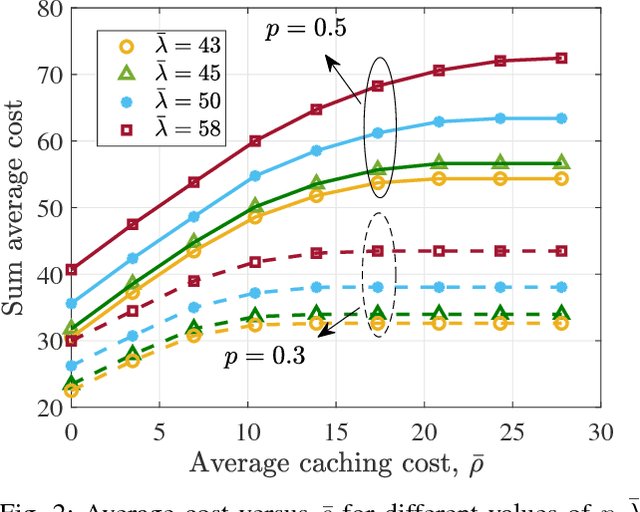

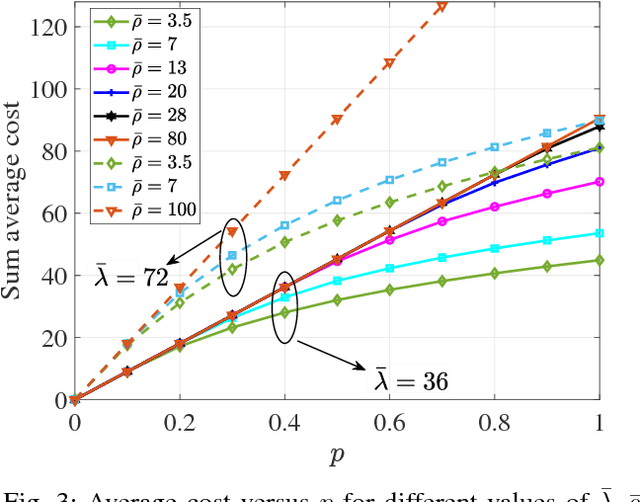

Abstract: Small base stations (SBs) of fifth-generation (5G) cellular networks are envisioned to have storage devices to locally serve requests for reusable and popular contents by *caching* them at the edge of the network, close to the end users. The ultimate goal is to shift part of the predictable load on the back-haul links from on-peak to off-peak periods, contributing to better overall network performance and service experience. To equip the SBs with efficient *fetch-cache* decision-making schemes operating in dynamic settings, this paper introduces simple but flexible generic time-varying fetching and caching costs, which are then used to formulate a constrained minimization of the aggregate cost across files and time. Since caching decisions per time slot influence the content availability in future slots, the novel formulation for optimal fetch-cache decisions falls into the class of dynamic programming. Under this generic formulation, first by considering stationary distributions for the costs and file popularities, an efficient reinforcement learning-based solver known as the value iteration algorithm can be used to solve the emerging optimization problem. Later, it is shown that practical limitations on cache capacity can be handled using a particular instance of the generic dynamic pricing formulation. Under this setting, to provide a light-weight online solver for the corresponding optimization, the well-known reinforcement learning algorithm, $Q$-learning, is employed to find optimal fetch-cache decisions. Numerical tests corroborating the merits of the proposed approach wrap up the paper.
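As a rough illustration of how tabular $Q$-learning can learn fetch-cache decisions online, the sketch below uses a deliberately tiny model: a single file, a state that records only whether the file is currently cached, and an action that decides whether to hold it in the cache for the next slot. The cost values, request probability, discount factor, learning rate, and exploration rate are all hypothetical, and the paper's formulation (multiple files, time-varying costs, dynamic pricing) is considerably richer.

```python
import random


def q_learning_cache(p_request=0.8, fetch_cost=10.0, cache_cost=1.0,
                     gamma=0.9, alpha=0.05, epsilon=0.3,
                     steps=50_000, seed=0):
    """Tabular Q-learning for a single-file fetch-cache toy problem.

    State:  0 = file not cached, 1 = file cached.
    Action: 0 = do not cache for the next slot, 1 = cache for the next slot.
    Costs are minimized, so the greedy action is the argmin of Q.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # q[state][action]
    state = 0
    for _ in range(steps):
        # Epsilon-greedy exploration around the current cost estimates.
        if rng.random() < epsilon:
            action = rng.randrange(2)
        else:
            action = 0 if q[state][0] <= q[state][1] else 1
        # A request arrives at random; a miss forces a costly fetch.
        requested = rng.random() < p_request
        cost = cache_cost * action
        if requested and state == 0:
            cost += fetch_cost
        next_state = action  # caching now makes the file available next slot
        # Q-learning update toward the one-step cost plus discounted best future cost.
        target = cost + gamma * min(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
    policy = [0 if q[s][0] <= q[s][1] else 1 for s in (0, 1)]
    return q, policy


q_table, greedy_policy = q_learning_cache()
```

With these assumed numbers, the expected discounted fetch cost avoided by caching exceeds the per-slot caching cost, so the learned greedy policy should be to cache in both states. Raising `cache_cost` well above the expected miss cost would flip that decision, which loosely mirrors the role of pricing in the abstract.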
