Alireza Furutanpey

Multi-dimensional Autoscaling of Processing Services: A Comparison of Agent-based Methods

Jun 12, 2025

Abstract: Edge computing breaks with traditional autoscaling due to strict resource constraints, thus motivating more flexible scaling behaviors that use multiple elasticity dimensions. This work introduces an agent-based autoscaling framework that dynamically adjusts both hardware resources and internal service configurations to maximize requirements fulfillment in constrained environments. We compare four types of scaling agents: Active Inference, Deep Q Network, Analysis of Structural Knowledge, and Deep Active Inference, using two real-world processing services running in parallel: YOLOv8 for visual recognition and OpenCV for QR code detection. Results show all agents achieve acceptable SLO performance with varying convergence patterns. While the Deep Q Network benefits from pre-training, the structural analysis converges quickly, and the deep active inference agent combines theoretical foundations with practical scalability advantages. Our findings provide evidence for the viability of multi-dimensional agent-based autoscaling for edge environments and encourage future work in this research direction.

Leveraging Neural Graph Compilers in Machine Learning Research for Edge-Cloud Systems

Apr 28, 2025

Abstract: This work presents a comprehensive evaluation of neural network graph compilers across heterogeneous hardware platforms, addressing the critical gap between theoretical optimization techniques and practical deployment scenarios. We demonstrate how vendor-specific optimizations can invalidate relative performance comparisons between architectural archetypes, with performance advantages sometimes completely reversing after compilation. Our systematic analysis reveals that graph compilers exhibit performance patterns highly dependent on both neural architecture and batch sizes. Through fine-grained block-level experimentation, we establish that vendor-specific compilers can leverage repeated patterns in simple architectures, yielding disproportionate throughput gains as model depth increases. We introduce novel metrics to quantify a compiler's ability to mitigate performance friction as batch size increases. Our methodology bridges the gap between academic research and practical deployment by incorporating compiler effects throughout the research process, providing actionable insights for practitioners navigating complex optimization landscapes across heterogeneous hardware environments.

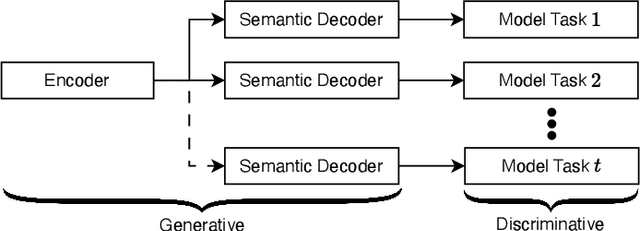

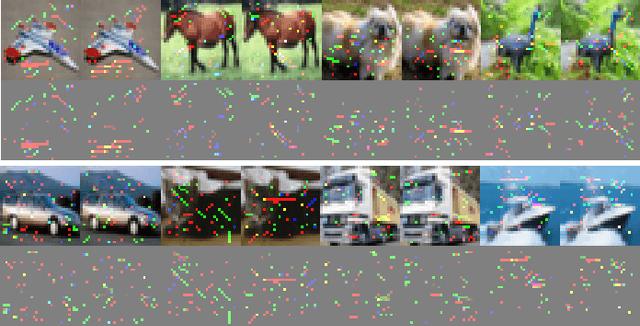

Adversarial Robustness of Bottleneck Injected Deep Neural Networks for Task-Oriented Communication

Dec 13, 2024

Abstract: This paper investigates the adversarial robustness of Deep Neural Networks (DNNs) using Information Bottleneck (IB) objectives for task-oriented communication systems. We empirically demonstrate that while IB-based approaches provide baseline resilience against attacks targeting downstream tasks, the reliance on generative models for task-oriented communication introduces new vulnerabilities. Through extensive experiments on several datasets, we analyze how bottleneck depth and task complexity influence adversarial robustness. Our key findings show that Shallow Variational Bottleneck Injection (SVBI) provides less adversarial robustness than Deep Variational Information Bottleneck (DVIB) approaches, with the gap widening for more complex tasks. Additionally, we reveal that IB-based objectives exhibit stronger robustness against attacks focusing on salient pixels with high intensity than against those perturbing many pixels with lower intensity. Lastly, we demonstrate that task-oriented communication systems that rely on generative models to extract and recover salient information have an increased attack surface. The results highlight important security considerations for next-generation communication systems that leverage neural networks for goal-oriented compression.

Reactive Orchestration for Hierarchical Federated Learning Under a Communication Cost Budget

Dec 04, 2024

Abstract: Deploying a Hierarchical Federated Learning (HFL) pipeline across the computing continuum (CC) requires careful organization of participants into a hierarchical structure with intermediate aggregation nodes between FL clients and the global FL server. This is challenging to achieve due to (i) cost constraints, (ii) varying data distributions, and (iii) the volatile operating environment of the CC. In response to these challenges, we present a framework for the adaptive orchestration of HFL pipelines, designed to be reactive to client churn and infrastructure-level events, while balancing communication cost and ML model accuracy. Our mechanisms identify and react to events that cause HFL reconfiguration actions at runtime, building on multi-level monitoring information (model accuracy, resource availability, resource cost). Moreover, our framework introduces a generic methodology for estimating reconfiguration costs to continuously re-evaluate the quality of adaptation actions, while being extensible to optimize for various HFL performance criteria. By extending the Kubernetes ecosystem, our framework demonstrates the ability to react promptly and effectively to changes in the operating environment, making the best of the available communication cost budget and effectively balancing costs and ML performance at runtime.

Adaptive Active Inference Agents for Heterogeneous and Lifelong Federated Learning

Oct 09, 2024

Abstract: Handling heterogeneity and unpredictability are two core problems in pervasive computing. The challenge is to seamlessly integrate devices with varying computational resources in a dynamic environment to form a cohesive system that can fulfill the needs of all participants. Existing work on systems that adapt to changing requirements typically focuses on optimizing individual variables or low-level Service Level Objectives (SLOs), such as constraining the usage of specific resources. While low-level control mechanisms permit fine-grained control over a system, they introduce considerable complexity, particularly in dynamic environments. To this end, we propose drawing from Active Inference (AIF), a neuroscientific framework for designing adaptive agents. Specifically, we introduce a conceptual agent for heterogeneous pervasive systems that permits setting global system constraints as high-level SLOs. Instead of manually setting low-level SLOs, the system finds an equilibrium that can adapt to environmental changes. We demonstrate the viability of AIF agents with an extensive experiment design, using heterogeneous and lifelong federated learning as an application scenario. We conduct our experiments on a physical testbed of devices with different resource types and vendor specifications. The results provide convincing evidence that an AIF agent can adapt a system to environmental changes. In particular, the AIF agent can balance competing SLOs in resource-heterogeneous environments to ensure a fulfillment rate of up to 98%.

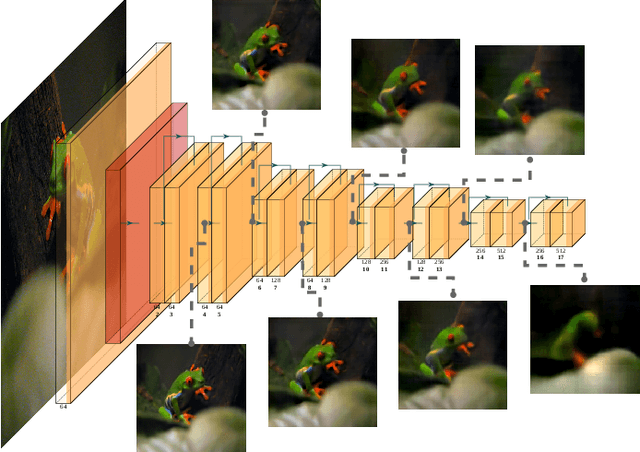

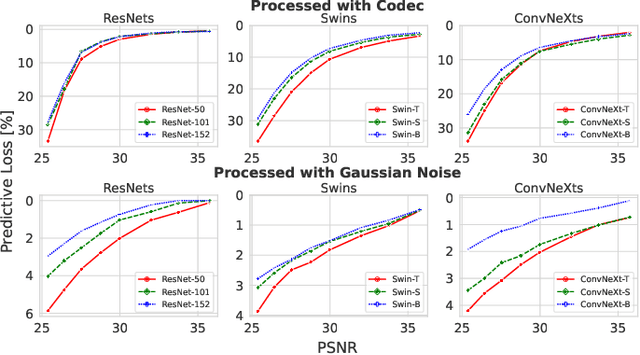

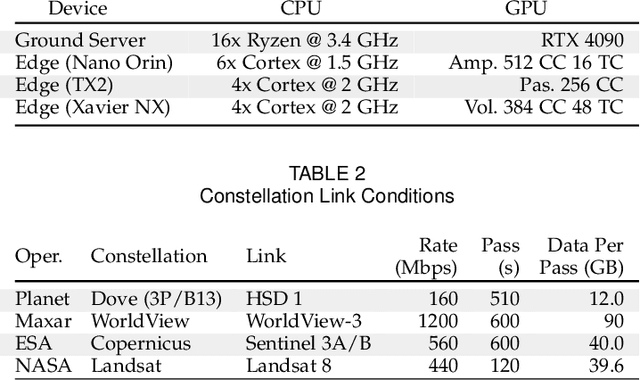

FOOL: Addressing the Downlink Bottleneck in Satellite Computing with Neural Feature Compression

Mar 25, 2024

Abstract: Nanosatellite constellations equipped with sensors capturing large geographic regions provide unprecedented opportunities for Earth observation. As constellation sizes increase, network contention poses a downlink bottleneck. Orbital Edge Computing (OEC) leverages limited onboard compute resources to reduce transfer costs by processing the raw captures at the source. However, current solutions have limited practicability due to reliance on crude filtering methods or over-prioritizing particular downstream tasks. This work presents FOOL, an OEC-native and task-agnostic feature compression method that preserves prediction performance. FOOL partitions high-resolution satellite imagery to maximize throughput. Further, it embeds context and leverages inter-tile dependencies to lower transfer costs with negligible overhead. While FOOL is a feature compressor, it can recover images with competitive scores on perceptual quality measures at lower bitrates. We extensively evaluate transfer cost reduction by including the peculiarity of intermittently available network connections in low earth orbit. Lastly, we test the feasibility of our system for standardized nanosatellite form factors. We demonstrate that FOOL permits downlinking over 100x the data volume without relying on prior information on the downstream tasks.

Architectural Vision for Quantum Computing in the Edge-Cloud Continuum

May 09, 2023

Abstract: Quantum processing units (QPUs) are currently exclusively available from cloud vendors. However, with recent advancements, hosting QPUs will soon be possible everywhere. Existing work has yet to draw from research in edge computing to explore systems exploiting mobile QPUs, or how hybrid applications can benefit from distributed heterogeneous resources. Hence, this work presents an architecture for Quantum Computing in the edge-cloud continuum. We discuss the necessity, challenges, and solution approaches for extending existing work on classical edge computing to integrate QPUs. We describe how warm-starting allows defining workflows that exploit the hierarchical resources spread across the continuum. Then, we introduce a distributed inference engine with hybrid classical-quantum neural networks (QNNs) to aid system designers in accommodating applications with complex requirements that incur the highest degree of heterogeneity. We propose solutions focusing on classical layer partitioning and quantum circuit cutting to demonstrate the potential of utilizing classical and quantum computation across the continuum. To evaluate the importance and feasibility of our vision, we provide a proof of concept that exemplifies how extending a classical partition method to integrate quantum circuits can improve the solution quality. Specifically, we implement a split neural network with optional hybrid QNN predictors. Our results show that extending classical methods with QNNs is viable and promising for future work.

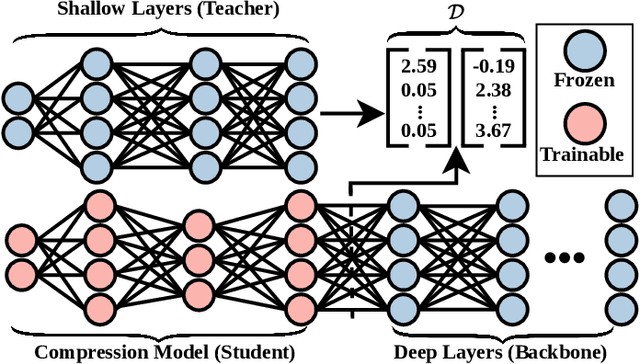

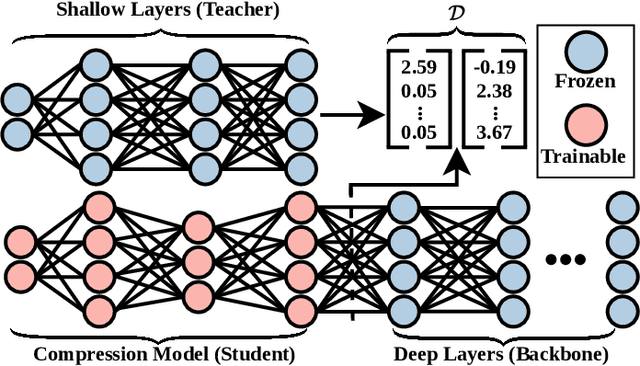

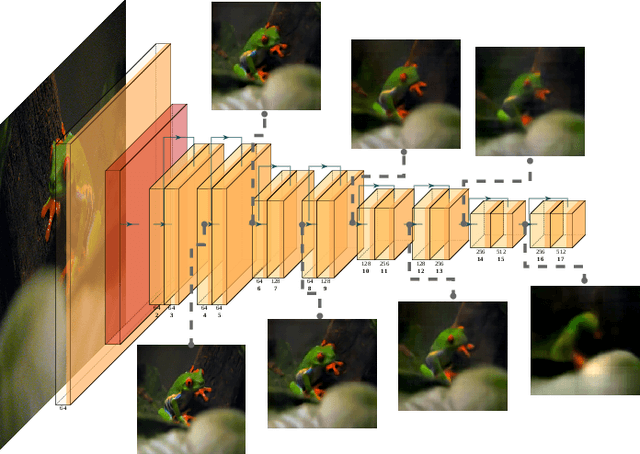

FrankenSplit: Saliency Guided Neural Feature Compression with Shallow Variational Bottleneck Injection

Feb 21, 2023

Abstract: Lightweight neural networks trade predictive strength for fast inference. Conversely, large deep neural networks have low prediction error but incur prolonged inference times and high energy consumption on resource-constrained devices. This trade-off is unacceptable for latency-sensitive and performance-critical applications. Offloading inference tasks to a server is unsatisfactory due to the inevitable network congestion caused by high-dimensional data competing for limited bandwidth and leaving valuable client-side resources idle. This work demonstrates why existing methods cannot adequately address the need for high-performance inference in mobile edge computing. Then, we show how to overcome current limitations by introducing a novel training method to reduce bandwidth consumption in Machine-to-Machine communication and a generalizable design heuristic for resource-conscious compression models. We extensively evaluate our proposed method against a wide range of baselines for latency and compressive strength in an environment with asymmetric resource distribution between edge devices and servers. Despite our edge-oriented lightweight encoder, our method achieves considerably better compression rates.
