Ali Sabri

MEDUSA: Multi-scale Encoder-Decoder Self-Attention Deep Neural Network Architecture for Medical Image Analysis

Oct 12, 2021

Abstract: Medical image analysis continues to hold interesting challenges given the subtle characteristics of certain diseases and the significant overlap in appearance between diseases. In this work, we explore the concept of self-attention for tackling such subtleties in and between diseases. To this end, we introduce MEDUSA, a multi-scale encoder-decoder self-attention mechanism tailored for medical image analysis. While self-attention deep convolutional neural network architectures in existing literature center around the notion of multiple isolated lightweight attention mechanisms with limited individual capacities being incorporated at different points in the network architecture, MEDUSA takes a significant departure from this notion by possessing a single, unified self-attention mechanism with significantly higher capacity with multiple attention heads feeding into different scales in the network architecture. To the best of the authors' knowledge, this is the first "single body, multi-scale heads" realization of self-attention and enables explicit global context amongst selective attention at different levels of representational abstractions while still enabling differing local attention context at individual levels of abstractions. With MEDUSA, we obtain state-of-the-art performance on multiple challenging medical image analysis benchmarks including COVIDx, RSNA RICORD, and RSNA Pneumonia Challenge when compared to previous work. Our MEDUSA model is publicly available.

COVID-Net CXR-2: An Enhanced Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-ray Images

May 14, 2021

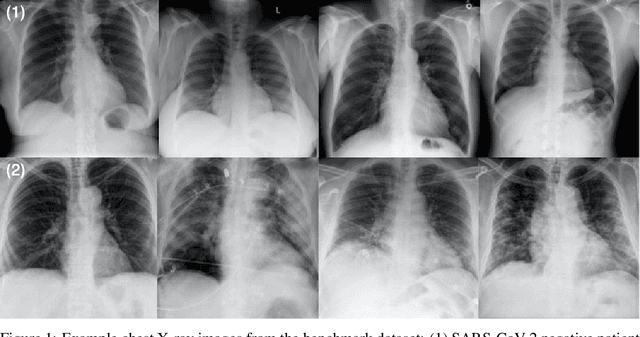

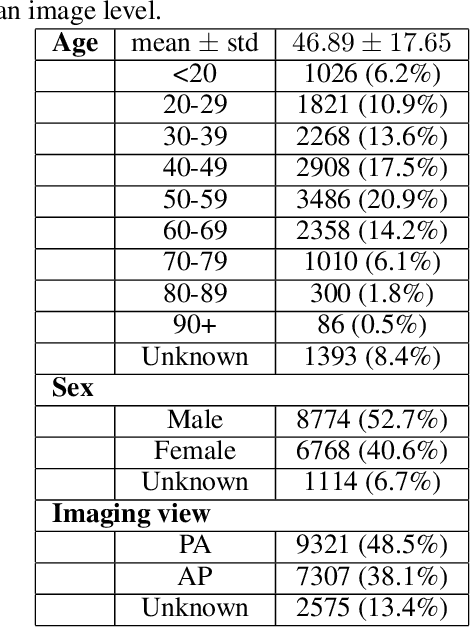

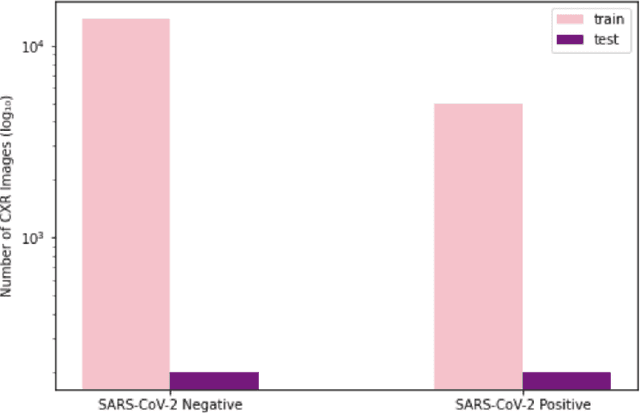

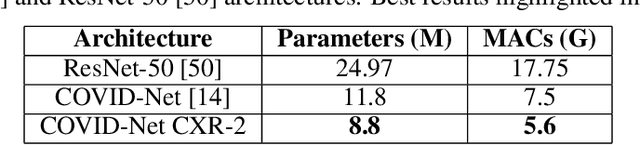

Abstract: As the COVID-19 pandemic continues to devastate globally, the use of chest X-ray (CXR) imaging as a complementary screening strategy to RT-PCR testing continues to grow given its routine clinical use for respiratory complaints. As part of the COVID-Net open source initiative, we introduce COVID-Net CXR-2, an enhanced deep convolutional neural network design for COVID-19 detection from CXR images built using a greater quantity and diversity of patients than the original COVID-Net. To facilitate this, we also introduce a new benchmark dataset composed of 19,203 CXR images from a multinational cohort of 16,656 patients from at least 51 countries, making it the largest, most diverse COVID-19 CXR dataset in open access form. The COVID-Net CXR-2 network achieves sensitivity and positive predictive value of 95.5%/97.0%, respectively, and was audited in a transparent and responsible manner. Explainability-driven performance validation was used during auditing to gain deeper insights into its decision-making behaviour and to ensure clinically relevant factors are leveraged for improving trust in its usage. Radiologist validation was also conducted, where select cases were reviewed and reported on by two board-certified radiologists with over 10 and 19 years of experience, respectively, and showed that the critical factors leveraged by COVID-Net CXR-2 are consistent with radiologist interpretations. While not a production-ready solution, we hope the open-source, open-access release of COVID-Net CXR-2 and the respective CXR benchmark dataset will encourage researchers, clinical scientists, and citizen scientists to accelerate advancements and innovations in the fight against the pandemic.

COVID-Net CXR-S: Deep Convolutional Neural Network for Severity Assessment of COVID-19 Cases from Chest X-ray Images

May 01, 2021

Abstract: The world is still struggling to control and contain the spread of the COVID-19 pandemic caused by the SARS-CoV-2 virus. The medical conditions associated with SARS-CoV-2 infections have resulted in a surge in the number of patients at clinics and hospitals, leading to a significantly increased strain on healthcare resources. As such, an important part of managing and handling patients with SARS-CoV-2 infections within the clinical workflow is severity assessment, which is often conducted with the use of chest x-ray (CXR) images. In this work, we introduce COVID-Net CXR-S, a convolutional neural network for predicting the airspace severity of a SARS-CoV-2 positive patient based on a CXR image of the patient's chest. More specifically, we leveraged transfer learning to transfer representational knowledge gained from over 16,000 CXR images from a multinational cohort of over 15,000 patient cases into a custom network architecture for severity assessment. Experimental results with a multi-national patient cohort curated by the Radiological Society of North America (RSNA) RICORD initiative showed that the proposed COVID-Net CXR-S has potential to be a powerful tool for computer-aided severity assessment of CXR images of COVID-19 positive patients. Furthermore, radiologist validation on select cases by two board-certified radiologists with over 10 and 19 years of experience, respectively, showed consistency between radiologist interpretation and critical factors leveraged by COVID-Net CXR-S for severity assessment. While not a production-ready solution, the ultimate goal for the open source release of COVID-Net CXR-S is to act as a catalyst for clinical scientists, machine learning researchers, as well as citizen scientists to develop innovative new clinical decision support solutions for helping clinicians around the world manage the continuing pandemic.

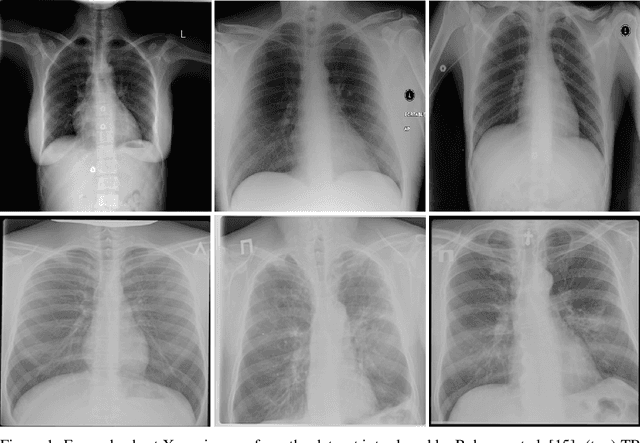

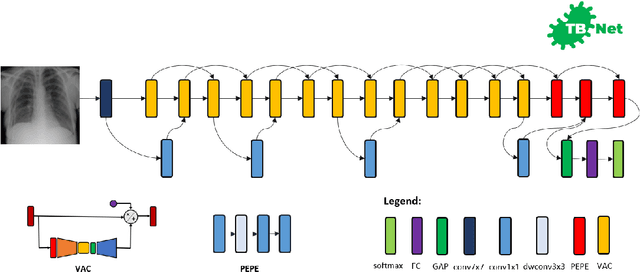

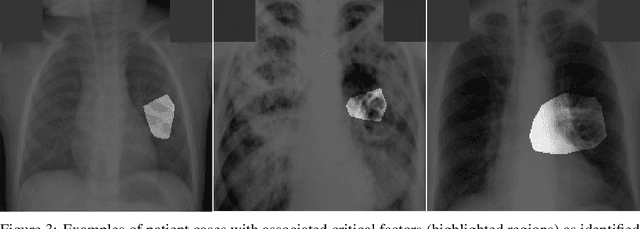

TB-Net: A Tailored, Self-Attention Deep Convolutional Neural Network Design for Detection of Tuberculosis Cases from Chest X-ray Images

Apr 14, 2021

Abstract: Tuberculosis (TB) remains a global health problem, and is the leading cause of death from an infectious disease. A crucial step in the treatment of tuberculosis is screening high-risk populations and the early detection of the disease, with chest x-ray (CXR) imaging being the most widely used imaging modality. As such, there has been significant recent interest in artificial intelligence-based TB screening solutions for use in resource-limited scenarios where there is a lack of trained healthcare workers with expertise in CXR interpretation. Motivated by this pressing need and the recent recommendation by the World Health Organization (WHO) for the use of computer-aided diagnosis of TB, we introduce TB-Net, a self-attention deep convolutional neural network tailored for TB case screening. More specifically, we leveraged machine-driven design exploration to build a highly customized deep neural network architecture with attention condensers. We conducted an explainability-driven performance validation process to validate TB-Net's decision-making behaviour. Experiments on CXR data from a multi-national patient cohort showed that the proposed TB-Net is able to achieve accuracy/sensitivity/specificity of 99.86%/100.0%/99.71%. Radiologist validation was conducted on select cases by two board-certified radiologists with over 10 and 19 years of experience, respectively, and showed consistency between radiologist interpretation and critical factors leveraged by TB-Net for TB case detection for the case where radiologists identified anomalies. While not a production-ready solution, we hope that the open-source release of TB-Net as part of the COVID-Net initiative will support researchers, clinicians, and citizen data scientists in advancing this field in the fight against this global public health crisis.

COVID-Net CT-2: Enhanced Deep Neural Networks for Detection of COVID-19 from Chest CT Images Through Bigger, More Diverse Learning

Jan 26, 2021

Abstract: The COVID-19 pandemic continues to rage on, with multiple waves causing substantial harm to health and economies around the world. Motivated by the use of CT imaging at clinical institutes around the world as an effective complementary screening method to RT-PCR testing, we introduced COVID-Net CT, a neural network tailored for detection of COVID-19 cases from chest CT images as part of the open source COVID-Net initiative. However, one potential limiting factor is restricted quantity and diversity given the single nation patient cohort used. In this study, we introduce COVID-Net CT-2, enhanced deep neural networks for COVID-19 detection from chest CT images trained on the largest quantity and diversity of multinational patient cases in research literature. We introduce two new CT benchmark datasets, the largest comprising a multinational cohort of 4,501 patients from at least 15 countries. We leverage explainability to investigate the decision-making behaviour of COVID-Net CT-2, with the results for select cases reviewed and reported on by two board-certified radiologists with over 10 and 30 years of experience, respectively. The COVID-Net CT-2 neural networks achieved accuracy, COVID-19 sensitivity, PPV, specificity, and NPV of 98.1%/96.2%/96.7%/99%/98.8% and 97.9%/95.7%/96.4%/98.9%/98.7%, respectively. Explainability-driven performance validation shows that COVID-Net CT-2's decision-making behaviour is consistent with radiologist interpretation by leveraging correct, clinically relevant critical factors. The results are promising and suggest the strong potential of deep neural networks as an effective tool for computer-aided COVID-19 assessment. While not a production-ready solution, we hope the open-source, open-access release of COVID-Net CT-2 and benchmark datasets will continue to enable researchers, clinicians, and citizen data scientists alike to build upon them.
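
The abstracts above report accuracy, sensitivity, positive predictive value (PPV), specificity, and negative predictive value (NPV). As a reminder of how these five figures relate to one another, here is a minimal sketch using the standard textbook definitions for a binary (disease-positive vs. disease-negative) confusion matrix. The names `BinaryCounts` and `screening_metrics` and the counts in the usage lines are hypothetical and chosen only for illustration; they are not taken from any of the papers, and the papers' own evaluation code may differ (for instance, in how multi-class results are reduced to per-class figures).

```python
from dataclasses import dataclass


@dataclass
class BinaryCounts:
    """Confusion-matrix counts for one positive class (hypothetical container)."""
    tp: int  # positive cases predicted positive
    fp: int  # negative cases predicted positive
    tn: int  # negative cases predicted negative
    fn: int  # positive cases predicted negative


def screening_metrics(c: BinaryCounts) -> dict:
    """Return the five standard screening metrics as fractions in [0, 1]."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": c.tp / (c.tp + c.fn),  # recall on positive cases
        "ppv": c.tp / (c.tp + c.fp),          # precision of positive calls
        "specificity": c.tn / (c.tn + c.fp),  # recall on negative cases
        "npv": c.tn / (c.tn + c.fn),          # precision of negative calls
    }


# Illustrative counts only (not results from any paper).
example = BinaryCounts(tp=90, fp=5, tn=95, fn=10)
metrics = screening_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Sensitivity and specificity are conditioned on the true label, whereas PPV and NPV are conditioned on the prediction, so the latter two shift with the prevalence of positive cases in the test set.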
