Siddharth Surana

COVID-Net MLSys: Designing COVID-Net for the Clinical Workflow

Sep 14, 2021

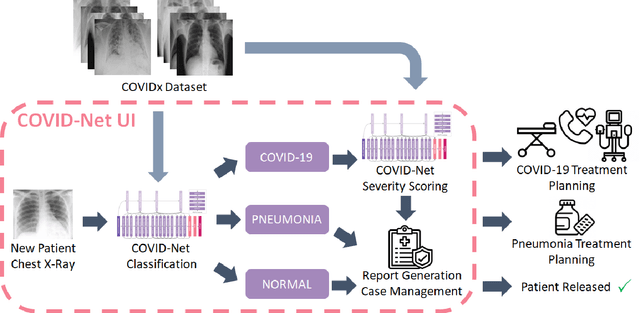

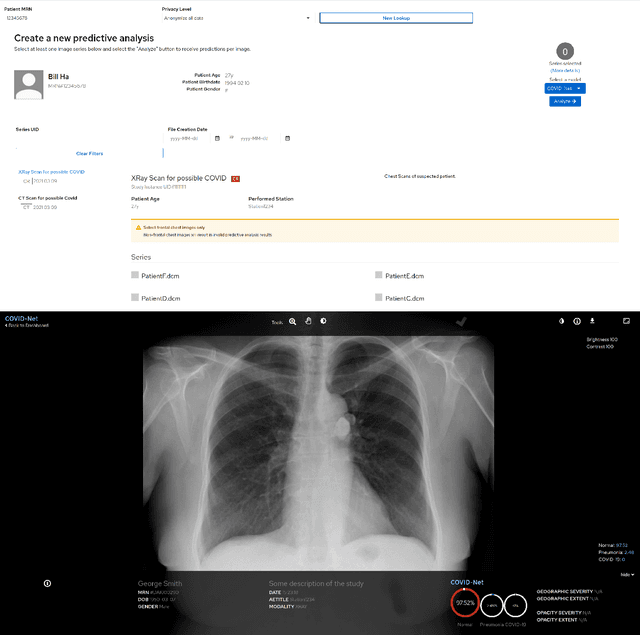

Abstract:As the COVID-19 pandemic continues to devastate globally, one promising field of research is machine learning-driven computer vision to streamline various parts of the COVID-19 clinical workflow. These machine learning methods are typically stand-alone models designed without consideration for the integration necessary for real-world application workflows. In this study, we take a machine learning and systems (MLSys) perspective to design a system for COVID-19 patient screening with the clinical workflow in mind. The COVID-Net system is comprised of the continuously evolving COVIDx dataset, COVID-Net deep neural network for COVID-19 patient detection, and COVID-Net S deep neural networks for disease severity scoring for COVID-19 positive patient cases. The deep neural networks within the COVID-Net system possess state-of-the-art performance, and are designed to be integrated within a user interface (UI) for clinical decision support with automatic report generation to assist clinicians in their treatment decisions.

COVID-Net CXR-2: An Enhanced Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-ray Images

May 14, 2021

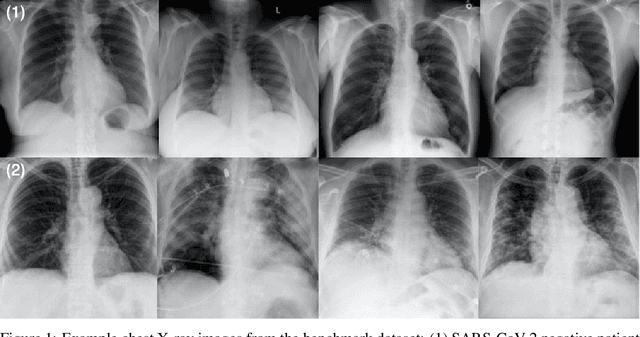

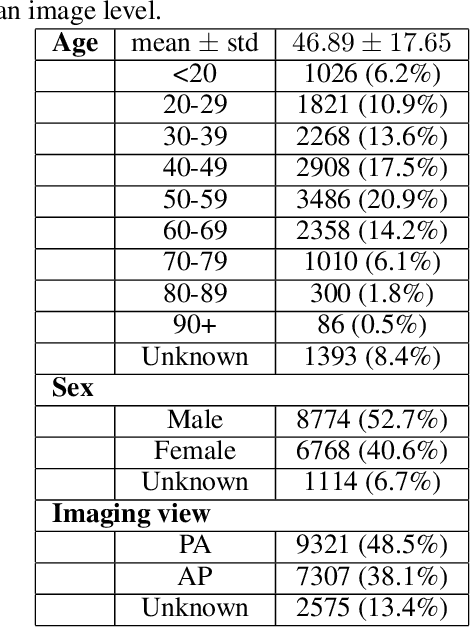

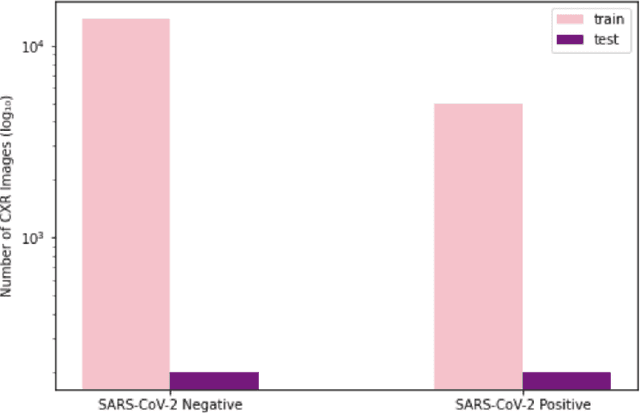

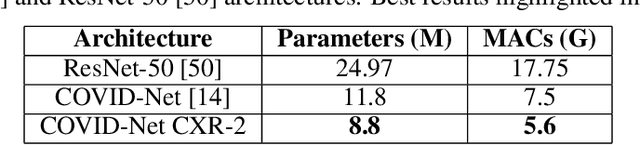

Abstract:As the COVID-19 pandemic continues to devastate globally, the use of chest X-ray (CXR) imaging as a complimentary screening strategy to RT-PCR testing continues to grow given its routine clinical use for respiratory complaint. As part of the COVID-Net open source initiative, we introduce COVID-Net CXR-2, an enhanced deep convolutional neural network design for COVID-19 detection from CXR images built using a greater quantity and diversity of patients than the original COVID-Net. To facilitate this, we also introduce a new benchmark dataset composed of 19,203 CXR images from a multinational cohort of 16,656 patients from at least 51 countries, making it the largest, most diverse COVID-19 CXR dataset in open access form. The COVID-Net CXR-2 network achieves sensitivity and positive predictive value of 95.5%/97.0%, respectively, and was audited in a transparent and responsible manner. Explainability-driven performance validation was used during auditing to gain deeper insights in its decision-making behaviour and to ensure clinically relevant factors are leveraged for improving trust in its usage. Radiologist validation was also conducted, where select cases were reviewed and reported on by two board-certified radiologists with over 10 and 19 years of experience, respectively, and showed that the critical factors leveraged by COVID-Net CXR-2 are consistent with radiologist interpretations. While not a production-ready solution, we hope the open-source, open-access release of COVID-Net CXR-2 and the respective CXR benchmark dataset will encourage researchers, clinical scientists, and citizen scientists to accelerate advancements and innovations in the fight against the pandemic.

TinySpeech: Attention Condensers for Deep Speech Recognition Neural Networks on Edge Devices

Aug 23, 2020

Abstract:Advances in deep learning have led to state-of-the-art performance across a multitude of speech recognition tasks. Nevertheless, the widespread deployment of deep neural networks for on-device speech recognition remains a challenge, particularly in edge scenarios where the memory and computing resources are highly constrained (e.g., low-power embedded devices) or where the memory and computing budget dedicated to speech recognition is low (e.g., mobile devices performing numerous tasks besides speech recognition). In this study, we introduce the concept of attention condensers for building low-footprint, highly-efficient deep neural networks for on-device speech recognition on the edge. More specifically, an attention condenser is a self-attention mechanism that learns and produces a condensed embedding characterizing joint local and cross-channel activation relationships, and performs selective attention accordingly. To illustrate its efficacy, we introduce TinySpeech, low-precision deep neural networks comprising largely of attention condensers tailored for on-device speech recognition using a machine-driven design exploration strategy. Experimental results on the Google Speech Commands benchmark dataset for limited-vocabulary speech recognition showed that TinySpeech networks achieved significantly lower architectural complexity (as much as $207\times$ fewer parameters) and lower computational complexity (as much as $21\times$ fewer multiply-add operations) when compared to previous deep neural networks in research literature. These results not only demonstrate the efficacy of attention condensers for building highly efficient deep neural networks for on-device speech recognition, but also illuminate its potential for accelerating deep learning on the edge and empowering a wide range of TinyML applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge