James Ren Hou Lee

TB-Net: A Tailored, Self-Attention Deep Convolutional Neural Network Design for Detection of Tuberculosis Cases from Chest X-ray Images

Apr 14, 2021

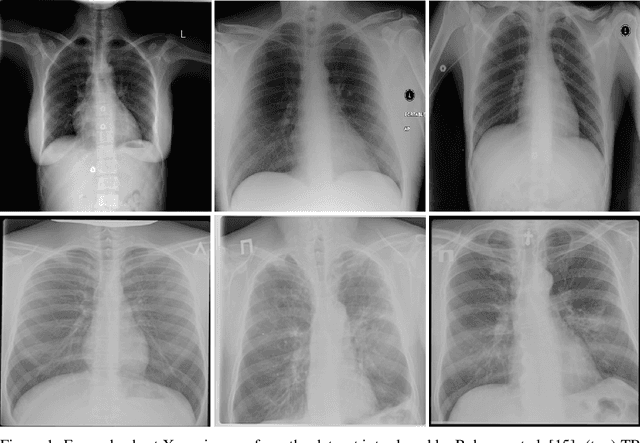

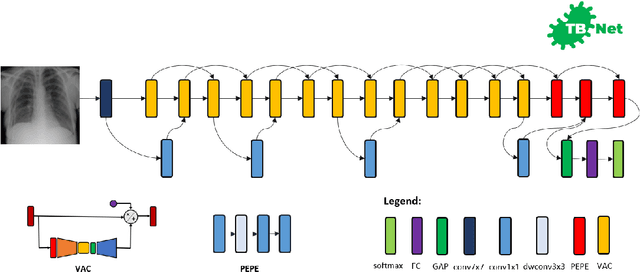

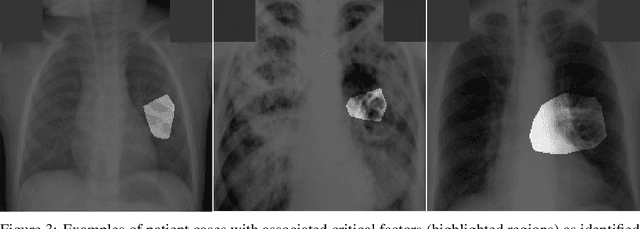

Abstract: Tuberculosis (TB) remains a global health problem, and is the leading cause of death from an infectious disease. A crucial step in the treatment of tuberculosis is screening high-risk populations and detecting the disease early, with chest x-ray (CXR) imaging being the most widely used imaging modality. As such, there has been significant recent interest in artificial intelligence-based TB screening solutions for use in resource-limited scenarios where there is a lack of trained healthcare workers with expertise in CXR interpretation. Motivated by this pressing need and the recent recommendation by the World Health Organization (WHO) for the use of computer-aided diagnosis of TB, we introduce TB-Net, a self-attention deep convolutional neural network tailored for TB case screening. More specifically, we leveraged machine-driven design exploration to build a highly customized deep neural network architecture with attention condensers. We conducted an explainability-driven performance validation process to validate TB-Net's decision-making behaviour. Experiments on CXR data from a multi-national patient cohort showed that the proposed TB-Net is able to achieve accuracy/sensitivity/specificity of 99.86%/100.0%/99.71%. Radiologist validation was conducted on select cases by two board-certified radiologists with over 10 and 19 years of experience, respectively, and showed consistency between radiologist interpretation and the critical factors leveraged by TB-Net for TB case detection in the cases where radiologists identified anomalies. While not a production-ready solution, we hope that the open-source release of TB-Net as part of the COVID-Net initiative will support researchers, clinicians, and citizen data scientists in advancing this field in the fight against this global public health crisis.
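For readers less familiar with the three reported screening metrics, the following minimal sketch shows how accuracy, sensitivity, and specificity are derived from binary confusion counts. The function name `screening_metrics` and the counts used below are hypothetical: the abstract does not state the test split, and the counts were chosen only so that the output rounds to the reported percentages.

```python
def screening_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return accuracy, sensitivity, and specificity from confusion counts.

    Assumes at least one positive case (tp + fn > 0) and one negative
    case (tn + fp > 0); otherwise the ratios are undefined.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,      # all correct / all cases
        "sensitivity": tp / (tp + fn),      # TB cases correctly flagged
        "specificity": tn / (tn + fp),      # normal cases correctly cleared
    }


# Hypothetical counts (NOT taken from the paper): a balanced set of
# 348 TB and 348 normal images with a single false positive.
m = screening_metrics(tp=348, fp=1, tn=347, fn=0)
percentages = {name: round(100 * value, 2) for name, value in m.items()}
print(percentages)
```

With these illustrative counts, one false positive and no false negatives is enough to give 100% sensitivity alongside a specificity just below 100%, which is the pattern seen in the reported figures.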

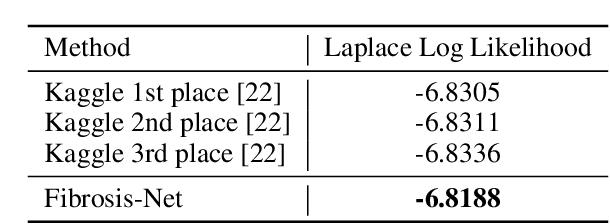

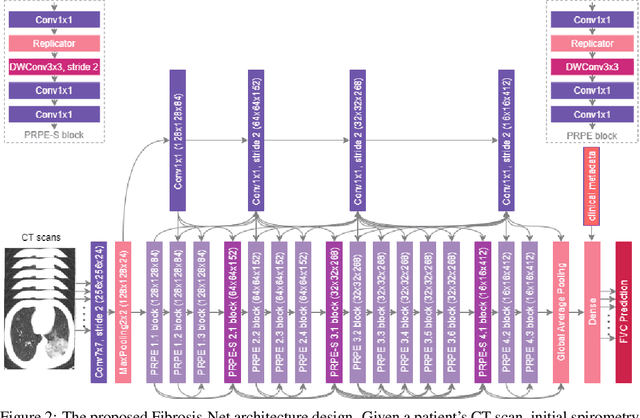

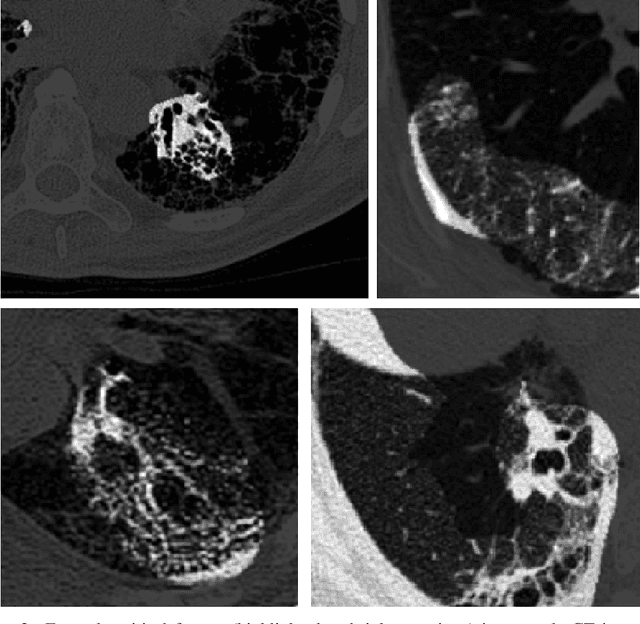

Fibrosis-Net: A Tailored Deep Convolutional Neural Network Design for Prediction of Pulmonary Fibrosis Progression from Chest CT Images

Mar 06, 2021

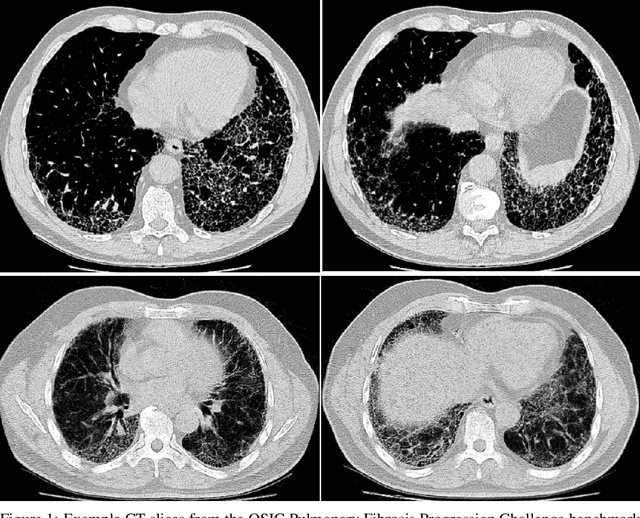

Abstract: Pulmonary fibrosis is a devastating chronic lung disease that causes irreparable lung tissue scarring and damage, results in progressive loss of lung capacity, and has no known cure. A critical step in the treatment and management of pulmonary fibrosis is the assessment of lung function decline, with computed tomography (CT) imaging being a particularly effective method for determining the extent of lung damage caused by pulmonary fibrosis. Motivated by this, we introduce Fibrosis-Net, a deep convolutional neural network design tailored for the prediction of pulmonary fibrosis progression from chest CT images. More specifically, machine-driven design exploration was leveraged to determine a strong architectural design for CT lung analysis, upon which we build a customized network design tailored for predicting forced vital capacity (FVC) based on a patient's CT scan, initial spirometry measurement, and clinical metadata. Finally, we leverage an explainability-driven performance validation strategy to study the decision-making behaviour of Fibrosis-Net so as to verify that predictions are based on relevant visual indicators in CT images. Experiments using the OSIC Pulmonary Fibrosis Progression Challenge benchmark dataset showed that the proposed Fibrosis-Net is able to achieve a significantly higher modified Laplace Log Likelihood score than the winning solutions on the challenge leaderboard. Furthermore, explainability-driven performance validation demonstrated that the proposed Fibrosis-Net exhibits correct decision-making behaviour by leveraging clinically relevant visual indicators in CT images when making predictions on pulmonary fibrosis progression. While Fibrosis-Net is not yet a production-ready clinical assessment solution, we hope that releasing the model in an open source manner will encourage researchers, clinicians, and citizen data scientists alike to leverage and build upon it.
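The modified Laplace Log Likelihood mentioned above scores both an FVC prediction and the model's stated uncertainty. The sketch below follows the metric as defined for the OSIC challenge evaluation, to the best of our understanding: the constants 70 and 1000 (in mL) come from that definition, not from the abstract, and the function name and example values are hypothetical.

```python
import math


def modified_laplace_log_likelihood(fvc_true: float,
                                    fvc_pred: float,
                                    sigma: float) -> float:
    """Score one FVC prediction (mL) with its predicted uncertainty sigma (mL).

    Scores are negative; values closer to zero are better.
    """
    # Uncertainty below 70 mL is not rewarded (assumed challenge constant).
    sigma_clipped = max(sigma, 70.0)
    # Errors above 1000 mL are not penalised further (assumed challenge constant).
    delta = min(abs(fvc_true - fvc_pred), 1000.0)
    return (-math.sqrt(2.0) * delta / sigma_clipped
            - math.log(math.sqrt(2.0) * sigma_clipped))


# Hypothetical example: true FVC 2500 mL, predicted 2400 mL, sigma 100 mL.
score = modified_laplace_log_likelihood(2500.0, 2400.0, 100.0)
print(round(score, 3))
```

Because the score depends on both the error and the stated confidence, a model is penalised for being overconfident about a poor prediction as well as for being needlessly uncertain about a good one.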

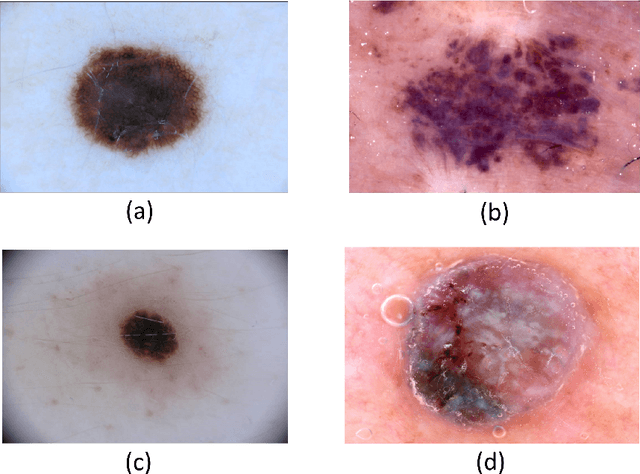

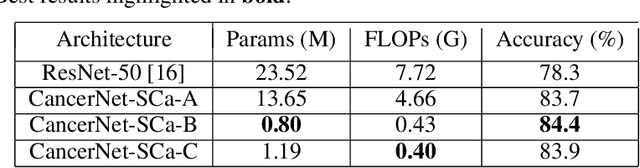

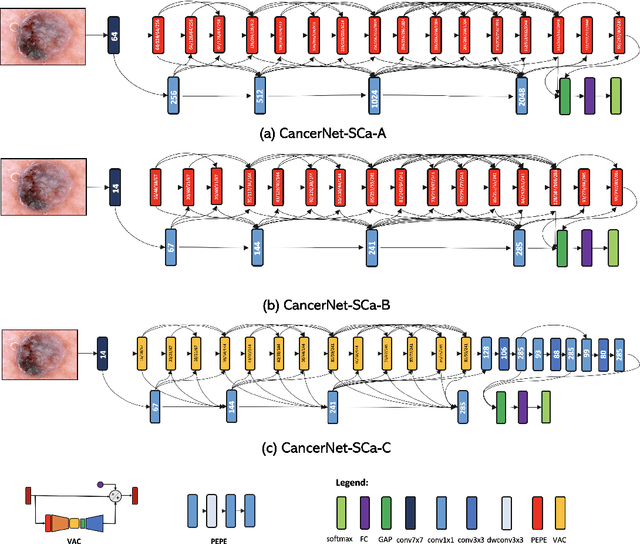

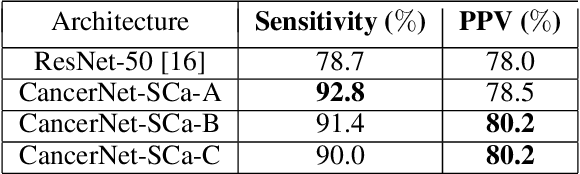

CancerNet-SCa: Tailored Deep Neural Network Designs for Detection of Skin Cancer from Dermoscopy Images

Nov 21, 2020

Abstract: Skin cancer continues to be the most frequently diagnosed form of cancer in the U.S., with not only significant effects on health and well-being but also significant economic costs associated with treatment. A crucial step in the treatment and management of skin cancer is effective skin cancer detection, since prognosis is strong when the disease is treated at an early stage, with one of the key screening approaches being dermoscopy examination. Motivated by the advances of deep learning and inspired by the open source initiatives in the research community, in this study we introduce CancerNet-SCa, a suite of deep neural network designs tailored for the detection of skin cancer from dermoscopy images that is open source and available to the general public as part of the Cancer-Net initiative. To the best of the authors' knowledge, CancerNet-SCa comprises the first machine-designed deep neural network architecture designs tailored specifically for skin cancer detection, one of which possesses a self-attention architecture design with attention condensers. Furthermore, we investigate and audit the behaviour of CancerNet-SCa in a responsible and transparent manner via explainability-driven model auditing. While CancerNet-SCa is not a production-ready screening solution, the hope is that the release of CancerNet-SCa in open source, open access form will encourage researchers, clinicians, and citizen data scientists alike to leverage and build upon them.

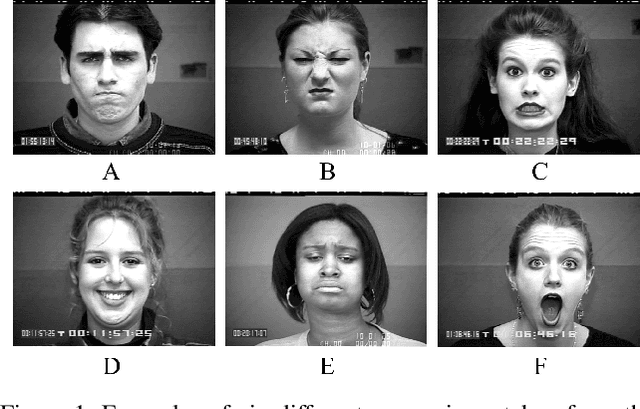

AEGIS: A real-time multimodal augmented reality computer vision based system to assist facial expression recognition for individuals with autism spectrum disorder

Oct 22, 2020

Abstract: The ability to interpret social cues comes naturally to most people, but some people living with Autism Spectrum Disorder (ASD) experience a deficiency in this area. This paper presents the development of a multimodal augmented reality (AR) system which combines the use of computer vision and deep convolutional neural networks (CNN) in order to assist individuals with the detection and interpretation of facial expressions in social settings. The proposed system, which we call AEGIS (Augmented-reality Expression Guided Interpretation System), is an assistive technology deployable on a variety of user devices including tablets, smartphones, video conference systems, or smartglasses, showcasing its extreme flexibility and wide range of use cases, to allow integration into daily life with ease. Given a streaming video camera source, each real-world frame is passed into AEGIS, processed for facial bounding boxes, and then fed into our novel deep convolutional time windowed neural network (TimeConvNet). We leverage both spatial and temporal information in order to provide an accurate expression prediction, which is then converted into its corresponding visualization and drawn on top of the original video frame. The system runs in real-time, requires minimal setup, and is simple to use. With the use of AEGIS, we can assist individuals living with ASD to learn to better identify expressions and thus improve their social experiences.

EmotionNet Nano: An Efficient Deep Convolutional Neural Network Design for Real-time Facial Expression Recognition

Jun 29, 2020

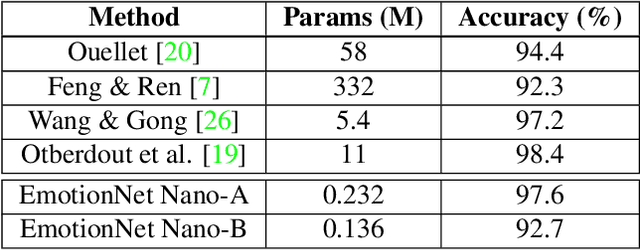

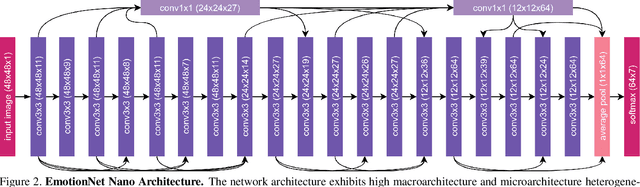

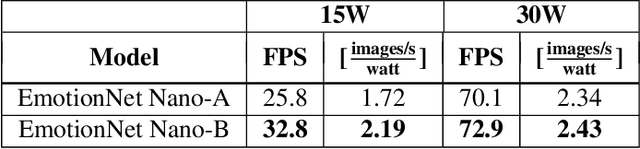

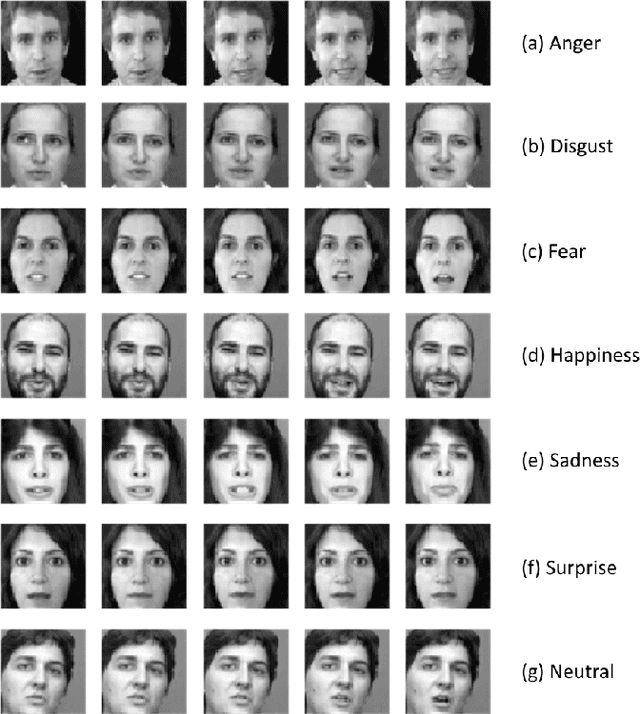

Abstract: While recent advances in deep learning have led to significant improvements in facial expression classification (FEC), a major challenge that remains a bottleneck for the widespread deployment of such systems is their high architectural and computational complexities. This is especially challenging given the operational requirements of various FEC applications, such as safety, marketing, learning, and assistive living, where real-time operation on low-cost embedded devices is desired. Motivated by this need for a compact, low latency, yet accurate system capable of performing FEC in real-time on low-cost embedded devices, this study proposes EmotionNet Nano, an efficient deep convolutional neural network created through a human-machine collaborative design strategy, where human experience is combined with machine meticulousness and speed in order to craft a deep neural network design catered towards real-time embedded usage. Two different variants of EmotionNet Nano are presented, each with a different trade-off between architectural and computational complexity and accuracy. Experimental results using the CK+ facial expression benchmark dataset demonstrate that the proposed EmotionNet Nano networks achieved accuracies comparable to state-of-the-art FEC networks, while requiring significantly fewer parameters (e.g., 23$\times$ fewer at a higher accuracy). Furthermore, we demonstrate that the proposed EmotionNet Nano networks achieved real-time inference speeds (e.g., $>25$ FPS and $>70$ FPS at 15W and 30W, respectively) and high energy efficiency (e.g., $>1.7$ images/sec/watt at 15W) on an ARM embedded processor, thus further illustrating the efficacy of EmotionNet Nano for deployment on embedded devices.
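The energy-efficiency figure quoted above is throughput divided by power draw. A minimal sketch of that arithmetic follows; the function names are hypothetical, and the 25.5 FPS input is an illustrative value consistent with the reported "$>25$ FPS" bound, not a measurement from the paper.

```python
def images_per_sec_per_watt(fps: float, power_watts: float) -> float:
    """Energy efficiency: frames processed per second for each watt drawn."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return fps / power_watts


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Illustrative value only: 25.5 FPS at the 15 W power setting.
efficiency = images_per_sec_per_watt(fps=25.5, power_watts=15.0)
budget = frame_budget_ms(25.0)
print(efficiency, budget)
```

Read this way, the 25 FPS lower bound corresponds to a per-frame budget of 40 ms, and the 70 FPS lower bound at 30 W works out to roughly 2.33 images/sec/watt.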

TimeConvNets: A Deep Time Windowed Convolution Neural Network Design for Real-time Video Facial Expression Recognition

Mar 03, 2020

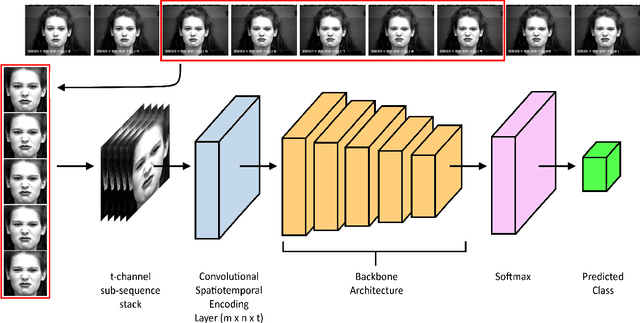

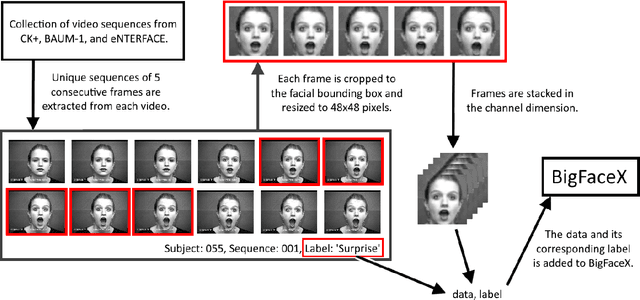

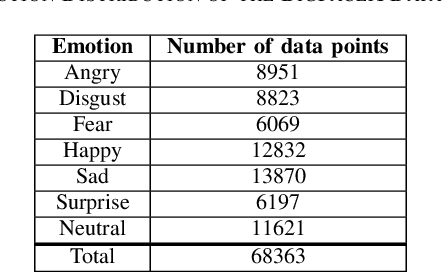

Abstract: A core challenge faced by the majority of individuals with Autism Spectrum Disorder (ASD) is an impaired ability to infer other people's emotions based on their facial expressions. With significant recent advances in machine learning, one potential approach to leveraging technology to assist such individuals to better recognize facial expressions and reduce the risk of possible loneliness and depression due to social isolation is the design of computer vision-driven facial expression recognition systems. Motivated by this social need as well as the low latency requirement of such systems, this study explores a novel deep time windowed convolutional neural network design (TimeConvNets) for the purpose of real-time video facial expression recognition. More specifically, we explore an efficient convolutional deep neural network design for spatiotemporal encoding of time windowed video frame sub-sequences and study the respective balance between speed and accuracy. Furthermore, to evaluate the proposed TimeConvNet design, we introduce a more difficult dataset called BigFaceX, composed of a modified aggregation of the extended Cohn-Kanade (CK+), BAUM-1, and the eNTERFACE public datasets. Different variants of the proposed TimeConvNet design with different backbone network architectures were evaluated using BigFaceX alongside other network designs for capturing spatiotemporal information, and experimental results demonstrate that TimeConvNets can better capture the transient nuances of facial expressions and boost classification accuracy while maintaining a low inference time.
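To illustrate what "time windowed video frame sub-sequences" can look like in a streaming setting, the sketch below buffers incoming frames and emits one overlapping window per new frame once the buffer is full. This is only an assumption about the general idea: the abstract does not specify the window length, the stride, or how frames within a window are encoded, and `stream_windows` is a hypothetical helper rather than part of TimeConvNets.

```python
from collections import deque
from typing import Iterable, Iterator, List, TypeVar

Frame = TypeVar("Frame")


def stream_windows(frames: Iterable[Frame], window: int) -> Iterator[List[Frame]]:
    """Yield the most recent `window` frames each time a new frame arrives.

    Nothing is yielded until `window` frames have been seen, so the first
    prediction is delayed by window - 1 frames.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    buffer = deque(maxlen=window)  # the oldest frame is dropped automatically
    for frame in frames:
        buffer.append(frame)
        if len(buffer) == window:
            yield list(buffer)


# Integers stand in for video frames here; a real system would pass
# cropped face images to a classifier for each emitted window.
windows = list(stream_windows(range(5), window=3))
print(windows)  # [[0, 1, 2], [1, 2, 3], [2, 3, 4]]
```

A fixed-length buffer keeps memory use constant and gives one window per incoming frame, which suits the low-latency goal described above; a longer window adds temporal context at the cost of a longer warm-up.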
