Ali Alizadeh

An End-to-end Deep Reinforcement Learning Approach for the Long-term Short-term Planning on the Frenet Space

Nov 26, 2020

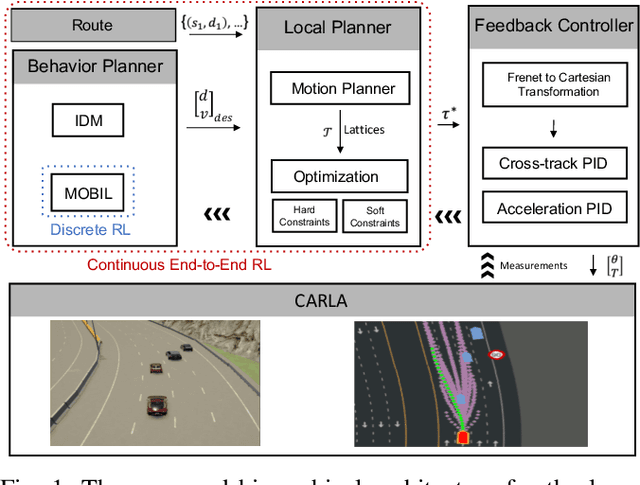

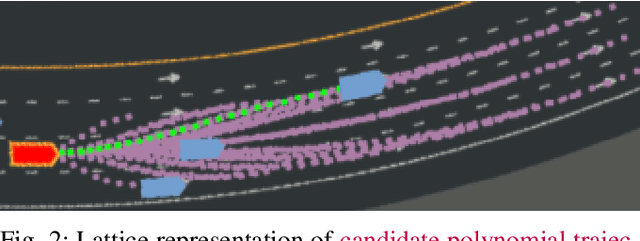

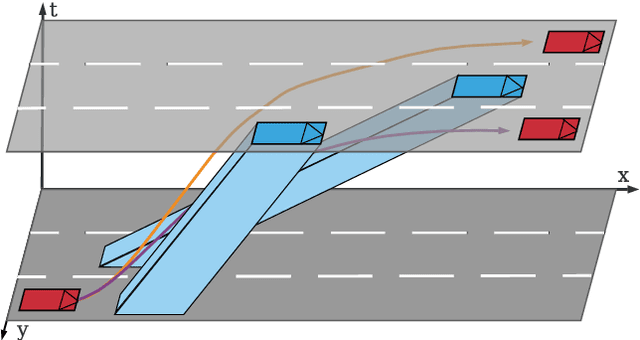

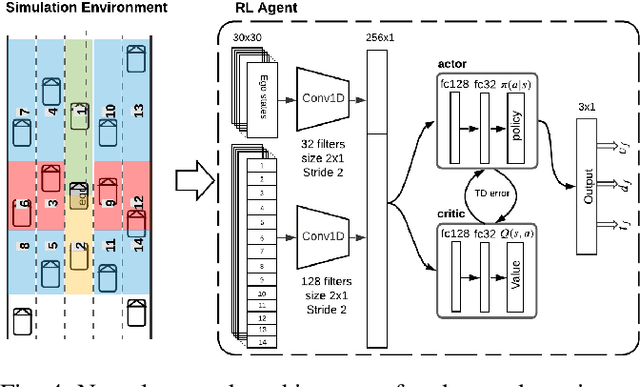

Abstract: Tactical decision making and strategic motion planning for autonomous highway driving are challenging due to the difficulty of predicting other road users' behaviors, the diversity of environments, and the complexity of traffic interactions. This paper presents a novel end-to-end continuous deep reinforcement learning approach to decision making and motion planning for autonomous cars. For the first time, we define both the state and action spaces on the Frenet space, so that the driving behavior varies less with road curvature than with the surrounding actors' dynamics and traffic interactions. The agent receives time-series data of the past trajectories of the surrounding vehicles and applies convolutional neural networks along the time channels to extract features in the backbone. The algorithm generates continuous spatiotemporal trajectories on the Frenet frame for the feedback controller to track. Extensive high-fidelity highway simulations on CARLA show that the presented approach outperforms commonly used baselines and discrete reinforcement learning across various traffic scenarios. Furthermore, the proposed method's advantage is confirmed by a more comprehensive performance evaluation against 1000 randomly generated test scenarios.
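
To illustrate the Frenet-frame representation mentioned in the abstract above, here is a minimal sketch that projects a Cartesian point onto a reference path and returns its arc length `s` and signed lateral offset `d`. The function name `cartesian_to_frenet`, the polyline path representation, and the sign convention (positive `d` to the left of the travel direction) are assumptions made for this illustration and are not taken from the paper.

```python
import math


def cartesian_to_frenet(x, y, path):
    """Project (x, y) onto a polyline reference path.

    path is a list of (x, y) waypoints (hypothetical representation).
    Returns (s, d): arc length along the path to the closest point and
    the signed lateral offset (positive on the left of the path direction).
    """
    best = None      # (distance, s, d) of the closest projection found so far
    s_start = 0.0    # arc length accumulated before the current segment
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len = math.hypot(dx, dy)
        if seg_len == 0.0:
            continue  # skip duplicated waypoints
        # Fraction along the segment of the closest point, clamped to [0, 1].
        t = ((x - x0) * dx + (y - y0) * dy) / seg_len ** 2
        t = max(0.0, min(1.0, t))
        px, py = x0 + t * dx, y0 + t * dy
        dist = math.hypot(x - px, y - py)
        if best is None or dist < best[0]:
            # The 2D cross product of the segment direction and the offset
            # vector tells us on which side of the path the point lies.
            cross = dx * (y - py) - dy * (x - px)
            d = math.copysign(dist, cross) if dist > 0.0 else 0.0
            best = (dist, s_start + t * seg_len, d)
        s_start += seg_len
    if best is None:
        raise ValueError("path needs at least two distinct points")
    return best[1], best[2]


# Example: an L-shaped path that runs along +x and then turns toward +y.
reference_path = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
s, d = cartesian_to_frenet(5.0, 2.0, reference_path)
```

In these coordinates a vehicle that keeps its lane has a nearly constant `d` whether the road is straight or curved, which is the intuition behind defining states and actions on the Frenet space.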

Sample Efficient Interactive End-to-End Deep Learning for Self-Driving Cars with Selective Multi-Class Safe Dataset Aggregation

Jul 29, 2020

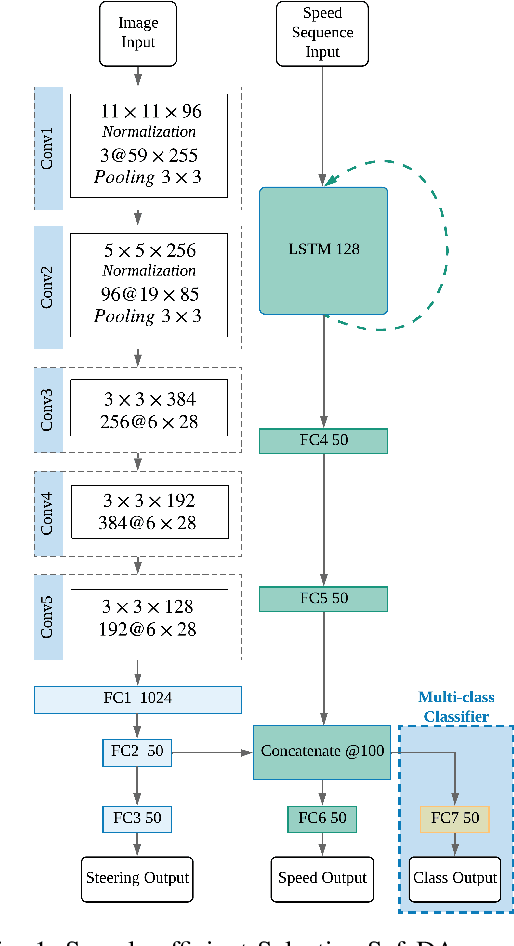

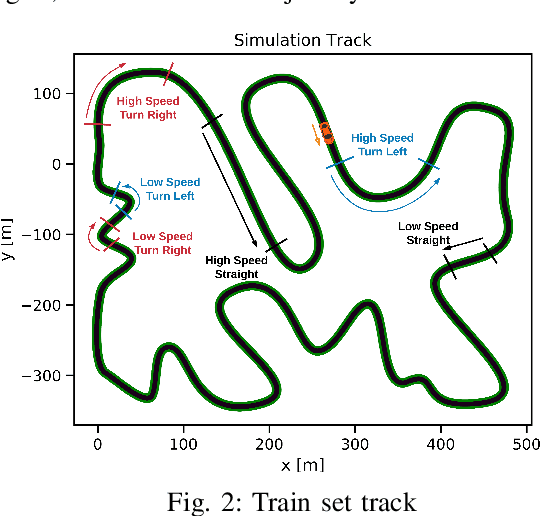

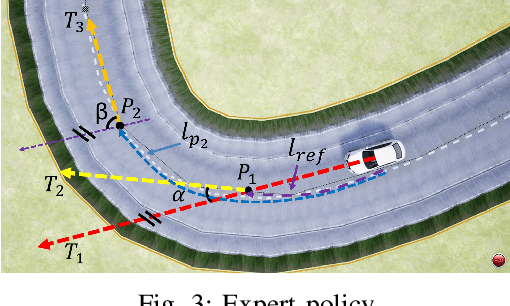

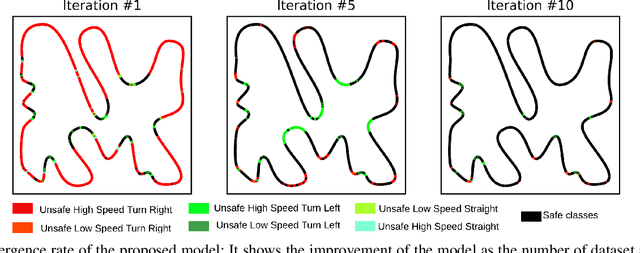

Abstract: The objective of this paper is to develop a sample-efficient end-to-end deep learning method for self-driving cars, in which we attempt to increase the value of the information extracted from samples through careful analysis of each call to the expert driver's policy. End-to-end imitation learning is a popular method for computing self-driving car policies. The standard approach relies on collecting pairs of inputs (camera images) and outputs (steering angle, etc.) from an expert policy and fitting a deep neural network to this data to learn the driving policy. Although this approach has had some successful demonstrations, learning a good policy may require many samples from the expert driver, which can be resource-intensive. In this work, we develop a novel framework based on the Safe Dataset Aggregation (Safe DAgger) approach, where the current learned policy is automatically segmented into different trajectory classes, and the algorithm identifies the trajectory segments or classes with weak performance at each step. Once the trajectory segments with weak performance are identified, the sampling algorithm focuses on calling the expert policy only on these segments, which improves the convergence rate. The presented simulation results show that the proposed approach can yield significantly better performance than the standard Safe DAgger algorithm while using the same number of samples from the expert.
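
The selective querying idea in the abstract above can be sketched roughly as follows: group rollout segments by trajectory class, estimate how often the current policy fails in each class, and request expert labels only for segments in the weak classes. The class labels, the boolean failure flag, the threshold, and the function names are hypothetical choices for this illustration; the paper's actual segmentation and performance criteria are not specified here.

```python
from collections import defaultdict


def failure_rates(segments):
    """Return the failure rate per trajectory class.

    segments is a list of (class_label, failed) pairs, where failed is a
    bool marking whether the current policy performed poorly on the segment.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for label, failed in segments:
        totals[label] += 1
        failures[label] += int(failed)
    return {label: failures[label] / totals[label] for label in totals}


def segments_to_query(segments, threshold):
    """Return indices of segments whose class has a failure rate above threshold.

    Only these segments would be sent to the expert policy for labels.
    """
    rates = failure_rates(segments)
    weak = {label for label, rate in rates.items() if rate > threshold}
    return [i for i, (label, _) in enumerate(segments) if label in weak]


# Example rollout summary with made-up class labels.
rollout = [
    ("straight", False),
    ("left_turn", True),
    ("left_turn", False),
    ("straight", False),
    ("right_turn", False),
]
query_indices = segments_to_query(rollout, threshold=0.25)
```

Concentrating the expert budget on the weak classes is what lets such a scheme use the same number of expert samples as standard Safe DAgger while spending them where the learned policy needs them most.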

* 6 pages, 6 figures, IROS2019 conference

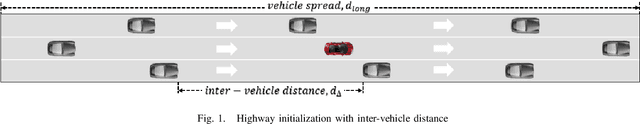

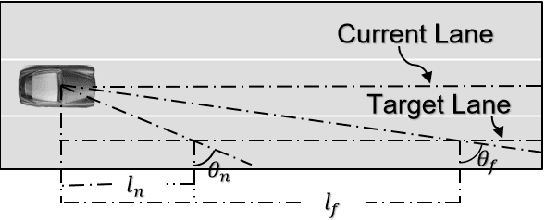

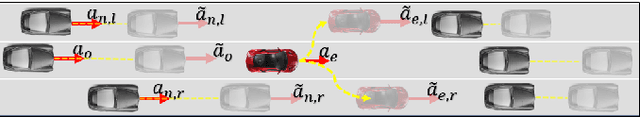

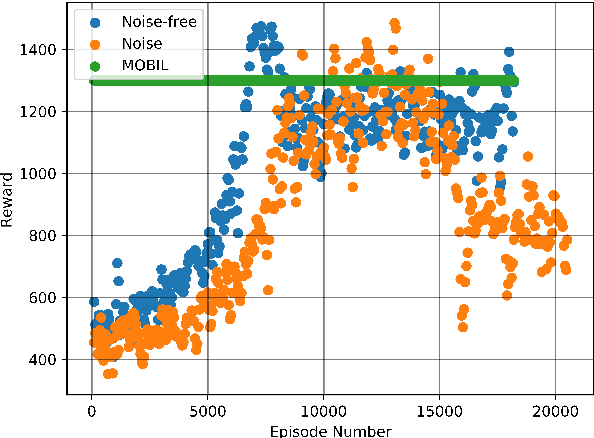

Automated Lane Change Decision Making using Deep Reinforcement Learning in Dynamic and Uncertain Highway Environment

Sep 18, 2019

Abstract: Autonomous lane changing is a critical feature for advanced autonomous driving systems, and it involves several challenges, such as uncertainty in other drivers' behaviors and the trade-off between safety and agility. In this work, we develop a novel simulation environment that emulates these challenges and train a deep reinforcement learning agent that yields consistent performance in a variety of dynamic and uncertain traffic scenarios. Results show that the proposed data-driven approach performs significantly better in noisy environments than methods that rely solely on heuristics.
