Gabriel Hugh Elkaim

An Autonomous Driving Framework for Long-term Decision-making and Short-term Trajectory Planning on Frenet Space

Nov 26, 2020

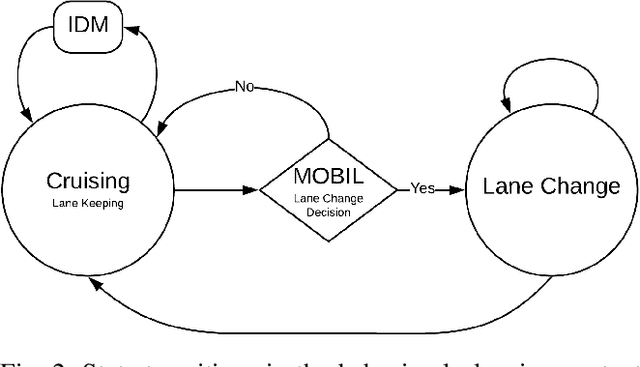

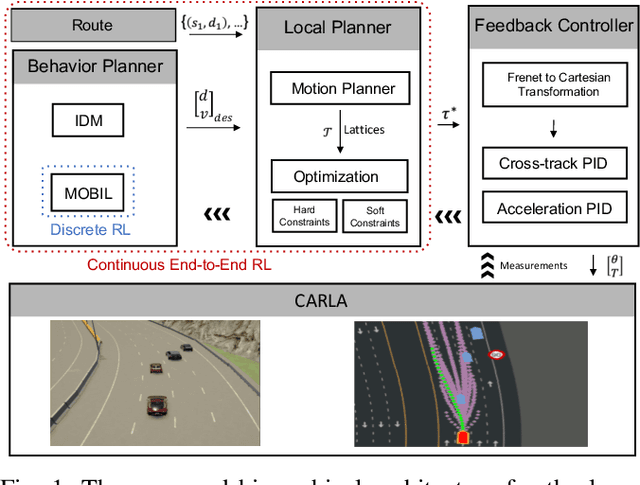

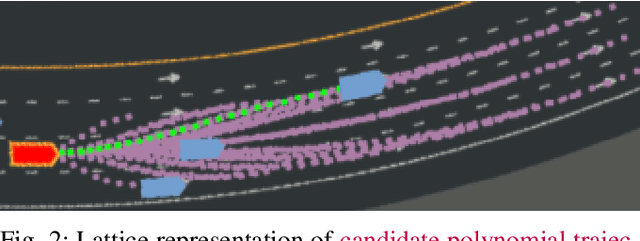

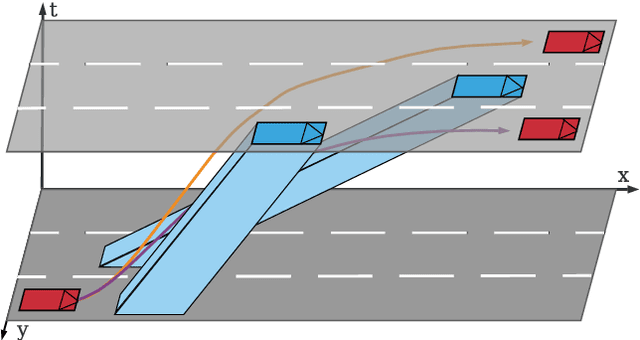

Abstract: In this paper, we present a hierarchical framework for decision-making and planning on highway driving tasks. We utilized intelligent driving models (IDM and MOBIL) to generate long-term decisions based on the traffic situation flowing around the ego. The decisions maximize ego performance while respecting other vehicles' objectives. Short-term trajectory optimization is performed on the Frenet space to make the calculations invariant to the road's three-dimensional curvatures. A novel obstacle avoidance approach is introduced on the Frenet frame for the moving obstacles. The optimization explores the driving corridors to generate spatiotemporal polynomial trajectories that navigate through the traffic safely and obey the BP commands. The framework also introduces a heuristic supervisor that identifies unexpected situations and recalculates each module in case of a potential emergency. Experiments in CARLA simulation have shown the potential and the scalability of the framework in implementing various driving styles that match human behavior.
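As a rough illustration of the IDM component named in this abstract, the sketch below implements the standard Intelligent Driver Model acceleration law. It is not taken from the paper: the function name `idm_acceleration` and every default parameter value (desired speed, time headway, minimum gap, and so on) are assumptions chosen only to make the example runnable.

```python
import math


def idm_acceleration(v, v_lead, gap,
                     v0=30.0,    # desired speed [m/s] (assumed value)
                     T=1.5,      # desired time headway [s] (assumed value)
                     s0=2.0,     # minimum standstill gap [m] (assumed value)
                     a_max=1.5,  # maximum acceleration [m/s^2] (assumed value)
                     b=2.0,      # comfortable deceleration [m/s^2] (assumed value)
                     delta=4.0): # acceleration exponent (assumed value)
    """Standard IDM longitudinal acceleration for the following vehicle.

    v      : ego speed [m/s]
    v_lead : speed of the vehicle ahead [m/s]
    gap    : bumper-to-bumper distance to the vehicle ahead [m], must be > 0
    """
    dv = v - v_lead  # closing speed (positive when approaching the leader)
    # Desired dynamic gap: standstill gap plus headway and braking terms.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term minus interaction term.
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


# Stationary ego on an effectively empty road accelerates at roughly a_max.
free_road = idm_acceleration(v=0.0, v_lead=0.0, gap=1e6)
# Ego closing fast on a slower leader at short range brakes.
closing_in = idm_acceleration(v=30.0, v_lead=20.0, gap=20.0)
```

A lane-change model such as MOBIL would typically compare accelerations like these before and after a hypothetical lane change; how the paper combines the two models is not specified in the abstract.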

An End-to-end Deep Reinforcement Learning Approach for the Long-term Short-term Planning on the Frenet Space

Nov 26, 2020

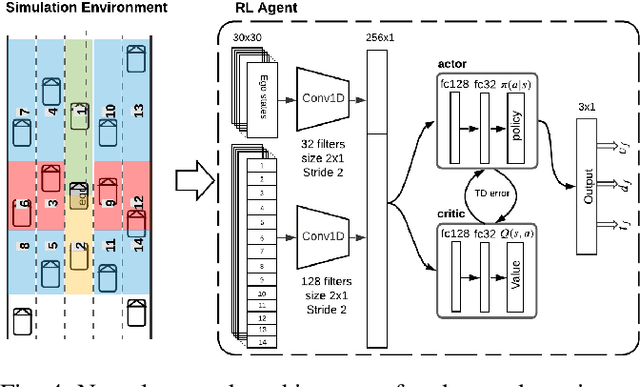

Abstract: Tactical decision making and strategic motion planning for autonomous highway driving are challenging due to the complication of predicting other road users' behaviors, diversity of environments, and complexity of the traffic interactions. This paper presents a novel end-to-end continuous deep reinforcement learning approach towards autonomous cars' decision-making and motion planning. For the first time, we define both states and action spaces on the Frenet space to make the driving behavior less variant to the road curvatures than the surrounding actors' dynamics and traffic interactions. The agent receives time-series data of past trajectories of the surrounding vehicles and applies convolutional neural networks along the time channels to extract features in the backbone. The algorithm generates continuous spatiotemporal trajectories on the Frenet frame for the feedback controller to track. Extensive high-fidelity highway simulations on CARLA show the superiority of the presented approach compared with commonly used baselines and discrete reinforcement learning on various traffic scenarios. Furthermore, the proposed method's advantage is confirmed with a more comprehensive performance evaluation against 1000 randomly generated test scenarios.

A Hierarchical Architecture for Sequential Decision-Making in Autonomous Driving using Deep Reinforcement Learning

Jun 20, 2019

Abstract: Tactical decision making is a critical feature for advanced driving systems, one that involves several challenges such as the complexity of the uncertain environment and the reliability of the autonomous system. In this work, we develop a multi-modal architecture that includes environmental modeling of the ego's surroundings and train a deep reinforcement learning (DRL) agent that yields consistent performance in stochastic highway driving scenarios. To this end, we feed the occupancy grid of the ego's surroundings into the DRL agent and obtain high-level sequential commands (i.e., lane change) to send to lower-level controllers. We show that dividing the autonomous driving problem into a multi-layer control architecture enables us to leverage the AI power to solve each layer separately and achieve an admissible reliability score. Compared with end-to-end approaches, this architecture yields a more reliable system that can be implemented in actual self-driving cars.
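To make the occupancy-grid input mentioned in this abstract more concrete, here is a minimal sketch of an ego-centered grid with one row per lane and one column per longitudinal cell. The abstract does not describe the grid layout, so the function name `build_occupancy_grid`, the `(lane_index, longitudinal_offset)` vehicle format, and the grid dimensions are all hypothetical choices for illustration.

```python
def build_occupancy_grid(vehicles, n_lanes=3, ahead=50.0, behind=50.0,
                         cell_len=5.0):
    """Build a binary lane-by-distance occupancy grid around the ego.

    vehicles : iterable of (lane_index, ds) pairs, where ds is the
               longitudinal offset from the ego in metres (positive = ahead).
               This input format is an assumption, not the paper's.
    Returns a list of n_lanes rows; each row covers [-behind, ahead) metres
    in cells of cell_len metres, with 1 marking an occupied cell.
    """
    n_cells = int((ahead + behind) / cell_len)
    grid = [[0] * n_cells for _ in range(n_lanes)]
    for lane, ds in vehicles:
        # Ignore vehicles outside the modelled lanes or sensing range.
        if 0 <= lane < n_lanes and -behind <= ds < ahead:
            col = int((ds + behind) // cell_len)
            grid[lane][col] = 1
    return grid


# Ego in the middle lane, one car 12 m ahead in lane 0,
# and one car 60 m behind in lane 2 (outside the 50 m range).
grid = build_occupancy_grid([(1, 0.0), (0, 12.0), (2, -60.0)])
```

A grid like this could be flattened or passed as a 2D array to a learning agent; the actual encoding and network input used in the paper may differ.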
