Alexei Novikov

Imaging with super-resolution in changing random media

Nov 18, 2025

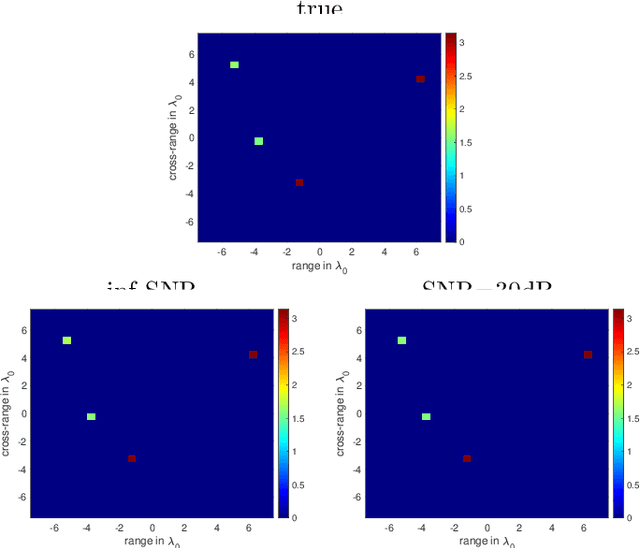

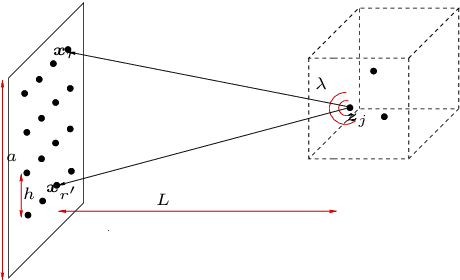

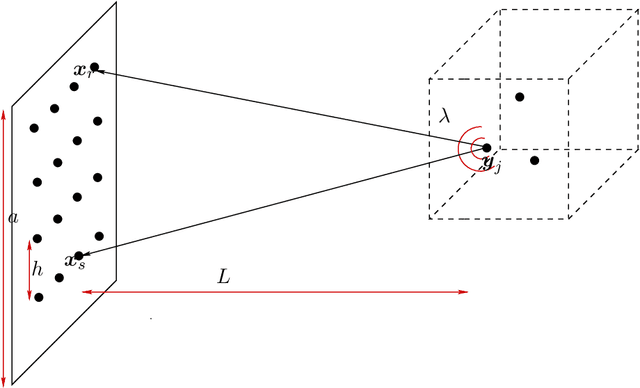

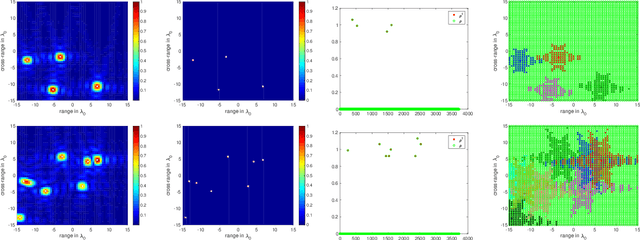

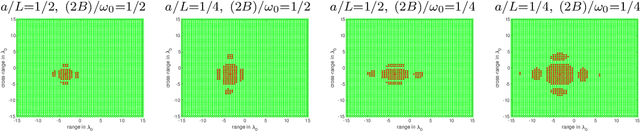

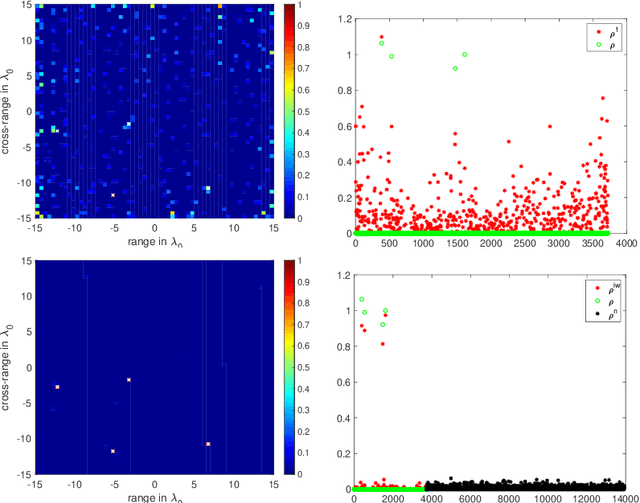

Abstract: We develop an imaging algorithm that exploits strong scattering to achieve super-resolution in changing random media. The method processes large and diverse array datasets using sparse dictionary learning, clustering, and multidimensional scaling. Starting from random initializations, the algorithm reliably extracts the unknown medium properties necessary for accurate imaging using back-propagation, $\ell_2$, or $\ell_1$ methods. Remarkably, scattering enhances resolution beyond homogeneous medium limits. When abundant data are available, the algorithm allows the realization of super-resolution in imaging.
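As a rough illustration of the back-propagation step mentioned in the abstract, the sketch below images a single point source in a homogeneous medium, which is the baseline the abstract compares against. It is not the authors' algorithm: the free-space Green's function, the array geometry, and the names `greens_vector` and `back_propagation_image` are all assumptions made for this example.

```python
import numpy as np

def greens_vector(receivers, point, wavenumber):
    """Free-space Green's function from `point` to each receiver (homogeneous medium assumed)."""
    r = np.linalg.norm(receivers - point, axis=1)
    return np.exp(1j * wavenumber * r) / (4.0 * np.pi * r)

def back_propagation_image(data, receivers, grid, wavenumber):
    """Return |g(y)^* b| / ||g(y)|| for every grid point y."""
    image = np.empty(len(grid))
    for i, y in enumerate(grid):
        g = greens_vector(receivers, y, wavenumber)
        # np.vdot conjugates its first argument, giving the inner product g^* b.
        image[i] = abs(np.vdot(g, data)) / np.linalg.norm(g)
    return image

# Hypothetical geometry: 41 receivers on the x-axis, image points at range z = 100.
wavelength = 1.0
k = 2.0 * np.pi / wavelength
receivers = np.stack([np.linspace(-20.0, 20.0, 41), np.zeros(41), np.zeros(41)], axis=1)
grid_x = np.linspace(-10.0, 10.0, 81)
grid = np.stack([grid_x, np.zeros(81), np.full(81, 100.0)], axis=1)

true_index = 52
data = greens_vector(receivers, grid[true_index], k)  # noiseless single-source data
image = back_propagation_image(data, receivers, grid, k)
peak_index = int(np.argmax(image))
```

With normalized Green's vectors, the Cauchy-Schwarz inequality places the peak of the image at the true source location for noiseless data. In a scattering medium, the free-space `greens_vector` would have to be replaced by estimates of the medium's Green's functions, which is the part the paper's method is said to learn from data.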

Super-resolution in disordered media using neural networks

Oct 28, 2024

Abstract: We propose a methodology that exploits large and diverse data sets to accurately estimate the ambient medium's Green's functions in strongly scattering media. Given these estimates, obtained with and without the use of neural networks, excellent imaging results are achieved, with a resolution that is better than that of a homogeneous medium. This phenomenon, also known as super-resolution, occurs because the ambient scattering medium effectively enhances the physical imaging aperture.

Spectral Subspace Dictionary Learning

Oct 19, 2022

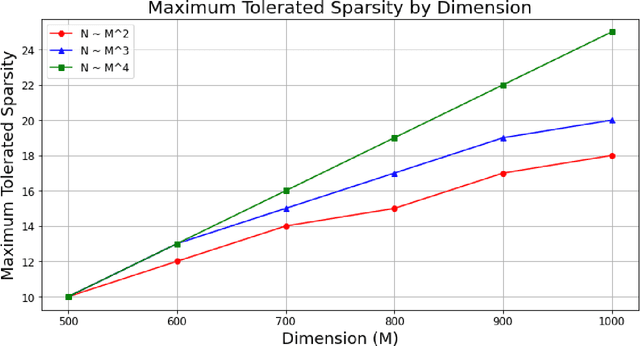

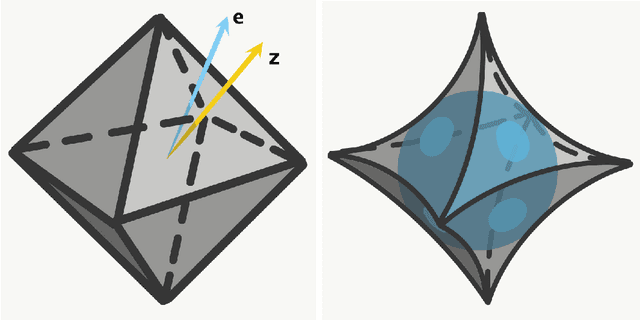

Abstract: *Dictionary learning*, the problem of recovering a sparsely used matrix $\mathbf{D} \in \mathbb{R}^{M \times K}$ and $N$ independent $K \times 1$ $s$-sparse vectors $\mathbf{X} \in \mathbb{R}^{K \times N}$ from samples of the form $\mathbf{Y} = \mathbf{D}\mathbf{X}$, is of increasing importance to applications in signal processing and data science. Early papers on provable dictionary learning identified that one can detect whether two samples $\mathbf{y}_i, \mathbf{y}_j$ share a common dictionary element by testing if their absolute inner product (correlation) exceeds a certain threshold: $|\left\langle \mathbf{y}_i, \mathbf{y}_j \right\rangle| > \tau$. These correlation-based methods work well when sparsity is small, but suffer from declining performance when sparsity grows faster than $\sqrt{M}$; as a result, such methods were abandoned in the search for dictionary learning algorithms when sparsity is nearly linear in $M$. In this paper, we revisit correlation-based dictionary learning. Instead of seeking to recover individual dictionary atoms, we employ a spectral method to recover the subspace spanned by the dictionary atoms in the support of each sample. This approach circumvents the primary challenge encountered by previous correlation methods, namely that when sharing information between two samples it is difficult to tell *which* dictionary element the two samples share. We prove that under a suitable random model the resulting algorithm recovers dictionaries in polynomial time for sparsity linear in $M$ up to log factors. Our results improve on the best known methods by achieving a decaying error bound in dimension $M$; the best previously known results for the overcomplete ($K > M$) setting achieve polynomial time in the linear sparsity regime only for constant error bounds. Numerical simulations confirm our results.
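The correlation test $|\langle \mathbf{y}_i, \mathbf{y}_j \rangle| > \tau$ described in the abstract can be sketched in a few lines. This is only the classical pairwise test, not the spectral subspace method the paper proposes. The sizes, the random unit-norm dictionary, the $\pm 1$ coefficients, the threshold value, and the helper names `share_atom` and `sample` are assumptions chosen for the illustration.

```python
import numpy as np

def share_atom(y_i, y_j, tau):
    """Correlation test: flag a shared atom when |<y_i, y_j>| > tau."""
    return abs(np.dot(y_i, y_j)) > tau

rng = np.random.default_rng(0)
M, K, s = 2000, 2400, 3          # hypothetical sizes; overcomplete since K > M

# Dictionary with unit-norm random columns (an assumed random model).
D = rng.standard_normal((M, K))
D /= np.linalg.norm(D, axis=0)

def sample(support):
    """Build y = D x with x supported on `support` and random +/-1 coefficients."""
    x = np.zeros(K)
    x[support] = rng.choice([-1.0, 1.0], size=len(support))
    return D @ x

y_a = sample([0, 1, 2])          # uses atoms 0, 1, 2
y_b = sample([2, 3, 4])          # shares atom 2 with y_a
y_c = sample([5, 6, 7])          # shares no atom with y_a

tau = 0.5                        # hypothetical threshold
shared = share_atom(y_a, y_b, tau)
disjoint = share_atom(y_a, y_c, tau)
```

In this toy setting the cross terms between distinct atoms are of order $s/\sqrt{M}$, so they stay well below the threshold when $s$ is small. As $s$ approaches $\sqrt{M}$ they become comparable to the shared-atom contribution, which is the degradation the abstract refers to.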

Quantitative phase and absorption contrast imaging

Mar 23, 2022

Abstract: Phase retrieval in its most general form is the problem of reconstructing a complex-valued function from phaseless information of some transform of that function. This problem arises in various fields such as X-ray crystallography, electron microscopy, coherent diffractive imaging, astronomy, speech recognition, and quantum mechanics. The mathematical and computational analysis of these problems has a long history, and a variety of algorithms have been proposed in the literature, whose performance usually depends on the constraints imposed on the sought function and on the number of measurements. In this paper, we present an algorithm for coherent diffractive imaging with phaseless measurements. The algorithm accounts for both coherent and incoherent wave propagation and allows for reconstructing absorption as well as phase images that quantify the attenuation and the refraction of the waves when they go through an object. The algorithm requires coherent or partially coherent illumination, and several detectors to record the intensity of the distorted wave that passes through the object under inspection. To obtain enough information for imaging, a series of masks is introduced between the source and the object to create a diversity of illumination patterns.

Thresholding Greedy Pursuit for Sparse Recovery Problems

Mar 17, 2021

Abstract: We study here sparse recovery problems in the presence of additive noise. We analyze a thresholding version of the CoSaMP algorithm, named Thresholding Greedy Pursuit (TGP). We demonstrate that an appropriate choice of thresholding parameter, even without knowledge of the sparsity level of the signal and the strength of the noise, can result in exact recovery with no false discoveries as the dimension of the data increases to infinity.

Fast signal recovery from quadratic measurements

Oct 11, 2020

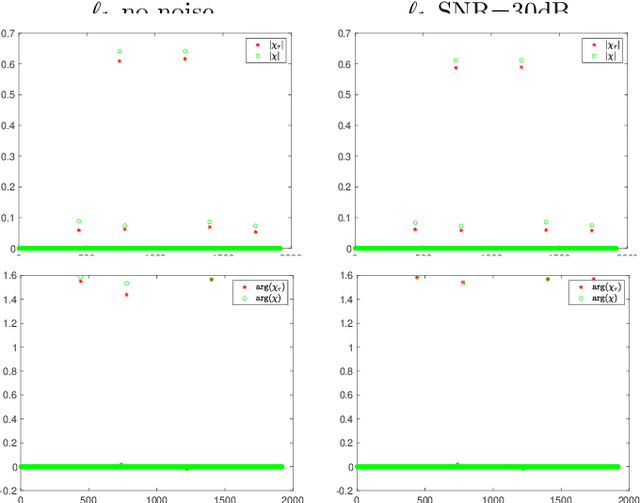

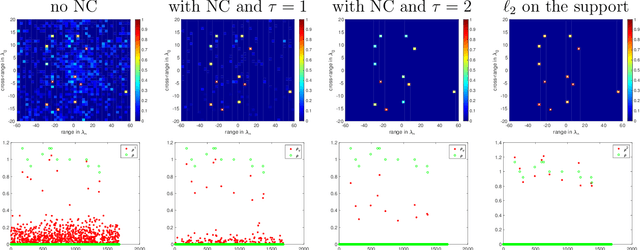

Abstract: We present a novel approach for recovering a sparse signal from cross-correlated data. Cross-correlations naturally arise in many fields of imaging, such as optics, holography and seismic interferometry. Compared to the sparse signal recovery problem that uses linear measurements, the unknown is now a matrix formed by the cross-correlation of the unknown signal. Hence, the bottleneck for inversion is the number of unknowns that grows quadratically. The main idea of our proposed approach is to reduce the dimensionality of the problem by recovering only the diagonal of the unknown matrix, whose dimension grows linearly with the size of the problem. The keystone of the methodology is the use of an efficient *Noise Collector* that absorbs the data that come from the off-diagonal elements of the unknown matrix and that do not carry extra information about the support of the signal. This results in a linear problem whose cost is similar to the one that uses linear measurements. Our theory shows that the proposed approach provides exact support recovery when the data is not too noisy, and that there are no false positives for any level of noise. Moreover, our theory also demonstrates that when using cross-correlated data, the level of sparsity that can be recovered increases, scaling almost linearly with the number of data. The numerical experiments presented in the paper corroborate these findings.
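The dimension reduction described in the abstract can be checked numerically on a small example. The sketch below splits cross-correlated data $B = b b^*$, with $b = A\rho$, into the part generated by the diagonal of $X = \rho\rho^*$ and the part generated by its off-diagonal entries. The sizes, the random matrix `A`, the test signal, and the name `A_diag` are assumptions for illustration. The off-diagonal part is computed explicitly here only to verify the identity; in the paper it is said to be absorbed by the Noise Collector.

```python
import numpy as np

rng = np.random.default_rng(1)
N, K = 8, 30                     # hypothetical sizes: N measurements, K unknowns

A = rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))
rho = np.zeros(K, dtype=complex)
rho[[3, 17]] = [1.0 + 0.5j, -2.0j]   # sparse signal with two nonzero entries

b = A @ rho
B = np.outer(b, b.conj())        # cross-correlated data B = b b^*, N^2 entries

# Column k of the reduced operator is vec(a_k a_k^*): N^2 rows but only K columns,
# versus K^2 unknowns if the full matrix X = rho rho^* were recovered.
A_diag = np.stack(
    [np.outer(A[:, k], A[:, k].conj()).ravel() for k in range(K)], axis=1
)

diag_X = np.abs(rho) ** 2        # diagonal of X, the target of the reduced problem
diag_part = (A_diag @ diag_X).reshape(N, N)

# Off-diagonal contribution from the cross terms rho_k conj(rho_l), k != l.
X = np.outer(rho, rho.conj())
X_off = X - np.diag(np.diag(X))
off_part = A @ X_off @ A.conj().T
```

Here `B` equals `diag_part + off_part` up to rounding, and the support of `diag_X` coincides with the support of `rho`, which is why recovering the diagonal alone is enough for support recovery.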

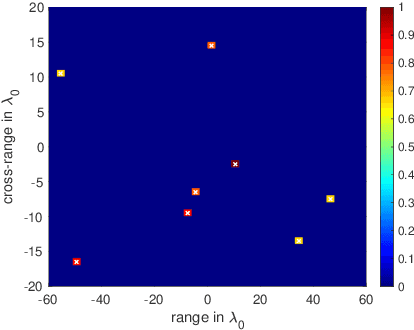

The Noise Collector for sparse recovery in high dimensions

Aug 05, 2019

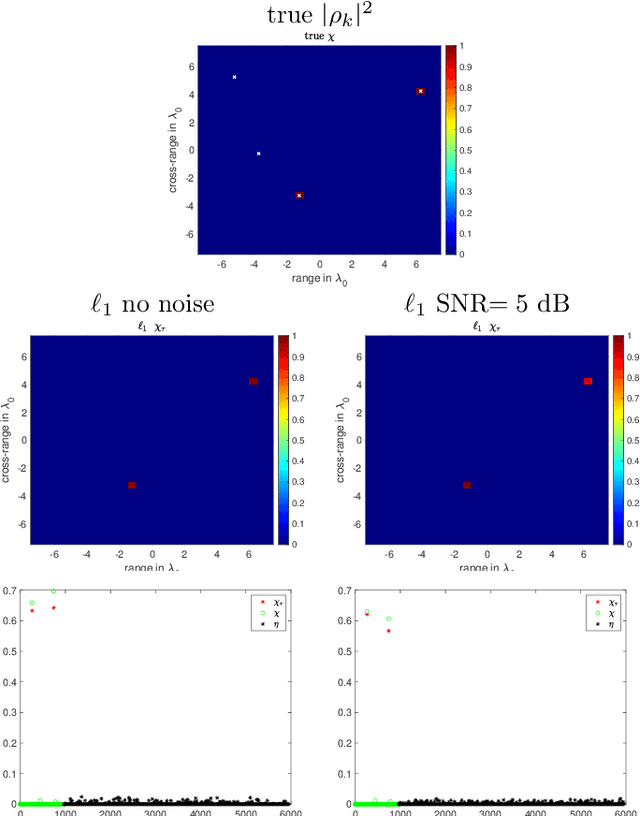

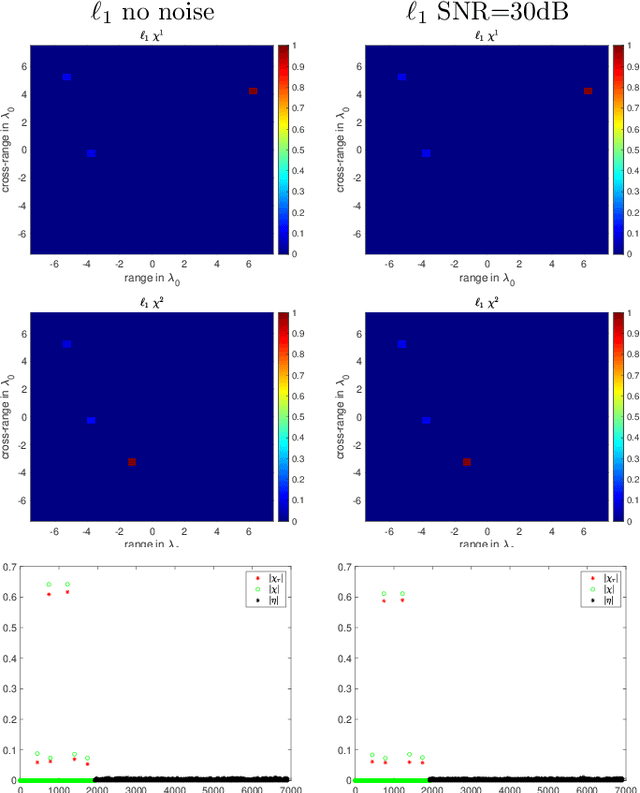

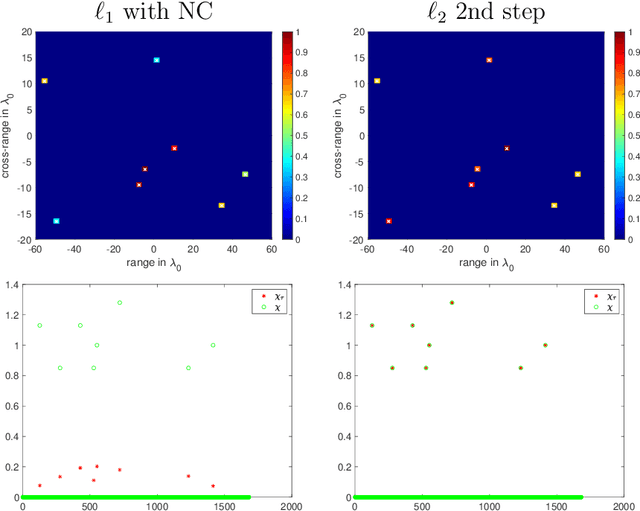

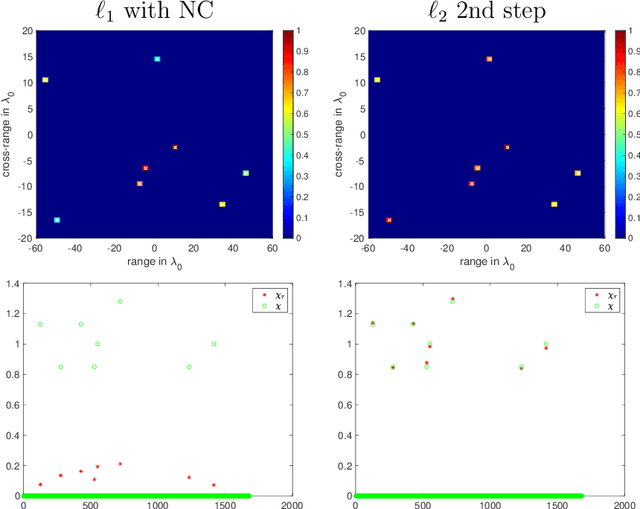

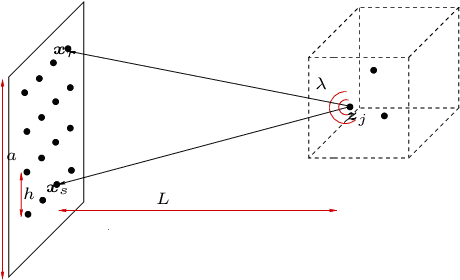

Abstract: The ability to detect sparse signals from noisy high-dimensional data is a top priority in modern science and engineering. A sparse solution of the linear system $A \rho = b_0$ can be found efficiently with an $\ell_1$-norm minimization approach if the data is noiseless. Detection of the signal's support from data corrupted by noise is still a challenging problem, especially if the level of noise must be estimated. We propose a new efficient approach that does not require any parameter estimation. We introduce the Noise Collector (NC) matrix $C$ and solve an augmented system $A \rho + C \eta = b_0 + e$, where $e$ is the noise. We show that the $\ell_1$-norm minimal solution of the augmented system has zero false discovery rate for any level of noise and with probability that tends to one as the dimension of $b_0$ increases to infinity. We also obtain exact support recovery if the noise is not too large, and develop a Fast Noise Collector Algorithm which makes the computational cost of solving the augmented system comparable to that of the original one. Finally, we demonstrate the effectiveness of the method in applications to passive array imaging.
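A minimal sketch of $\ell_1$ minimization for the augmented system $A\rho + C\eta = b_0 + e$ is given below, restricted to real-valued data and written as a linear program for SciPy's `linprog`. This is not the Fast Noise Collector Algorithm from the paper. The construction of `C` from random unit-norm columns, the weight `tau` on $\|\rho\|_1$, the problem sizes, and the function name `l1_augmented_solve` are assumptions made for this example.

```python
import numpy as np
from scipy.optimize import linprog

def l1_augmented_solve(A, C, b, tau=1.0):
    """Minimize tau*||rho||_1 + ||eta||_1 subject to A rho + C eta = b (real case)."""
    K, S = A.shape[1], C.shape[1]
    B = np.hstack([A, C])
    weights = np.concatenate([np.full(K, tau), np.ones(S)])
    # Split z = u - v with u, v >= 0 so the weighted l1 norm is a linear objective.
    cost = np.concatenate([weights, weights])
    result = linprog(cost, A_eq=np.hstack([B, -B]), b_eq=b,
                     bounds=(0, None), method="highs")
    if not result.success:
        raise RuntimeError(result.message)
    z = result.x[:K + S] - result.x[K + S:]
    return z[:K], z[K:]

rng = np.random.default_rng(2)
N, K, S = 20, 60, 200            # hypothetical sizes; S is the number of collector columns

A = rng.standard_normal((N, K))
A /= np.linalg.norm(A, axis=0)
C = rng.standard_normal((N, S))  # assumed construction: random unit-norm columns
C /= np.linalg.norm(C, axis=0)

rho_true = np.zeros(K)
rho_true[[5, 40]] = [1.0, -1.5]
noise = 0.05 * rng.standard_normal(N)
b = A @ rho_true + noise

rho_hat, eta_hat = l1_augmented_solve(A, C, b, tau=1.0)
residual = np.linalg.norm(A @ rho_hat + C @ eta_hat - b)
```

The equality constraint is met exactly, up to solver tolerance, because the collector term `C @ eta_hat` is free to take up whatever part of `b` is cheaper to represent with columns of `C` than with columns of `A`. Whether the support of `rho_hat` matches that of `rho_true` in a given run depends on the sizes, the noise level, and the weight, so the sketch makes no claim about it.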

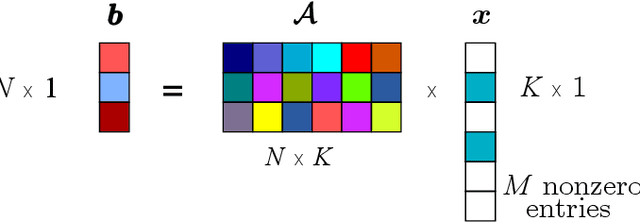

Imaging with highly incomplete and corrupted data

Aug 05, 2019

Abstract: We consider the problem of imaging sparse scenes from a few noisy data using an $\ell_1$-minimization approach. This problem can be cast as a linear system of the form $A \, \rho = b$, where $A$ is an $N\times K$ measurement matrix. We assume that the dimension of the unknown sparse vector $\rho \in {\mathbb{C}}^K$ is much larger than the dimension of the data vector $b \in {\mathbb{C}}^N$, i.e., $K \gg N$. We provide a theoretical framework that allows us to examine under what conditions the $\ell_1$-minimization problem admits a solution that is close to the exact one in the presence of noise. Our analysis shows that $\ell_1$-minimization is not robust for imaging with noisy data when high resolution is required. To improve the performance of $\ell_1$-minimization we propose to solve instead the augmented linear system $[A \, | \, C] \rho = b$, where the $N \times \Sigma$ matrix $C$ is a noise collector. It is constructed so that its column vectors provide a frame on which the noise of the data, a vector of dimension $N$, can be well approximated. Theoretically, the dimension $\Sigma$ of the noise collector should be $e^N$, which would make its use impractical. However, our numerical results illustrate that robust results in the presence of noise can be obtained with a large enough number of columns $\Sigma \approx 10 K$.
