Hai Le

Large Language Models Meet Knowledge Graphs to Answer Factoid Questions

Oct 03, 2023

Abstract: Recently, it has been shown that incorporating structured knowledge into Large Language Models significantly improves results on a variety of NLP tasks. In this paper, we propose a method for exploring pre-trained Text-to-Text Language Models enriched with additional information from Knowledge Graphs for answering factoid questions. More specifically, we propose an algorithm for subgraph extraction from a Knowledge Graph based on question entities and answer candidates. Then, we procure easily interpreted information with Transformer-based models through the linearization of the extracted subgraphs. Final re-ranking of the answer candidates with the extracted information boosts Hits@1 scores of the pre-trained text-to-text language models by 4-6%.

Thresholding Greedy Pursuit for Sparse Recovery Problems

Mar 17, 2021

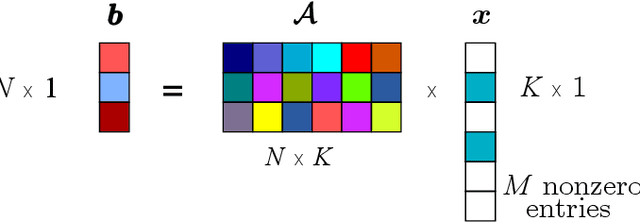

Abstract: We study sparse recovery problems in the presence of additive noise. We analyze a thresholding version of the CoSaMP algorithm, named Thresholding Greedy Pursuit (TGP). We demonstrate that an appropriate choice of the thresholding parameter, even without knowledge of the sparsity level of the signal or the strength of the noise, can result in exact recovery with no false discoveries as the dimension of the data increases to infinity.
