Alexandros Ntoulas

DeepReduce: A Sparse-tensor Communication Framework for Distributed Deep Learning

Feb 05, 2021

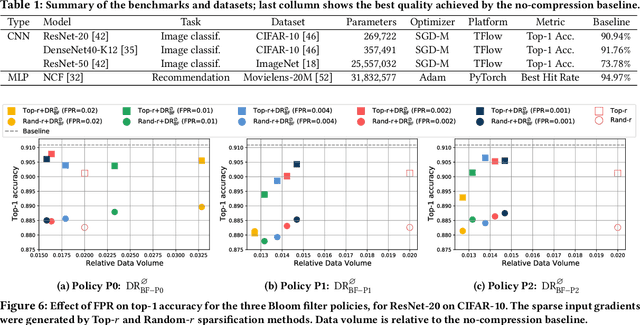

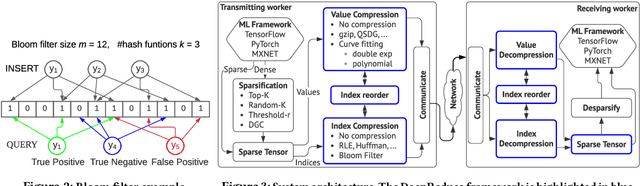

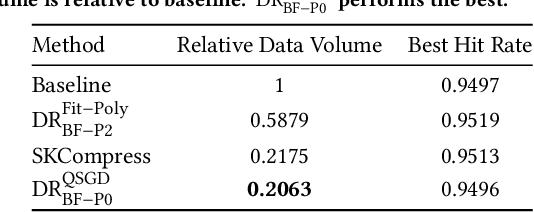

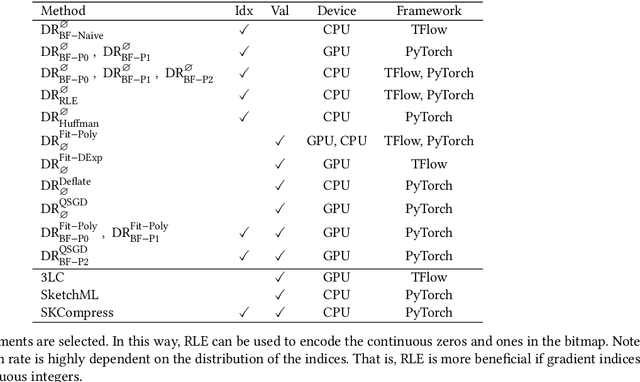

Abstract: Sparse tensors appear frequently in distributed deep learning, either as a direct artifact of the deep neural network's gradients or as the result of an explicit sparsification process. Existing communication primitives are agnostic to the peculiarities of deep learning; consequently, they impose unnecessary communication overhead. This paper introduces DeepReduce, a versatile framework for the compressed communication of sparse tensors, tailored for distributed deep learning. DeepReduce decomposes sparse tensors into two sets, values and indices, and allows both independent and combined compression of these sets. We support a variety of common compressors, such as Deflate for values and run-length encoding for indices. We also propose two novel compression schemes that achieve superior results: a curve-fitting-based scheme for values and a Bloom-filter-based scheme for indices. DeepReduce is orthogonal to existing gradient sparsifiers and can be applied in conjunction with them, transparently to the end user, to significantly lower the communication overhead. As a proof of concept, we implement our approach on TensorFlow and PyTorch. Our experiments with large real models demonstrate that DeepReduce transmits less data and imposes lower computational overhead than existing methods, without affecting training accuracy.
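The decomposition described in the abstract can be illustrated with a minimal sketch. It is not DeepReduce's implementation or API: all function names are hypothetical, the tensor is a flat Python list, values are assumed to be stored as 32-bit floats, and Python's `zlib` (Deflate inside a zlib wrapper) stands in for the Deflate compressor. Indices are run-length encoded as alternating runs of absent and present positions.

```python
import struct
import zlib


def decompose(dense):
    """Split a flat tensor into (indices, values) of its nonzero entries."""
    indices = [i for i, v in enumerate(dense) if v != 0.0]
    values = [dense[i] for i in indices]
    return indices, values


def rle_encode_indices(indices, length):
    """Run-length encode the presence bitmap implied by the indices.

    Runs alternate absent, present, absent, ... and always start with an
    absent run (which may have length 0).
    """
    present = set(indices)
    runs, current, count = [], 0, 0
    for i in range(length):
        bit = 1 if i in present else 0
        if bit == current:
            count += 1
        else:
            runs.append(count)
            current, count = bit, 1
    runs.append(count)
    return runs


def rle_decode_indices(runs):
    """Invert rle_encode_indices: recover the sorted list of indices."""
    indices, pos, bit = [], 0, 0
    for run in runs:
        if bit == 1:
            indices.extend(range(pos, pos + run))
        pos += run
        bit ^= 1
    return indices


def deflate_values(values):
    """Pack values as little-endian float32 (assumption) and compress them."""
    raw = struct.pack(f"<{len(values)}f", *values)
    return zlib.compress(raw)


def inflate_values(blob):
    """Invert deflate_values."""
    raw = zlib.decompress(blob)
    return list(struct.unpack(f"<{len(raw) // 4}f", raw))


def reconstruct(indices, values, length):
    """Rebuild the flat tensor from its two components."""
    dense = [0.0] * length
    for i, v in zip(indices, values):
        dense[i] = v
    return dense


# Usage: the two sets are compressed independently and recombined on receipt.
grad = [0.0, 0.0, 0.5, -1.25, 0.0, 0.0, 0.0, 2.0]
idx, vals = decompose(grad)
runs = rle_encode_indices(idx, len(grad))
blob = deflate_values(vals)
restored = reconstruct(rle_decode_indices(runs), inflate_values(blob), len(grad))
```

In this sketch both compressors are lossless apart from the float32 cast. Run-length encoding pays off only when nonzero positions cluster into long runs, and Deflate only when the value bytes are repetitive, which is presumably part of why the framework lets the two sets be compressed with different schemes.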

A Data Management Approach for Dataset Selection Using Human Computation

Jul 13, 2013

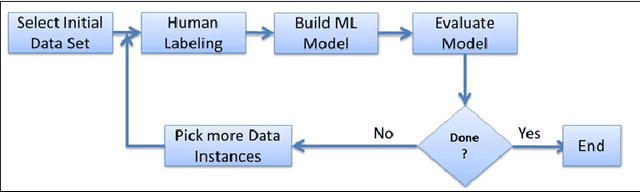

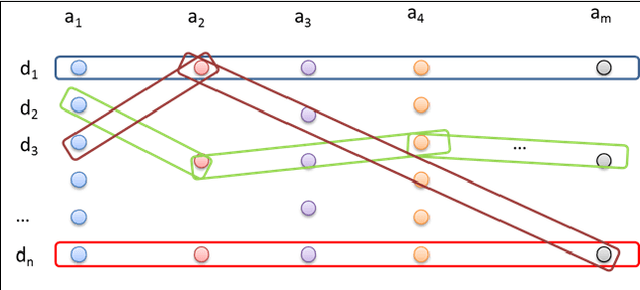

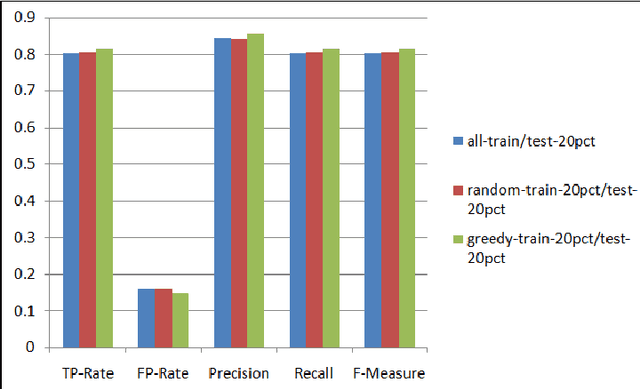

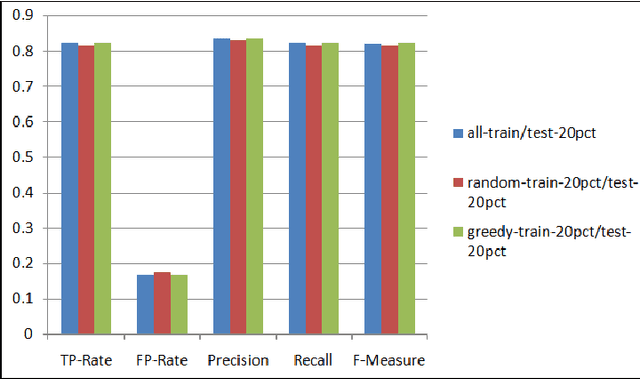

Abstract: As the number of applications that use machine learning algorithms increases, the need for labeled data to train such algorithms intensifies. Getting labels typically involves employing humans to do the annotation, which translates directly into training and labor costs. Crowdsourcing platforms have made labeling cheaper and faster, but they still involve significant costs, especially when the set of candidate data to be labeled is large. In this paper we describe a methodology and a prototype system that address this challenge for Web-scale problems in an industrial setting. We discuss ideas on how to efficiently select the data used to train machine learning algorithms in order to reduce cost. We show results that achieve good performance at reduced cost by carefully selecting which instances to label. Our proposed algorithm is presented as part of a framework for managing and generating training datasets, which includes, among other components, a human computation element.
