Alexander Zook

On the Use and Misuse of Absorbing States in Multi-agent Reinforcement Learning

Nov 10, 2021

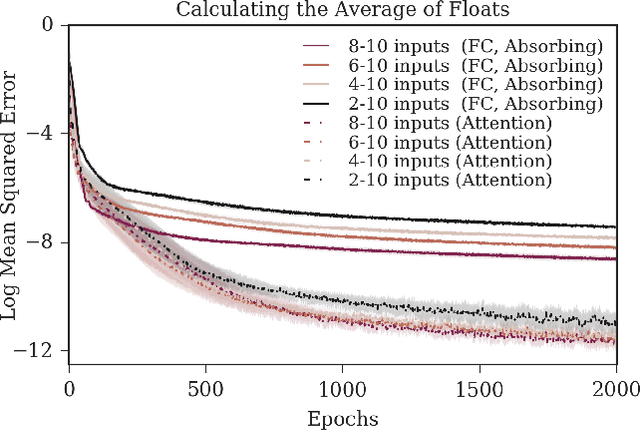

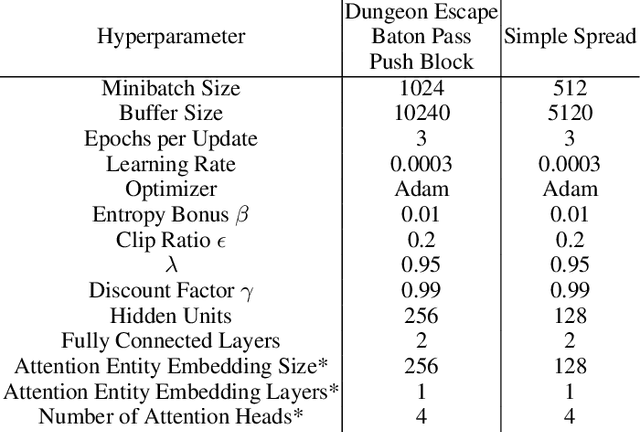

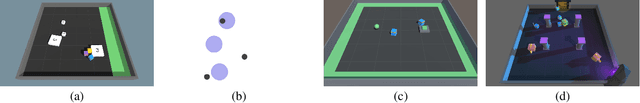

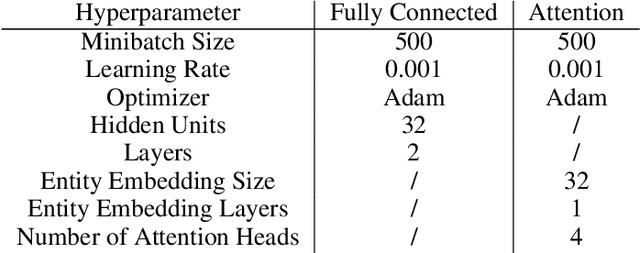

Abstract: The creation and destruction of agents in cooperative multi-agent reinforcement learning (MARL) is a critically under-explored area of research. Current MARL algorithms often assume that the number of agents within a group remains fixed throughout an experiment. However, in many practical problems, an agent may terminate before its teammates. This early termination issue presents a challenge: the terminated agent must learn from the group's success or failure, which occurs after its own termination. We refer to propagating value from rewards earned by remaining teammates to terminated agents as the Posthumous Credit Assignment problem. Current MARL methods handle this problem by placing these agents in an absorbing state until the entire group of agents reaches a termination condition. Although absorbing states enable existing algorithms and APIs to handle terminated agents without modification, they introduce practical problems of training efficiency and resource use. In this work, we first demonstrate that sample complexity increases with the quantity of absorbing states in a toy supervised learning task for a fully connected network, while attention is more robust to variable-size input. Then, we present a novel architecture for an existing state-of-the-art MARL algorithm which uses attention instead of a fully connected layer with absorbing states. Finally, we demonstrate that this novel architecture significantly outperforms the standard architecture on tasks in which agents are created or destroyed within episodes as well as standard multi-agent coordination tasks.

Monte-Carlo Tree Search for Simulation-based Strategy Analysis

Aug 04, 2019

Abstract: Games are often designed to shape player behavior in a desired way; however, it can be unclear how design decisions affect the space of behaviors in a game. Designers usually explore this space through human playtesting, which can be time-consuming and of limited effectiveness in exhausting the space of possible behaviors. In this paper, we propose the use of automated planning agents to simulate humans of varying skill levels to generate game playthroughs. Metrics can then be gathered from these playthroughs to evaluate the current game design and identify its potential flaws. We demonstrate this technique in two games: the popular word game Scrabble and a collectible card game of our own design named Cardonomicon. Using these case studies, we show how using simulated agents to model humans of varying skill levels allows us to extract metrics to describe game balance (in the case of Scrabble) and highlight potential design flaws (in the case of Cardonomicon).

Automatic Game Design via Mechanic Generation

Aug 04, 2019

Abstract: Game designs often center on the game mechanics: rules governing the logical evolution of the game. We seek to develop an intelligent system that generates computer games. As first steps towards this goal we present a composable and cross-domain representation for game mechanics that draws from AI planning action representations. We use a constraint solver to generate mechanics subject to design requirements on the form of those mechanics (what they do in the game). A planner takes a set of generated mechanics and tests whether those mechanics meet playability requirements (controlling how mechanics function in a game to affect player behavior). We demonstrate our system by modeling and generating mechanics in a role-playing game, platformer game, and combined role-playing-platformer game.

Automatic Playtesting for Game Parameter Tuning via Active Learning

Aug 04, 2019

Abstract: Game designers use human playtesting to gather feedback about game design elements when iteratively improving a game. Playtesting, however, is expensive: human testers must be recruited, playtest results must be aggregated and interpreted, and changes to game designs must be extrapolated from these results. Can automated methods reduce this expense? We show how active learning techniques can formalize and automate a subset of playtesting goals. Specifically, we focus on the low-level parameter tuning required to balance a game once the mechanics have been chosen. Through a case study on a shoot-'em-up game we demonstrate the efficacy of active learning to reduce the amount of playtesting needed to choose the optimal set of game parameters for two classes of (formal) design objectives. This work opens the potential for additional methods to reduce the human burden of performing playtesting for a variety of relevant design concerns.
