Alexander Libov

ChaI-TeA: A Benchmark for Evaluating Autocompletion of Interactions with LLM-based Chatbots

Dec 24, 2024

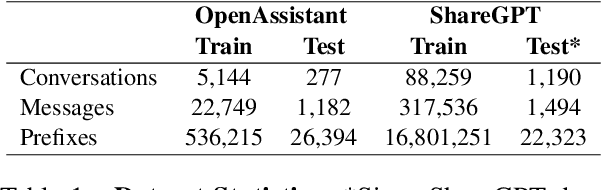

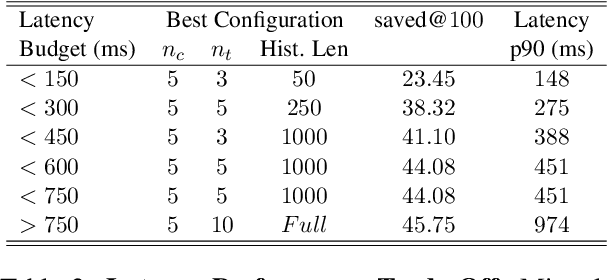

Abstract: The rise of LLMs has deflected a growing portion of human-computer interactions towards LLM-based chatbots. The remarkable abilities of these models allow users to interact using long, diverse natural language text covering a wide range of topics and styles. Phrasing these messages is a time- and effort-consuming task, calling for an autocomplete solution to assist users. We introduce the task of chatbot interaction autocomplete. We present ChaI-TeA: CHat InTEraction Autocomplete, an autocomplete evaluation framework for LLM-based chatbot interactions. The framework includes a formal definition of the task, coupled with suitable datasets and metrics. We use the framework to evaluate 9 models on the defined autocompletion task, finding that while current off-the-shelf models perform fairly, there is still much room for improvement, mainly in ranking of the generated suggestions. We provide insights for practitioners working on this task and open new research directions for researchers in the field. We release our framework to serve as a foundation for future research.

Evaluating D-MERIT of Partial-annotation on Information Retrieval

Jun 23, 2024

Abstract: Retrieval models are often evaluated on partially-annotated datasets. Each query is mapped to a few relevant texts and the remaining corpus is assumed to be irrelevant. As a result, models that successfully retrieve false negatives are punished in evaluation. Unfortunately, completely annotating all texts for every query is not resource efficient. In this work, we show that using partially-annotated datasets in evaluation can paint a distorted picture. We curate D-MERIT, a passage retrieval evaluation set from Wikipedia, aspiring to contain all relevant passages for each query. Queries describe a group (e.g., "journals about linguistics") and relevant passages are evidence that entities belong to the group (e.g., a passage indicating that Language is a journal about linguistics). We show that evaluating on a dataset containing annotations for only a subset of the relevant passages might result in a misleading ranking of the retrieval systems and that as more relevant texts are included in the evaluation set, the rankings converge. We propose our dataset as a resource for evaluation and our study as a recommendation for balance between resource-efficiency and reliable evaluation when annotating evaluation sets for text retrieval.

"Alexa, what do you do for fun?" Characterizing playful requests with virtual assistants

May 12, 2021

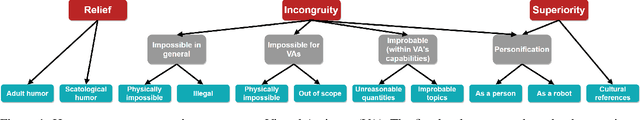

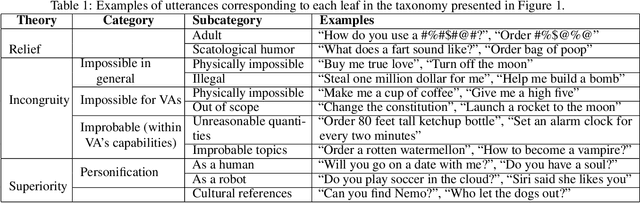

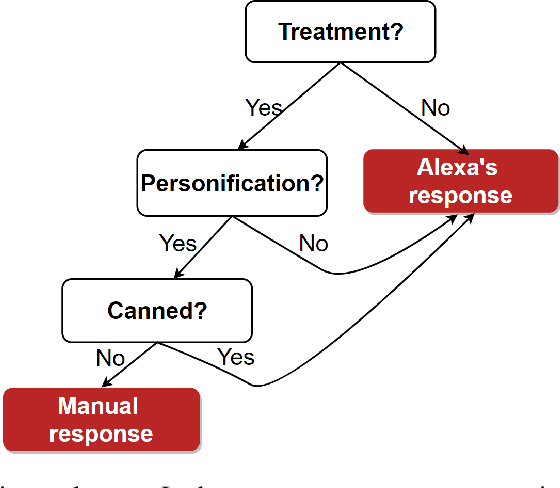

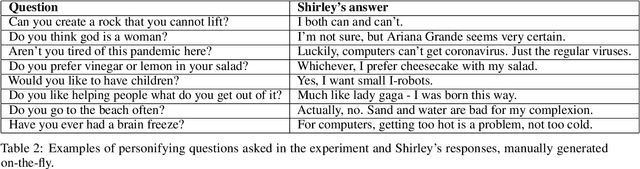

Abstract: Virtual assistants such as Amazon's Alexa, Apple's Siri, Google Home, and Microsoft's Cortana are becoming ubiquitous in our daily lives and successfully help users in various daily tasks, such as making phone calls or playing music. Yet, they still struggle with playful utterances, which are not meant to be interpreted literally. Examples include jokes or absurd requests or questions such as, "Are you afraid of the dark?", "Who let the dogs out?", or "Order a zillion gummy bears". Today, virtual assistants often return irrelevant answers to such utterances, except for hard-coded ones addressed by canned replies. To address the challenge of automatically detecting playful utterances, we first characterize the different types of playful human-virtual assistant interaction. We introduce a taxonomy of playful requests rooted in theories of humor and refined by analyzing real-world traffic from Alexa. We then focus on one node, personification, where users refer to the virtual assistant as a person ("What do you do for fun?"). Our conjecture is that understanding such utterances will improve user experience with virtual assistants. We conducted a Wizard-of-Oz user study and showed that endowing virtual assistants with the ability to identify humorous opportunities indeed has the potential to increase user satisfaction. We hope this work will contribute to the understanding of the landscape of the problem and inspire novel ideas and techniques towards the vision of giving virtual assistants a sense of humor.
