Alexander Krawciw

Lunar Rover Cargo Transport: Mission Concept and Field Test

Jan 06, 2026

Abstract: In future operations on the lunar surface, automated vehicles will be required to transport cargo between known locations. Such vehicles must be able to navigate precisely in safe regions to avoid natural hazards, human-constructed infrastructure, and dangerous dark shadows. Rovers must be able to park their cargo autonomously within a small tolerance to achieve a successful pickup and delivery. In this field test, Lidar Teach and Repeat provides an ideal autonomy solution for transporting cargo in this way. A one-tonne path-to-flight rover was driven in a semi-autonomous remote-control mode to create a network of safe paths. Once the route was taught, the rover immediately repeated the entire network of paths autonomously while carrying cargo. The closed-loop performance is accurate enough to align the vehicle to the cargo and pick it up. This field report describes a two-week deployment at the Canadian Space Agency's Analogue Terrain, culminating in a simulated lunar operation to evaluate the system's capabilities. Successful cargo collection and delivery were demonstrated in harsh environmental conditions.

UAV See, UGV Do: Aerial Imagery and Virtual Teach Enabling Zero-Shot Ground Vehicle Repeat

May 22, 2025

Abstract: This paper presents Virtual Teach and Repeat (VirT&R): an extension of the Teach and Repeat (T&R) framework that enables GPS-denied, zero-shot autonomous ground vehicle navigation in untraversed environments. VirT&R leverages aerial imagery captured for a target environment to train a Neural Radiance Field (NeRF) model so that dense point clouds and photo-textured meshes can be extracted. The NeRF mesh is used to create a high-fidelity simulation of the environment for piloting an unmanned ground vehicle (UGV) to virtually define a desired path. The mission can then be executed in the actual target environment by using NeRF-derived point cloud submaps associated along the path and an existing LiDAR Teach and Repeat (LT&R) framework. We benchmark the repeatability of VirT&R on over 12 km of autonomous driving data using physical markings that allow a sim-to-real lateral path-tracking error to be obtained and compared with LT&R. VirT&R achieved measured root mean squared errors (RMSE) of 19.5 cm and 18.4 cm in two different environments, which are slightly less than one tire width (24 cm) on the robot used for testing, and respective maximum errors were 39.4 cm and 47.6 cm. This was done using only the NeRF-derived teach map, demonstrating that VirT&R has similar closed-loop path-tracking performance to LT&R but does not require a human to manually teach the path to the UGV in the actual environment.

Toward Teach and Repeat Across Seasonal Deep Snow Accumulation

May 02, 2025

Abstract: Teach and repeat is a rapid way to achieve autonomy in challenging terrain and off-road environments. A human operator pilots the vehicle to create a network of paths that are mapped and associated with odometry. Immediately after teaching, the system can drive autonomously within its tracks. This precision lets operators remain confident that the robot will follow a traversable route. However, this operational paradigm has rarely been explored in off-road environments that change significantly through seasonal variation. This paper presents preliminary field trials using lidar and radar implementations of teach and repeat. Using a subset of the data from the upcoming FoMo dataset, we attempted to repeat routes that were 4 days, 44 days, and 113 days old. Lidar teach and repeat demonstrated a stronger ability to localize when the ground points were removed. FMCW radar was often able to localize on older maps, but only with small deviations from the taught path. Additionally, we highlight specific cases where radar localization failed with recent maps due to the high pitch or roll of the vehicle. We present lessons learned during the field deployment and identify areas to improve to achieve reliable teach and repeat with seasonal changes in the environment. Please follow the dataset at https://norlab-ulaval.github.io/FoMo-website for updates and information on the data release.

Radar Teach and Repeat: Architecture and Initial Field Testing

Sep 16, 2024

Abstract: Frequency-modulated continuous-wave (FMCW) scanning radar has emerged as an alternative to spinning LiDAR for state estimation on mobile robots. Radar's longer wavelength is less affected by small particulates, providing operational advantages in challenging environments such as dust, smoke, and fog. This paper presents Radar Teach and Repeat (RT&R): a full-stack radar system for long-term off-road robot autonomy. RT&R can drive routes reliably in off-road cluttered areas without any GPS. We benchmark the radar system's closed-loop path-tracking performance and compare it to its 3D LiDAR counterpart. 11.8 km of autonomous driving was completed without interventions using only radar and gyro for navigation. RT&R was evaluated on different routes with progressively less structured scene geometry. RT&R achieved lateral path-tracking root mean squared errors (RMSE) of 5.6 cm, 7.5 cm, and 12.1 cm as the routes became more challenging. On the robot we used for testing, these RMSE values are less than half of the width of one tire (24 cm). These same routes have worst-case errors of 21.7 cm, 24.0 cm, and 43.8 cm. We conclude that radar is a viable alternative to LiDAR for long-term autonomy in challenging off-road scenarios. The implementation of RT&R is open-source and available at: https://github.com/utiasASRL/vtr3.
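
The abstract above summarizes path-tracking quality with two statistics: the lateral RMSE and the worst-case lateral error. As a minimal sketch of how such figures could be computed from logged signed lateral offsets, consider the following. The function name `lateral_error_stats` and the sample values are hypothetical and are not taken from the paper or from the vtr3 code base; only the 24 cm tire width comes from the abstract.

```python
import math


def lateral_error_stats(errors_m):
    """Return (rmse, max_abs) for signed lateral path-tracking errors in metres."""
    if not errors_m:
        raise ValueError("need at least one error sample")
    # Root mean squared error: square each sample, average, take the square root.
    rmse = math.sqrt(sum(e * e for e in errors_m) / len(errors_m))
    # Worst-case error: largest deviation to either side of the taught path.
    max_abs = max(abs(e) for e in errors_m)
    return rmse, max_abs


# Hypothetical samples (metres), invented for illustration only.
samples = [0.03, -0.04, 0.00, 0.05, -0.12]
rmse, worst = lateral_error_stats(samples)

TIRE_WIDTH_M = 0.24  # tire width quoted in the abstract
print(f"RMSE = {rmse * 100:.1f} cm, worst case = {worst * 100:.1f} cm")
print("RMSE under half a tire width:", rmse < TIRE_WIDTH_M / 2)
```

Reporting both numbers is useful because RMSE averages over the whole route, while the worst-case value shows how far the vehicle strayed at its single largest deviation.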

FoMo: A Proposal for a Multi-Season Dataset for Robot Navigation in Forêt Montmorency

Apr 19, 2024

Abstract: In this paper, we propose the FoMo (Forêt Montmorency) dataset: a comprehensive, multi-season data collection. Located in the Montmorency Forest, Quebec, Canada, our dataset will capture a rich variety of sensory data over six distinct trajectories totaling 6 kilometers, repeated through different seasons to accumulate 42 kilometers of recorded data. The boreal forest environment increases the diversity of datasets for mobile robot navigation. This proposed dataset will feature a broad array of sensor modalities, including lidar, radar, and a navigation-grade Inertial Measurement Unit (IMU), against the backdrop of challenging boreal forest conditions. Notably, the FoMo dataset will be distinguished by its inclusion of seasonal variations, such as changes in tree canopy and snow depth up to 2 meters, presenting new challenges for robot navigation algorithms. Alongside, we will offer a centimeter-level accurate ground truth, obtained through Post Processed Kinematic (PPK) Global Navigation Satellite System (GNSS) correction, facilitating precise evaluation of odometry and localization algorithms. This work aims to spur advancements in autonomous navigation, enabling the development of robust algorithms capable of handling the dynamic, unstructured environments characteristic of boreal forests. With a public odometry and localization leaderboard and a dedicated software suite, we invite the robotics community to engage with the FoMo dataset by exploring new frontiers in robot navigation under extreme environmental variations. We seek feedback from the community based on this proposal to make the dataset as useful as possible. For further details and supplementary materials, please visit https://norlab-ulaval.github.io/FoMo-website/.

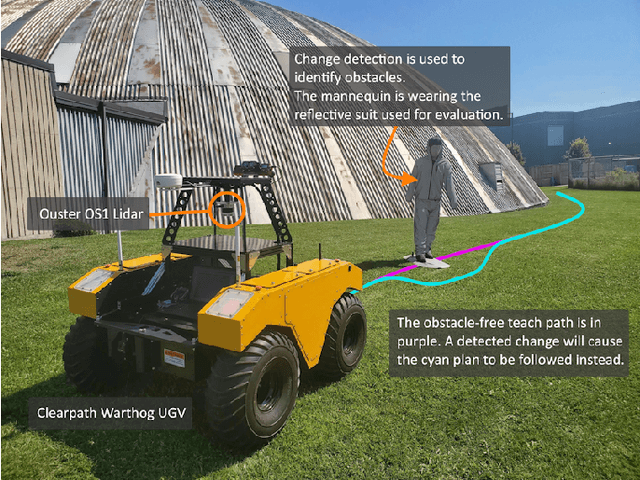

LaserSAM: Zero-Shot Change Detection Using Visual Segmentation of Spinning LiDAR

Feb 15, 2024

Abstract: This paper presents an approach for applying camera perception techniques to spinning LiDAR data. To improve the robustness of long-term change detection from a 3D LiDAR, range and intensity information are rendered into virtual perspectives using a pinhole camera model. Hue-saturation-value image encoding is used to colourize the images by range and near-IR intensity. The LiDAR's active scene illumination makes it invariant to ambient brightness, which enables night-to-day change detection without additional processing. Using the colourized, perspective range image allows existing foundation models to detect semantic regions. Specifically, the Segment Anything Model detects semantically similar regions in both a previously acquired map and live view from a path-repeating robot. By comparing the masks in both views, changes in the live scan are detected. Results indicate that the Segment Anything Model is capable of accurately capturing the shape of arbitrary changes introduced into scenes. The system achieves an object recall of 82.6% and a precision of 47.0%. Changes can be detected through day-to-night illumination variations reliably. After pixel-level masks are generated, the one-to-one correspondence with 3D points means that the 2D masks can be directly used to recover the 3D location of the changes. Eventually, the detected 3D changes can be avoided by treating them as obstacles in a local motion planner.

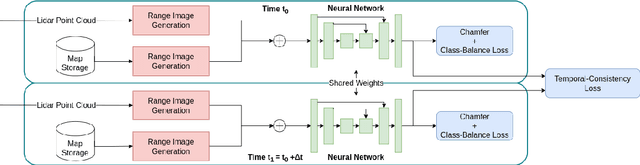

Change of Scenery: Unsupervised LiDAR Change Detection for Mobile Robots

Sep 19, 2023

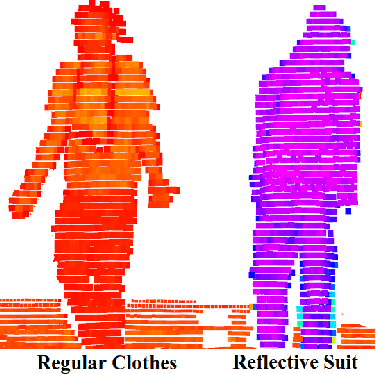

Abstract: This paper presents a fully unsupervised deep change detection approach for mobile robots with 3D LiDAR. In unstructured environments, it is infeasible to define a closed set of semantic classes. Instead, semantic segmentation is reformulated as binary change detection. We develop a neural network, RangeNetCD, that uses an existing point-cloud map and a live LiDAR scan to detect scene changes with respect to the map. Using a novel loss function, existing point-cloud semantic segmentation networks can be trained to perform change detection without any labels or assumptions about local semantics. We demonstrate the performance of this approach on data from challenging terrains; mean intersection over union (mIoU) scores range between 67.4% and 82.2% depending on the amount of environmental structure. This outperforms the geometric baseline used in all experiments. The neural network runs faster than 10 Hz and is integrated into a robot's autonomy stack to allow safe navigation around obstacles that intersect the planned path. In addition, a novel method for the rapid automated acquisition of per-point ground-truth labels is described. Covering changed parts of the scene with retroreflective materials and applying a threshold filter to the intensity channel of the LiDAR allows for quantitative evaluation of the change detector.
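
The labelling idea in the abstract above (retroreflective material plus an intensity threshold) and the mIoU metric can be illustrated with a small sketch. Everything here is an assumption made for illustration: the function names, the threshold value, the sample intensities, and the choice to average IoU over the two classes "changed" and "unchanged" are hypothetical and are not taken from the paper or from RangeNetCD.

```python
def labels_from_intensity(intensities, threshold):
    """Mark a point as 'changed' when its LiDAR intensity meets the threshold.

    Assumes retroreflective material returns much higher intensity than the
    rest of the scene, so a single threshold separates the two groups.
    """
    return [value >= threshold for value in intensities]


def iou(predicted, truth):
    """Intersection over union of two equal-length boolean label lists."""
    if len(predicted) != len(truth):
        raise ValueError("label lists must have the same length")
    intersection = sum(p and t for p, t in zip(predicted, truth))
    union = sum(p or t for p, t in zip(predicted, truth))
    # Two empty sets are treated as a perfect match.
    return intersection / union if union else 1.0


def mean_iou(predicted, truth):
    """Average the IoU of the 'changed' and 'unchanged' classes (assumed convention)."""
    changed = iou(predicted, truth)
    unchanged = iou([not p for p in predicted], [not t for t in truth])
    return (changed + unchanged) / 2


# Hypothetical normalized intensities for four points; threshold is assumed.
truth = labels_from_intensity([0.10, 0.90, 0.95, 0.20], threshold=0.8)
predicted = [False, True, False, False]  # made-up detector output
print(f"mIoU = {mean_iou(predicted, truth):.3f}")
```

Because the labels come from a per-point threshold, each point in the scan receives a ground-truth value without manual annotation, which is what makes a per-point metric such as IoU straightforward to evaluate.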
