Akshay Hinduja

AONeuS: A Neural Rendering Framework for Acoustic-Optical Sensor Fusion

Feb 05, 2024

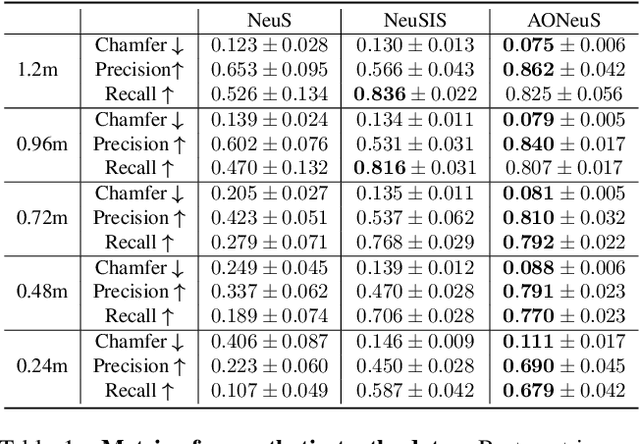

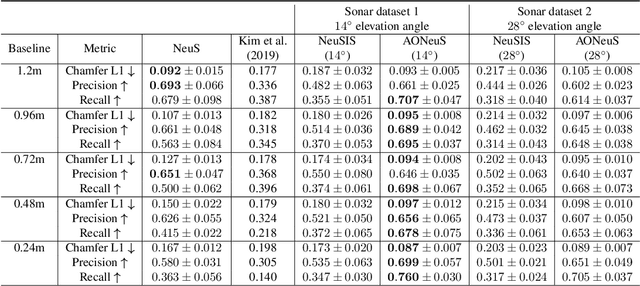

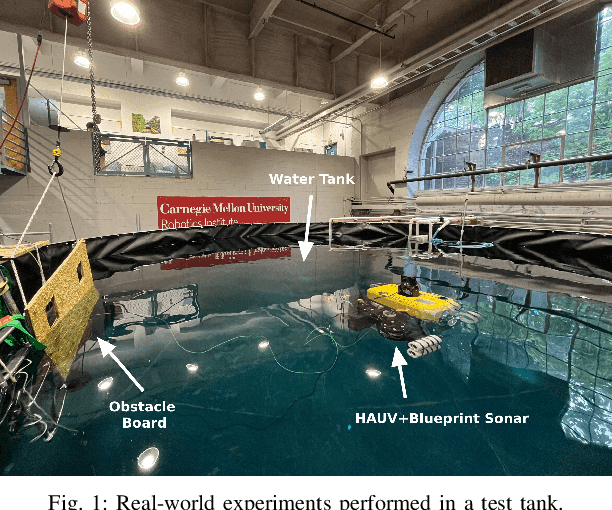

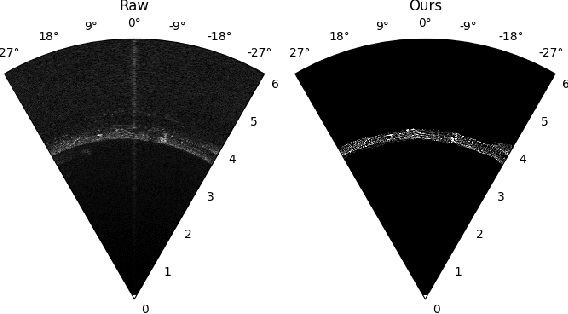

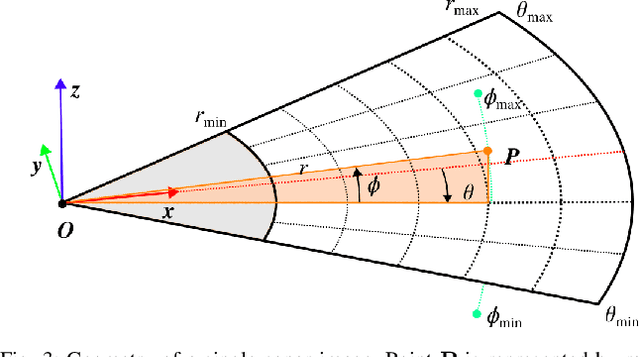

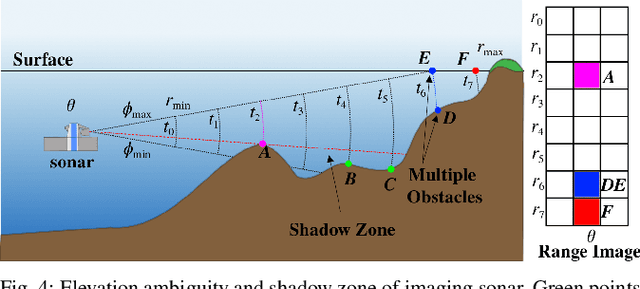

Abstract: Underwater perception and 3D surface reconstruction are challenging problems with broad applications in construction, security, marine archaeology, and environmental monitoring. Treacherous operating conditions, fragile surroundings, and limited navigation control often dictate that submersibles restrict their range of motion and, thus, the baseline over which they can capture measurements. In the context of 3D scene reconstruction, it is well known that smaller baselines make reconstruction more challenging. Our work develops a physics-based multimodal acoustic-optical neural surface reconstruction framework (AONeuS) capable of effectively integrating high-resolution RGB measurements with low-resolution depth-resolved imaging sonar measurements. By fusing these complementary modalities, our framework can reconstruct accurate high-resolution 3D surfaces from measurements captured over heavily restricted baselines. Through extensive simulations and in-lab experiments, we demonstrate that AONeuS dramatically outperforms recent RGB-only and sonar-only inverse-differentiable-rendering-based surface reconstruction methods. A website visualizing the results of our paper is located at this address: https://aoneus.github.io/

Multi-Radar Inertial Odometry for 3D State Estimation using mmWave Imaging Radar

Nov 15, 2023

Abstract: State estimation is a crucial component for the successful implementation of robotic systems, relying on sensors such as cameras, LiDAR, and IMUs. However, in real-world scenarios, the performance of these sensors is degraded by challenging environments, e.g., adverse weather conditions and low-light scenarios. The emerging 4D imaging radar technology is capable of providing robust perception in adverse conditions. Despite its potential, challenges remain for indoor settings where noisy radar data does not present clear geometric features. Moreover, disparities in radar data resolution and field of view (FOV) can lead to inaccurate measurements. While prior research has explored radar-inertial odometry based on Doppler velocity information, challenges remain for the estimation of 3D motion because of the discrepancy in the FOV and resolution of the radar sensor. In this paper, we address Doppler velocity measurement uncertainties. We present a method to optimize body frame velocity while managing Doppler velocity uncertainty. Based on our observations, we propose a dual imaging radar configuration to mitigate the challenge of discrepancy in radar data. To attain high-precision 3D state estimation, we introduce a strategy that seamlessly integrates radar data with a consumer-grade IMU sensor using fixed-lag smoothing optimization. Finally, we evaluate our approach using real-world 3D motion data.
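To make the idea of estimating body-frame velocity from uncertain Doppler measurements concrete, here is a minimal sketch of a generic weighted least-squares estimator. It is not the method from the paper. It assumes static targets, unit direction vectors already expressed in the body frame, the sign convention `doppler = -direction · velocity`, and a per-point standard deviation `sigma` used as an inverse-variance weight. The names `estimate_body_velocity`, `unit`, `det3`, and `solve3` are hypothetical.

```python
import math


def unit(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("directions do not constrain all three velocity axes")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(det3(m) / d)
    return solution


def estimate_body_velocity(directions, dopplers, sigmas):
    """Weighted least-squares body velocity from Doppler returns.

    Assumed model (static scene): doppler_i = -direction_i . v.
    Each point is weighted by 1 / sigma_i^2, so noisier returns count less.
    Solves the normal equations (sum w d d^T) v = -(sum w d doppler).
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for d, vr, sigma in zip(directions, dopplers, sigmas):
        w = 1.0 / (sigma * sigma)
        for r in range(3):
            b[r] -= w * d[r] * vr
            for c in range(3):
                a[r][c] += w * d[r] * d[c]
    return solve3(a, b)
```

Under these assumptions, points from two radars could be stacked into the same lists once their directions are rotated into the common body frame, which is one way a second sensor can help constrain an axis that a single narrow field of view observes poorly.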

SONIC: Sonar Image Correspondence using Pose Supervised Learning for Imaging Sonars

Oct 23, 2023

Abstract: In this paper, we address the challenging problem of data association for underwater SLAM through a novel method for sonar image correspondence using learned features. We introduce SONIC (SONar Image Correspondence), a pose-supervised network designed to yield robust feature correspondence capable of withstanding viewpoint variations. The inherent complexity of the underwater environment stems from the dynamic and frequently limited visibility conditions, restricting vision to a few meters of often featureless expanses. This makes camera-based systems suboptimal in most open-water application scenarios. Consequently, multibeam imaging sonars emerge as the preferred choice for perception sensors. However, they too are not without their limitations. While imaging sonars offer superior long-range visibility compared to cameras, their measurements can appear different from varying viewpoints. This inherent variability presents formidable challenges in data association, particularly for feature-based methods. Our method demonstrates significantly better performance in generating correspondences for sonar images, which will pave the way for more accurate loop closure constraints and sonar-based place recognition. Code as well as simulated and real-world datasets will be made public to facilitate further development in the field.

Acoustic Localization and Communication Using a MEMS Microphone for Low-cost and Low-power Bio-inspired Underwater Robots

Oct 03, 2022

Abstract: Having accurate localization capabilities is one of the fundamental requirements of autonomous robots. For underwater vehicles, the choices for effective localization are limited due to limitations of GPS use in water and poor environmental visibility that makes camera-based methods ineffective. Popular inertial navigation methods for underwater localization using Doppler-velocity log sensors, sonar, high-end inertial navigation systems, or acoustic positioning systems require bulky, expensive hardware that is incompatible with low-cost, bio-inspired underwater robots. In this paper, we introduce an approach for underwater robot localization inspired by GPS methods known as acoustic pseudoranging. Our method allows us to potentially localize multiple bio-inspired robots equipped with commonly available micro-electro-mechanical systems (MEMS) microphones. This is achieved through estimating the time difference of arrival of acoustic signals sent simultaneously through four speakers with a known constellation geometry. We also leverage the same acoustic framework to perform one-way communication with the robot to execute some primitive motions. To our knowledge, this is the first application of the approach for the on-board localization of small bio-inspired robots in water. Hardware schematics and the accompanying code are released to aid further development in the field.
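The pseudoranging idea described in this abstract can be illustrated with a small sketch. This is not the released code. It assumes a planar (2D) setup, four speakers at known positions that emit at the same unknown instant, noise-free arrival timestamps on the robot's own clock, and a nominal sound speed of 1480 m/s. Subtracting the range equation of speaker 0 from the other three gives a system that is linear in the robot position and the unknown emission time. The names `localize`, `simulate_arrivals`, `det3`, and `solve3` are hypothetical.

```python
import math

SPEED_OF_SOUND = 1480.0  # m/s, assumed nominal value for water


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-9:
        raise ValueError("degenerate geometry: position is not observable")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(det3(m) / d)
    return solution


def simulate_arrivals(speakers, robot_xy, emit_time, c=SPEED_OF_SOUND):
    """Arrival time of each speaker's signal at the robot (noise-free)."""
    return [emit_time + math.hypot(robot_xy[0] - sx, robot_xy[1] - sy) / c
            for sx, sy in speakers]


def localize(speakers, arrival_times, c=SPEED_OF_SOUND):
    """Recover (x, y, emit_time) from four arrival timestamps.

    With pseudorange rho_i = c * t_i and offset beta = c * emit_time,
    (rho_i - beta)^2 = |p - s_i|^2. Differencing against speaker 0 gives
      2 (s_i - s_0) . p - 2 (rho_i - rho_0) beta
          = |s_i|^2 - |s_0|^2 - rho_i^2 + rho_0^2,
    where rho_i - rho_0 is c times the time difference of arrival.
    """
    rho = [c * t for t in arrival_times]
    x0, y0 = speakers[0]
    a, b = [], []
    for (xi, yi), rho_i in zip(speakers[1:], rho[1:]):
        a.append([2.0 * (xi - x0), 2.0 * (yi - y0), -2.0 * (rho_i - rho[0])])
        b.append(xi * xi + yi * yi - x0 * x0 - y0 * y0
                 - rho_i * rho_i + rho[0] * rho[0])
    x, y, beta = solve3(a, b)
    return x, y, beta / c


if __name__ == "__main__":
    speakers = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
    times = simulate_arrivals(speakers, (1.0, 2.0), emit_time=0.25)
    print(localize(speakers, times))
```

Because only the receiver needs to listen, any number of robots can run the same computation on the same broadcast, which matches the abstract's point about localizing multiple robots. With real, noisy timestamps a least-squares or iterative refinement would be a natural next step. Some positions (for example, the exact center of a rectangular constellation) make this particular linear system singular, which the sketch reports as an error.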

Conditional GANs for Sonar Image Filtering with Applications to Underwater Occupancy Mapping

Sep 23, 2022

Abstract: Underwater robots typically rely on acoustic sensors like sonar to perceive their surroundings. However, these sensors are often inundated with multiple sources and types of noise, which makes using raw data for any meaningful inference with features, objects, or boundary returns very difficult. While several conventional methods of dealing with noise exist, their success rates are unsatisfactory. This paper presents a novel application of conditional Generative Adversarial Networks (cGANs) to train a model to produce noise-free sonar images, outperforming several conventional filtering methods. Estimating free space is crucial for autonomous robots performing active exploration and mapping. Thus, we apply our approach to the task of underwater occupancy mapping and show superior free and occupied space inference when compared to conventional methods.
