Tianxiang Lin

Acoustic Neural 3D Reconstruction Under Pose Drift

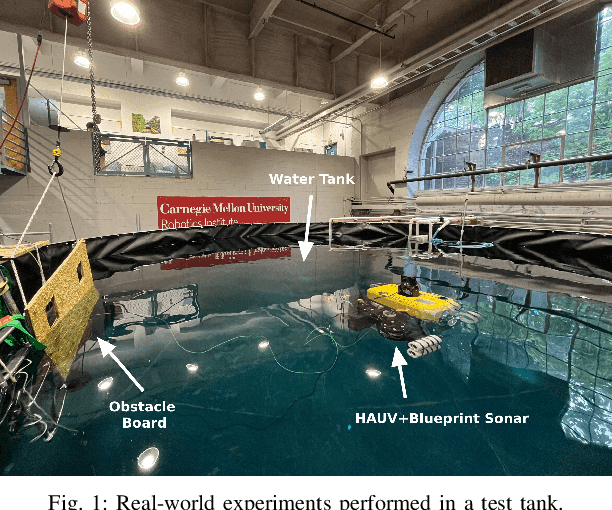

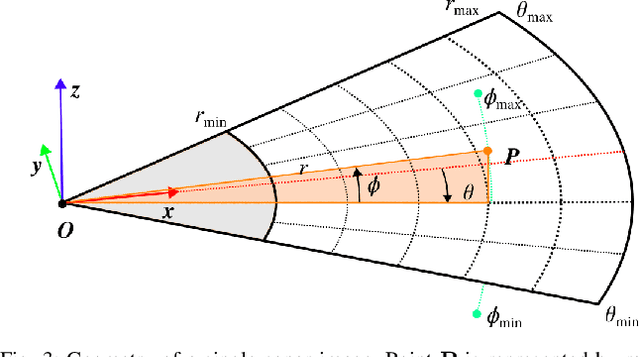

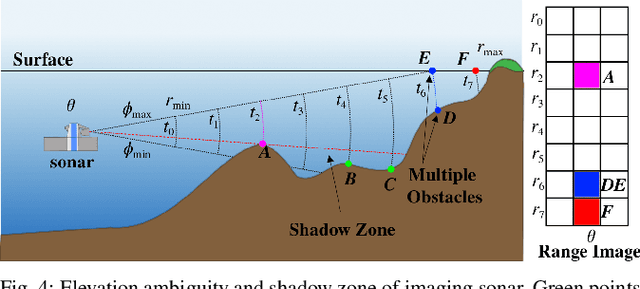

Mar 11, 2025

Abstract: We consider the problem of optimizing neural implicit surfaces for 3D reconstruction using acoustic images collected with drifting sensor poses. The accuracy of current state-of-the-art 3D acoustic modeling algorithms is highly dependent on accurate pose estimation; small errors in sensor pose can lead to severe reconstruction artifacts. In this paper, we propose an algorithm that jointly optimizes the neural scene representation and sonar poses. Our algorithm does so by parameterizing the 6DoF poses as learnable parameters and backpropagating gradients through the neural renderer and implicit representation. We validated our algorithm on both real and simulated datasets. It produces high-fidelity 3D reconstructions even under significant pose drift.
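The idea of treating poses as learnable parameters can be illustrated with a deliberately small toy problem. The sketch below is not the paper's implementation: it assumes translation-only 2D poses instead of 6DoF poses, a handful of scene points instead of a neural implicit surface, and hand-written gradients instead of backpropagation through a renderer. The function name `joint_optimize` and all data values are hypothetical. It shows only that gradient descent over both the scene and the poses can undo drift in the initial pose estimates when one pose is held fixed as a reference.

```python
# Toy illustration only (assumed setup, not the paper's method):
# poses are 2D translations t_i, the "scene" is a set of 2D points p_j,
# and each measurement z_ij is point j as seen from pose i: z_ij = p_j - t_i.

def joint_optimize(measurements, init_poses, init_points, lr=0.1, steps=2000):
    """Minimize sum ||(p_j - t_i) - z_ij||^2 over poses and points.

    Pose 0 is held fixed to anchor the global frame; without an anchor
    the whole solution could shift freely.
    """
    poses = [list(t) for t in init_poses]
    points = [list(p) for p in init_points]
    for _ in range(steps):
        g_pose = [[0.0, 0.0] for _ in poses]
        g_point = [[0.0, 0.0] for _ in points]
        for (i, j), z in measurements.items():
            for k in range(2):
                r = (points[j][k] - poses[i][k]) - z[k]  # residual
                g_point[j][k] += 2.0 * r   # d(loss)/d(p_j)
                g_pose[i][k] -= 2.0 * r    # d(loss)/d(t_i)
        for j, g in enumerate(g_point):
            for k in range(2):
                points[j][k] -= lr * g[k]
        for i, g in enumerate(g_pose):
            if i == 0:
                continue  # reference pose stays fixed
            for k in range(2):
                poses[i][k] -= lr * g[k]
    return poses, points


# Hypothetical ground truth used to synthesize noise-free measurements.
true_poses = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.5)]
true_points = [(1.0, 2.0), (3.0, 1.0), (0.0, -1.0)]
measurements = {
    (i, j): (p[0] - t[0], p[1] - t[1])
    for i, t in enumerate(true_poses)
    for j, p in enumerate(true_points)
}

# Drifted initial poses (as if from odometry) and an uninformed scene guess.
drifted_poses = [(0.0, 0.0), (1.3, -0.2), (2.6, 0.1)]
init_points = [(0.0, 0.0)] * len(true_points)

est_poses, est_points = joint_optimize(measurements, drifted_poses, init_points)
```

In this toy setting the loss is a linear least-squares problem, so plain gradient descent with a small step size suffices. The real problem described in the abstract is non-convex and relies on automatic differentiation through the renderer.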

Conditional GANs for Sonar Image Filtering with Applications to Underwater Occupancy Mapping

Sep 23, 2022

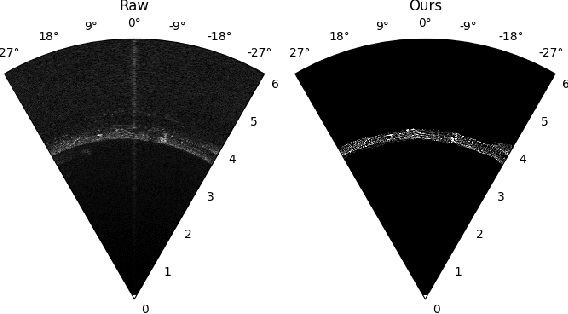

Abstract: Underwater robots typically rely on acoustic sensors like sonar to perceive their surroundings. However, these sensors are often inundated with multiple sources and types of noise, which makes using raw data for any meaningful inference with features, objects, or boundary returns very difficult. While several conventional methods of dealing with noise exist, their success rates are unsatisfactory. This paper presents a novel application of conditional Generative Adversarial Networks (cGANs) to train a model to produce noise-free sonar images, outperforming several conventional filtering methods. Estimating free space is crucial for autonomous robots performing active exploration and mapping. Thus, we apply our approach to the task of underwater occupancy mapping and show superior free and occupied space inference when compared to conventional methods.
