Akash Dhamasia

Reliable Multimodal Trajectory Prediction via Error Aligned Uncertainty Optimization

Dec 09, 2022

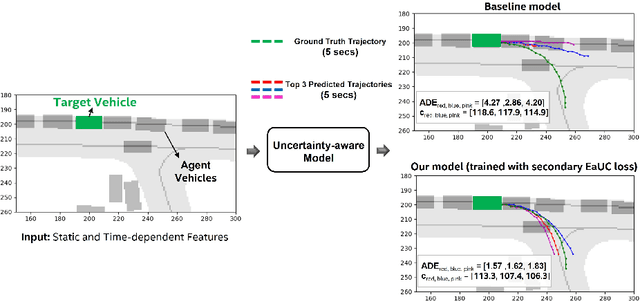

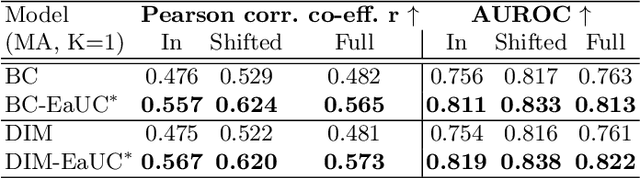

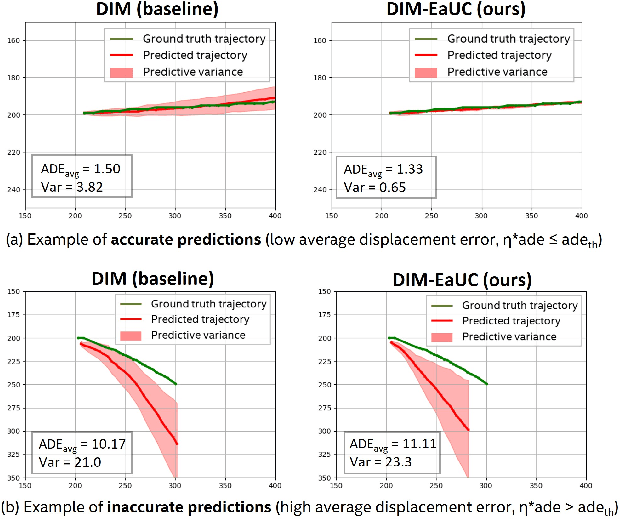

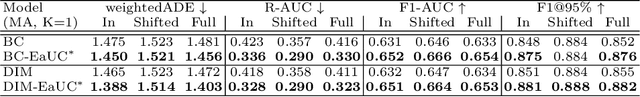

Abstract: Reliable uncertainty quantification in deep neural networks is crucial in safety-critical applications such as automated driving, where it supports trustworthy and informed decision-making. Assessing the quality of uncertainty estimates is challenging because no ground truth for uncertainty is available. Ideally, in a well-calibrated model, uncertainty estimates should correlate perfectly with model error. We propose a novel error-aligned uncertainty optimization method and introduce a trainable loss function that guides models to yield high-quality uncertainty estimates aligned with the model error. Our approach targets continuous structured prediction and regression tasks, and is evaluated on multiple datasets, including a large-scale vehicle motion prediction task involving real-world distributional shifts. We demonstrate that our method improves average displacement error by 1.69% and 4.69%, and the correlation of uncertainty with model error by 17.22% and 19.13%, as quantified by the Pearson correlation coefficient, on two state-of-the-art baselines.
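The two evaluation quantities named in the abstract, average displacement error and the Pearson correlation between uncertainty and error, can be illustrated with a minimal sketch. This is not the authors' code or their loss function: the function names `average_displacement_error` and `pearson`, and the toy numbers in the usage note, are assumptions made for illustration only.

```python
import math


def average_displacement_error(pred, truth):
    """Mean Euclidean distance between predicted and true (x, y) waypoints."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("need two non-empty trajectories of equal length")
    return sum(math.dist(p, t) for p, t in zip(pred, truth)) / len(truth)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(vx * vy)
```

For example, given hypothetical per-sample errors `[0.5, 1.0, 2.0, 4.0]` and uncertainties `[1.0, 2.0, 4.0, 8.0]`, `pearson` returns a value at (or within rounding of) 1, the ideal case the abstract describes, in which uncertainty tracks error perfectly.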

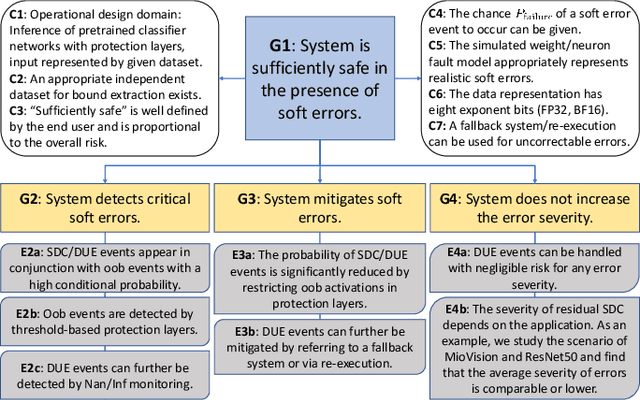

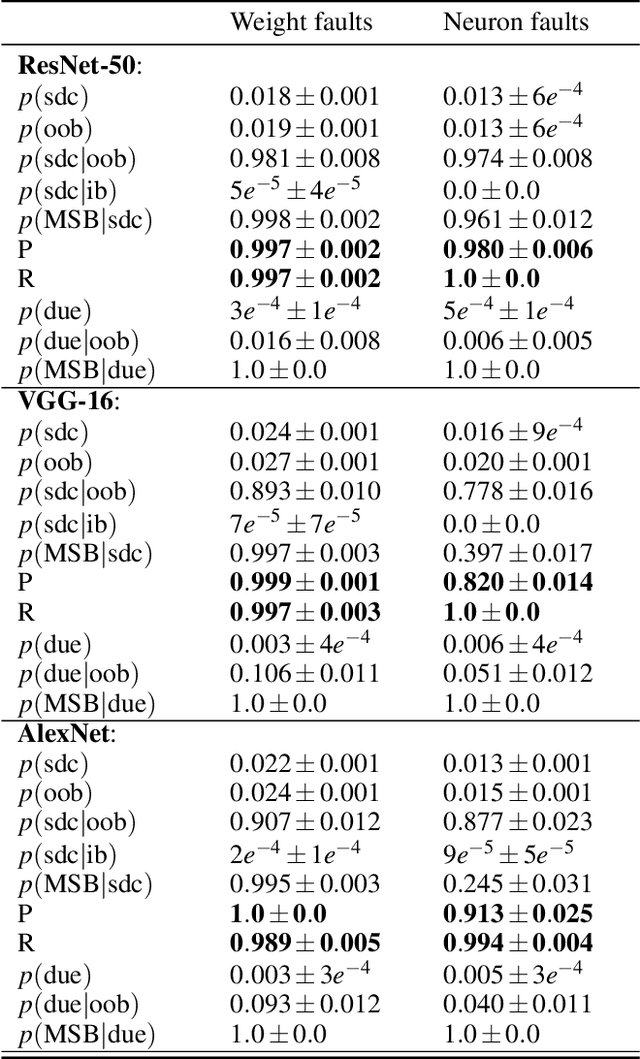

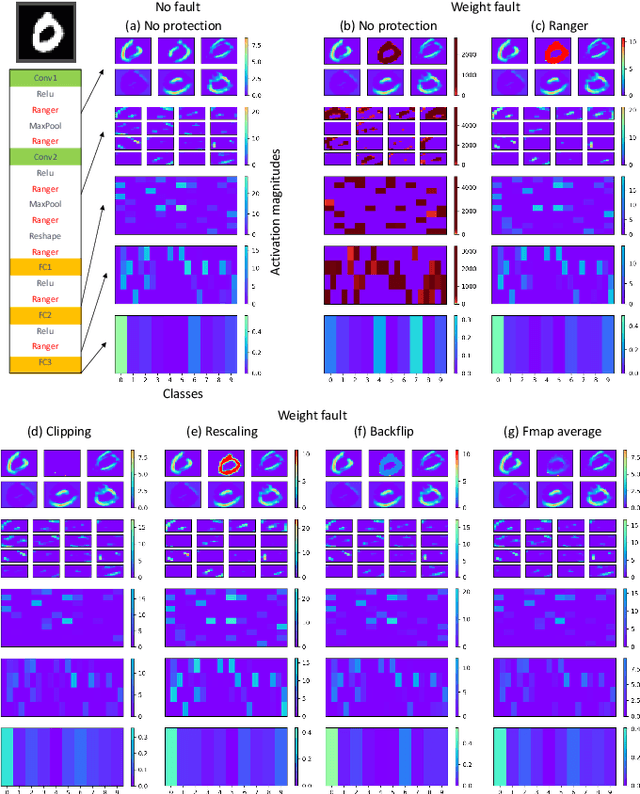

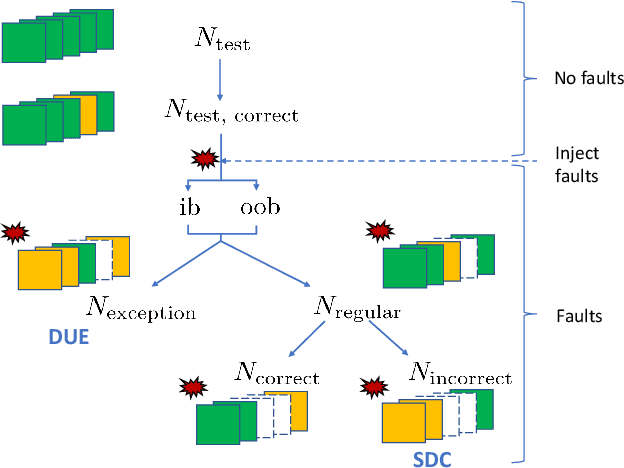

Towards a Safety Case for Hardware Fault Tolerance in Convolutional Neural Networks Using Activation Range Supervision

Aug 16, 2021

Abstract: Convolutional neural networks (CNNs) have become an established part of numerous safety-critical computer vision applications, including human-robot interaction and automated driving. Real-world implementations will need to guarantee their robustness against hardware soft errors that corrupt the underlying platform memory. Based on the previously observed efficacy of activation clipping techniques, we build a prototypical safety case for classifier CNNs by demonstrating that range supervision is a highly reliable fault detector and mitigator with respect to relevant bit flips, adopting an eight-exponent floating-point data representation. We further explore novel, non-uniform range restriction methods that effectively suppress the probability of silent data corruptions and uncorrectable errors. As a safety-relevant end-to-end use case, we showcase the benefit of our approach in a vehicle classification scenario, using ResNet-50 and the traffic camera data set MIOVision. The quantitative evidence provided in this work can be leveraged to inspire further, and possibly more complex, CNN safety arguments.
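The general idea of range supervision can be shown with a minimal sketch, assuming an IEEE 754 float32 activation and a simple clamp-to-bound mitigation. It is not the paper's implementation: the helpers `flip_bit` and `supervise`, and the bounds `[0.0, 6.0]` used in the usage note, are hypothetical choices for illustration.

```python
import struct


def flip_bit(value, bit):
    """Flip one bit of a float32 value (bit 0 = mantissa LSB, bit 31 = sign)."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))
    return flipped


def supervise(activations, lo, hi):
    """Check each activation against [lo, hi].

    Returns (mitigated, faults): out-of-range values are replaced by the
    nearest bound, and their indices are reported as detected faults.
    NaN fails every comparison, so it is flagged and replaced by lo.
    """
    mitigated, faults = [], []
    for i, a in enumerate(activations):
        if lo <= a <= hi:
            mitigated.append(a)
        else:
            faults.append(i)
            mitigated.append(hi if a > hi else lo)
    return mitigated, faults
```

Flipping the most significant exponent bit of `0.5` (bit 30) yields `2.0 ** 127`, so `supervise([flip_bit(0.5, 30), 1.0, 0.25], 0.0, 6.0)` flags index 0 and replaces the corrupted value with the upper bound. Flips in low mantissa bits usually stay inside the range and go undetected by this check, but they also perturb the value only slightly.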
