Ajay Seth

A Tightly Coupled IMU-Based Motion Capture Approach for Estimating Multibody Kinematics and Kinetics

May 13, 2025

Abstract:Inertial Measurement Units (IMUs) enable portable, multibody motion capture (MoCap) in diverse environments beyond the laboratory, making them a practical choice for diagnosing mobility disorders and supporting rehabilitation in clinical or home settings. However, challenges associated with IMU measurements, including magnetic distortions and drift errors, complicate their broader use for MoCap. In this work, we propose a tightly coupled motion capture approach that directly integrates IMU measurements with multibody dynamic models via an Iterated Extended Kalman Filter (IEKF) to simultaneously estimate the system's kinematics and kinetics. By enforcing kinematic and kinetic properties and utilizing only accelerometer and gyroscope data, our method improves IMU-based state estimation accuracy. Our approach is designed to allow for incorporating additional sensor data, such as optical MoCap measurements and joint torque readings, to further enhance estimation accuracy. We validated our approach using highly accurate ground truth data from a 3 Degree of Freedom (DoF) pendulum and a 6 DoF Kuka robot. We demonstrate a maximum Root Mean Square Difference (RMSD) in the pendulum's computed joint angles of 3.75 degrees compared to optical MoCap Inverse Kinematics (IK), which serves as the gold standard in the absence of internal encoders. For the Kuka robot, we observe a maximum joint angle RMSD of 3.24 degrees compared to the Kuka's internal encoders, while the maximum joint angle RMSD of the optical MoCap IK compared to the encoders was 1.16 degrees. Additionally, we report a maximum joint torque RMSD of 2 Nm in the pendulum compared to optical MoCap Inverse Dynamics (ID), and 3.73 Nm in the Kuka robot relative to its internal torque sensors.
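The "maximum joint angle RMSD" figures quoted in this abstract can be read as the largest per-joint root mean square difference between an estimated and a reference trajectory. The following is a minimal sketch of that metric, not the authors' evaluation code: the function names are hypothetical, and it assumes both trajectories are already time-aligned, sampled at the same instants, expressed in degrees, and free of angle wrap-around.

```python
import numpy as np


def joint_angle_rmsd(estimated_deg, reference_deg):
    """Per-joint RMSD between two (n_samples, n_joints) arrays, in degrees.

    Hypothetical helper: assumes time-aligned samples and no angle wrapping.
    """
    estimated = np.asarray(estimated_deg, dtype=float)
    reference = np.asarray(reference_deg, dtype=float)
    if estimated.shape != reference.shape:
        raise ValueError("trajectories must have the same shape")
    # Square the sample-wise differences, average over time, take the root.
    return np.sqrt(np.mean((estimated - reference) ** 2, axis=0))


def max_joint_rmsd(estimated_deg, reference_deg):
    """Largest per-joint RMSD, i.e. the worst-tracked joint."""
    return float(np.max(joint_angle_rmsd(estimated_deg, reference_deg)))
```

Reporting the maximum over joints, rather than the mean, gives a conservative summary: a single poorly estimated joint is enough to raise the figure.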

Biomechanics-Aware Trajectory Optimization for Navigation during Robotic Physiotherapy

Nov 06, 2024

Abstract:Robotic devices hold promise for aiding patients in orthopedic rehabilitation. However, current robotic-assisted physiotherapy methods struggle to include biomechanical metrics in their control algorithms, even though such metrics are crucial for safe and effective therapy. This paper introduces BATON, a Biomechanics-Aware Trajectory Optimization approach to robotic Navigation of human musculoskeletal loads. The method integrates a high-fidelity musculoskeletal model of the human shoulder into real-time control of robot-patient interaction during rotator cuff tendon rehabilitation. We extract skeletal dynamics and tendon loading information from an OpenSim shoulder model to solve an optimal control problem, generating strain-minimizing trajectories. Trajectories were realized on a healthy subject by an impedance-controlled robot while estimating the state of the subject's shoulder. Target poses were prescribed to design personalized rehabilitation across a wide range of shoulder motion while avoiding high-strain areas. BATON was designed with real-time capabilities, enabling continuous trajectory replanning to address unforeseen variations in tendon strain, such as those from changing muscle activation of the subject.

3D Kinematics Estimation from Video with a Biomechanical Model and Synthetic Training Data

Feb 26, 2024

Abstract:Accurate 3D kinematics estimation of the human body is crucial in various applications for human health and mobility, such as rehabilitation, injury prevention, and diagnosis, as it helps to understand the biomechanical loading experienced during movement. Conventional marker-based motion capture is expensive in terms of financial investment, time, and the expertise required. Moreover, due to the scarcity of datasets with accurate annotations, existing markerless motion capture methods suffer from challenges including unreliable 2D keypoint detection, limited anatomic accuracy, and low generalization capability. In this work, we propose a novel biomechanics-aware network that directly outputs 3D kinematics from two input views with consideration of biomechanical priors and spatio-temporal information. To train the model, we create a synthetic dataset, ODAH, with accurate kinematics annotations generated by aligning the body mesh from the SMPL-X model and a full-body OpenSim skeletal model. Our extensive experiments demonstrate that the proposed approach, trained only on synthetic data, outperforms previous state-of-the-art methods when evaluated across multiple datasets, revealing a promising direction for enhancing video-based human motion capture.

Towards Single Camera Human 3D-Kinematics

Jan 13, 2023

Abstract:Markerless estimation of 3D kinematics has great potential for clinically diagnosing and monitoring movement disorders without referrals to expensive motion capture labs; however, current approaches are limited because they perform multiple decoupled steps to estimate the kinematics of a person from videos. Most current techniques work in a multi-step approach by first detecting the pose of the body and then fitting a musculoskeletal model to the data for accurate kinematic estimation. Errors in the training data of the pose detection algorithms, errors in model scaling, as well as the requirement of multiple cameras limit the use of these techniques in a clinical setting. Our goal is to pave the way toward fast, easily applicable, and accurate 3D kinematic estimation. To this end, we propose a novel approach for direct 3D human kinematic estimation (D3KE) from videos using deep neural networks. Our experiments demonstrate that the proposed end-to-end training is robust and outperforms 2D and 3D markerless motion capture based kinematic estimation pipelines in terms of joint angle error by a large margin (35%, from 5.44 to 3.54 degrees). We show that D3KE is superior to the multi-step approach and can run at video framerate speeds. This technology shows the potential for clinical analysis from mobile devices in the future.

* Published in the MDPI Sensors Special Issue "Sensors and Musculoskeletal Dynamics to Evaluate Human Movement" on December 28, 2022
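As a quick check of the reported margin, the 35% figure in the abstract is consistent with a relative reduction in joint angle error computed against the multi-step baseline. This is a worked reading of the two quoted numbers, assuming the percentage is relative to the larger (baseline) error:

```latex
\[
\frac{5.44^\circ - 3.54^\circ}{5.44^\circ}
  = \frac{1.90}{5.44}
  \approx 0.349
  \approx 35\%
\]
```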

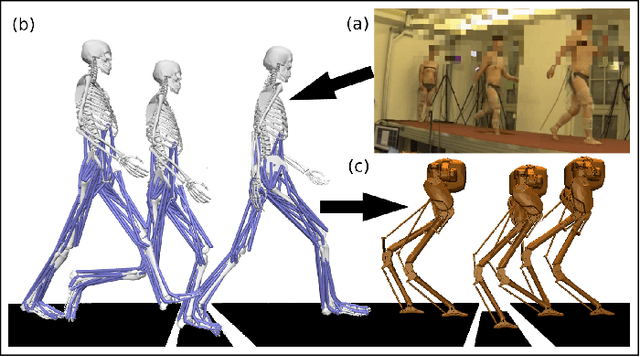

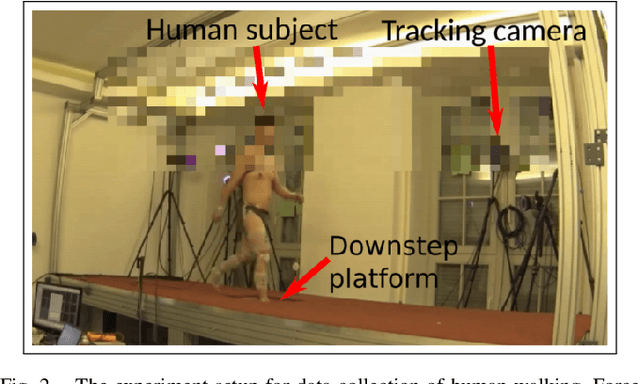

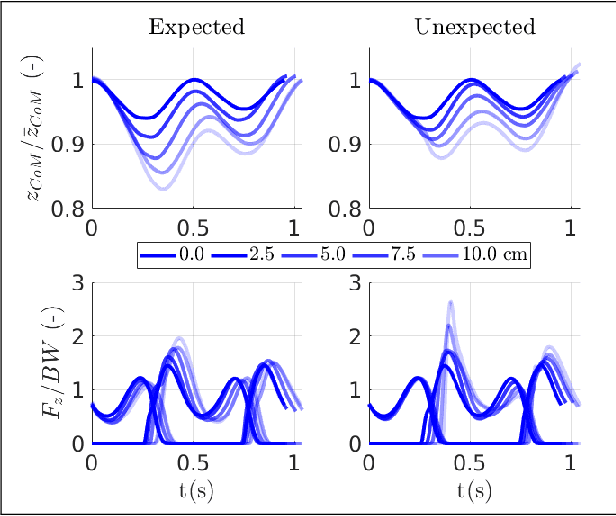

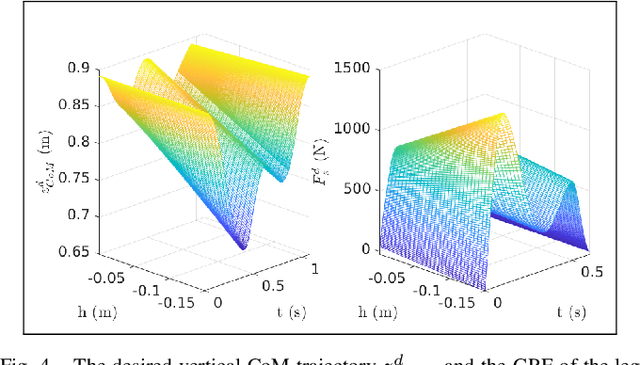

From Human Walking to Bipedal Robot Locomotion: Reflex Inspired Compensation on Planned and Unplanned Downsteps

Sep 07, 2022

Abstract:Humans are able to negotiate downstep behaviors -- both planned and unplanned -- with remarkable agility and ease. The goal of this paper is to systematically study the translation of this human behavior to bipedal walking robots, even if the morphology is inherently different. Concretely, we begin with human data wherein planned and unplanned downsteps are taken. We analyze this data from the perspective of reduced-order modeling of the human, encoding the center of mass (CoM) kinematics and contact forces, which allows for the translation of these behaviors into the corresponding reduced-order model of a bipedal robot. We embed the resulting behaviors into the full-order dynamics of a bipedal robot via nonlinear optimization-based controllers. The end result is the demonstration of planned and unplanned downsteps in simulation on an underactuated walking robot.
