Ahmed Selim

Using Deep Learning-based Features Extracted from CT scans to Predict Outcomes in COVID-19 Patients

May 10, 2022

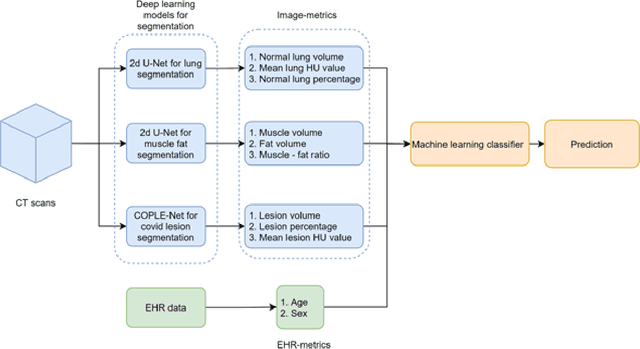

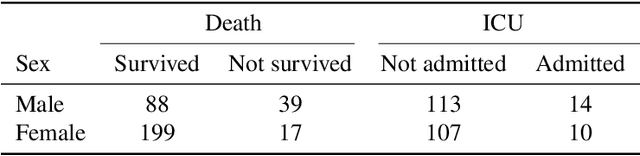

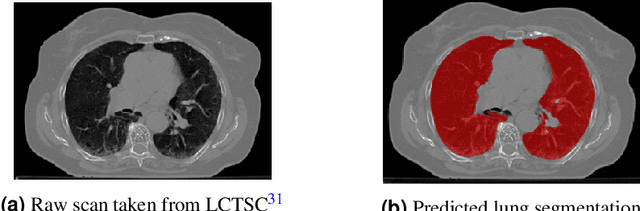

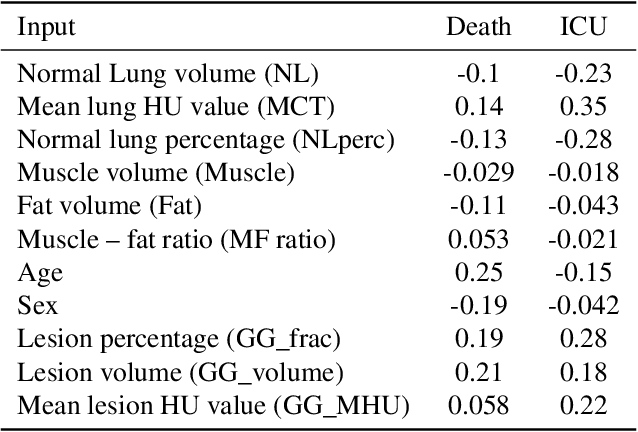

Abstract: The COVID-19 pandemic has had a considerable impact on day-to-day life. Tackling the disease by providing the necessary resources to the affected is of paramount importance. However, estimating the required resources is not a trivial task given the number of factors that determine the requirement. This issue can be addressed by predicting the probability that an infected patient requires Intensive Care Unit (ICU) support and the importance of each of the factors that influence it. Moreover, to assist doctors in identifying the patients at high risk of fatality, the probability of death is also calculated. To determine both patient outcomes (ICU admission and death), a novel methodology is proposed that combines multi-modal features extracted from Computed Tomography (CT) scans and Electronic Health Record (EHR) data. Deep learning models are leveraged to extract quantitative features from CT scans. These features, combined with those read directly from the EHR database, are fed into machine learning models that output the probabilities of the patient outcomes. This work demonstrates both the ability to apply a broad set of deep learning methods for general quantification of chest CT scans and the ability to link these quantitative metrics to patient outcomes. The effectiveness of the proposed method is shown by testing it on an internally curated dataset, achieving a mean area under the receiver operating characteristic curve (AUC) of 0.77 on ICU admission prediction and a mean AUC of 0.73 on death prediction using the best-performing classifiers.
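The abstract does not say how the two feature sets are combined or how the AUC is computed, so the following is only a minimal sketch under stated assumptions: the CT-derived and EHR features are joined by plain concatenation, and the AUC is computed with the standard pairwise (Mann-Whitney) definition. The function names `fuse_features` and `roc_auc` are hypothetical and not taken from the paper.

```python
from typing import List, Sequence


def fuse_features(ct_features: Sequence[float],
                  ehr_features: Sequence[float]) -> List[float]:
    """Join both modalities into one vector (assumed: simple concatenation)."""
    return list(ct_features) + list(ehr_features)


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    A tie between a positive and a negative score counts as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy values, not data from the paper.
patient_vector = fuse_features([0.12, 0.40], [63.0, 1.0])
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

The pairwise loop is quadratic in the number of patients, which is fine for illustration; a rank-based implementation would be the usual choice for larger cohorts.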

Large-scale, Fast and Accurate Shot Boundary Detection through Spatio-temporal Convolutional Neural Networks

Jul 27, 2017

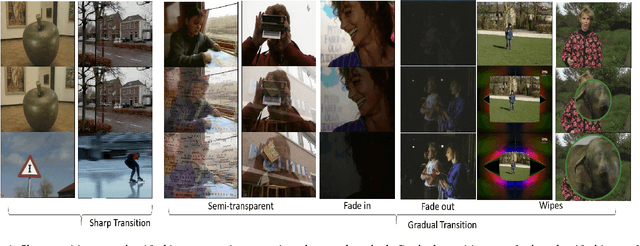

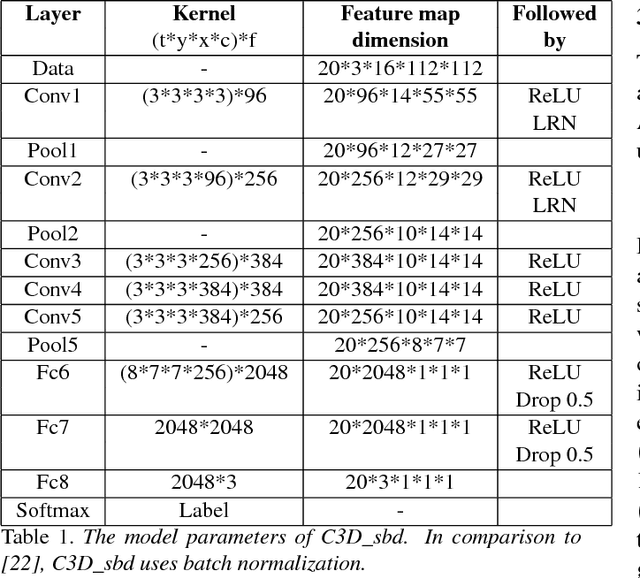

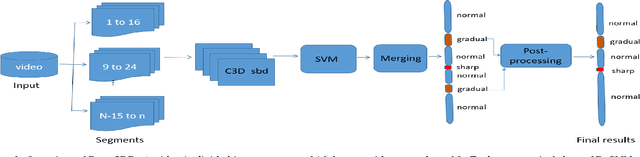

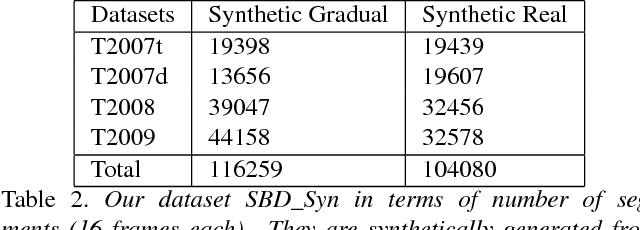

Abstract: Shot boundary detection (SBD) is an important pre-processing step for video manipulation. Here, each segment of frames is classified as either a sharp transition, a gradual transition, or no transition. Current SBD techniques analyze hand-crafted features and attempt to optimize both detection accuracy and processing speed. However, the heavy computation of optical flow prevents this. To achieve this aim, we present an SBD technique based on spatio-temporal Convolutional Neural Networks (CNN). Since current datasets are not large enough to train an accurate SBD CNN, we present a new dataset containing more than 3.5 million frames of sharp and gradual transitions. The transitions are generated synthetically using image compositing models. Our dataset contains an additional 70,000 frames of important hard-negative no transitions. We perform the largest evaluation to date for one SBD algorithm, on real and synthetic data, containing more than 4.85 million frames. In comparison to the state of the art, we achieve better detection of dissolve gradual transitions, competitive performance on sharp transitions, and a significant improvement on wipes. In addition, we are up to 11 times faster than the state of the art.
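The abstract states that transitions are generated synthetically with image compositing models but gives no formulas. As an illustration only, the sketch below assumes the simplest such model: a dissolve built by linear alpha blending between the last frame of one shot and the first frame of the next, and a sharp transition built by direct concatenation. Frames are small grayscale grids of floats, and all names (`blend`, `dissolve`, `sharp_cut`) are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame as rows of pixel intensities


def blend(a: Frame, b: Frame, alpha: float) -> Frame:
    """Composite two frames pixel-wise: (1 - alpha) * a + alpha * b."""
    return [[(1.0 - alpha) * pa + alpha * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def dissolve(a: Frame, b: Frame, length: int) -> List[Frame]:
    """Return `length` intermediate frames with alpha strictly between 0 and 1."""
    return [blend(a, b, (i + 1) / (length + 1)) for i in range(length)]


def sharp_cut(shot_a: List[Frame], shot_b: List[Frame]) -> List[Frame]:
    """A sharp transition is just one shot followed directly by the next."""
    return shot_a + shot_b


# Hypothetical 1x2 frames: an all-black shot dissolving into an all-white shot.
black: Frame = [[0.0, 0.0]]
white: Frame = [[1.0, 1.0]]
gradual = dissolve(black, white, 3)
cut = sharp_cut([black, black], [white])
```

A wipe could be sketched the same way by replacing the scalar `alpha` with a per-pixel mask that sweeps across the frame, but that is likewise an assumption rather than the paper's stated procedure.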

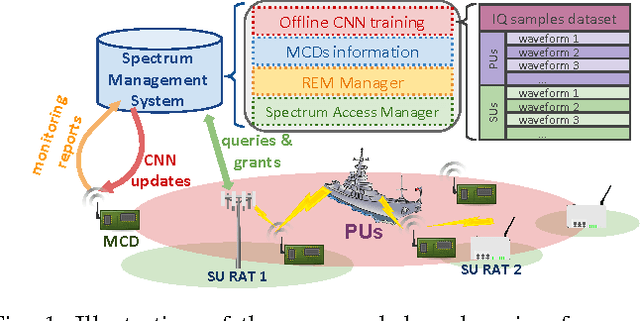

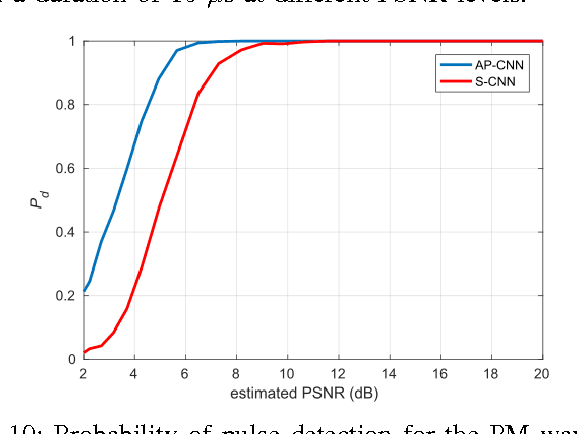

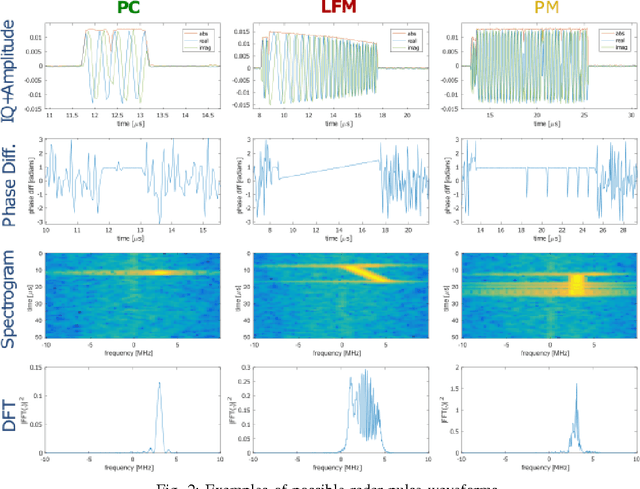

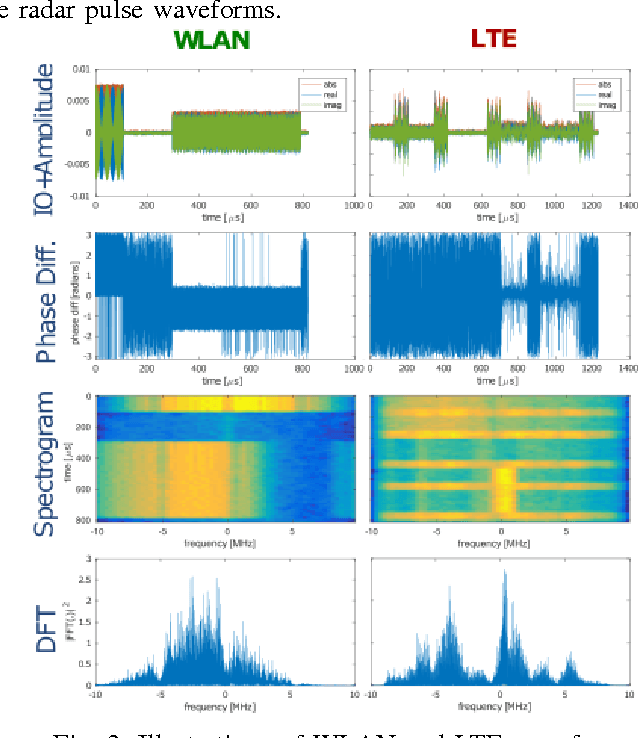

Spectrum Monitoring for Radar Bands using Deep Convolutional Neural Networks

May 01, 2017

Abstract: In this paper, we present a spectrum monitoring framework for the detection of radar signals in spectrum sharing scenarios. The core of our framework is a deep convolutional neural network (CNN) model that enables Measurement Capable Devices to identify the presence of radar signals in the radio spectrum, even when these signals are overlapped with other sources of interference, such as commercial LTE and WLAN. We collected a large dataset of RF measurements, which include the transmissions of multiple radar pulse waveforms, downlink LTE, WLAN, and thermal noise. We propose a pre-processing data representation that leverages the amplitude and phase shifts of the collected samples. This representation allows our CNN model to achieve a classification accuracy of 99.6% on our testing dataset. The trained CNN model is then tested under various SNR values, outperforming other models, such as spectrogram-based CNN models.
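The abstract mentions a representation built from the amplitude and phase shifts of the collected samples without defining it. One plausible reading, offered here purely as an assumption, is that each complex baseband (I/Q) sample is described by its magnitude and by the phase difference from the previous sample. The function name `amplitude_and_phase_shift` is hypothetical.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def amplitude_and_phase_shift(iq: Sequence[complex]) -> List[Tuple[float, float]]:
    """For each sample after the first, return (amplitude, phase shift).

    The phase shift is the angle of cur * conj(prev), which is the phase
    difference between consecutive samples wrapped into [-pi, pi].
    """
    features = []
    for prev, cur in zip(iq, iq[1:]):
        amplitude = abs(cur)
        shift = cmath.phase(cur * prev.conjugate())
        features.append((amplitude, shift))
    return features


# Hypothetical samples: a unit-amplitude tone advancing 90 degrees per sample.
samples = [1 + 0j, 0 + 1j, -1 + 0j]
features = amplitude_and_phase_shift(samples)
```

Using the angle of the conjugate product avoids subtracting two wrapped phases, which would otherwise produce jumps of 2π at the wrap boundary.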

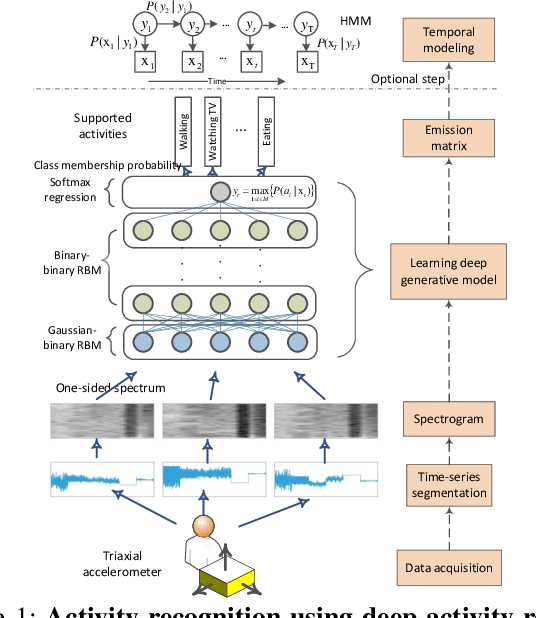

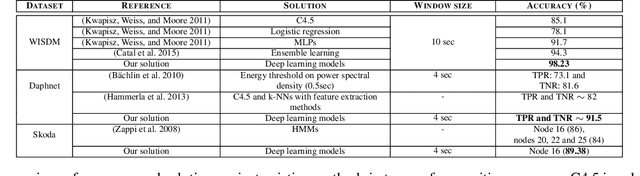

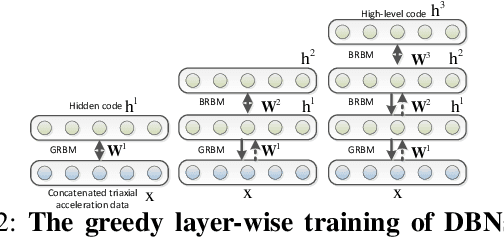

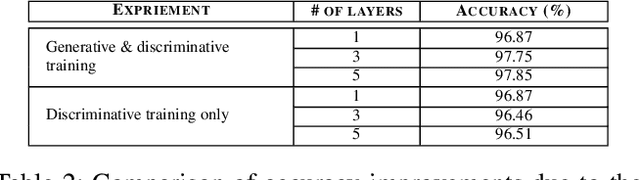

Deep Activity Recognition Models with Triaxial Accelerometers

Oct 25, 2016

Abstract: Despite the widespread installation of accelerometers in almost all mobile phones and wearable devices, activity recognition using accelerometers is still immature due to the poor recognition accuracy of existing recognition methods and the scarcity of labeled training data. We consider the problem of human activity recognition using triaxial accelerometers and deep learning paradigms. This paper shows that deep activity recognition models (a) provide better recognition accuracy of human activities, (b) avoid the expensive design of handcrafted features in existing systems, and (c) utilize the massive unlabeled acceleration samples for unsupervised feature extraction. Moreover, a hybrid approach of deep learning and hidden Markov models (DL-HMM) is presented for sequential activity recognition. This hybrid approach integrates the hierarchical representations of deep activity recognition models with the stochastic modeling of temporal sequences in the hidden Markov models. We show substantial recognition improvement on real world datasets over state-of-the-art methods of human activity recognition using triaxial accelerometers.
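The abstract does not describe how the deep model and the HMM are coupled. A common way to build such a hybrid, assumed here for illustration, is to treat the network's per-window class scores as HMM emission scores and decode the most likely activity sequence with the Viterbi algorithm. The function `viterbi` below and all the numbers in the example are hypothetical.

```python
import math
from typing import List, Sequence


def viterbi(emissions: Sequence[Sequence[float]],
            transitions: Sequence[Sequence[float]],
            prior: Sequence[float]) -> List[int]:
    """Most likely state path given per-step emission scores.

    emissions[t][s]   score of state s at step t (assumed: network output)
    transitions[r][s] probability of moving from state r to state s
    prior[s]          probability of starting in state s
    """
    def log(p: float) -> float:
        return math.log(p) if p > 0 else float("-inf")

    n_states = len(prior)
    score = [log(prior[s]) + log(emissions[0][s]) for s in range(n_states)]
    back: List[List[int]] = []
    for frame in emissions[1:]:
        new_score, pointers = [], []
        for s in range(n_states):
            # Best predecessor for state s at this step.
            best = max(range(n_states),
                       key=lambda r: score[r] + log(transitions[r][s]))
            pointers.append(best)
            new_score.append(score[best] + log(transitions[best][s]) + log(frame[s]))
        score = new_score
        back.append(pointers)

    # Trace the best path backwards from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path


# Two hypothetical activities with "sticky" transitions; the middle window
# is ambiguous and slightly favors state 1 when looked at in isolation.
emissions = [[0.9, 0.1], [0.45, 0.55], [0.9, 0.1]]
transitions = [[0.9, 0.1], [0.1, 0.9]]
prior = [0.5, 0.5]
decoded = viterbi(emissions, transitions, prior)
```

In this toy case a per-window argmax would label the middle window as activity 1, while the temporal model keeps the whole sequence in activity 0 because switching twice is unlikely under the transition matrix.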
