Ahmed Irfan

OVERT: An Algorithm for Safety Verification of Neural Network Control Policies for Nonlinear Systems

Aug 03, 2021

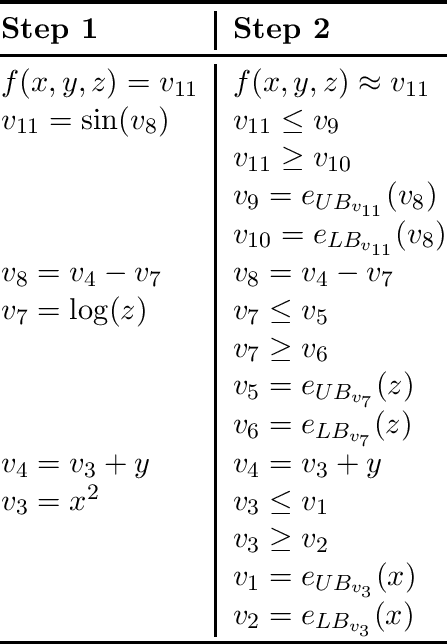

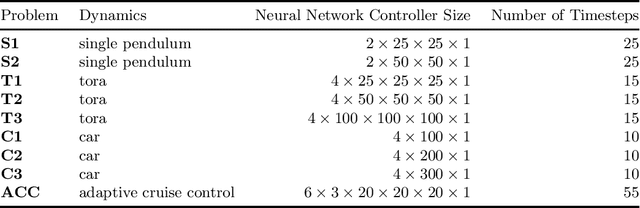

Abstract:Deep learning methods can be used to produce control policies, but certifying their safety is challenging. The resulting networks are nonlinear and often very large. In response to this challenge, we present OVERT: a sound algorithm for safety verification of nonlinear discrete-time closed loop dynamical systems with neural network control policies. The novelty of OVERT lies in combining ideas from the classical formal methods literature with ideas from the newer neural network verification literature. The central concept of OVERT is to abstract nonlinear functions with a set of optimally tight piecewise linear bounds. Such piecewise linear bounds are designed for seamless integration into ReLU neural network verification tools. OVERT can be used to prove bounded-time safety properties by either computing reachable sets or solving feasibility queries directly. We demonstrate various examples of safety verification for several classical benchmark examples. OVERT compares favorably to existing methods both in computation time and in tightness of the reachable set.

Parallelization Techniques for Verifying Neural Networks

Apr 26, 2020

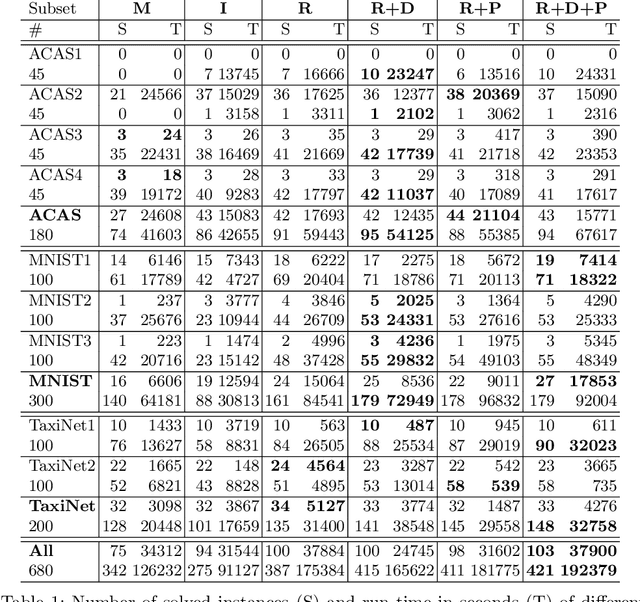

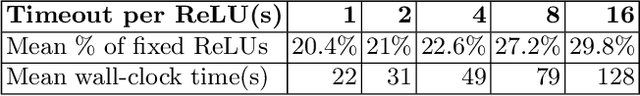

Abstract:Inspired by recent successes with parallel optimization techniques for solving Boolean satisfiability, we investigate a set of strategies and heuristics that aim to leverage parallel computing to improve the scalability of neural network verification. We introduce an algorithm based on partitioning the verification problem in an iterative manner and explore two partitioning strategies, that work by partitioning the input space or by case splitting on the phases of the neuron activations, respectively. We also introduce a highly parallelizable pre-processing algorithm that uses the neuron activation phases to simplify the neural network verification problems. An extensive experimental evaluation shows the benefit of these techniques on both existing benchmarks and new benchmarks from the aviation domain. A preliminary experiment with ultra-scaling our algorithm using a large distributed cloud-based platform also shows promising results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge