Aditya Kadam

GAME-ON: Graph Attention Network based Multimodal Fusion for Fake News Detection

Mar 01, 2022

Abstract:Social media in present times has a significant and growing influence. Fake news being spread on these platforms have a disruptive and damaging impact on our lives. Furthermore, as multimedia content improves the visibility of posts more than text data, it has been observed that often multimedia is being used for creating fake content. A plethora of previous multimodal-based work has tried to address the problem of modeling heterogeneous modalities in identifying fake content. However, these works have the following limitations: (1) inefficient encoding of inter-modal relations by utilizing a simple concatenation operator on the modalities at a later stage in a model, which might result in information loss; (2) training very deep neural networks with a disproportionate number of parameters on small but complex real-life multimodal datasets result in higher chances of overfitting. To address these limitations, we propose GAME-ON, a Graph Neural Network based end-to-end trainable framework that allows granular interactions within and across different modalities to learn more robust data representations for multimodal fake news detection. We use two publicly available fake news datasets, Twitter and Weibo, for evaluations. Our model outperforms on Twitter by an average of 11% and keeps competitive performance on Weibo, within a 2.6% margin, while using 65% fewer parameters than the best comparable state-of-the-art baseline.

Battling Hateful Content in Indic Languages HASOC '21

Nov 05, 2021

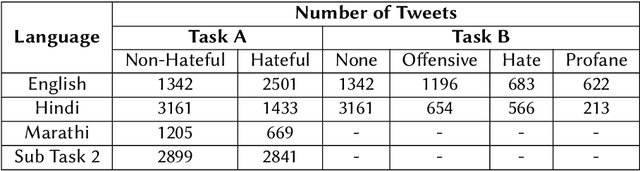

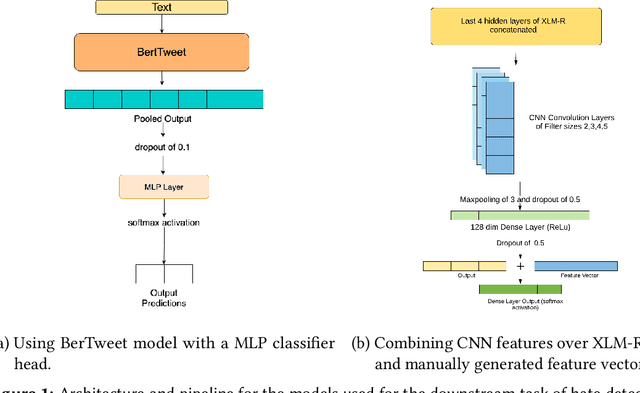

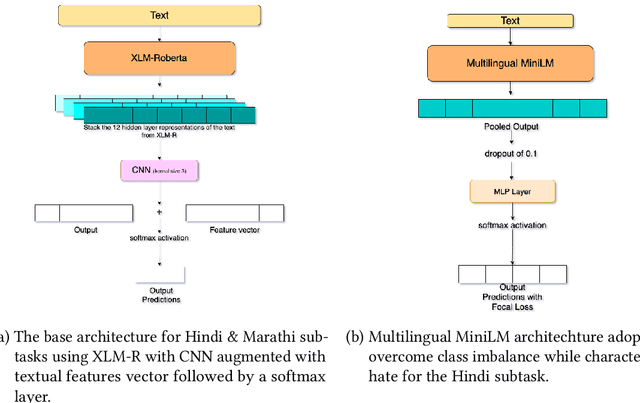

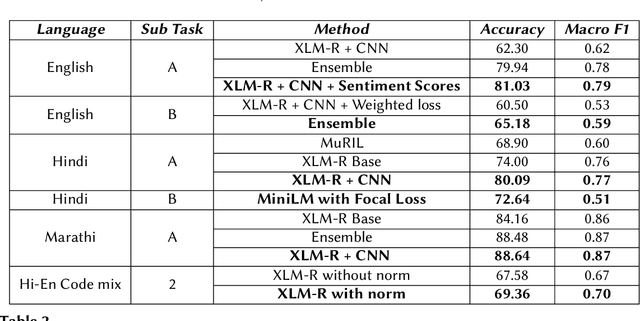

Abstract:The extensive rise in consumption of online social media (OSMs) by a large number of people poses a critical problem of curbing the spread of hateful content on these platforms. With the growing usage of OSMs in multiple languages, the task of detecting and characterizing hate becomes more complex. The subtle variations of code-mixed texts along with switching scripts only add to the complexity. This paper presents a solution for the HASOC 2021 Multilingual Twitter Hate-Speech Detection challenge by team PreCog IIIT Hyderabad. We adopt a multilingual transformer based approach and describe our architecture for all 6 subtasks as part of the challenge. Out of the 6 teams that participated in all the subtasks, our submissions rank 3rd overall.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge