Abhinav Garlapati

Towards Understanding Confusion and Affective States Under Communication Failures in Voice-Based Human-Machine Interaction

Jul 15, 2022

Abstract: We present two studies conducted to understand users' affective states during voice-based human-machine interaction. Emphasis is placed on cases of communication errors or failures. In particular, we are interested in understanding "confusion" in relation to other affective states. The studies consist of two types of tasks: (1) tasks related to communication with a voice-based virtual agent, namely speaking to the machine and understanding what the machine says, and (2) non-communication-related problem-solving tasks in which participants solve puzzles and riddles but are asked to verbally explain their answers to the machine. We collected audio-visual data and self-reports of the participants' affective states. We report the results of both studies and an analysis of the collected data. The first study was analyzed based on the annotator's observation, and the second study was analyzed based on the self-reports.

A Reinforcement Learning Approach to Online Learning of Decision Trees

Jul 24, 2015

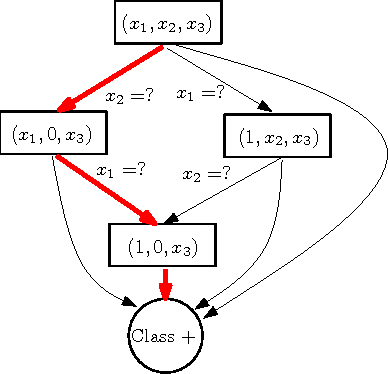

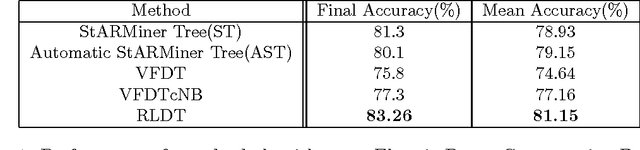

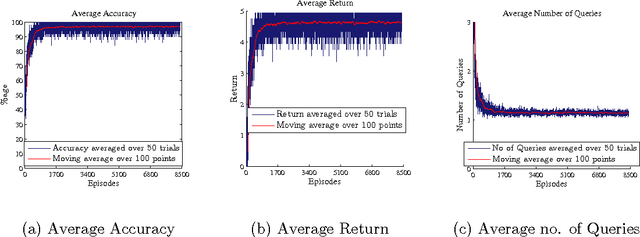

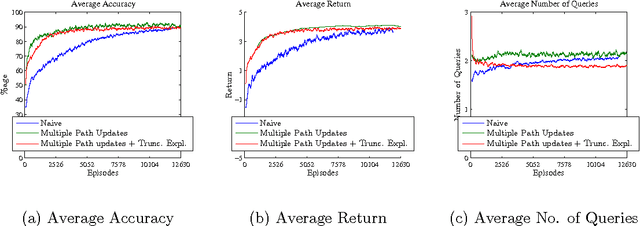

Abstract: Online decision tree learning algorithms typically examine all features of a new data point to update model parameters. We propose a novel alternative, Reinforcement Learning-based Decision Trees (RLDT), that uses Reinforcement Learning (RL) to actively examine a minimal number of features of a data point in order to classify it with high accuracy. Furthermore, RLDT optimizes a long-term return, providing a better alternative to the traditional myopic, greedy approach to growing decision trees. We demonstrate that this approach performs as well as batch learning algorithms and other online decision tree learning algorithms, while making significantly fewer queries about the features of the data points. We also show that RLDT can effectively handle concept drift.
