A Reinforcement Learning Approach to Online Learning of Decision Trees


Jul 24, 2015

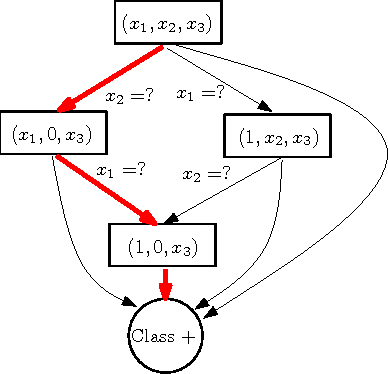

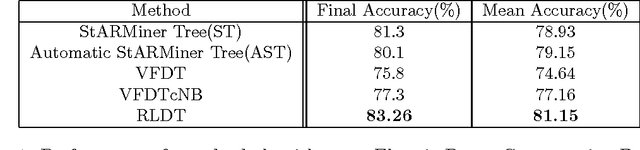

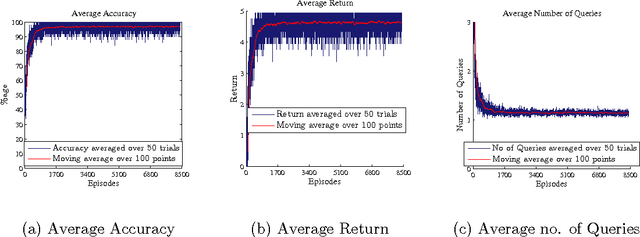

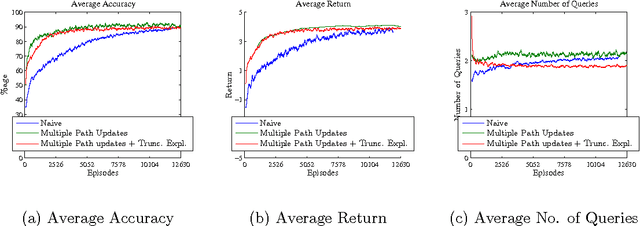

Online decision tree learning algorithms typically examine all features of a new data point to update model parameters. We propose a novel alternative, Reinforcement Learning-based Decision Trees (RLDT), that uses Reinforcement Learning (RL) to actively examine a minimal number of features of a data point to classify it with high accuracy. Furthermore, RLDT optimizes a long-term return, providing a better alternative to the traditional myopic greedy approach to growing decision trees. We demonstrate that this approach performs as well as batch learning algorithms and other online decision tree learning algorithms, while making significantly fewer queries about the features of the data points. We also show that RLDT can effectively handle concept drift.
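To make the idea of querying features sequentially more concrete, here is a minimal sketch that frames classification as an episodic decision problem and solves it with tabular Q-learning. It is an illustration under stated assumptions, not the RLDT algorithm itself: the state is taken to be the tuple of feature values observed so far, the actions are "query feature i" or "report class c", and the class name `FeatureQueryAgent`, the query cost, the ±1 classification reward, and the hyperparameters are all hypothetical choices made for this example.

```python
import random
from collections import defaultdict

# Assumed values for illustration only; the abstract does not specify them.
QUERY_COST = 0.1                        # penalty for each feature query
ALPHA, GAMMA, EPSILON = 0.2, 1.0, 0.1   # step size, discount, exploration rate


class FeatureQueryAgent:
    """Tabular Q-learning over partially observed data points (hypothetical)."""

    def __init__(self, n_features, n_classes, seed=0):
        self.n_features = n_features
        self.n_classes = n_classes
        self.q = defaultdict(float)     # (state, action) -> estimated return
        self.rng = random.Random(seed)

    def actions(self, state):
        # A state is a tuple with None in the slots of unqueried features.
        queries = [("query", i) for i, v in enumerate(state) if v is None]
        reports = [("report", c) for c in range(self.n_classes)]
        return queries + reports

    def choose(self, state, explore):
        acts = self.actions(state)
        if explore and self.rng.random() < EPSILON:
            return self.rng.choice(acts)
        return max(acts, key=lambda a: self.q[(state, a)])

    def run_episode(self, x, y, learn=True):
        """Process one data point; return (predicted class, number of queries)."""
        state = (None,) * self.n_features
        n_queries = 0
        while True:
            action = self.choose(state, explore=learn)
            kind, arg = action
            if kind == "query":
                # Reveal one feature value and pay the query cost.
                next_state = state[:arg] + (x[arg],) + state[arg + 1:]
                reward, done = -QUERY_COST, False
                n_queries += 1
            else:
                # Reporting a class ends the episode.
                next_state = None
                reward, done = (1.0 if arg == y else -1.0), True
            if learn:
                future = 0.0 if done else max(
                    self.q[(next_state, a)] for a in self.actions(next_state)
                )
                key = (state, action)
                self.q[key] += ALPHA * (reward + GAMMA * future - self.q[key])
            if done:
                return arg, n_queries
            state = next_state
```

In an online setting, each arriving labeled point would be passed once to `run_episode(x, y)`. Because the learned values estimate the return of the whole query sequence rather than the gain of a single split, the agent is pushed toward short query paths that still end in a correct report. Calling `run_episode(x, y, learn=False)` follows the greedy policy without updating it.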
