Abdulhamid Adebayo

GneissWeb: Preparing High Quality Data for LLMs at Scale

Feb 19, 2025

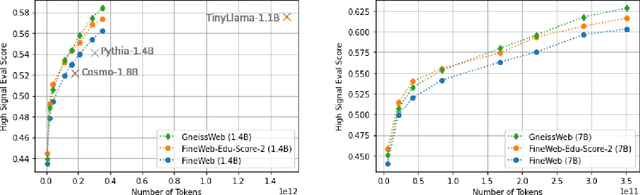

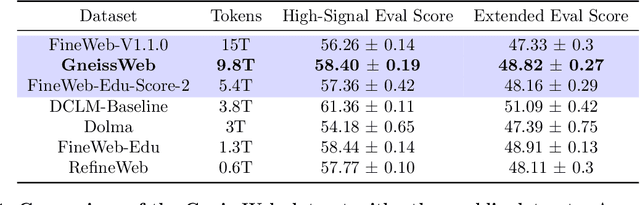

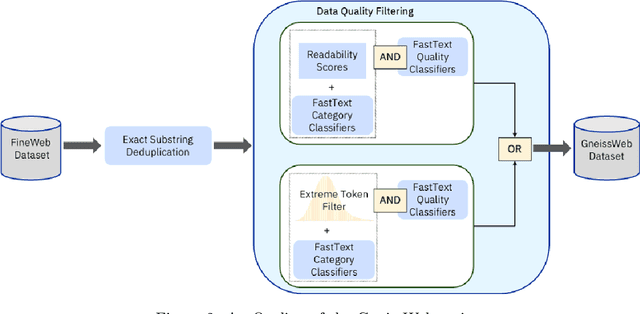

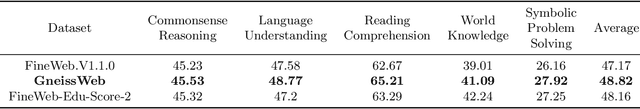

Abstract: Data quantity and quality play a vital role in determining the performance of Large Language Models (LLMs). High-quality data, in particular, can significantly boost an LLM's ability to generalize across a wide range of downstream tasks. Large pre-training datasets for leading LLMs remain inaccessible to the public, whereas many open datasets are small (less than 5 trillion tokens), limiting their suitability for training large models. In this paper, we introduce GneissWeb, a large dataset yielding around 10 trillion tokens that caters to the data quality and quantity requirements of training LLMs. The GneissWeb recipe that produced the dataset consists of sharded exact sub-string deduplication and a judiciously constructed ensemble of quality filters. GneissWeb achieves a favorable trade-off between data quality and quantity, producing models that outperform models trained on state-of-the-art open large datasets (5+ trillion tokens). We show that models trained on the GneissWeb dataset outperform those trained on FineWeb-V1.1.0 by 2.73 percentage points in average score across a set of 11 commonly used benchmarks (both zero-shot and few-shot) for pre-training dataset evaluation. When the evaluation set is extended to 20 benchmarks (both zero-shot and few-shot), models trained on GneissWeb still achieve a 1.75 percentage point advantage over those trained on FineWeb-V1.1.0.

Data-Prep-Kit: getting your data ready for LLM application development

Sep 26, 2024

Abstract: Data preparation is the first and a very important step in any Large Language Model (LLM) development. This paper introduces an easy-to-use, extensible, and scale-flexible open-source data preparation toolkit called Data Prep Kit (DPK). DPK is architected and designed to let users scale their data preparation to their needs. With DPK, they can prepare data on a local machine or effortlessly scale to run on a cluster with thousands of CPU cores. DPK comes with a highly scalable yet extensible set of modules that transform natural language and code data. If users need additional transforms, they can easily develop them using DPK's extensive support for transform creation. These modules can be used independently or pipelined to perform a series of operations. In this paper, we describe the DPK architecture and show its performance from a small scale to a very large number of CPUs. The modules from DPK have been used for the preparation of Granite Models [1] [2]. We believe DPK is a valuable contribution to the AI community, making it easy to prepare data to enhance the performance of LLMs or to fine-tune models with Retrieval-Augmented Generation (RAG).

Automated Compliance Blueprint Optimization with Artificial Intelligence

Jun 22, 2022

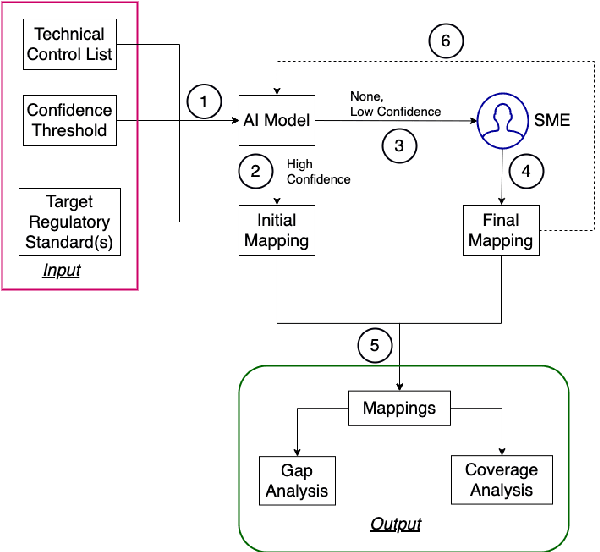

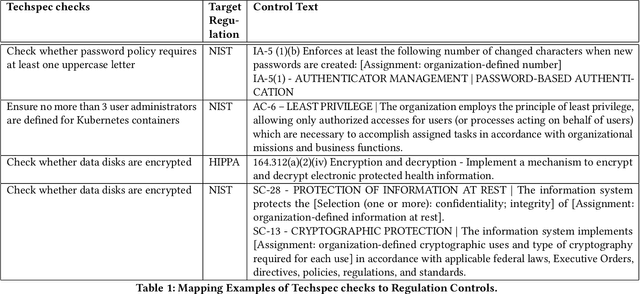

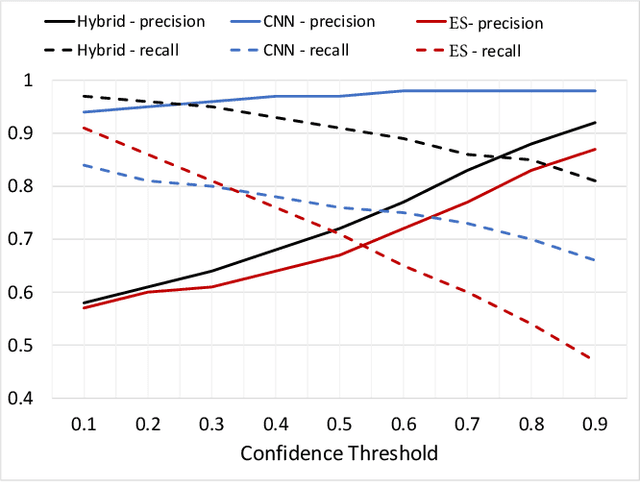

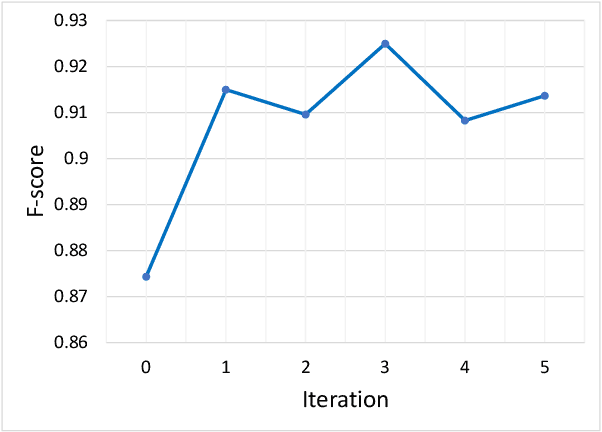

Abstract: For highly regulated industries such as banking and healthcare, one of the major hindrances to the adoption of cloud computing is compliance with regulatory standards. This is a complex problem because of the many regulatory and technical specification (techspec) documents that companies need to comply with. The critical problem is to establish the mapping between techspecs and regulation controls so that, from day one, companies can comply with regulations with minimal effort. We demonstrate the practicality of an approach that automatically analyzes regulatory standards using Artificial Intelligence (AI) techniques. We present early results on identifying the mapping between techspecs and regulation controls, and discuss challenges that must be overcome for this solution to be fully practical.

Vulnerability Prioritization: An Offensive Security Approach

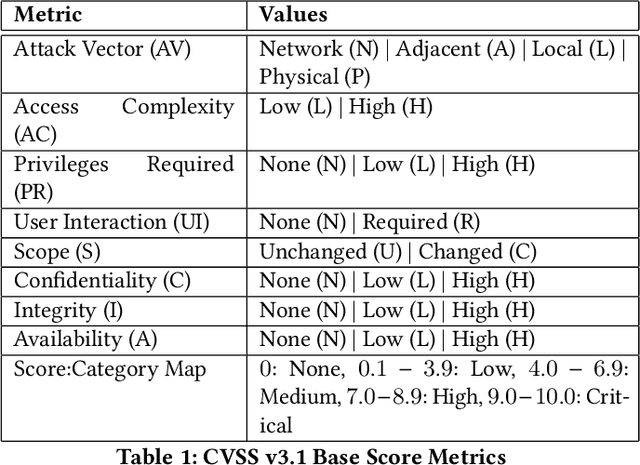

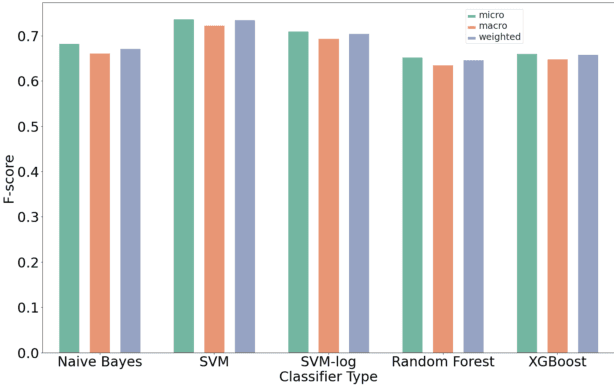

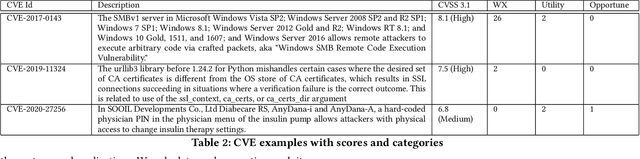

Jun 22, 2022

Abstract: Organizations struggle to handle the sheer number of vulnerabilities in their cloud environments. The de facto methodology for prioritizing vulnerabilities is to use the Common Vulnerability Scoring System (CVSS). However, CVSS has inherent limitations that make it less than ideal for prioritization. In this work, we propose a new way of prioritizing vulnerabilities. Our approach is inspired by how offensive security practitioners perform penetration testing. We evaluate our approach with a real-world case study for a large client, and assess the accuracy of machine learning in automating the process end to end.
