Abdallah El Ali

Full Disclosure, Less Trust? How the Level of Detail about AI Use in News Writing Affects Readers' Trust

Jan 14, 2026

Abstract: As artificial intelligence (AI) is increasingly integrated into news production, calls for transparency about the use of AI have gained considerable traction. Recent studies suggest that AI disclosures can lead to a "transparency dilemma", where disclosure reduces readers' trust. However, little is known about how the *level of detail* in AI disclosures influences trust and contributes to this dilemma within the news context. In this 3×2×2 mixed factorial study with 40 participants, we investigate how three levels of AI disclosures (none, one-line, detailed) across two types of news (politics and lifestyle) and two levels of AI involvement (low and high) affect news readers' trust. We measured trust using the News Media Trust questionnaire, along with two decision behaviors: source-checking and subscription decisions. Questionnaire responses and subscription rates showed a decline in trust only for detailed AI disclosures, whereas source-checking behavior increased for both one-line and detailed disclosures, with the effect being more pronounced for detailed disclosures. Insights from semi-structured interviews suggest that source-checking behavior was primarily driven by interest in the topic, followed by trust, whereas trust was the main factor influencing subscription decisions. Around two-thirds of participants expressed a preference for detailed disclosures, while most participants who preferred one-line indicated a need for detail-on-demand disclosure formats. Our findings show that not all AI disclosures lead to a transparency dilemma, but instead reflect a trade-off between readers' desire for more transparency and their trust in AI-assisted news content.

Script-Strategy Aligned Generation: Aligning LLMs with Expert-Crafted Dialogue Scripts and Therapeutic Strategies for Psychotherapy

Nov 11, 2024

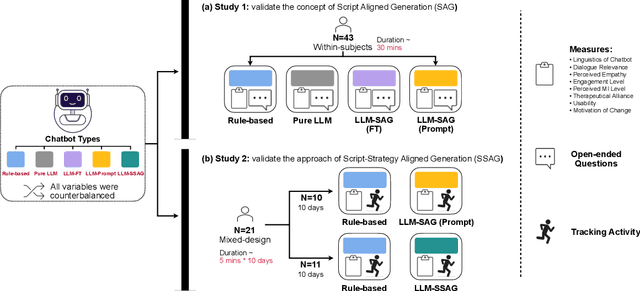

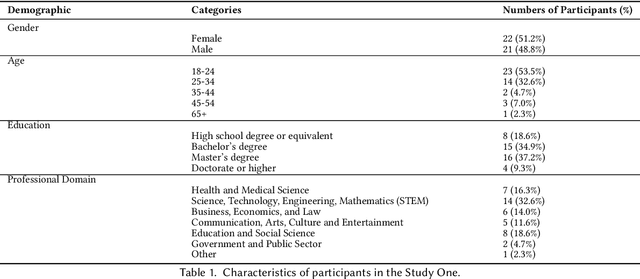

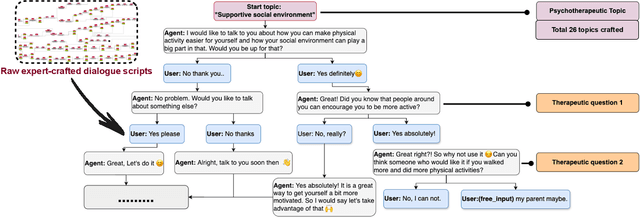

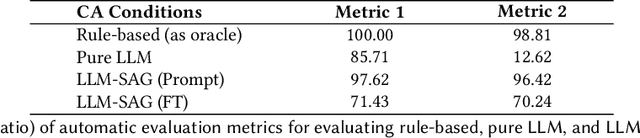

Abstract: Chatbots or conversational agents (CAs) are increasingly used to improve access to digital psychotherapy. Many current systems rely on rigid, rule-based designs, heavily dependent on expert-crafted dialogue scripts for guiding therapeutic conversations. Although recent advances in large language models (LLMs) offer the potential for more flexible interactions, their lack of controllability and transparency poses significant challenges in sensitive areas like psychotherapy. In this work, we explored how aligning LLMs with expert-crafted scripts can enhance psychotherapeutic chatbot performance. Our comparative study showed that LLMs aligned with expert-crafted scripts through prompting and fine-tuning significantly outperformed both pure LLMs and rule-based chatbots, achieving a more effective balance between dialogue flexibility and adherence to therapeutic principles. Building on these findings, we proposed "Script-Strategy Aligned Generation (SSAG)", a flexible alignment approach that reduces reliance on fully scripted content while enhancing LLMs' therapeutic adherence and controllability. In a 10-day field study, SSAG demonstrated performance comparable to full script alignment and outperformed rule-based chatbots, empirically supporting SSAG as an efficient approach for aligning LLMs with domain expertise. Our work advances LLM applications in psychotherapy by providing a controllable, adaptable, and scalable solution for digital interventions, reducing reliance on expert effort. It also provides a collaborative framework for domain experts and developers to efficiently build expertise-aligned chatbots, broadening access to psychotherapy and behavioral interventions.

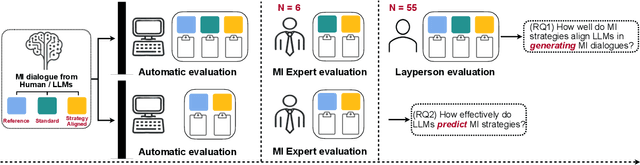

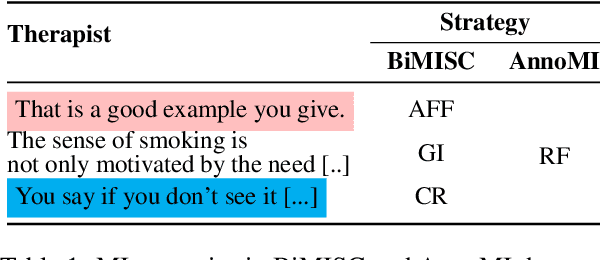

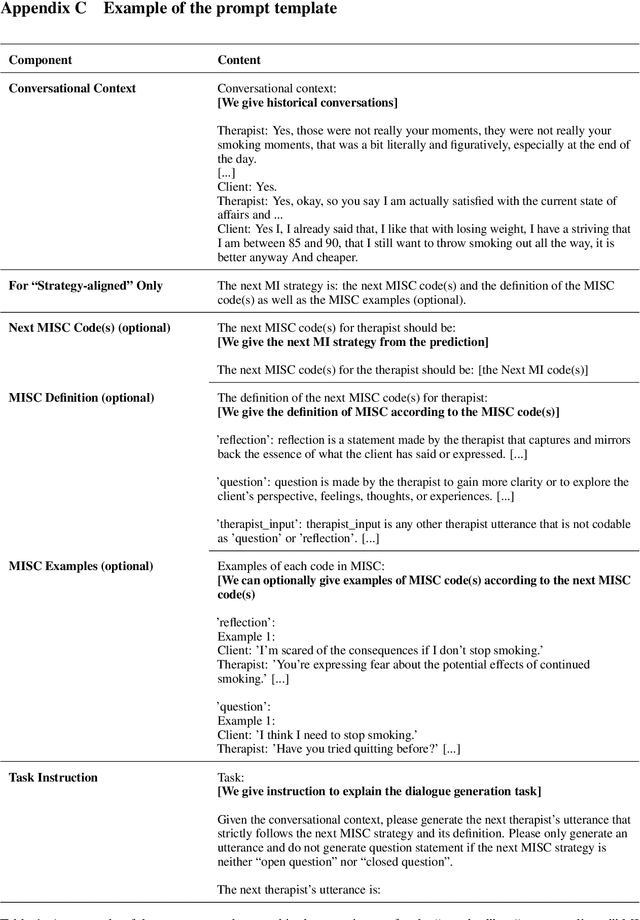

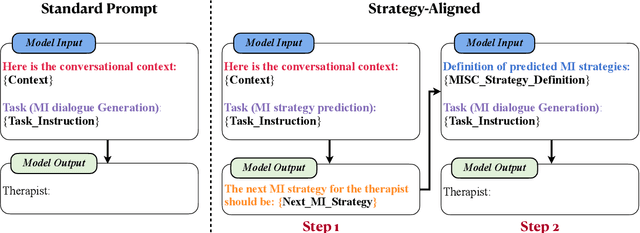

Chain-of-Strategy Planning with LLMs: Aligning the Generation of Psychotherapy Dialogue with Strategy in Motivational Interviewing

Aug 12, 2024

Abstract: Recent advancements in large language models (LLMs) have shown promise in generating psychotherapeutic dialogues, especially in Motivational Interviewing (MI). However, how to employ strategies, a set of MI skills, to generate therapeutically adherent and explainable conversations remains underexplored. We propose an approach called strategy-aware dialogue generation with Chain-of-Strategy (CoS) planning, which first predicts MI strategies as reasoning and then uses these strategies to guide the subsequent dialogue generation. It brings the potential for controllable and explainable generation in psychotherapy by aligning the generated MI dialogues with therapeutic strategies. Extensive experiments including automatic and human evaluations are conducted to validate the effectiveness of the MI strategy. Our findings demonstrate the potential of LLMs in producing strategically aligned dialogues and suggest directions for practical applications in psychotherapeutic settings.

User Experience Design Professionals' Perceptions of Generative Artificial Intelligence

Sep 26, 2023

Abstract: Among creative professionals, Generative Artificial Intelligence (GenAI) has sparked excitement over its capabilities and fear over unanticipated consequences. How does GenAI impact User Experience Design (UXD) practice, and are fears warranted? We interviewed 20 UX Designers with diverse experience across companies (startups to large enterprises). We probed them to characterize their practices and to sample their attitudes, concerns, and expectations. We found that experienced designers are confident in their originality, creativity, and empathic skills, and see GenAI's role as assistive. They emphasized the unique human factors of "enjoyment" and "agency", where humans remain the arbiters of "AI alignment". However, skill degradation, job replacement, and creativity exhaustion can adversely impact junior designers. We discuss implications for human-GenAI collaboration, specifically copyright and ownership, human creativity and agency, and AI literacy and access. Through the lens of responsible and participatory AI, we contribute a deeper understanding of GenAI fears and opportunities for UXD.
