Aaron Bostrom

HIVE-COTE 2.0: a new meta ensemble for time series classification

Apr 15, 2021

Abstract:The Hierarchical Vote Collective of Transformation-based Ensembles (HIVE-COTE) is a heterogeneous meta ensemble for time series classification. HIVE-COTE forms its ensemble from classifiers of multiple domains, including phase-independent shapelets, bag-of-words based dictionaries and phase-dependent intervals. Since it was first proposed in 2016, the algorithm has remained state of the art for accuracy on the UCR time series classification archive. Over time it has been incrementally updated, culminating in its current state, HIVE-COTE 1.0. During this time a number of algorithms have been proposed which match the accuracy of HIVE-COTE. We propose comprehensive changes to the HIVE-COTE algorithm which significantly improve its accuracy and usability, presenting this upgrade as HIVE-COTE 2.0. We introduce two novel classifiers, the Temporal Dictionary Ensemble (TDE) and Diverse Representation Canonical Interval Forest (DrCIF), which replace existing ensemble members. Additionally, we introduce the Arsenal, an ensemble of ROCKET classifiers as a new HIVE-COTE 2.0 constituent. We demonstrate that HIVE-COTE 2.0 is significantly more accurate than the current state of the art on 112 univariate UCR archive datasets and 26 multivariate UEA archive datasets.

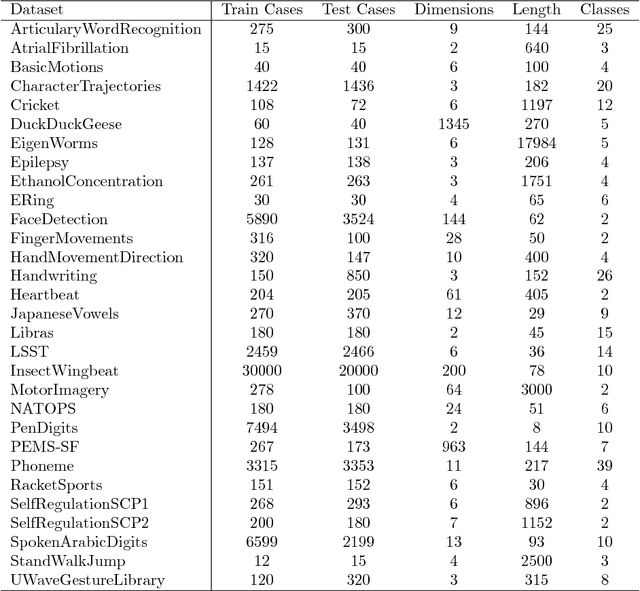

The UEA multivariate time series classification archive, 2018

Oct 31, 2018

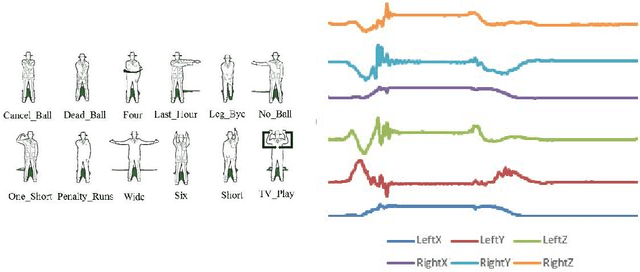

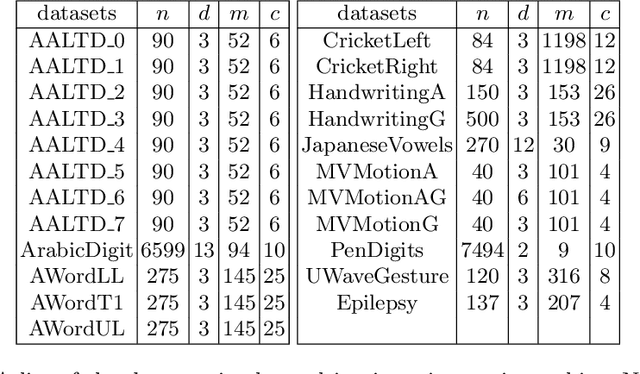

Abstract:In 2002, the UCR time series classification archive was first released with sixteen datasets. It gradually expanded, until 2015 when it increased in size from 45 datasets to 85 datasets. In October 2018 more datasets were added, bringing the total to 128. The new archive contains a wide range of problems, including variable length series, but it still only contains univariate time series classification problems. One of the motivations for introducing the archive was to encourage researchers to perform a more rigorous evaluation of newly proposed time series classification (TSC) algorithms. It has worked: most recent research into TSC uses all 85 datasets to evaluate algorithmic advances. Research into multivariate time series classification, where more than one series are associated with each class label, is in a position where univariate TSC research was a decade ago. Algorithms are evaluated using very few datasets and claims of improvement are not based on statistical comparisons. We aim to address this problem by forming the first iteration of the MTSC archive, to be hosted at the website www.timeseriesclassification.com. Like the univariate archive, this formulation was a collaborative effort between researchers at the University of East Anglia (UEA) and the University of California, Riverside (UCR). The 2018 vintage consists of 30 datasets with a wide range of cases, dimensions and series lengths. For this first iteration of the archive we format all data to be of equal length, include no series with missing data and provide train/test splits.

A Shapelet Transform for Multivariate Time Series Classification

Dec 18, 2017

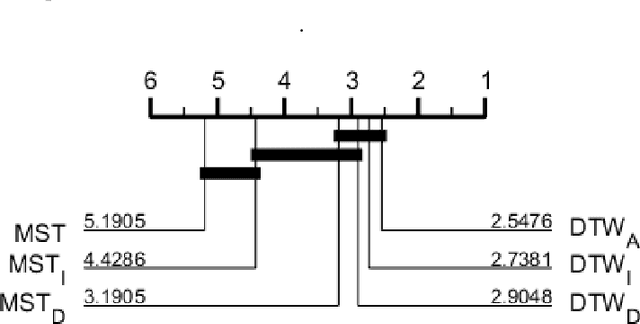

Abstract:Shapelets are phase independent subsequences designed for time series classification. We propose three adaptations to the Shapelet Transform (ST) to capture multivariate features in multivariate time series classification. We create a unified set of data to benchmark our work on, and compare with three other algorithms. We demonstrate that multivariate shapelets are not significantly worse than other state-of-the-art algorithms.

Simulated Data Experiments for Time Series Classification Part 1: Accuracy Comparison with Default Settings

Mar 28, 2017

Abstract:There are now a broad range of time series classification (TSC) algorithms designed to exploit different representations of the data. These have been evaluated on a range of problems hosted at the UCR-UEA TSC Archive (www.timeseriesclassification.com), and there have been extensive comparative studies. However, our understanding of why one algorithm outperforms another is still anecdotal at best. This series of experiments is meant to help provide insights into what sort of discriminatory features in the data lead one set of algorithms that exploit a particular representation to be better than other algorithms. We categorise five different feature spaces exploited by TSC algorithms then design data simulators to generate randomised data from each representation. We describe what results we expected from each class of algorithm and data representation, then observe whether these prior beliefs are supported by the experimental evidence. We provide an open source implementation of all the simulators to allow for the controlled testing of hypotheses relating to classifier performance on different data representations. We identify many surprising results that confounded our expectations, and use these results to highlight how an over simplified view of classifier structure can often lead to erroneous prior beliefs. We believe ensembling can often overcome prior bias, and our results support the belief by showing that the ensemble approach adopted by the Hierarchical Collective of Transform based Ensembles (HIVE-COTE) is significantly better than the alternatives when the data representation is unknown, and is significantly better than, or not significantly significantly better than, or not significantly worse than, the best other approach on three out of five of the individual simulators.

The Great Time Series Classification Bake Off: An Experimental Evaluation of Recently Proposed Algorithms. Extended Version

Feb 04, 2016

Abstract:In the last five years there have been a large number of new time series classification algorithms proposed in the literature. These algorithms have been evaluated on subsets of the 47 data sets in the University of California, Riverside time series classification archive. The archive has recently been expanded to 85 data sets, over half of which have been donated by researchers at the University of East Anglia. Aspects of previous evaluations have made comparisons between algorithms difficult. For example, several different programming languages have been used, experiments involved a single train/test split and some used normalised data whilst others did not. The relaunch of the archive provides a timely opportunity to thoroughly evaluate algorithms on a larger number of datasets. We have implemented 18 recently proposed algorithms in a common Java framework and compared them against two standard benchmark classifiers (and each other) by performing 100 resampling experiments on each of the 85 datasets. We use these results to test several hypotheses relating to whether the algorithms are significantly more accurate than the benchmarks and each other. Our results indicate that only 9 of these algorithms are significantly more accurate than both benchmarks and that one classifier, the Collective of Transformation Ensembles, is significantly more accurate than all of the others. All of our experiments and results are reproducible: we release all of our code, results and experimental details and we hope these experiments form the basis for more rigorous testing of new algorithms in the future.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge