A K M Mahbubur Rahman

BAPPA: Benchmarking Agents, Plans, and Pipelines for Automated Text-to-SQL Generation

Nov 06, 2025

Abstract: Text-to-SQL systems provide a natural language interface that can enable even laymen to access information stored in databases. However, existing Large Language Models (LLMs) struggle with SQL generation from natural language instructions due to large schema sizes and complex reasoning. Prior work often focuses on complex, somewhat impractical pipelines using flagship models, while smaller, efficient models remain overlooked. In this work, we explore three multi-agent LLM pipelines, with systematic performance benchmarking across a range of small to large open-source models: (1) a Multi-Agent Discussion pipeline, where agents iteratively critique and refine SQL queries, and a judge synthesizes the final answer; (2) a Planner-Coder pipeline, where a thinking model planner generates stepwise SQL generation plans and a coder synthesizes queries; and (3) a Coder-Aggregator pipeline, where multiple coders independently generate SQL queries, and a reasoning agent selects the best query. Experiments on the Bird-Bench Mini-Dev set reveal that Multi-Agent Discussion can improve small model performance, with up to a 10.6% increase in Execution Accuracy for Qwen2.5-7b-Instruct after three rounds of discussion. Among the pipelines, the LLM Reasoner-Coder pipeline yields the best results, with DeepSeek-R1-32B and QwQ-32B planners boosting Gemma 3 27B IT accuracy from 52.4% to the highest score of 56.4%. Code is available at https://github.com/treeDweller98/bappa-sql.
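The Execution Accuracy metric cited in the abstract above can be illustrated with a minimal sketch. It assumes a query counts as correct when it returns the same rows as the gold query, compared here as order-insensitive multisets; the benchmark's official scorer may compare results differently. The table, the queries, and the helper names `execution_match` and `execution_accuracy` are hypothetical and are not taken from the BAPPA repository.

```python
import sqlite3


def execution_match(conn, predicted_sql, gold_sql):
    """Return True if both queries yield the same rows, ignoring row order."""
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # a predicted query that fails to run counts as wrong
    gold_rows = conn.execute(gold_sql).fetchall()
    # Assumption: rows are compared as multisets via their sorted repr.
    return sorted(map(repr, predicted_rows)) == sorted(map(repr, gold_rows))


def execution_accuracy(conn, pairs):
    """Fraction of (predicted, gold) query pairs whose results match."""
    matches = sum(execution_match(conn, p, g) for p, g in pairs)
    return matches / len(pairs)


# Hypothetical toy database and query pairs, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE singer (name TEXT, age INTEGER);
    INSERT INTO singer VALUES ('Ana', 31), ('Bo', 25), ('Cy', 40);
""")
pairs = [
    # Different SQL text, same result rows: counted as correct.
    ("SELECT name FROM singer WHERE age > 30",
     "SELECT name FROM singer WHERE age >= 31"),
    # Runs, but returns a different count: counted as wrong.
    ("SELECT COUNT(*) FROM singer",
     "SELECT COUNT(*) FROM singer WHERE age < 40"),
    # Misspelled column, fails to execute: counted as wrong.
    ("SELECT nme FROM singer", "SELECT name FROM singer"),
]
accuracy = execution_accuracy(conn, pairs)
print(f"Execution accuracy: {accuracy:.3f}")
```

Scoring on executed results rather than on SQL text means that two differently written queries are both accepted when they retrieve the same data, which is why the first pair above is counted as a match.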

DM-Codec: Distilling Multimodal Representations for Speech Tokenization

Oct 19, 2024

Abstract: Recent advancements in speech-language models have yielded significant improvements in speech tokenization and synthesis. However, effectively mapping the complex, multidimensional attributes of speech into discrete tokens remains challenging. This process demands acoustic, semantic, and contextual information for precise speech representations. Existing speech representations generally fall into two categories: acoustic tokens from audio codecs and semantic tokens from speech self-supervised learning models. Although recent efforts have unified acoustic and semantic tokens for improved performance, they overlook the crucial role of contextual representation in comprehensive speech modeling. Our empirical investigations reveal that the absence of contextual representations results in elevated Word Error Rate (WER) and Word Information Lost (WIL) scores in speech transcriptions. To address these limitations, we propose two novel distillation approaches: (1) a language model (LM)-guided distillation method that incorporates contextual information, and (2) a combined LM and self-supervised speech model (SM)-guided distillation technique that effectively distills multimodal representations (acoustic, semantic, and contextual) into a comprehensive speech tokenizer, termed DM-Codec. The DM-Codec architecture adopts a streamlined encoder-decoder framework with a Residual Vector Quantizer (RVQ) and incorporates the LM and SM during the training process. Experiments show DM-Codec significantly outperforms state-of-the-art speech tokenization models, reducing WER by up to 13.46%, WIL by 9.82%, and improving speech quality by 5.84% and intelligibility by 1.85% on the LibriSpeech benchmark dataset. The code, samples, and model checkpoints are available at https://github.com/mubtasimahasan/DM-Codec.
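For readers unfamiliar with the Word Error Rate metric reported in the abstract above, the following is a minimal sketch of its standard definition: the word-level edit distance (substitutions, deletions, insertions) between a reference transcript and a hypothesis, divided by the number of reference words. The function name and the example sentences are hypothetical, whitespace tokenization and a non-empty reference are assumed, and this is not the evaluation code used by the DM-Codec authors.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# Hypothetical transcripts: one reference word is dropped in the hypothesis.
wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
print(f"WER: {wer:.3f}")
```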

Automatic Detection of Natural Disaster Effect on Paddy Field from Satellite Images using Deep Learning Techniques

Apr 02, 2023

Abstract: This paper aims to detect rice field damage from natural disasters in Bangladesh using high-resolution satellite imagery. The authors developed ground truth data for rice field damage from the field level. First, NDVI differences before and after the disaster are calculated to identify possible crop loss. Areas with a difference at or above the 0.33 threshold are marked as crop loss areas, as significant changes are observed there. The authors also verified crop loss areas by collecting data from local farmers. Next, different band combinations of the satellite data, RGB (Red, Green, Blue) and FCI (False Color Infrared), are used to detect crop loss areas. We used the NDVI difference images as ground truth to train the DeepLabV3plus model. With RGB we obtained an IoU of 0.41, and with FCI an IoU of 0.51. Because FCI uses the NIR, Red, and Blue bands and NDVI is the normalized difference between the NIR and Red bands, a higher IoU for FCI than for RGB is expected. Still, RGB does not perform very badly here, so where other bands are not available, RGB can be used to understand crop loss areas to some extent. The ground truth developed in this paper can be used for segmentation models with very high resolution RGB-only images such as those from Bing, Google, etc.
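The labeling and scoring steps described in the abstract above can be sketched as follows. NDVI is the standard normalized difference (NIR - Red) / (NIR + Red), and the 0.33 threshold comes from the abstract. Taking the difference as "before minus after", the flat per-pixel list layout, the reflectance values, and all function names are assumptions made for illustration, not the authors' implementation.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    return (nir - red) / total if total != 0 else 0.0


def crop_loss_mask(before, after, threshold=0.33):
    """Mark pixels whose NDVI dropped by at least `threshold`.

    `before` and `after` are lists of (nir, red) reflectance pairs,
    one pair per pixel. Assumption: difference = before - after.
    """
    return [ndvi(*b) - ndvi(*a) >= threshold for b, a in zip(before, after)]


def iou(predicted, truth):
    """Intersection over Union of two boolean masks of equal length."""
    intersection = sum(p and t for p, t in zip(predicted, truth))
    union = sum(p or t for p, t in zip(predicted, truth))
    return intersection / union if union else 1.0


# Hypothetical reflectance values for four pixels.
before = [(0.8, 0.1), (0.7, 0.2), (0.6, 0.2), (0.5, 0.4)]
after = [(0.4, 0.3), (0.7, 0.2), (0.3, 0.3), (0.5, 0.35)]
ground_truth = crop_loss_mask(before, after)

# Hypothetical output of a segmentation model on the same four pixels.
predicted = [True, True, False, False]
score = iou(predicted, ground_truth)
print(ground_truth, f"IoU: {score:.3f}")
```

In this toy case the first and third pixels lose enough vegetation signal to be labeled as crop loss, and the prediction overlaps the label on one of the three pixels marked by either mask.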

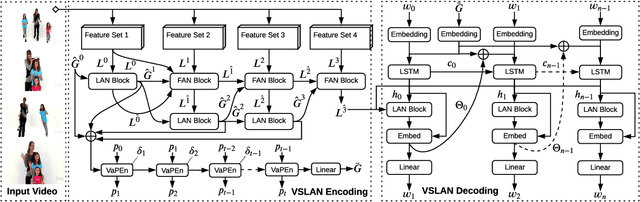

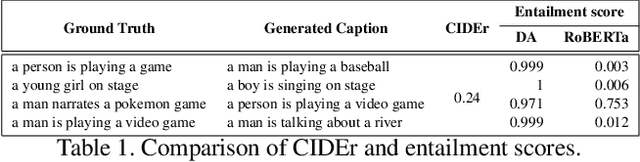

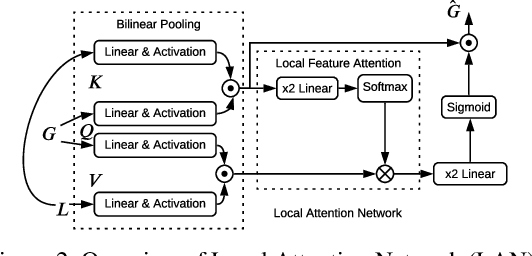

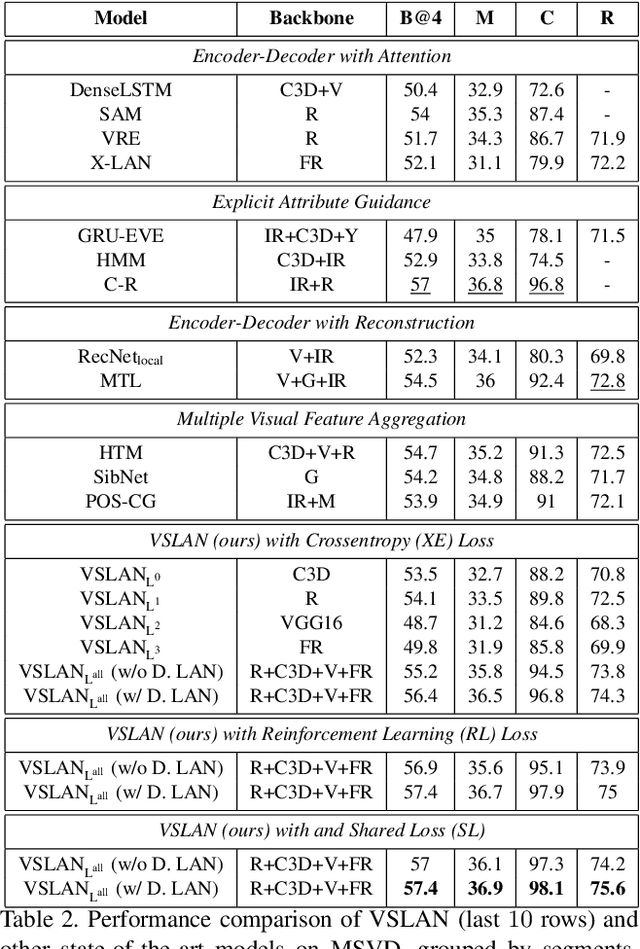

Variational Stacked Local Attention Networks for Diverse Video Captioning

Jan 04, 2022

Abstract: While describing spatio-temporal events in natural language, video captioning models mostly rely on the encoder's latent visual representation. Recent encoder-decoder models attend to encoder features mainly through linear interaction with the decoder. However, growing model complexity for visual data encourages more explicit feature interaction for fine-grained information, which is currently absent in the video captioning domain. Moreover, feature aggregation methods have been used to unveil richer visual representations, either by concatenation or by using a linear layer. Though feature sets for a video semantically overlap to some extent, these approaches result in objective mismatch and feature redundancy. In addition, diversity in captions is a fundamental component of expressing one event from several meaningful perspectives, and it is currently missing in the temporal, i.e., video captioning domain. To this end, we propose the Variational Stacked Local Attention Network (VSLAN), which exploits low-rank bilinear pooling for self-attentive feature interaction and stacks multiple video feature streams in a discount fashion. Each feature stack's learned attributes contribute to our proposed diversity encoding module, followed by the decoding query stage to facilitate end-to-end diverse and natural captions without any explicit supervision on attributes. We evaluate VSLAN on the MSVD and MSR-VTT datasets in terms of syntax and diversity. The CIDEr score of VSLAN outperforms current off-the-shelf methods by 7.8% on MSVD and 4.5% on MSR-VTT, respectively. On the same datasets, VSLAN achieves competitive results in caption diversity metrics.

Unified Spatio-Temporal Modeling for Traffic Forecasting using Graph Neural Network

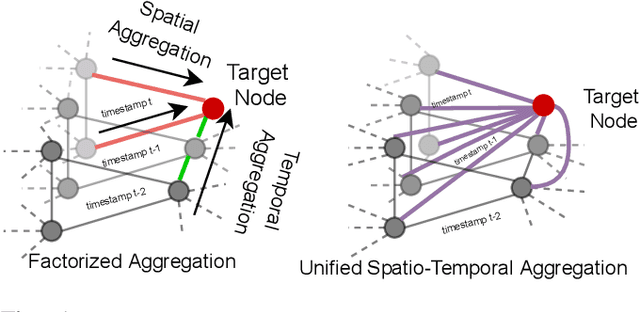

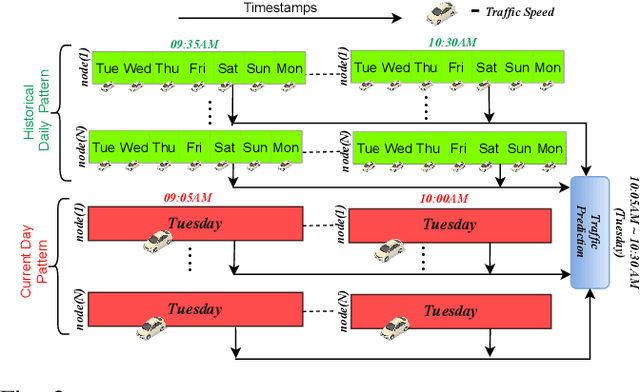

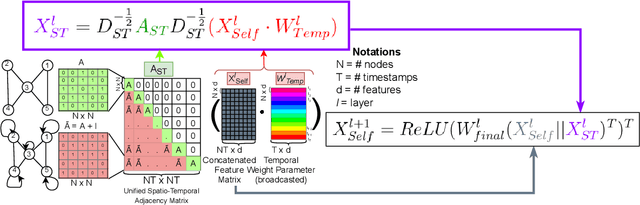

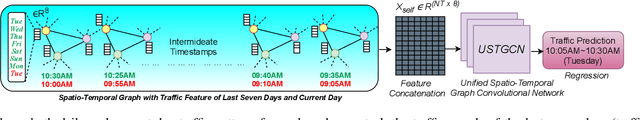

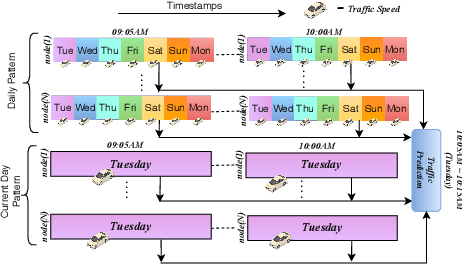

Apr 28, 2021

Abstract: Research in deep learning models to forecast traffic intensities has gained great attention in recent years due to their capability to capture the complex spatio-temporal relationships within the traffic data. However, most state-of-the-art approaches have designed spatial-only (e.g. Graph Neural Networks) and temporal-only (e.g. Recurrent Neural Networks) modules to separately extract spatial and temporal features. We argue that it is less effective to extract the complex spatio-temporal relationship with such factorized modules. Besides, most existing works predict the traffic intensity of a particular time interval based only on the traffic data of the previous one hour of that day, thereby ignoring the repetitive daily/weekly pattern that may exist in the last hour of data. Therefore, we propose a Unified Spatio-Temporal Graph Convolution Network (USTGCN) for traffic forecasting that performs both spatial and temporal aggregation through direct information propagation across different timestamp nodes with the help of spectral graph convolution on a spatio-temporal graph. Furthermore, it captures historical daily patterns in previous days and current-day patterns in current-day traffic data. Finally, we validate our work's effectiveness through experimental analysis, which shows that our model USTGCN can outperform state-of-the-art performances in three popular benchmark datasets from the Performance Measurement System (PeMS). Moreover, the training time is reduced significantly with our proposed USTGCN model.

Node Embedding using Mutual Information and Self-Supervision based Bi-level Aggregation

Apr 27, 2021

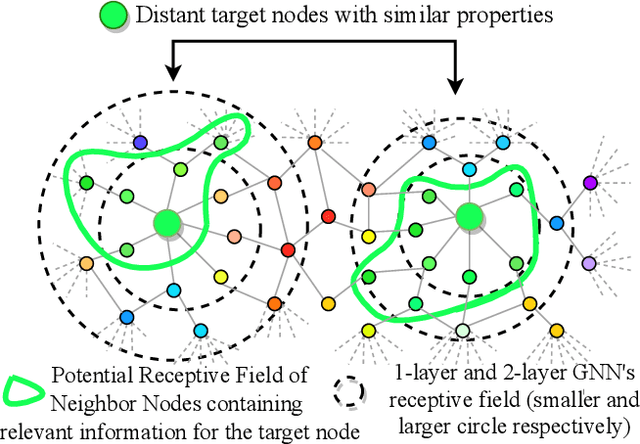

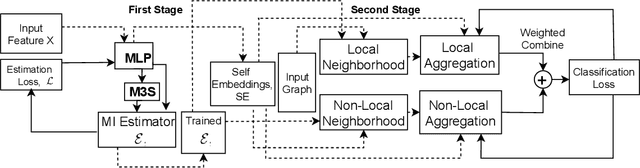

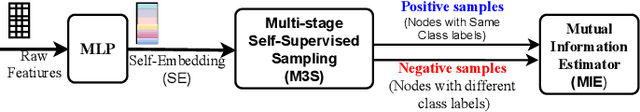

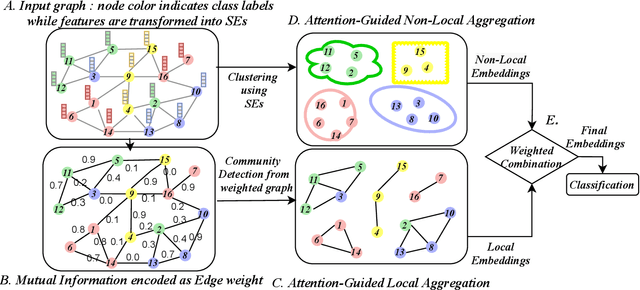

Abstract: Graph Neural Networks (GNNs) learn low-dimensional representations of nodes by aggregating information from their neighborhood in graphs. However, traditional GNNs suffer from two fundamental shortcomings due to their local ($l$-hop neighborhood) aggregation scheme. First, not all nodes in the neighborhood carry relevant information for the target node. Since GNNs do not exclude noisy nodes in their neighborhood, irrelevant information gets aggregated, which reduces the quality of the representation. Second, traditional GNNs also fail to capture long-range non-local dependencies between nodes. To address these limitations, we exploit mutual information (MI) to define two types of neighborhood: 1) Local Neighborhood, where nodes are densely connected within a community and each node would share higher MI with its neighbors, and 2) Non-Local Neighborhood, where MI-based node clustering is introduced to assemble informative but graphically distant nodes in the same cluster. To generate node representations, we combine the embeddings generated by bi-level aggregation: local aggregation to aggregate features from local neighborhoods while avoiding noisy information, and non-local aggregation to aggregate features from non-local neighborhoods. Furthermore, we leverage self-supervised learning to estimate MI with few labeled data. Finally, we show that our model significantly outperforms the state-of-the-art methods in a wide range of assortative and disassortative graphs.

Structure-Aware Hierarchical Graph Pooling using Information Bottleneck

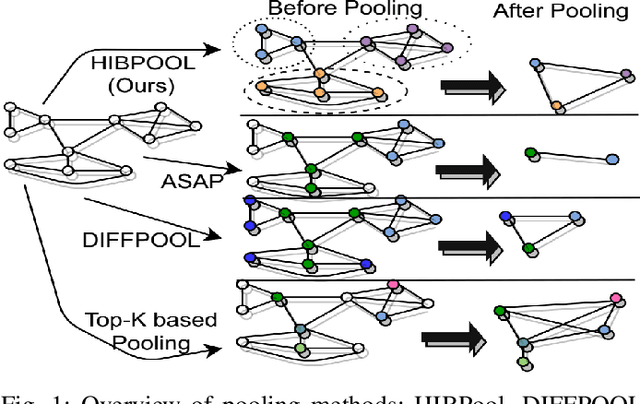

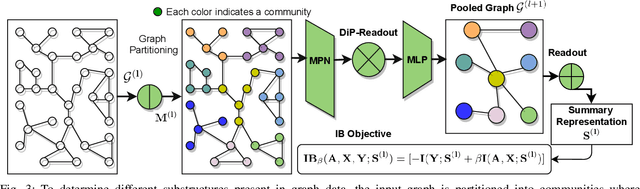

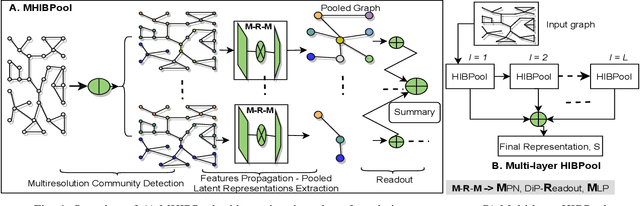

Apr 27, 2021

Abstract: Graph pooling is an essential ingredient of Graph Neural Networks (GNNs) in graph classification and regression tasks. For these tasks, different pooling strategies have been proposed to generate a graph-level representation by downsampling and summarizing nodes' features in a graph. However, most existing pooling methods are unable to capture distinguishable structural information effectively. Besides, they are prone to adversarial attacks. In this work, we propose a novel pooling method named HIBPool, where we leverage the Information Bottleneck (IB) principle that optimally balances the expressiveness and robustness of a model to learn representations of input data. Furthermore, we introduce a novel structure-aware Discriminative Pooling Readout (DiP-Readout) function to capture the informative local subgraph structures in the graph. Finally, our experimental results show that our model significantly outperforms other state-of-the-art methods on several graph classification benchmarks and is more resilient to feature-perturbation attacks than existing pooling methods.

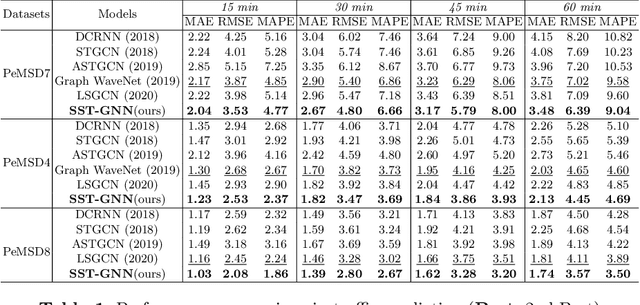

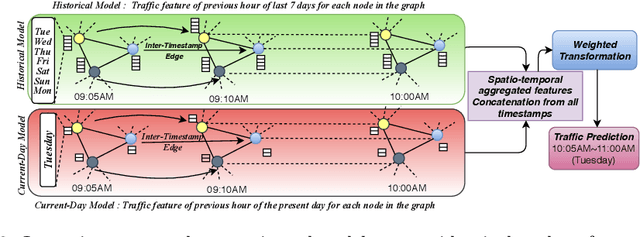

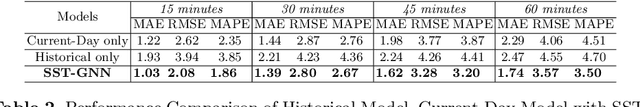

SST-GNN: Simplified Spatio-temporal Traffic forecasting model using Graph Neural Network

Mar 31, 2021

Abstract: To capture spatial relationships and temporal dynamics in traffic data, spatio-temporal models for traffic forecasting have drawn significant attention in recent years. Most recent works employ graph neural networks (GNNs) with multiple layers to capture the spatial dependency. However, road junctions at different hop distances can carry distinct traffic information that should be exploited separately, and existing multi-layer GNNs are unable to discriminate between their impacts. To capture the temporal interrelationship, state-of-the-art approaches commonly use recurrent neural networks, which often fail to capture long-range dependencies. Furthermore, traffic data shows repeated patterns over daily or weekly periods, which should be addressed explicitly. To address these limitations, we have designed a Simplified Spatio-temporal Traffic forecasting GNN (SST-GNN) that effectively encodes the spatial dependency by separately aggregating different neighborhood representations rather than with multiple layers, and captures the temporal dependency with a simple yet effective weighted spatio-temporal aggregation mechanism. We capture the periodic traffic patterns by using a novel position encoding scheme with historical and current data in two different models. With extensive experimental analysis, we have shown that our model significantly outperforms the state-of-the-art models on three real-world traffic datasets from the Performance Measurement System (PeMS).

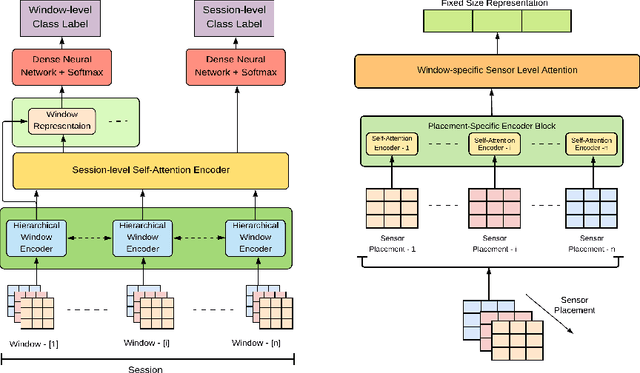

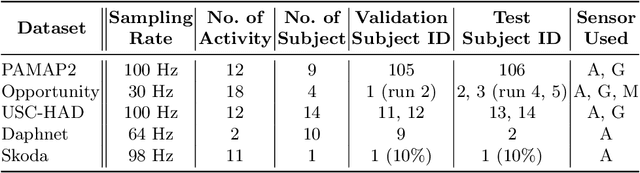

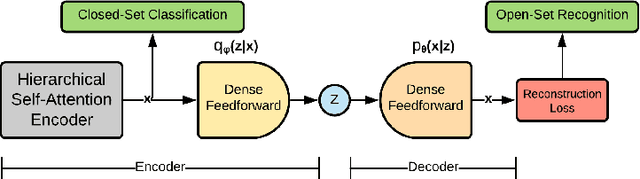

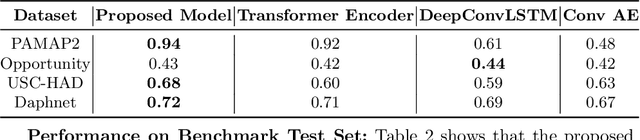

Hierarchical Self Attention Based Autoencoder for Open-Set Human Activity Recognition

Mar 07, 2021

Abstract: Wearable sensor-based human activity recognition is a challenging problem due to the difficulty of modeling spatial and temporal dependencies of sensor signals. Recognition models under the closed-set assumption are forced to yield members of known activity classes as predictions. However, activity recognition models can encounter an unseen activity due to body-worn sensor malfunction or disability of the subject performing the activities. This problem can be addressed by modeling under the assumption of open-set recognition. Hence, the proposed self-attention based approach combines data hierarchically from different sensor placements across time to classify closed-set activities, and it obtains notable performance improvement over state-of-the-art models on five publicly available datasets. The decoder in this autoencoder architecture incorporates self-attention based feature representations from the encoder to detect unseen activity classes in the open-set recognition setting. Furthermore, attention maps generated by the hierarchical model demonstrate explainable selection of features in activity recognition. We conduct extensive leave-one-subject-out validation experiments that indicate significantly improved robustness to noise and subject-specific variability in body-worn sensor signals. The source code is available at: github.com/saif-mahmud/hierarchical-attention-HAR

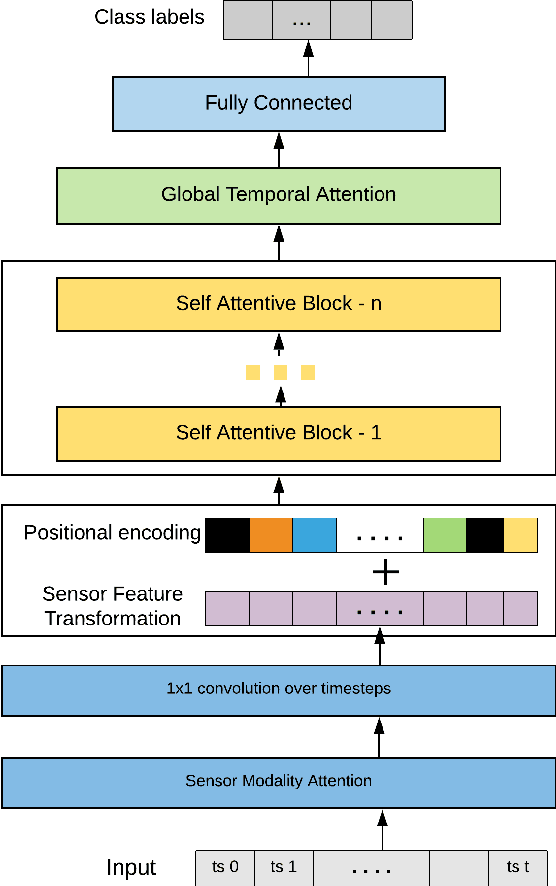

Human Activity Recognition from Wearable Sensor Data Using Self-Attention

Mar 17, 2020

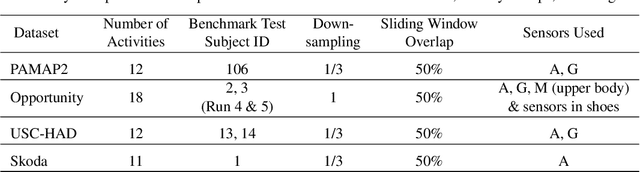

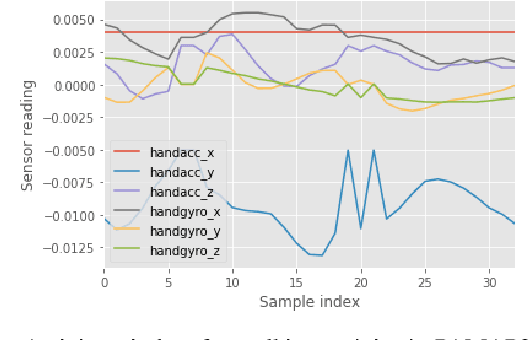

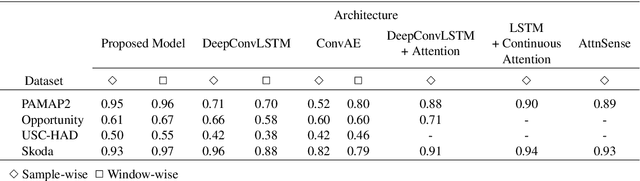

Abstract: Human Activity Recognition from body-worn sensor data poses an inherent challenge in capturing spatial and temporal dependencies of time-series signals. In this regard, existing recurrent, convolutional, and hybrid models for activity recognition struggle to capture spatio-temporal context from the feature space of the sensor reading sequence. To address this complex problem, we propose a self-attention based neural network model that foregoes recurrent architectures and utilizes different types of attention mechanisms to generate a higher-dimensional feature representation used for classification. We performed extensive experiments on four popular publicly available HAR datasets: PAMAP2, Opportunity, Skoda and USC-HAD. Our model achieves significant performance improvement over recent state-of-the-art models on both benchmark test subjects and leave-one-subject-out evaluation. We also observe that the sensor attention maps produced by our model are able to capture the importance of the modality and placement of the sensors in predicting the different activity classes.
