Using Natural Language and Program Abstractions to Instill Human Inductive Biases in Machines

Paper and Code

May 23, 2022

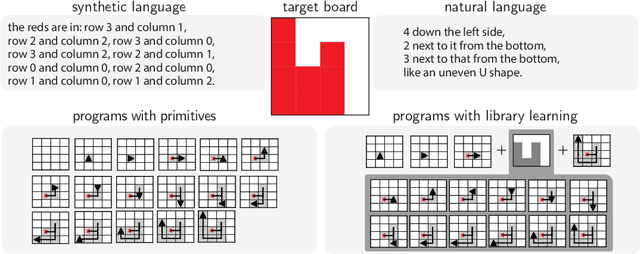

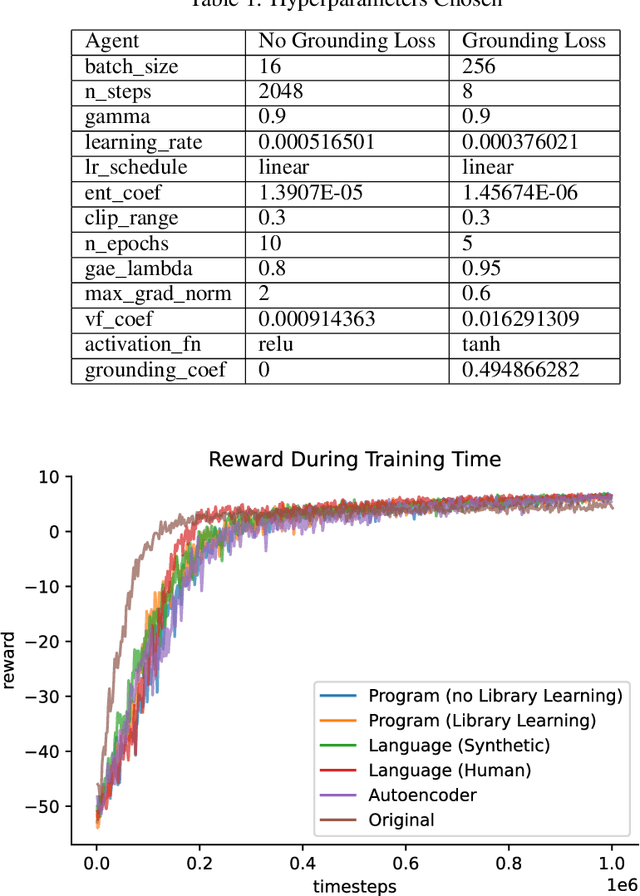

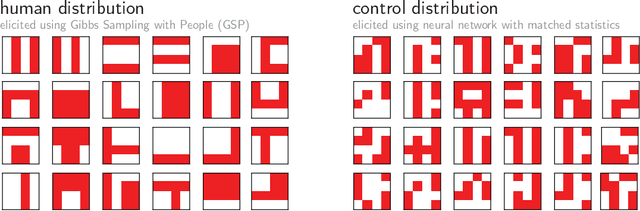

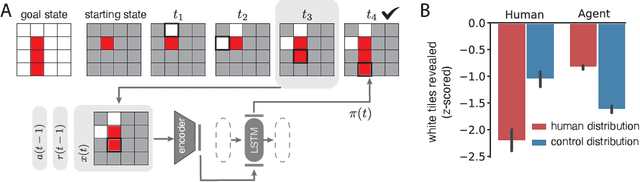

Strong inductive biases are a key component of human intelligence, allowing people to quickly learn a variety of tasks. Although meta-learning has emerged as an approach for endowing neural networks with useful inductive biases, agents trained by meta-learning may acquire very different strategies from humans. We show that co-training these agents on predicting representations from natural language task descriptions and from programs induced to generate such tasks guides them toward human-like inductive biases. Human-generated language descriptions and program induction with library learning both result in more human-like behavior in downstream meta-reinforcement learning agents than less abstract controls (synthetic language descriptions, program induction without library learning), suggesting that the abstraction supported by these representations is key.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge