Pushing the Limits of Semi-Supervised Learning for Automatic Speech Recognition


Oct 20, 2020

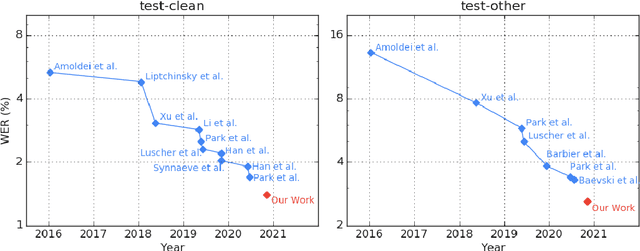

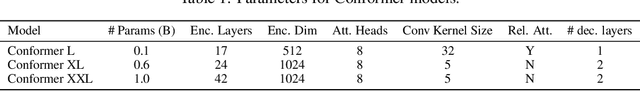

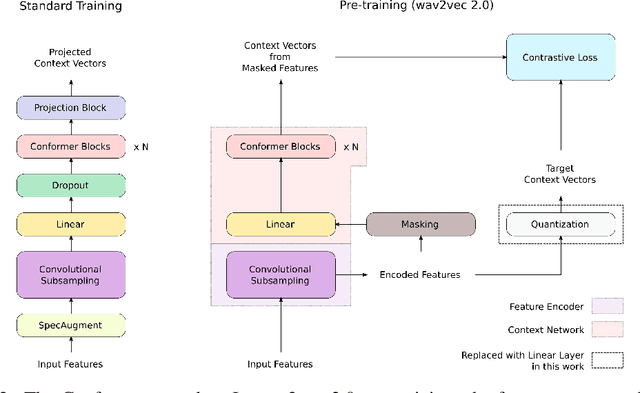

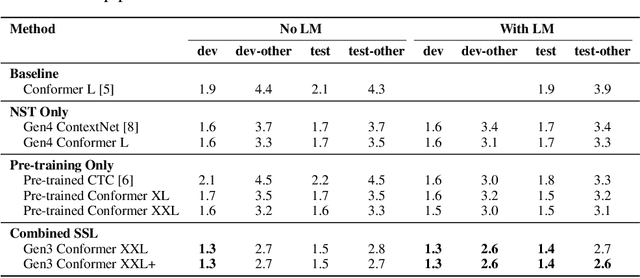

We combine recent developments in semi-supervised learning for automatic speech recognition to obtain state-of-the-art results on LibriSpeech, using the unlabeled audio of the Libri-Light dataset. More precisely, we carry out noisy student training with SpecAugment using giant Conformer models pre-trained with wav2vec 2.0. Doing so yields word error rates (WERs) of 1.4%/2.6% on the LibriSpeech test/test-other sets, against the current state-of-the-art WERs of 1.7%/3.3%.
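To make the reported metric concrete, the following is a minimal sketch of how a word error rate is typically computed: the word-level edit distance between a reference transcript and a hypothesis, divided by the number of reference words. The function names `word_error_rate` and `relative_reduction` and the example sentences are illustrative assumptions, not taken from the paper, and the paper's own scoring pipeline may differ (for example in text normalization).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Hypothetical helper for illustration; no text normalization is applied.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                       # deletion
                curr[j - 1] + 1,                   # insertion
                prev[j - 1] + substitution_cost,   # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer improves on baseline_wer."""
    return (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    # Made-up example: one substitution and one deletion over six words.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))

    # WERs quoted in the abstract: test and test-other.
    print(relative_reduction(1.7, 1.4))
    print(relative_reduction(3.3, 2.6))
```

Applied to the figures in the abstract, the second helper gives a relative WER reduction of roughly 18% on test and 21% on test-other compared with the prior state of the art.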

* 11 pages, 3 figures, 5 tables. Submitted to NeurIPS SAS 2020 Workshop
