Heterogeneous Graph-based Trajectory Prediction using Local Map Context and Social Interactions

Paper and Code

Nov 30, 2023

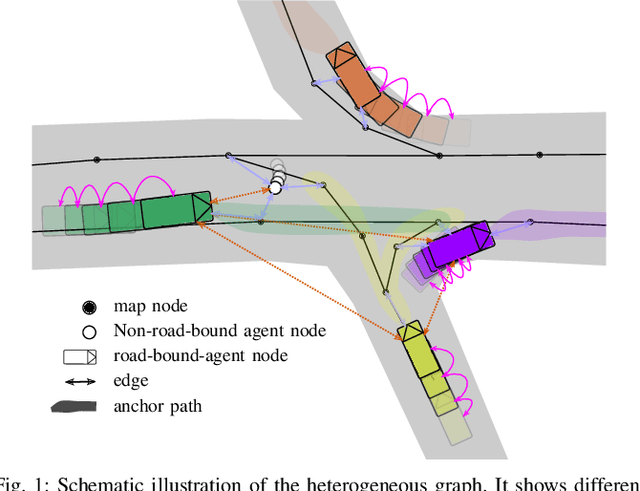

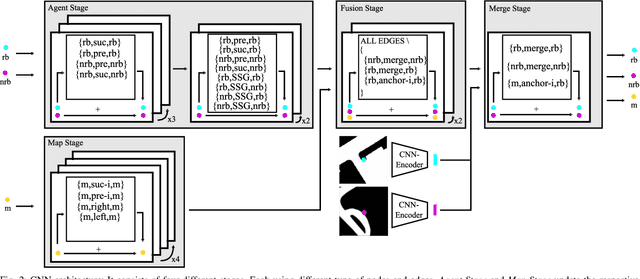

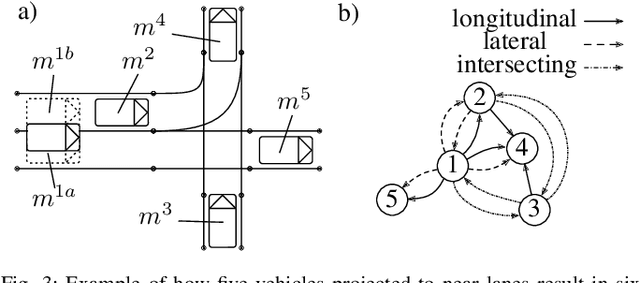

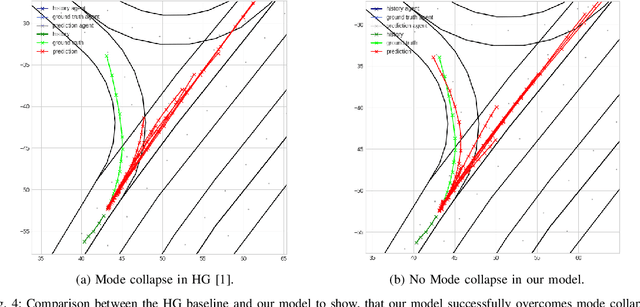

Precisely predicting the future trajectories of surrounding traffic participants is a crucial but challenging problem in autonomous driving, due to complex interactions between traffic agents, map context and traffic rules. Vector-based approaches have recently shown to achieve among the best performances on trajectory prediction benchmarks. These methods model simple interactions between traffic agents but don't distinguish between relation-type and attributes like their distance along the road. Furthermore, they represent lanes only by sequences of vectors representing center lines and ignore context information like lane dividers and other road elements. We present a novel approach for vector-based trajectory prediction that addresses these shortcomings by leveraging three crucial sources of information: First, we model interactions between traffic agents by a semantic scene graph, that accounts for the nature and important features of their relation. Second, we extract agent-centric image-based map features to model the local map context. Finally, we generate anchor paths to enforce the policy in multi-modal prediction to permitted trajectories only. Each of these three enhancements shows advantages over the baseline model HoliGraph.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge