ASMFS: Adaptive-Similarity-based Multi-modality Feature Selection for Classification of Alzheimer's Disease

Oct 16, 2020

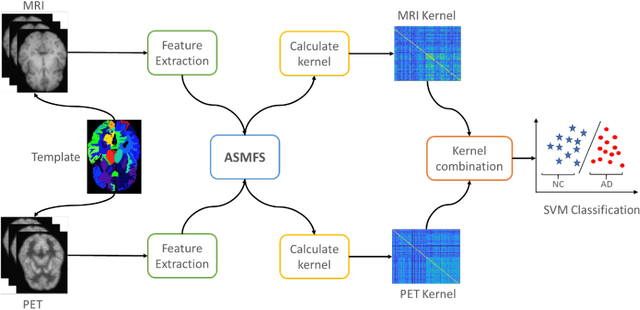

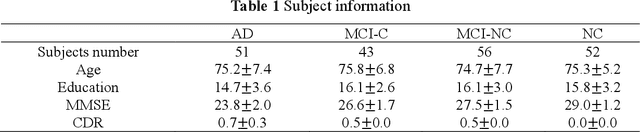

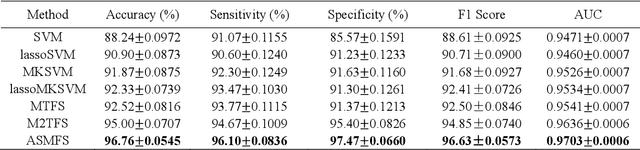

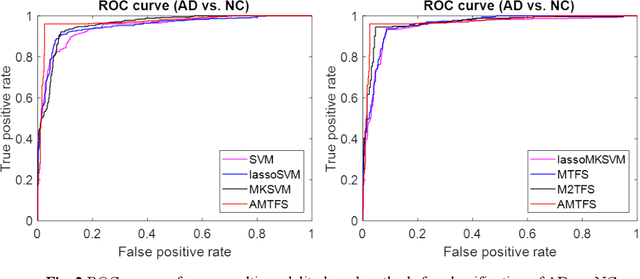

With the increasing amount of high-dimensional heterogeneous data to be processed, multi-modality feature selection has become an important research direction in medical image analysis. Traditional methods usually describe the data structure with a fixed, predefined similarity matrix for each modality separately, without considering the potential relationship structure across modalities. In this paper, we propose a novel multi-modality feature selection method that performs feature selection and local similarity learning simultaneously. Specifically, a similarity matrix is learned by jointly considering the different imaging modalities, while feature selection is conducted by imposing a sparse l_{2,1}-norm constraint. The effectiveness of the proposed joint learning method is demonstrated by experimental results on the Alzheimer's Disease Neuroimaging Initiative (ADNI) dataset, where it outperforms existing state-of-the-art multi-modality approaches.
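To make the role of the l_{2,1} norm concrete, the following is a minimal sketch of how such a norm is computed and how it can be read as a feature selector. It is not the paper's implementation. The layout of the weight matrix `W` (one row per feature, one column per modality), the function names `l21_norm` and `selected_features`, the tolerance, and the toy values are all assumptions made for illustration.

```python
import numpy as np


def l21_norm(W):
    """Return the l_{2,1} norm of W: the sum of the Euclidean norms of its rows."""
    return float(np.sum(np.linalg.norm(W, axis=1)))


def selected_features(W, tol=1e-8):
    """Return indices of rows whose Euclidean norm exceeds tol.

    Under an l_{2,1} penalty, whole rows tend to shrink to zero together,
    so a feature is either kept for every column or dropped for every column.
    """
    row_norms = np.linalg.norm(W, axis=1)
    return np.flatnonzero(row_norms > tol)


# Hypothetical weight matrix (assumed layout): 4 features x 2 modalities.
W = np.array([
    [3.0, 4.0],   # row norm 5
    [0.0, 0.0],   # row norm 0, feature discarded
    [6.0, 8.0],   # row norm 10
    [0.0, 0.0],   # row norm 0, feature discarded
])

print(l21_norm(W))           # sum of row norms: 5 + 0 + 10 + 0
print(selected_features(W))  # indices of the rows with non-zero norm
```

Because the penalty sums row norms rather than individual entries, minimizing it encourages sparsity at the level of entire rows, which is why it is commonly used for joint feature selection across several tasks or modalities.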
