Abductive Commonsense Reasoning

Paper and Code

Aug 15, 2019

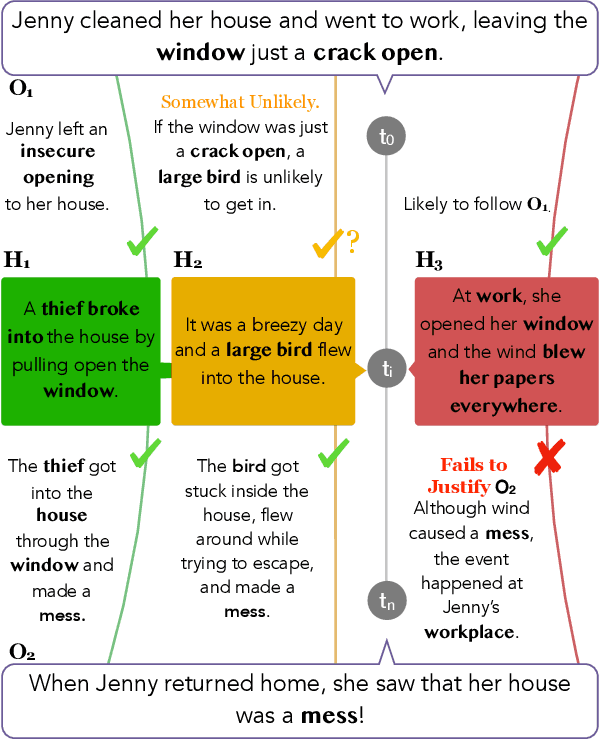

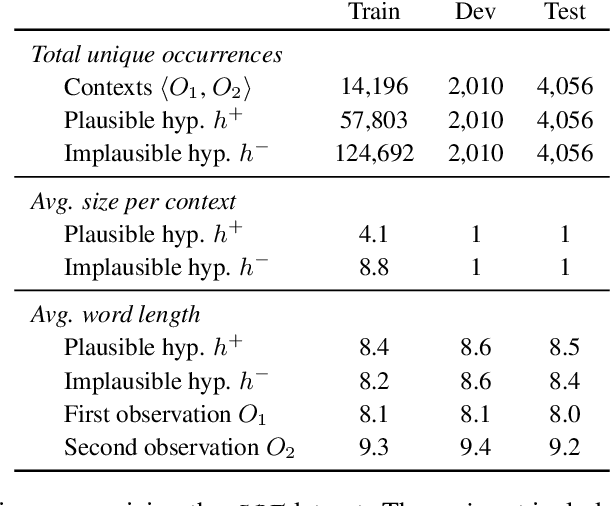

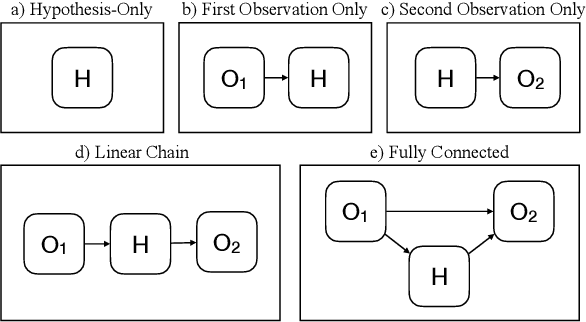

Abductive reasoning is inference to the most plausible explanation. For example, if Jenny finds her house in a mess when she returns from work, and remembers that she left a window open, she can hypothesize that a thief broke into her house and caused the mess, as the most plausible explanation. While abduction has long been considered to be at the core of how people interpret and read between the lines in natural language (Hobbs et al., 1988), there has been relatively little NLP research in support of abductive natural language inference. We present the first study that investigates the viability of language-based abductive reasoning. We conceptualize a new task of Abductive NLI and introduce a challenge dataset, ART, that consists of over 20k commonsense narrative contexts and 200k explanations, formulated as multiple choice questions for easy automatic evaluation. We establish comprehensive baseline performance on this task based on state-of-the-art NLI and language models, which leads to 68.9% accuracy, well below human performance (91.4%). Our analysis leads to new insights into the types of reasoning that deep pre-trained language models fail to perform -- despite their strong performance on the related but fundamentally different task of entailment NLI -- pointing to interesting avenues for future research.
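To make the multiple-choice formulation concrete, the following is a minimal sketch of how one such question and its accuracy metric might be represented. The class `AbductiveItem`, its field names, the toy explanations, and the `always_first` baseline are all hypothetical illustrations. They are not the ART dataset's actual schema or the paper's models.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class AbductiveItem:
    """One multiple-choice question (hypothetical layout, not the ART schema)."""
    context: str                  # the narrative context to be explained
    explanations: Sequence[str]   # candidate explanations
    answer: int                   # index of the most plausible explanation


def accuracy(items: Sequence[AbductiveItem],
             choose: Callable[[AbductiveItem], int]) -> float:
    """Fraction of items for which `choose` returns the labeled answer."""
    if not items:
        raise ValueError("accuracy is undefined for an empty item list")
    correct = sum(1 for item in items if choose(item) == item.answer)
    return correct / len(items)


def always_first(item: AbductiveItem) -> int:
    """Trivial placeholder baseline: always pick the first explanation."""
    return 0


# Toy items built from the Jenny example in the abstract; the wording of the
# candidate explanations is made up for illustration only.
CONTEXT = "Jenny left a window open. She returned from work to find her house in a mess."
THIEF = "A thief broke into her house through the open window."
IMPLAUSIBLE = "Jenny cleaned the whole house before leaving for work."

toy_items = [
    AbductiveItem(CONTEXT, (THIEF, IMPLAUSIBLE), answer=0),
    AbductiveItem(CONTEXT, (IMPLAUSIBLE, THIEF), answer=1),
]

baseline_accuracy = accuracy(toy_items, always_first)
```

Because each item carries a single labeled index, scoring reduces to an exact-match count, which is what makes this kind of formulation easy to evaluate automatically. A real system would replace `always_first` with a model that scores each candidate explanation against the context.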
