Ziwu Song

MoiréTac: A Dual-Mode Visuotactile Sensor for Multidimensional Perception Using Moiré Pattern Amplification

Sep 16, 2025

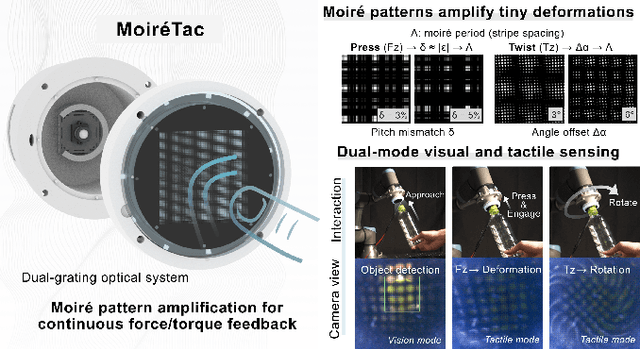

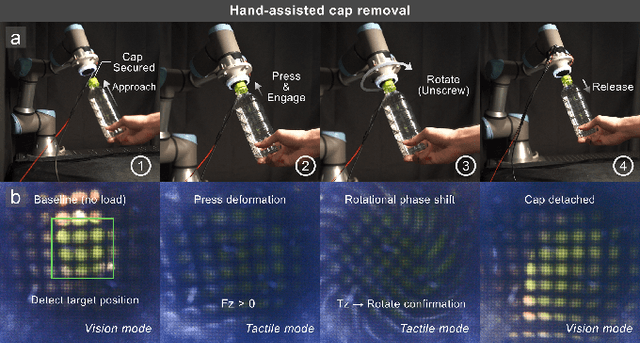

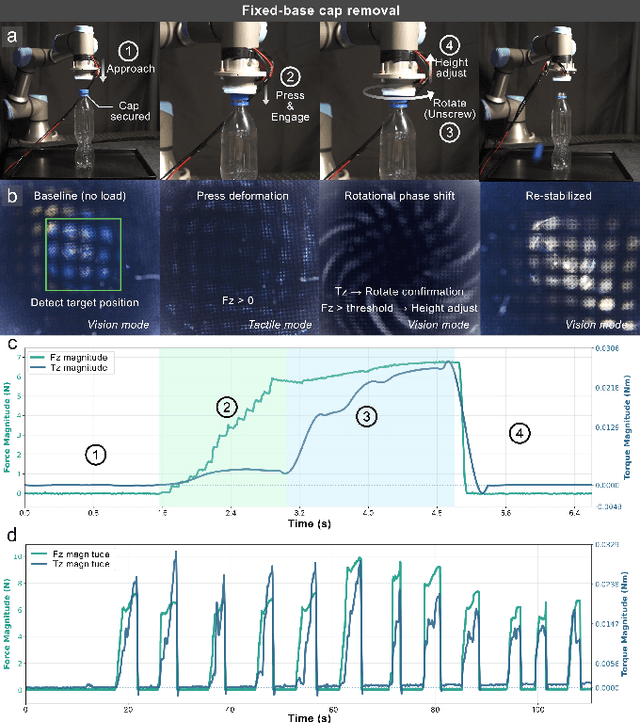

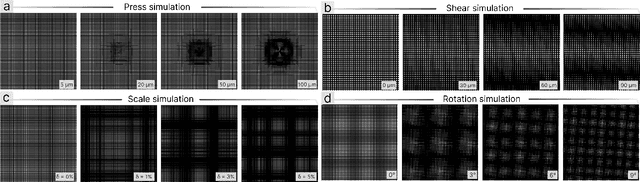

Abstract: Visuotactile sensors typically employ sparse marker arrays that limit spatial resolution and lack clear analytical force-to-image relationships. To solve this problem, we present MoiréTac, a dual-mode sensor that generates dense interference patterns via overlapping micro-gratings within a transparent architecture. When two gratings overlap with misalignment, they create moiré patterns that amplify microscopic deformations. The design preserves optical clarity for vision tasks while producing continuous moiré fields for tactile sensing, enabling simultaneous 6-axis force/torque measurement, contact localization, and visual perception. We combine physics-based features (brightness, phase gradient, orientation, and period) from moiré patterns with deep spatial features. These are mapped to 6-axis force/torque measurements, enabling interpretable regression through end-to-end learning. Experimental results demonstrate three capabilities: force/torque measurement with $R^2 > 0.98$ across tested axes; sensitivity tuning through geometric parameters (threefold gain adjustment); and vision functionality for object classification despite moiré overlay. Finally, we integrate the sensor into a robotic arm for cap removal with coordinated force and torque control, validating its potential for dexterous manipulation.
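The amplification effect mentioned in the abstract can be illustrated with textbook moiré geometry. The sketch below is not taken from the paper: the function names and the grating values (100 and 110 µm periods, 0.1 rad rotation) are hypothetical, chosen only to show how a small grating shift becomes a much larger fringe shift.

```python
import math

def moire_period_parallel(p1: float, p2: float) -> float:
    """Beat period of two parallel line gratings with periods p1 and p2."""
    if p1 == p2:
        return math.inf  # identical parallel gratings produce no fringes
    return p1 * p2 / abs(p1 - p2)

def moire_period_rotated(p: float, theta_rad: float) -> float:
    """Fringe period of two identical gratings (period p) rotated by theta."""
    return p / (2.0 * math.sin(abs(theta_rad) / 2.0))

def displacement_gain(p_moved: float, p_moire: float) -> float:
    """Fringe shift per unit shift of the grating whose period is p_moved."""
    return p_moire / p_moved

# Hypothetical values in micrometres, not the sensor's actual parameters.
p_m = moire_period_parallel(100.0, 110.0)
gain = displacement_gain(100.0, p_m)
print(f"moire period: {p_m:.1f} um, displacement gain: {gain:.1f}x")
print(f"rotated case: {moire_period_rotated(100.0, 0.1):.1f} um")
```

Under these assumed values, a 1 µm shift of the finer grating moves the fringes by about 11 µm, which is one way to read the abstract's claim that sensitivity can be tuned through geometric parameters.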

UltraTac: Integrated Ultrasound-Augmented Visuotactile Sensor for Enhanced Robotic Perception

Aug 29, 2025

Abstract: Visuotactile sensors provide high-resolution tactile information but are incapable of perceiving the material features of objects. We present UltraTac, an integrated sensor that combines visuotactile imaging with ultrasound sensing through a coaxial optoacoustic architecture. The design shares structural components and achieves consistent sensing regions for both modalities. Additionally, we incorporate acoustic matching into the traditional visuotactile sensor structure, enabling integration of the ultrasound sensing modality without compromising visuotactile performance. Through tactile feedback, we dynamically adjust the operating state of the ultrasound module to achieve flexible functional coordination. Systematic experiments demonstrate three key capabilities: proximity sensing in the 3-8 cm range ($R^2=0.90$), material classification (average accuracy: 99.20%), and texture-material dual-mode object recognition achieving 92.11% accuracy on a 15-class task. Finally, we integrate the sensor into a robotic manipulation system to concurrently detect container surface patterns and internal content, which verifies its potential for advanced human-machine interaction and precise robotic manipulation.

Depth Restoration of Hand-Held Transparent Objects for Human-to-Robot Handover

Aug 27, 2024

Abstract: Transparent objects are common in daily life, but their unique optical properties pose challenges for RGB-D cameras, which struggle to capture accurate depth information. For assistant robots, accurately perceiving transparent objects held by humans is essential for effective human-robot interaction. This paper presents a Hand-Aware Depth Restoration (HADR) method for hand-held transparent objects, based on creating an implicit neural representation function from a single RGB-D image. The proposed method uses hand posture as guidance to leverage semantic and geometric information. To train and evaluate the proposed method, we create a high-fidelity synthetic dataset called TransHand-14K with a real-to-sim data generation scheme. Experiments show that our method achieves better performance and generalization than existing methods. We further develop a real-world human-to-robot handover system based on the proposed depth restoration method, demonstrating its application value in human-robot interaction.

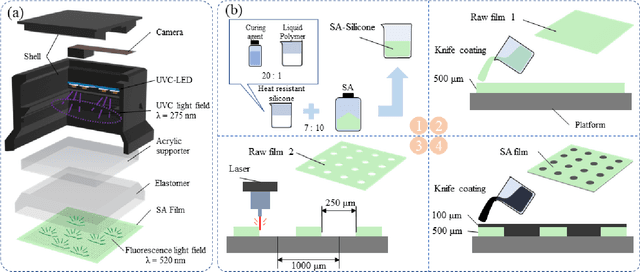

SATac: A Thermoluminescence Enabled Tactile Sensor for Concurrent Perception of Temperature, Pressure, and Shear

Feb 01, 2024

Abstract: Most vision-based tactile sensors use elastomer deformation to infer tactile information and therefore cannot sense some modalities, such as temperature. As an important part of human tactile perception, temperature sensing can help robots better interact with the environment. In this work, we propose a novel multimodal vision-based tactile sensor, SATac, which can simultaneously perceive temperature, pressure, and shear. SATac utilizes the thermoluminescence of strontium aluminate (SA) to sense a wide range of temperatures with exceptional resolution. Additionally, pressure and shear can be perceived by analyzing a Voronoi diagram. A series of experiments is conducted to verify the performance of the proposed sensor. We also discuss possible application scenarios and demonstrate how SATac could benefit robot perception capabilities.
