Zian Li

Rethinking Diffusion Models with Symmetries through Canonicalization with Applications to Molecular Graph Generation

Feb 16, 2026

Abstract:Many generative tasks in chemistry and science involve distributions invariant to group symmetries (e.g., permutation and rotation). A common strategy enforces invariance and equivariance through architectural constraints such as equivariant denoisers and invariant priors. In this paper, we challenge this tradition through the alternative canonicalization perspective: first map each sample to an orbit representative with a canonical pose or order, train an unconstrained (non-equivariant) diffusion or flow model on the canonical slice, and finally recover the invariant distribution by sampling a random symmetry transform at generation time. Building on a formal quotient-space perspective, our work provides a comprehensive theory of canonical diffusion by proving (i) the correctness, universality and superior expressivity of canonical generative models over invariant targets, and (ii) that canonicalization accelerates training by removing diffusion score complexity induced by group mixtures and reducing conditional variance in flow matching. We then show that aligned priors and optimal transport act complementarily with canonicalization and further improve training efficiency. We instantiate the framework for molecular graph generation under $S_n \times SE(3)$ symmetries. By leveraging geometric spectra-based canonicalization and mild positional encodings, canonical diffusion significantly outperforms equivariant baselines in 3D molecule generation tasks, with similar or even less computation. Moreover, with a novel architecture, Canon, CanonFlow achieves state-of-the-art performance on the challenging GEOM-DRUG dataset, and the advantage remains large in few-step generation.
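The canonicalize-then-randomize recipe described in this abstract can be illustrated with a minimal NumPy sketch. It is not the paper's method: the PCA-based pose and the lexicographic atom ordering stand in, as assumptions, for the geometric spectra-based canonicalization the abstract mentions, and the function names `canonicalize`, `random_rotation`, and `sample_invariant` are hypothetical. The sketch also leaves the sign ambiguity of the principal axes unresolved, which a real canonicalization would need to handle.

```python
import numpy as np

def canonicalize(x):
    """Map an (n, 3) point cloud to one representative of its orbit.

    Assumption: PCA pose plus lexicographic ordering, used here only
    for illustration.
    """
    x = x - x.mean(axis=0)                   # remove translation
    _, vecs = np.linalg.eigh(x.T @ x)        # principal axes of the cloud
    if np.linalg.det(vecs) < 0:              # keep a proper rotation (det = +1)
        vecs[:, 0] *= -1
    x = x @ vecs                             # express points in the principal-axis frame
    order = np.lexsort((x[:, 0], x[:, 1], x[:, 2]))  # fix an atom ordering
    return x[order]

def random_rotation(rng):
    """Draw a uniformly random rotation in SO(3) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))              # make the factorization unique
    if np.linalg.det(q) < 0:                 # map reflections to rotations
        q[:, 0] *= -1
    return q

def sample_invariant(canonical_sample, rng):
    """Apply a random rotation and a random permutation to a canonical sample."""
    n = canonical_sample.shape[0]
    rotated = canonical_sample @ random_rotation(rng).T
    return rotated[rng.permutation(n)]
```

In this sketch, an unconstrained generative model would be trained on the outputs of `canonicalize`, and `sample_invariant` would be applied to each generated sample to spread it back over its orbit.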

GeoRecon: Graph-Level Representation Learning for 3D Molecules via Reconstruction-Based Pretraining

Jun 16, 2025

Abstract:The pretraining-and-finetuning paradigm has driven significant advances across domains such as natural language processing and computer vision, with representative pretraining paradigms such as masked language modeling and next-token prediction. However, in molecular representation learning, the task design remains largely limited to node-level denoising, which is effective at modeling local atomic environments, yet may be insufficient for capturing the global molecular structure required by graph-level property prediction tasks, such as energy estimation and molecular regression. In this work, we present GeoRecon, a novel graph-level pretraining framework that shifts the focus from individual atoms to the molecule as an integrated whole. GeoRecon introduces a graph-level reconstruction task: during pretraining, the model is trained to generate an informative graph representation capable of accurately guiding reconstruction of the molecular geometry. This encourages the model to learn coherent, global structural features rather than isolated atomic details. Without relying on additional supervision or external data, GeoRecon outperforms node-centric baselines on multiple molecular benchmarks (e.g., QM9, MD17), demonstrating the benefit of incorporating graph-level reconstruction for learning more holistic and geometry-aware molecular embeddings.

Geometric Representation Condition Improves Equivariant Molecule Generation

Oct 04, 2024

Abstract:Recent advancements in molecular generative models have demonstrated substantial potential in accelerating scientific discovery, particularly in drug design. However, these models often face challenges in generating high-quality molecules, especially in conditional scenarios where specific molecular properties must be satisfied. In this work, we introduce GeoRCG, a general framework to enhance the performance of molecular generative models by integrating geometric representation conditions. We decompose the molecule generation process into two stages: first, generating an informative geometric representation; second, generating a molecule conditioned on the representation. Compared to directly generating a molecule, the relatively easy-to-generate representation in the first stage guides the second-stage generation to reach a high-quality molecule in a more goal-oriented and much faster way. Leveraging EDM as the base generator, we observe significant quality improvements in unconditional molecule generation on the widely used QM9 and GEOM-DRUG datasets. More notably, in the challenging conditional molecular generation task, our framework achieves an average 31% performance improvement over state-of-the-art approaches, highlighting the superiority of conditioning on semantically rich geometric representations over conditioning on individual property values as in previous approaches. Furthermore, we show that, with such representation guidance, the number of diffusion steps can be reduced to as few as 100 while maintaining generation quality superior to that achieved with 1,000 steps, thereby significantly accelerating the generation process.

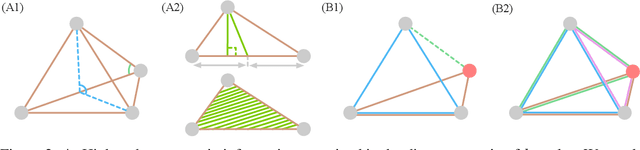

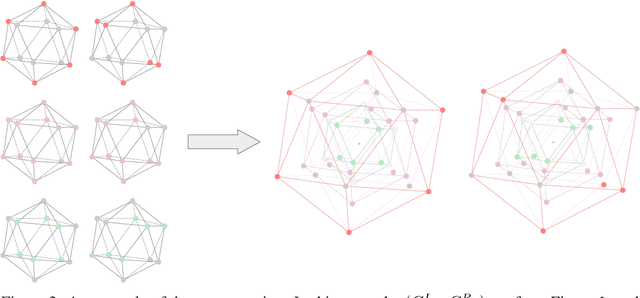

On the Completeness of Invariant Geometric Deep Learning Models

Feb 07, 2024

Abstract:Invariant models, one important class of geometric deep learning models, are capable of generating meaningful geometric representations by leveraging informative geometric features. These models are characterized by their simplicity, good experimental results and computational efficiency. However, their theoretical expressive power remains unclear, restricting a deeper understanding of the potential of such models. In this work, we concentrate on characterizing the theoretical expressiveness of invariant models. We first rigorously bound the expressiveness of the most classical invariant model, Vanilla DisGNN (message passing neural networks incorporating distance), restricting its unidentifiable cases to only highly symmetric geometric graphs. To break the symmetry of these corner cases, we introduce a simple yet E(3)-complete invariant design by nesting Vanilla DisGNN, named GeoNGNN. Leveraging GeoNGNN as a theoretical tool, we prove, for the first time, the E(3)-completeness of three well-established geometric models: DimeNet, GemNet and SphereNet. Our results fill the gap in the theoretical power of invariant models, contributing to a rigorous and comprehensive understanding of their capabilities. Experimentally, GeoNGNN exhibits good inductive bias in capturing local environments, and achieves competitive results w.r.t. complicated models relying on high-order invariant/equivariant representations while exhibiting significantly faster computational speed.

Is Distance Matrix Enough for Geometric Deep Learning?

Feb 11, 2023

Abstract:Graph Neural Networks (GNNs) are often used for tasks involving the geometry of a given graph, such as molecular dynamics simulation. While the distance matrix of a graph contains the complete geometric structure information, whether GNNs can learn this geometry solely from the distance matrix has yet to be studied. In this work, we first demonstrate that Message Passing Neural Networks (MPNNs) are insufficient for learning the geometry of a graph from its distance matrix by constructing families of geometric graphs which cannot be distinguished by MPNNs. We then propose $k$-DisGNNs, which can effectively exploit the rich geometry contained in the distance matrix. We demonstrate the high expressive power of our models and prove that some existing well-designed geometric models can be unified by $k$-DisGNNs as special cases. Most importantly, we establish a connection between geometric deep learning and traditional graph representation learning, showing that those highly expressive GNN models originally designed for graph structure learning can also be applied to geometric deep learning problems with impressive performance, and that existing complex, equivariant models are not the only solution. Experimental results verify our theory.
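The premise that the distance matrix carries the complete geometric structure can be checked numerically with a short NumPy sketch. It is an illustration only and unrelated to the $k$-DisGNN architecture: the function names are hypothetical, and the reconstruction step uses classical multidimensional scaling, a standard technique that recovers coordinates from pairwise distances up to a rigid motion or reflection.

```python
import numpy as np

def distance_matrix(x):
    """Pairwise Euclidean distances of an (n, 3) point cloud."""
    diff = x[:, None, :] - x[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def coords_from_distances(d, dim=3):
    """Recover coordinates (up to an E(3) transform) by classical MDS."""
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering   # Gram matrix of centered points
    vals, vecs = np.linalg.eigh(gram)                # eigenvalues in ascending order
    vals, vecs = vals[-dim:], vecs[:, -dim:]         # keep the top `dim` components
    return vecs * np.sqrt(np.clip(vals, 0.0, None))

rng = np.random.default_rng(0)
points = rng.normal(size=(6, 3))
d = distance_matrix(points)

# The distance matrix is unchanged by a random orthogonal map plus translation.
q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
moved = points @ q.T + rng.normal(size=3)
same_after_motion = np.allclose(distance_matrix(moved), d)

# Coordinates rebuilt from distances alone reproduce the same distance matrix.
rebuilt = coords_from_distances(d)
same_after_rebuild = np.allclose(distance_matrix(rebuilt), d)
```

The sketch shows only that the information is present in the distance matrix; whether a given network can extract it is the question the abstract addresses.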
