Zhongping Ji

CliffordNet: All You Need is Geometric Algebra

Jan 11, 2026

Abstract: Modern computer vision architectures, from CNNs to Transformers, predominantly rely on the stacking of heuristic modules: spatial mixers (Attention/Conv) followed by channel mixers (FFNs). In this work, we challenge this paradigm by returning to mathematical first principles. We propose the \textbf{Clifford Algebra Network (CAN)}, also referred to as CliffordNet, a vision backbone grounded purely in Geometric Algebra. Instead of engineering separate modules for mixing and memory, we derive a unified interaction mechanism based on the \textbf{Clifford Geometric Product} ($uv = u \cdot v + u \wedge v$). This operation ensures algebraic completeness regarding the Geometric Product by simultaneously capturing feature coherence (via the generalized inner product) and structural variation (via the exterior wedge product). Implemented via an efficient sparse rolling mechanism with \textbf{strict linear complexity $\mathcal{O}(N)$}, our model reveals a surprising emergent property: the geometric interaction is so representationally dense that standard Feed-Forward Networks (FFNs) become redundant. Empirically, CliffordNet establishes a new Pareto frontier: our \textbf{Nano} variant achieves \textbf{76.41\%} accuracy on CIFAR-100 with only \textbf{1.4M} parameters, effectively matching the heavy-weight ResNet-18 (11.2M) with \textbf{$8\times$ fewer parameters}, while our \textbf{Base} variant sets a new SOTA for tiny models at \textbf{78.05\%}. Our results suggest that global understanding can emerge solely from rigorous, algebraically complete local interactions, potentially signaling a shift where \textit{geometry is all you need}. Code is available at https://github.com/ParaMind2025/CAN.
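As a small illustration of the identity $uv = u \cdot v + u \wedge v$ quoted in the abstract, the sketch below splits the geometric product of two Euclidean vectors into its scalar (inner) part and its bivector (wedge) part. It is plain Python written for this listing, not the CliffordNet code: the function names are hypothetical, and the sparse rolling mechanism mentioned in the abstract is not modeled.

```python
from itertools import combinations


def inner(u, v):
    """Symmetric part of the geometric product: the scalar u . v."""
    return sum(a * b for a, b in zip(u, v))


def wedge(u, v):
    """Antisymmetric part: bivector components u_i*v_j - u_j*v_i for i < j."""
    return {
        (i, j): u[i] * v[j] - u[j] * v[i]
        for i, j in combinations(range(len(u)), 2)
    }


def geometric_product(u, v):
    """Return the (scalar, bivector) parts of uv for two vectors in Euclidean R^n."""
    if len(u) != len(v):
        raise ValueError("u and v must have the same dimension")
    return inner(u, v), wedge(u, v)


u = [1.0, 2.0, 3.0]
v = [4.0, 5.0, 6.0]
scalar, bivector = geometric_product(u, v)
# scalar == 32.0
# bivector == {(0, 1): -3.0, (0, 2): -6.0, (1, 2): -3.0}
```

Swapping the arguments leaves the scalar part unchanged and negates every bivector component, which is the sense in which the two parts capture complementary information about the pair.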

RiemannFormer: A Framework for Attention in Curved Spaces

Jun 09, 2025

Abstract: This research endeavors to offer insights into unlocking the further potential of transformer-based architectures. One of the primary motivations is to offer a geometric interpretation for the attention mechanism in transformers. In our framework, the attention mainly involves metric tensors, tangent spaces, inner product, and how they relate to each other. These quantities and structures at discrete positions are intricately interconnected via the parallel transport of tangent vectors. To make the learning process more efficient, we reduce the number of parameters through ingenious predefined configurations. Moreover, we introduce an explicit mechanism to highlight a neighborhood by attenuating the remote values, given that transformers inherently neglect local inductive bias. Experimental results demonstrate that our modules deliver significant performance improvements relative to the baseline. More evaluation experiments on visual and large language models will be launched successively.

MHS-VM: Multi-Head Scanning in Parallel Subspaces for Vision Mamba

Jun 10, 2024

Abstract: Recently, State Space Models (SSMs), with Mamba as a prime example, have shown great promise for long-range dependency modeling with linear complexity. Vision Mamba and subsequent architectures have since been proposed, and they perform well on visual tasks. The crucial step in applying Mamba to visual tasks is to arrange 2D visual features in a sequential manner. To effectively organize and construct visual features within the 2D image space through 1D selective scan, we propose a novel Multi-Head Scan (MHS) module. The embeddings extracted from the preceding layer are projected into multiple lower-dimensional subspaces. Subsequently, within each subspace, the selective scan is performed along distinct scan routes. The resulting sub-embeddings, obtained from the multi-head scan process, are then integrated and ultimately projected back into the high-dimensional space. Moreover, we incorporate a Scan Route Attention (SRA) mechanism to enhance the module's capability to discern complex structures. To validate the efficacy of our module, we exclusively substitute the 2D-Selective-Scan (SS2D) block in VM-UNet with our proposed module, and we train our models from scratch without using any pre-trained weights. The results indicate a significant improvement in performance while reducing the parameters of the original VM-UNet. The code for this study is publicly available at https://github.com/PixDeep/MHS-VM.
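The data flow described in this abstract (split the embedding into subspaces, scan each subspace along its own route, then reassemble) can be sketched in a few lines of plain Python. This is a toy under loud assumptions, not the MHS-VM implementation: each channel stands in for one projected subspace, a running exponential average replaces Mamba's selective scan, the four routes are illustrative choices, and the learned projections and Scan Route Attention are omitted. All function names are hypothetical.

```python
def scan_routes(height, width):
    """Return four orderings of the pixel coordinates of a height x width grid."""
    row_major = [(r, c) for r in range(height) for c in range(width)]
    col_major = [(r, c) for c in range(width) for r in range(height)]
    return [row_major, col_major, row_major[::-1], col_major[::-1]]


def toy_scan(sequence, decay=0.5):
    """Stand-in for a selective scan: a running exponential average (assumption)."""
    state, out = 0.0, []
    for x in sequence:
        state = decay * state + (1.0 - decay) * x
        out.append(state)
    return out


def multi_head_scan(feature, decay=0.5):
    """feature[r][c] is a list of channels; channel k plays the role of head k."""
    height, width = len(feature), len(feature[0])
    heads = len(feature[0][0])
    routes = scan_routes(height, width)
    out = [[[0.0] * heads for _ in range(width)] for _ in range(height)]
    for k in range(heads):
        route = routes[k % len(routes)]  # each head follows its own route
        scanned = toy_scan([feature[r][c][k] for r, c in route], decay)
        for (r, c), value in zip(route, scanned):
            out[r][c][k] = value  # scatter back to the 2D layout
    return out


# A single impulse at the top-left pixel, copied into two heads.
feature = [
    [[1.0, 1.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]
result = multi_head_scan(feature)
# Head 0 (row-major) carries the impulse to the right neighbor first,
# head 1 (column-major) carries it to the neighbor below first.
```

In this toy, the same input produces different responses per head purely because of the scan order, which is the property the multi-route design relies on.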

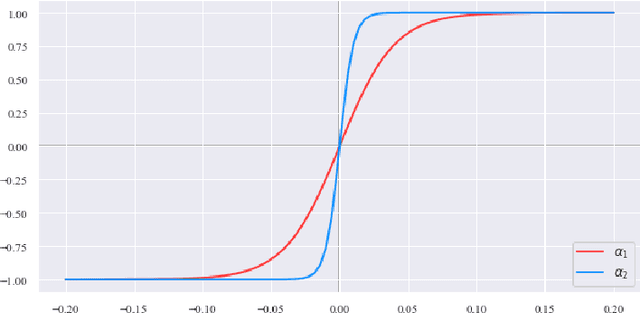

Photo2Relief: Let Human in the Photograph Stand Out

Jul 21, 2023

Abstract: In this paper, we propose a technique for making humans in photographs protrude like reliefs. Unlike previous methods, which mostly focus on the face and head, our method aims to generate artworks that depict the whole-body activity of the character. One challenge is that there is no ground truth for supervised deep learning. We introduce a sigmoid variant function to manipulate gradients tactfully and train our neural networks with a loss function defined in the gradient domain. The second challenge is that actual photographs often span different lighting conditions. We use an image-based rendering technique to address this challenge and acquire rendered images and depth data under different lighting conditions. To make a clear division of labor among network modules, a two-scale architecture is proposed to create high-quality reliefs from a single photograph. Extensive experimental results on a variety of scenes show that our method is a highly effective solution for generating digital 2.5D artwork from photographs.

BikNN: Anomaly Estimation in Bilateral Domains with k-Nearest Neighbors

May 11, 2021

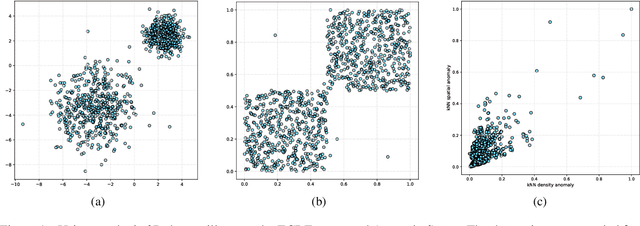

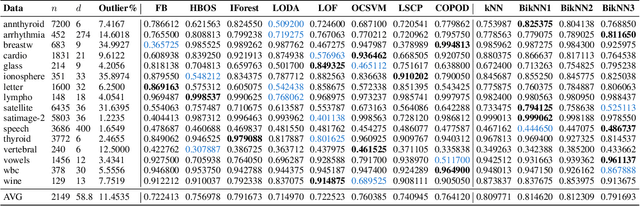

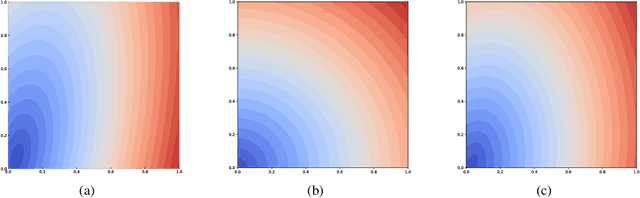

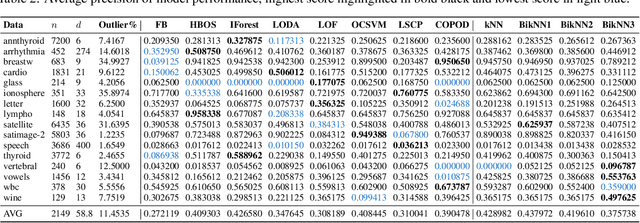

Abstract: In this paper, a novel framework for anomaly estimation is proposed. The basic idea behind our method is to reduce the data into a two-dimensional space and then rank each data point in the reduced space. We attempt to estimate the degree of anomaly in both the spatial and density domains. Specifically, we transform the data points into a density space and measure the distances in the density domain between each point and its k-Nearest Neighbors in the spatial domain. Then, an anomaly coordinate system is built by collecting two unilateral anomalies from the k-nearest neighbors of each point. Furthermore, we introduce two schemes to model their correlation and combine them to get the final anomaly score. Experiments performed on synthetic and real-world datasets demonstrate that the proposed method performs well and achieves the highest average performance. We also show that the proposed method can provide visualization and classification of the anomalies in a simple manner. Due to the complexity of anomalies, none of the existing methods performs best on all benchmark datasets. Our method takes into account both the spatial domain and the density domain and can be adapted to different datasets by adjusting a few parameters manually.
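To make the two-domain idea concrete, here is a minimal plain-Python sketch that assigns each point a pair of anomaly coordinates: one from the spatial domain and one from the density domain. It is an illustration under stated assumptions, not the BikNN algorithm. The spatial coordinate is taken to be the mean distance to the k nearest neighbors, density is assumed to be the reciprocal of that distance, and the density coordinate is the mean absolute density gap to the same neighbors. The paper's two schemes for combining the coordinates into a final score are not given in the abstract and are not reproduced here.

```python
import math


def knn_indices(points, k):
    """For each point, the indices of its k nearest other points (Euclidean)."""
    result = []
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]
        others.sort(key=lambda j: math.dist(p, points[j]))
        result.append(others[:k])
    return result


def bilateral_anomaly(points, k=2):
    """Return one (spatial, density) anomaly pair per point."""
    neighbors = knn_indices(points, k)
    # Spatial coordinate: mean distance to the k nearest neighbors.
    spatial = [
        sum(math.dist(points[i], points[j]) for j in nb) / k
        for i, nb in enumerate(neighbors)
    ]
    # Assumed density estimate: reciprocal of the mean kNN distance.
    density = [1.0 / (s + 1e-12) for s in spatial]
    # Density coordinate: mean density gap to the spatial neighbors.
    density_gap = [
        sum(abs(density[i] - density[j]) for j in nb) / k
        for i, nb in enumerate(neighbors)
    ]
    return list(zip(spatial, density_gap))


# Four clustered points and one distant point (index 4).
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (10.0, 10.0)]
coords = bilateral_anomaly(points, k=2)
most_spatial = max(range(len(points)), key=lambda i: coords[i][0])
most_density = max(range(len(points)), key=lambda i: coords[i][1])
```

Plotting the pairs in `coords` gives a two-dimensional view in which the distant point sits far from the cluster on both axes, which is the kind of visualization the abstract alludes to.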

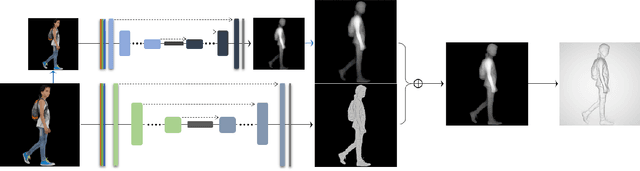

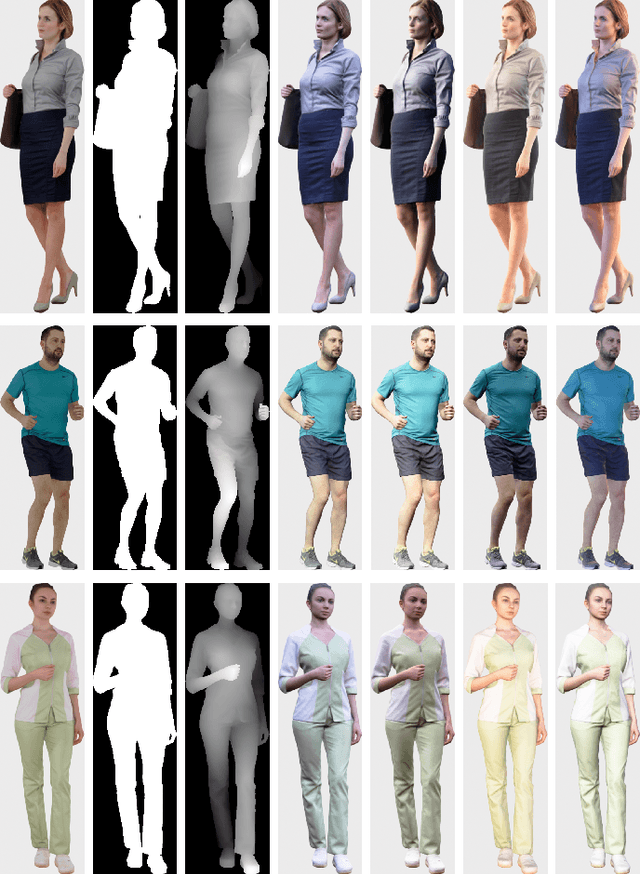

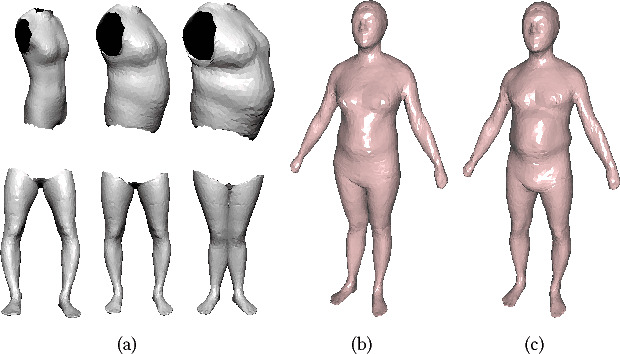

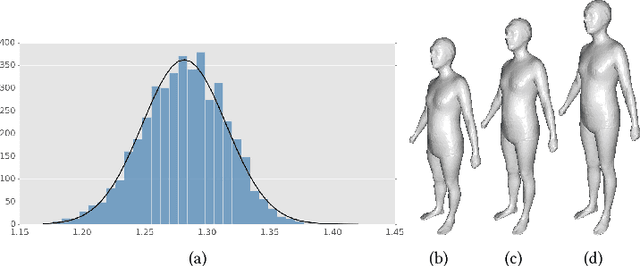

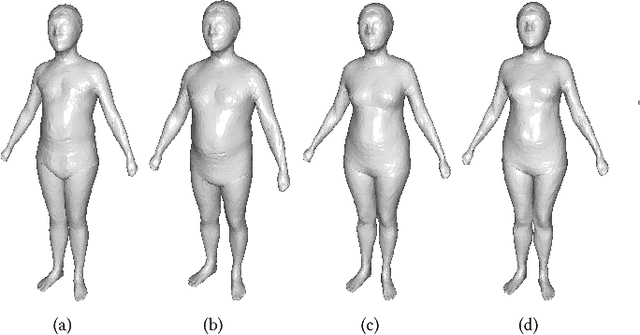

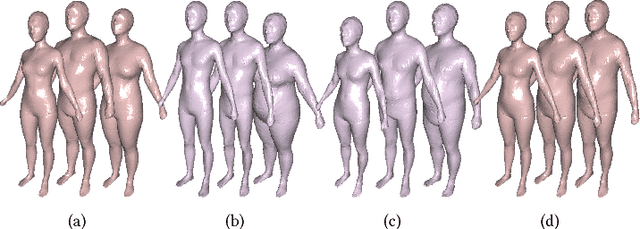

Shape-from-Mask: A Deep Learning Based Human Body Shape Reconstruction from Binary Mask Images

Jun 22, 2018

Abstract: 3D content creation is regarded as one of the most fundamental tasks of computer graphics. Many algorithms for 3D modeling from 2D images or curves have been developed over the past several decades. Designers can align conceptual images or sketch suggestive curves from front, side, and top views, and then use them as references in constructing a 3D model automatically or manually. However, to the best of our knowledge, no studies have investigated 3D human body reconstruction in a similar manner. In this paper, we propose a deep learning based reconstruction of 3D human body shape from 2D orthographic views. A novel CNN-based regression network, with two branches corresponding to frontal and lateral views respectively, is designed for estimating 3D human body shape from 2D mask images. We train our networks separately to decouple the feature descriptors which encode the body parameters from different views, and fuse them to estimate an accurate human body shape. In addition, to overcome the shortage of training data required for this purpose, we propose several data augmentation schemes for 3D human body shapes, which can be used to promote further research on this topic. Extensive experimental results demonstrate that visually realistic and accurate reconstructions can be achieved effectively using our algorithm. Requiring only binary mask images, our method can help users create their own digital avatars quickly, and also makes it easy to create digital human bodies for 3D games, virtual reality, and online fashion shopping.
