Zhongheng Li

Encoding Agent Trajectories as Representations with Sequence Transformers

Oct 11, 2024Abstract:Spatiotemporal data faces many analogous challenges to natural language text including the ordering of locations (words) in a sequence, long range dependencies between locations, and locations having multiple meanings. In this work, we propose a novel model for representing high dimensional spatiotemporal trajectories as sequences of discrete locations and encoding them with a Transformer-based neural network architecture. Similar to language models, our Sequence Transformer for Agent Representation Encodings (STARE) model can learn representations and structure in trajectory data through both supervisory tasks (e.g., classification), and self-supervisory tasks (e.g., masked modelling). We present experimental results on various synthetic and real trajectory datasets and show that our proposed model can learn meaningful encodings that are useful for many downstream tasks including discriminating between labels and indicating similarity between locations. Using these encodings, we also learn relationships between agents and locations present in spatiotemporal data.

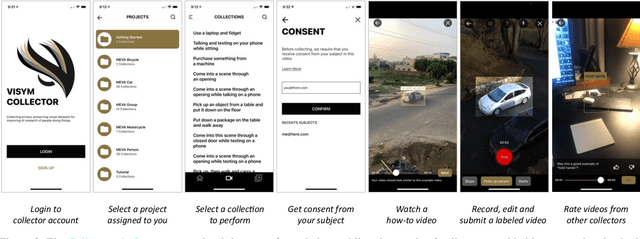

Fine-grained Activities of People Worldwide

Jul 11, 2022

Abstract:Every day, humans perform many closely related activities that involve subtle discriminative motions, such as putting on a shirt vs. putting on a jacket, or shaking hands vs. giving a high five. Activity recognition by ethical visual AI could provide insights into our patterns of daily life, however existing activity recognition datasets do not capture the massive diversity of these human activities around the world. To address this limitation, we introduce Collector, a free mobile app to record video while simultaneously annotating objects and activities of consented subjects. This new data collection platform was used to curate the Consented Activities of People (CAP) dataset, the first large-scale, fine-grained activity dataset of people worldwide. The CAP dataset contains 1.45M video clips of 512 fine grained activity labels of daily life, collected by 780 subjects in 33 countries. We provide activity classification and activity detection benchmarks for this dataset, and analyze baseline results to gain insight into how people around with world perform common activities. The dataset, benchmarks, evaluation tools, public leaderboards and mobile apps are available for use at visym.github.io/cap.

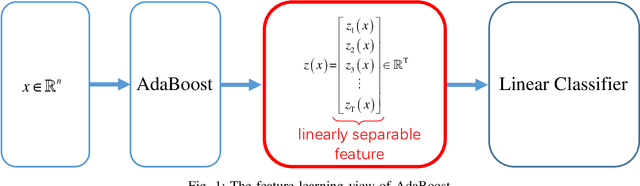

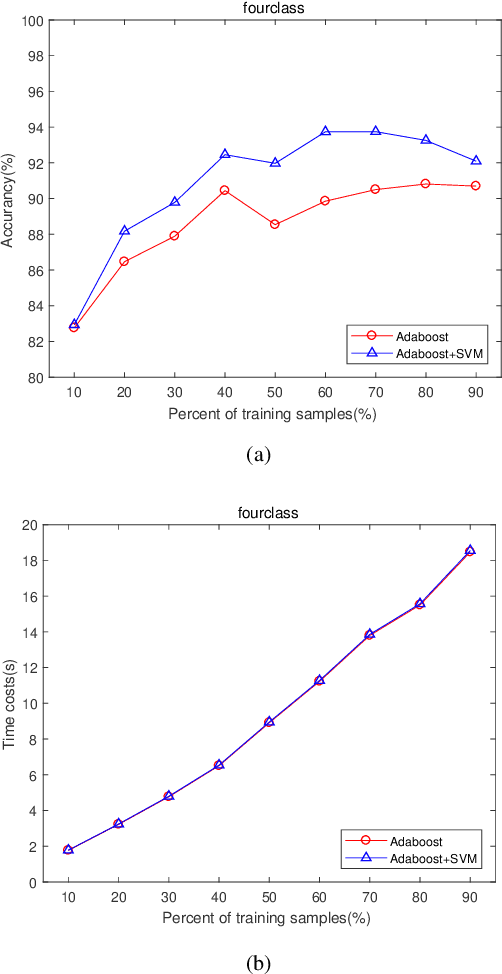

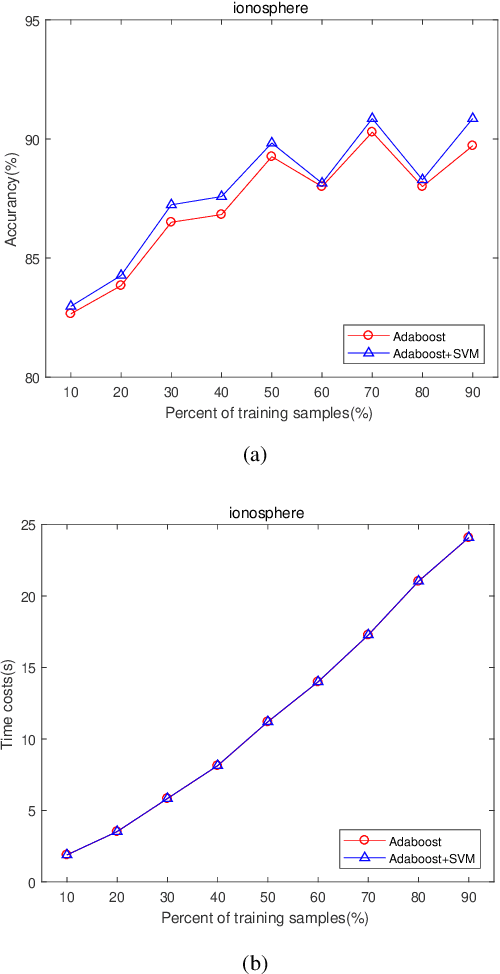

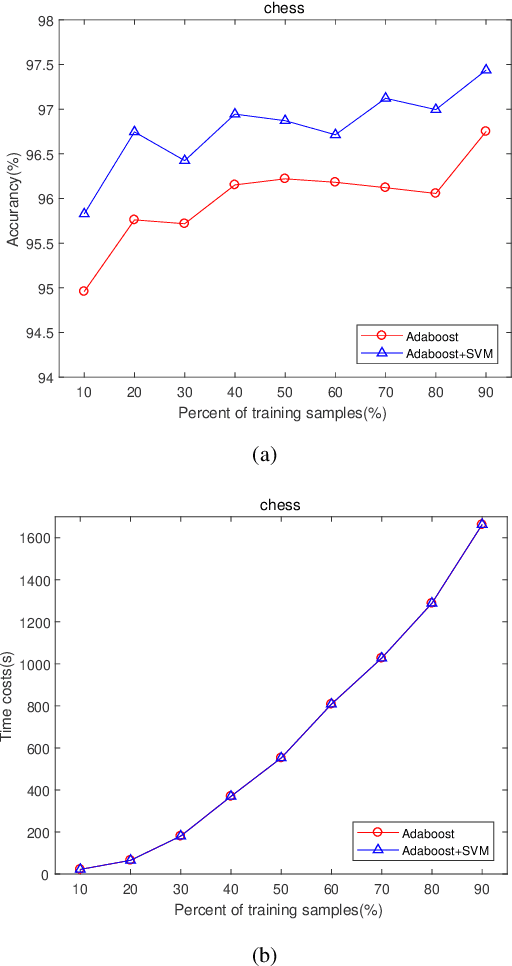

Feature Learning Viewpoint of AdaBoost and a New Algorithm

Apr 08, 2019

Abstract:The AdaBoost algorithm has the superiority of resisting overfitting. Understanding the mysteries of this phenomena is a very fascinating fundamental theoretical problem. Many studies are devoted to explaining it from statistical view and margin theory. In this paper, we illustrate it from feature learning viewpoint, and propose the AdaBoost+SVM algorithm, which can explain the resistant to overfitting of AdaBoost directly and easily to understand. Firstly, we adopt the AdaBoost algorithm to learn the base classifiers. Then, instead of directly weighted combination the base classifiers, we regard them as features and input them to SVM classifier. With this, the new coefficient and bias can be obtained, which can be used to construct the final classifier. We explain the rationality of this and illustrate the theorem that when the dimension of these features increases, the performance of SVM would not be worse, which can explain the resistant to overfitting of AdaBoost.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge