Zhimin Wang

Learning with Challenges: Adaptive Difficulty-Aware Data Generation for Mobile GUI Agent Training

Jan 30, 2026

Abstract:Large-scale, high-quality interaction trajectories are essential for advancing mobile Graphical User Interface (GUI) agents. While existing methods typically rely on labor-intensive human demonstrations or automated model exploration to generate GUI trajectories, they lack fine-grained control over task difficulty. This fundamentally restricts learning effectiveness due to the mismatch between the training difficulty and the agent's capabilities. Inspired by how humans acquire skills through progressively challenging tasks, we propose MobileGen, a novel data generation framework that adaptively aligns training difficulty with the GUI agent's capability frontier. Specifically, MobileGen explicitly decouples task difficulty into structural (e.g., trajectory length) and semantic (e.g., task goal) dimensions. It then iteratively evaluates the agent on a curated prior dataset to construct a systematic profile of its capability frontier across these two dimensions. With this profile, the probability distribution of task difficulty is adaptively computed, from which the target difficulty for the next round of training can be sampled. Guided by the sampled difficulty, a multi-agent controllable generator is finally used to synthesize high-quality interaction trajectories along with corresponding task instructions. Extensive experiments show that MobileGen consistently outperforms existing data generation methods by improving the average performance of GUI agents by 1.57 times across multiple challenging benchmarks. This highlights the importance of capability-aligned data generation for effective mobile GUI agent training.

CoBel-World: Harnessing LLM Reasoning to Build a Collaborative Belief World for Optimizing Embodied Multi-Agent Collaboration

Sep 26, 2025

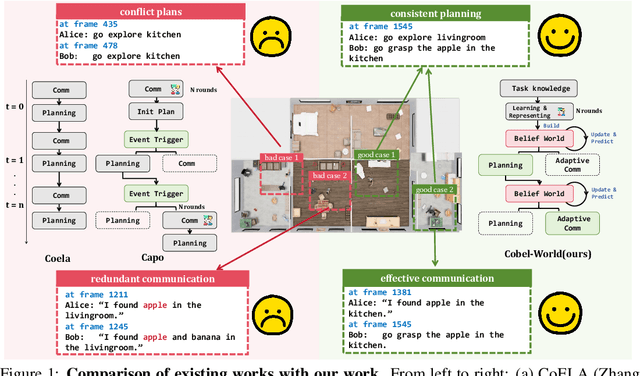

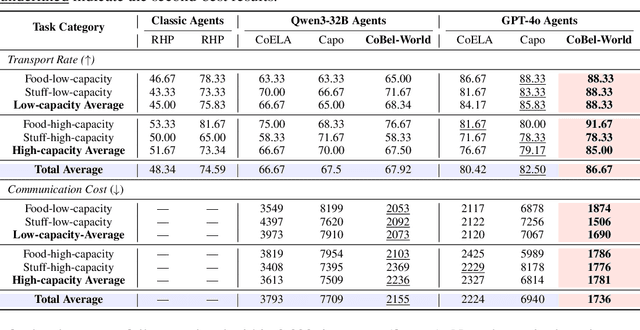

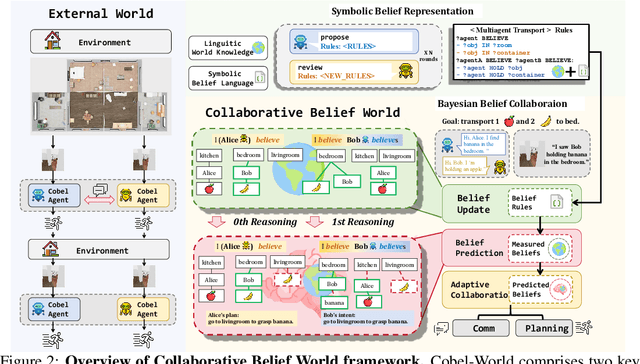

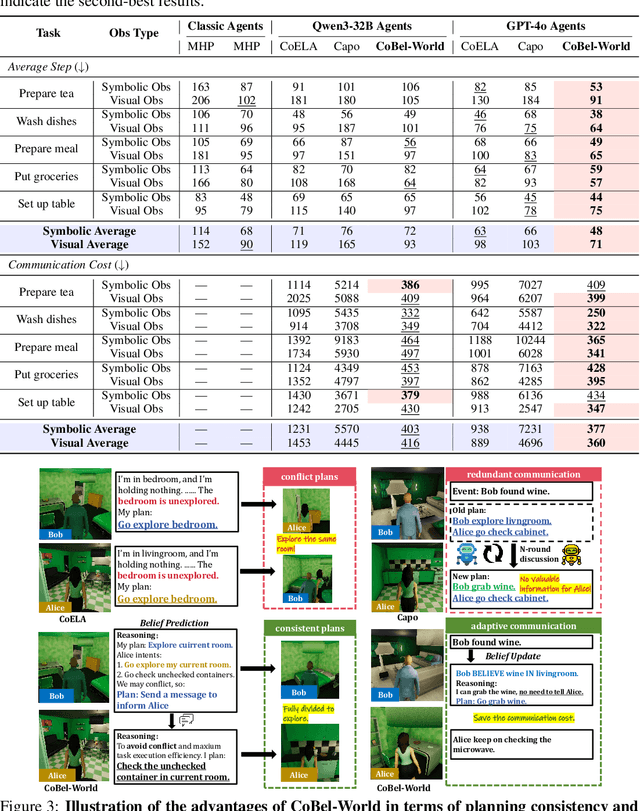

Abstract:Effective real-world multi-agent collaboration requires not only accurate planning but also the ability to reason about collaborators' intents -- a crucial capability for avoiding miscoordination and redundant communication in partially observable environments. Due to their strong planning and reasoning capabilities, large language models (LLMs) have emerged as promising autonomous agents for collaborative task solving. However, existing collaboration frameworks for LLMs overlook their reasoning potential for dynamic intent inference, and thus produce inconsistent plans and redundant communication, reducing collaboration efficiency. To bridge this gap, we propose CoBel-World, a novel framework that equips LLM agents with a collaborative belief world -- an internal representation jointly modeling the physical environment and collaborators' mental states. CoBel-World enables agents to parse open-world task knowledge into structured beliefs via a symbolic belief language, and perform zero-shot Bayesian-style belief updates through LLM reasoning. This allows agents to proactively detect potential miscoordination (e.g., conflicting plans) and communicate adaptively. Evaluated on challenging embodied benchmarks (i.e., TDW-MAT and C-WAH), CoBel-World significantly reduces communication costs by 22-60% and improves task completion efficiency by 4-28% compared to the strongest baseline. Our results show that explicit, intent-aware belief modeling is essential for efficient and human-like collaboration in LLM-based multi-agent systems.

Readability-guided Idiom-aware Sentence Simplification (RISS) for Chinese

Jun 05, 2024

Abstract:Chinese sentence simplification faces challenges due to the lack of large-scale labeled parallel corpora and the prevalence of idioms. To address these challenges, we propose Readability-guided Idiom-aware Sentence Simplification (RISS), a novel framework that combines data augmentation techniques with lexical simplification. RISS introduces two key components: (1) Readability-guided Paraphrase Selection (RPS), a method for mining high-quality sentence pairs, and (2) Idiom-aware Simplification (IAS), a model that enhances the comprehension and simplification of idiomatic expressions. By integrating RPS and IAS using multi-stage and multi-task learning strategies, RISS outperforms previous state-of-the-art methods on two Chinese sentence simplification datasets. Furthermore, RISS achieves additional improvements when fine-tuned on a small labeled dataset. Our approach demonstrates the potential for more effective and accessible Chinese text simplification.

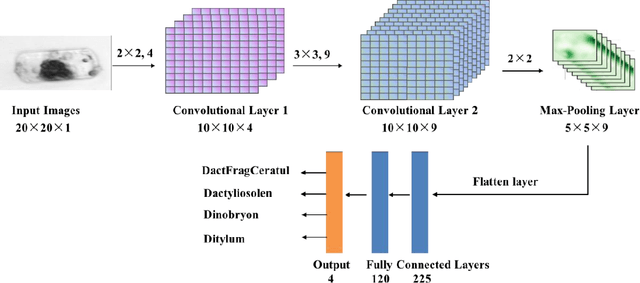

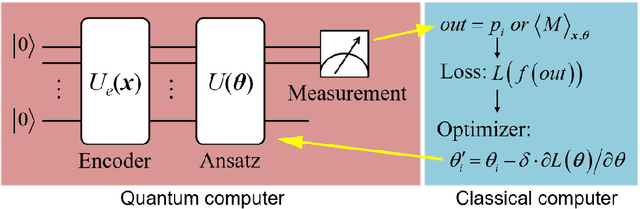

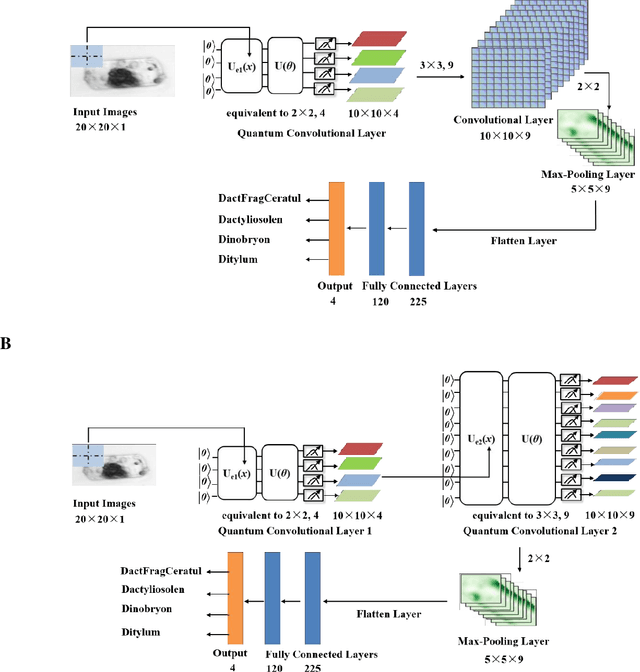

Hybrid quantum-classical convolutional neural network for phytoplankton classification

Mar 07, 2023

Abstract:The taxonomic composition and abundance of phytoplankton, which have a direct impact on marine ecosystem dynamics and global environmental change, are listed as essential ocean variables. Phytoplankton classification is crucial for phytoplankton analysis, but it is very difficult because of the huge number and tiny size of phytoplankton. Machine learning is the principal way of performing phytoplankton image classification automatically. When carrying out large-scale research on marine phytoplankton, the volume of data increases overwhelmingly, and more powerful computational resources are required for machine learning algorithms to succeed. Recently, quantum machine learning has emerged as a potential solution for large-scale data processing by harnessing the exponential computational power of quantum computers. Here, for the first time, we demonstrate the feasibility of quantum deep neural networks for phytoplankton classification. Hybrid quantum-classical convolutional and residual neural networks are developed based on the classical architectures. These models strike a proper balance between the limited capability of current quantum devices and the large size of phytoplankton images, which makes it possible to perform phytoplankton classification on near-term quantum computers. The quantum-enhanced models achieve better performance than their classical counterparts. In particular, the quantum models converge much faster than the classical ones. The present quantum models are versatile and can be applied to various image classification tasks in the field of marine science.

Quantum Recurrent Neural Networks for Sequential Learning

Feb 07, 2023

Abstract:The quantum neural network (QNN) is one of the promising directions in which near-term noisy intermediate-scale quantum (NISQ) devices could find advantageous applications over classical resources. Recurrent neural networks are the most fundamental networks for sequential learning, but there is still no canonical model of a quantum recurrent neural network (QRNN), which restricts research in the field of quantum deep learning. In the present work, we propose a new kind of QRNN that would be a good candidate for the canonical QRNN model: the quantum recurrent blocks (QRBs) are constructed in a hardware-efficient way, and the QRNN is built by stacking the QRBs in a staggered way that can greatly reduce the algorithm's requirement on the coherence time of quantum devices. That is, our QRNN is much more accessible on NISQ devices. Furthermore, the performance of the present QRNN model is verified concretely using three different kinds of classical sequential data, i.e., meteorological indicators, stock prices, and text categorization. The numerical experiments show that our QRNN achieves much better prediction (classification) accuracy than the classical RNN and state-of-the-art QNN models for sequential learning, and can predict the changing details of temporal sequence data. The practical circuit structure and superior performance indicate that the present QRNN is a promising learning model for finding quantum-advantageous applications in the near term.

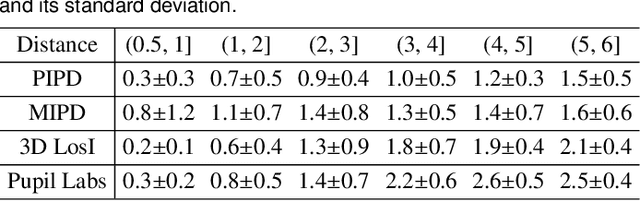

Gaze-Vergence-Controlled See-Through Vision in Augmented Reality

Jul 06, 2022

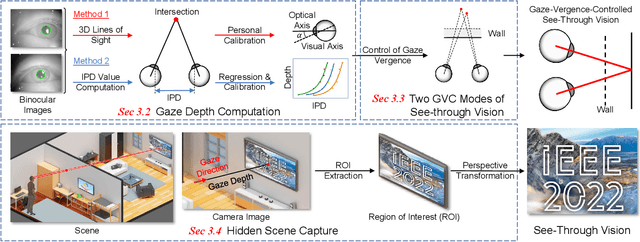

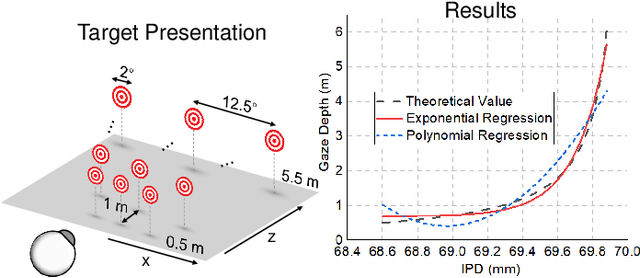

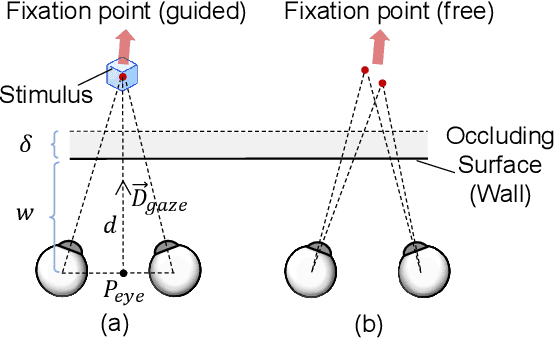

Abstract:Augmented Reality (AR) see-through vision is an interesting research topic since it enables users to see through a wall and see the occluded objects. Most existing research focuses on the visual effects of see-through vision, while the interaction method is less studied. However, we argue that using common interaction modalities, e.g., midair click and speech, may not be the optimal way to control see-through vision. This is because when we want to see through something, the act is physically related to our gaze depth/vergence and thus should be naturally controlled by the eyes. Following this idea, this paper proposes a novel gaze-vergence-controlled (GVC) see-through vision technique in AR. Since gaze depth is needed, we build a gaze tracking module with two infrared cameras and the corresponding algorithm and assemble it into the Microsoft HoloLens 2 to achieve gaze depth estimation. We then propose two different GVC modes for see-through vision to fit different scenarios. Extensive experimental results demonstrate that our gaze depth estimation is efficient and accurate. Compared with conventional interaction modalities, our GVC techniques are also shown to be more efficient and preferred by users. Finally, we present four example applications of gaze-vergence-controlled see-through vision.
