Wenhe Feng

Automated Parsing of Engineering Drawings for Structured Information Extraction Using a Fine-tuned Document Understanding Transformer

May 02, 2025

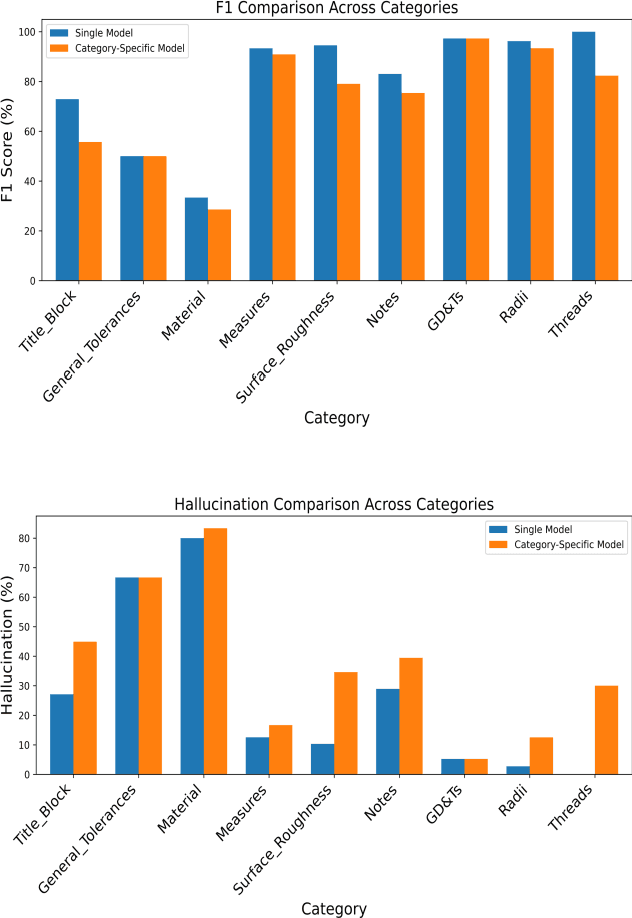

Abstract: Accurate extraction of key information from 2D engineering drawings is crucial for high-precision manufacturing. Manual extraction is time-consuming and error-prone, while traditional Optical Character Recognition (OCR) techniques often struggle with complex layouts and overlapping symbols, resulting in unstructured outputs. To address these challenges, this paper proposes a novel hybrid deep learning framework for structured information extraction by integrating an oriented bounding box (OBB) detection model with a transformer-based document parsing model (Donut). An in-house annotated dataset is used to train YOLOv11 for detecting nine key categories: Geometric Dimensioning and Tolerancing (GD&T), General Tolerances, Measures, Materials, Notes, Radii, Surface Roughness, Threads, and Title Blocks. Detected OBBs are cropped into images and labeled to fine-tune Donut for structured JSON output. Fine-tuning strategies include a single model trained across all categories and category-specific models. Results show that the single model consistently outperforms category-specific ones across all evaluation metrics, achieving higher precision (94.77% for GD&T), recall (100% for most), and F1 score (97.3%), while reducing hallucination (5.23%). The proposed framework improves accuracy, reduces manual effort, and supports scalable deployment in precision-driven industries.
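The abstract above reports precision, recall, F1 score, and a hallucination rate, but does not define them. The following is a minimal sketch of how such metrics could be computed for extracted items, assuming that predictions and ground truth are compared as multisets of exact strings and that hallucination is the fraction of predicted items with no ground-truth match (an assumption that is consistent with the reported 94.77% precision and 5.23% hallucination summing to 100%). The function name `extraction_metrics` and the sample strings are hypothetical.

```python
from collections import Counter


def extraction_metrics(predicted, ground_truth):
    """Score extracted items against annotations, treating both as multisets."""
    pred, truth = Counter(predicted), Counter(ground_truth)
    # Multiset intersection: each predicted item matches at most one annotation.
    true_pos = sum((pred & truth).values())
    n_pred, n_truth = sum(pred.values()), sum(truth.values())

    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_truth if n_truth else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    # Assumed definition: share of predictions that match nothing annotated.
    hallucination = (n_pred - true_pos) / n_pred if n_pred else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "hallucination": hallucination,
    }


# Hypothetical example: three correct items and one spurious prediction.
scores = extraction_metrics(
    predicted=["R5", "M8x1.25", "Ra 3.2", "R7"],
    ground_truth=["R5", "M8x1.25", "Ra 3.2"],
)
print(scores)
```

Under this definition, hallucination is simply one minus precision; a paper may use a different matching rule (for example, field-level or fuzzy matching), in which case the counts would change accordingly.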

Fine-Tuning Vision-Language Model for Automated Engineering Drawing Information Extraction

Nov 06, 2024

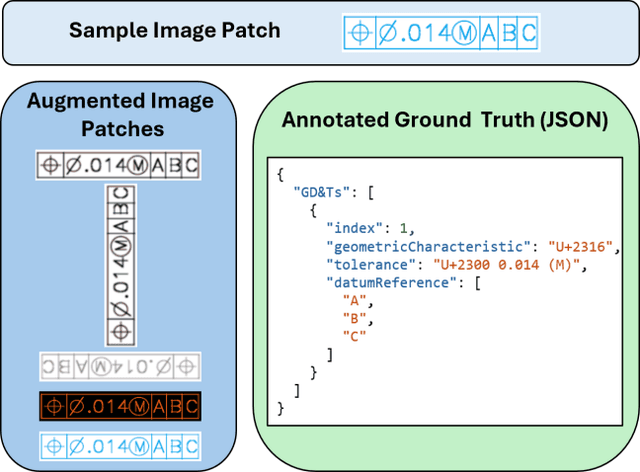

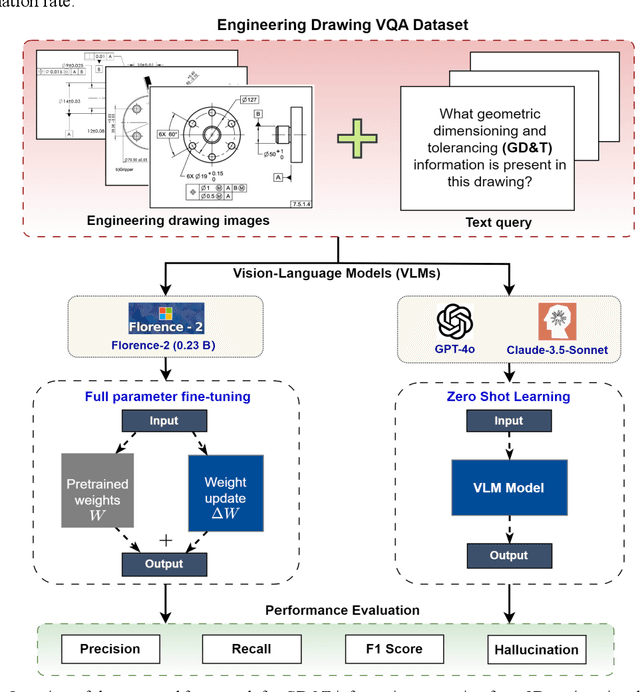

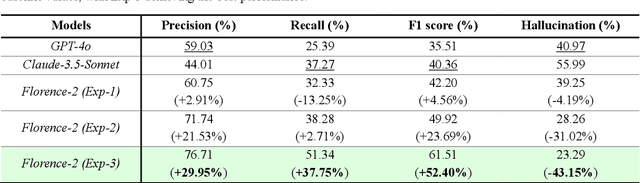

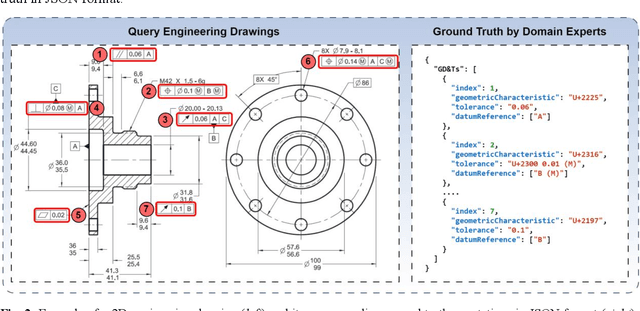

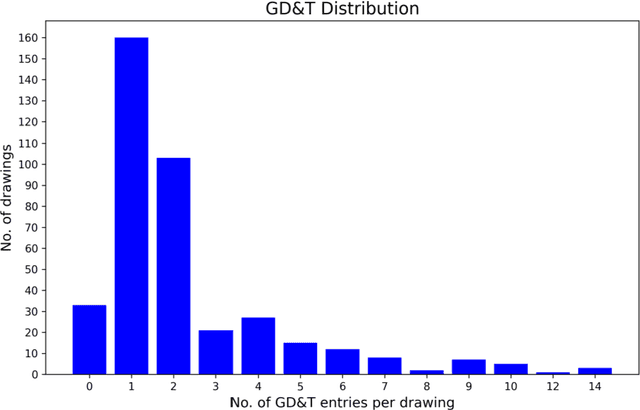

Abstract: Geometric Dimensioning and Tolerancing (GD&T) plays a critical role in manufacturing by defining acceptable variations in part features to ensure component quality and functionality. However, extracting GD&T information from 2D engineering drawings is a time-consuming and labor-intensive task, often relying on manual efforts or semi-automated tools. To address these challenges, this study proposes an automated and computationally efficient GD&T extraction method by fine-tuning Florence-2, an open-source vision-language model (VLM). The model is trained on a dataset of 400 drawings with ground truth annotations provided by domain experts. For comparison, two state-of-the-art closed-source VLMs, GPT-4o and Claude-3.5-Sonnet, are evaluated on the same dataset. All models are assessed using precision, recall, F1-score, and hallucination metrics. Due to the computational cost and impracticality of fine-tuning large closed-source VLMs for domain-specific tasks, GPT-4o and Claude-3.5-Sonnet are evaluated in a zero-shot setting. In contrast, Florence-2, a smaller model with 0.23 billion parameters, is optimized through full-parameter fine-tuning across three distinct experiments, each utilizing datasets augmented to different levels. The results show that Florence-2 achieves a 29.95% increase in precision, a 37.75% increase in recall, a 52.40% improvement in F1-score, and a 43.15% reduction in hallucination rate compared to the best-performing closed-source model. These findings highlight the effectiveness of fine-tuning smaller, open-source VLMs like Florence-2, offering a practical and efficient solution for automated GD&T extraction to support downstream manufacturing tasks.

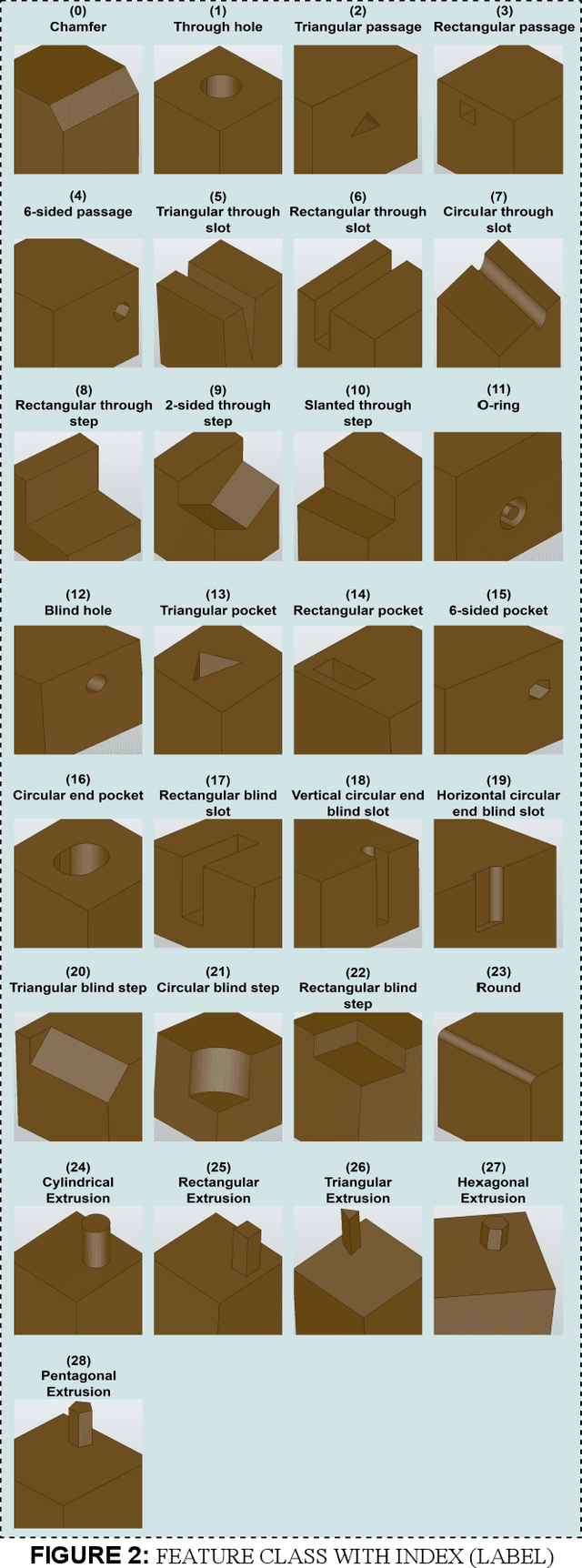

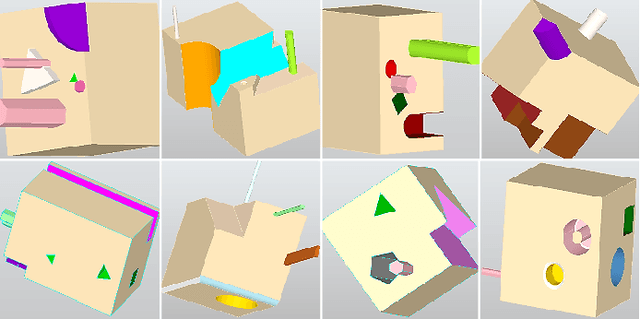

Automatic Feature Recognition and Dimensional Attributes Extraction From CAD Models for Hybrid Additive-Subtractive Manufacturing

Aug 14, 2024

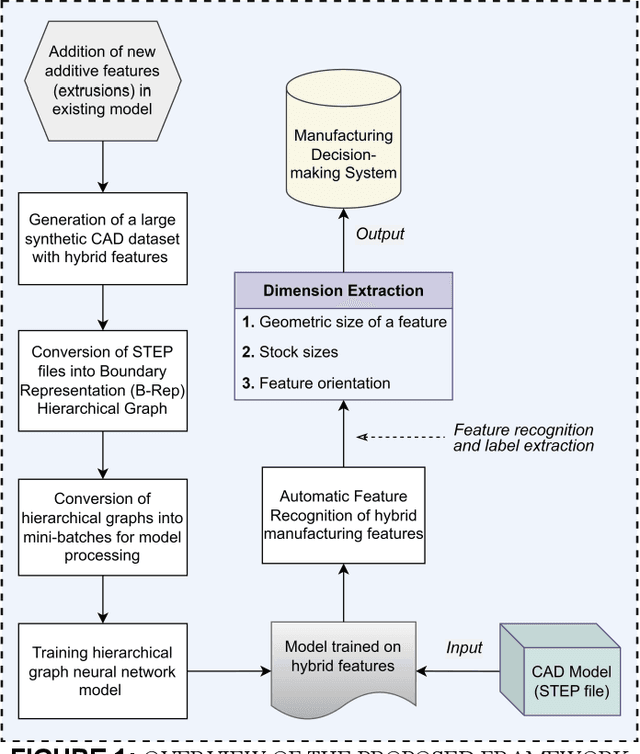

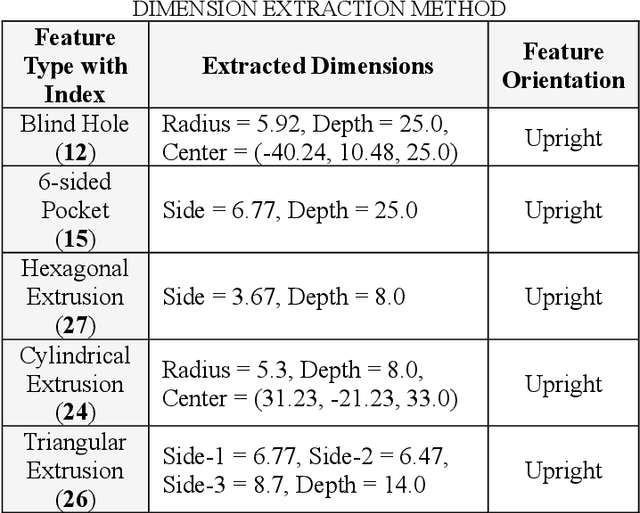

Abstract: The integration of Computer-Aided Design (CAD), Computer-Aided Process Planning (CAPP), and Computer-Aided Manufacturing (CAM) plays a crucial role in modern manufacturing, facilitating seamless transitions from digital designs to physical products. However, a significant challenge within this integration is the Automatic Feature Recognition (AFR) of CAD models, especially in the context of hybrid manufacturing that combines subtractive and additive manufacturing processes. Traditional AFR methods, focused mainly on the identification of subtractive (machined) features including holes, fillets, chamfers, pockets, and slots, fail to recognize features pertinent to additive manufacturing. Furthermore, the traditional methods fall short in accurately extracting geometric dimensions and orientations, which are also key factors for effective manufacturing process planning. This paper presents a novel approach for creating a synthetic CAD dataset that encompasses features relevant to both additive and subtractive machining through Python Open Cascade. The Hierarchical Graph Convolutional Neural Network (HGCNN) model is implemented to accurately identify the composite additive-subtractive features within the synthetic CAD dataset. The key novelty and contribution of the proposed methodology lie in its ability to recognize a wide range of manufacturing features and to precisely extract their dimensions, orientations, and stock sizes. The proposed model demonstrates remarkable feature recognition accuracy exceeding 97% and a dimension extraction accuracy of 100% for identified features. Therefore, the proposed methodology enhances the integration of CAD, CAPP, and CAM within hybrid manufacturing by providing precise feature recognition and dimension extraction. It facilitates improved manufacturing process planning by enabling more informed decision-making.

Cross-Lingual Word Alignment for ASEAN Languages with Contrastive Learning

Jul 06, 2024

Abstract: Cross-lingual word alignment plays a crucial role in various natural language processing tasks, particularly for low-resource languages. A recent study proposes a BiLSTM-based encoder-decoder model that outperforms pre-trained language models in low-resource settings. However, that model only considers the similarity of word embedding spaces and does not explicitly model the differences between word embeddings. To address this limitation, we propose incorporating contrastive learning into the BiLSTM-based encoder-decoder framework. Our approach introduces a multi-view negative sampling strategy to learn the differences between word pairs in the shared cross-lingual embedding space. We evaluate our model on five bilingual aligned datasets spanning four ASEAN languages: Lao, Vietnamese, Thai, and Indonesian. Experimental results demonstrate that integrating contrastive learning consistently improves word alignment accuracy across all datasets, confirming the effectiveness of the proposed method in low-resource scenarios. We will release our dataset and code to support future research on word alignment for ASEAN and other low-resource languages.
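To illustrate the general idea of contrastive learning with negative samples mentioned in the abstract above, here is a minimal sketch of a standard InfoNCE-style loss over word embeddings. It is not the paper's multi-view negative sampling strategy, whose details are not given here; the function names, the temperature value, and the toy 2-D vectors are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """Negative log-probability of picking the positive among all candidates."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over the positive and the negatives.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum - logits[0]


# Toy source-word embedding with one aligned and two unaligned target words.
source = [1.0, 0.0]
aligned = [0.9, 0.1]
unaligned = [[0.0, 1.0], [-1.0, 0.0]]

print(info_nce_loss(source, aligned, unaligned))
```

The loss is small when the aligned pair is more similar than every sampled negative and grows when a negative is closer to the anchor than the positive, which is what pushes non-matching word pairs apart in the shared embedding space.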

Readability-guided Idiom-aware Sentence Simplification (RISS) for Chinese

Jun 05, 2024

Abstract: Chinese sentence simplification faces challenges due to the lack of large-scale labeled parallel corpora and the prevalence of idioms. To address these challenges, we propose Readability-guided Idiom-aware Sentence Simplification (RISS), a novel framework that combines data augmentation techniques with lexical simplification. RISS introduces two key components: (1) Readability-guided Paraphrase Selection (RPS), a method for mining high-quality sentence pairs, and (2) Idiom-aware Simplification (IAS), a model that enhances the comprehension and simplification of idiomatic expressions. By integrating RPS and IAS using multi-stage and multi-task learning strategies, RISS outperforms previous state-of-the-art methods on two Chinese sentence simplification datasets. Furthermore, RISS achieves additional improvements when fine-tuned on a small labeled dataset. Our approach demonstrates the potential for more effective and accessible Chinese text simplification.

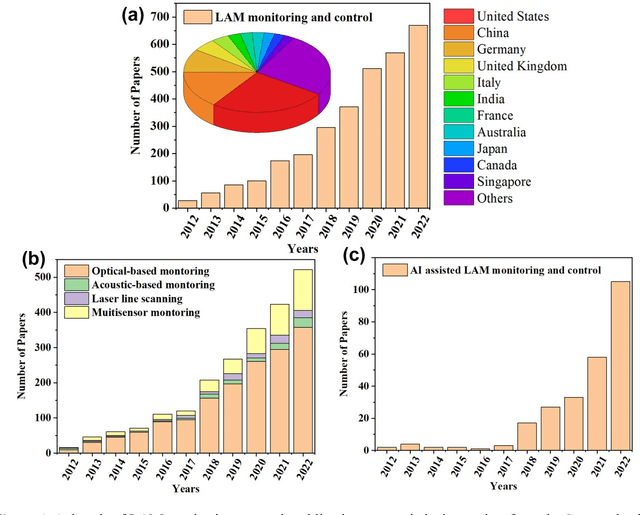

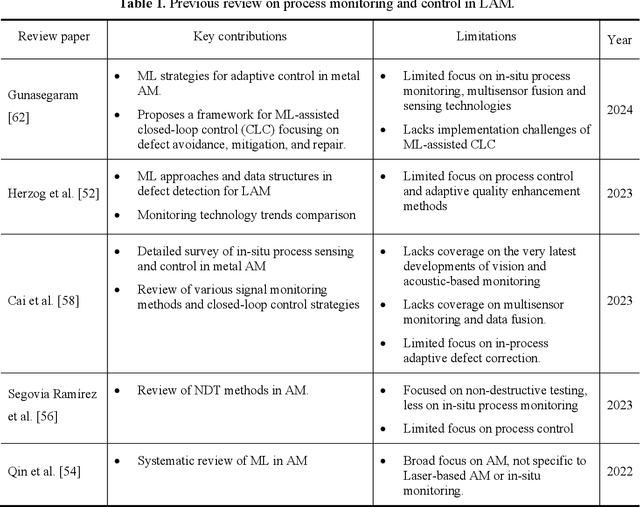

In-situ process monitoring and adaptive quality enhancement in laser additive manufacturing: a critical review

Apr 21, 2024

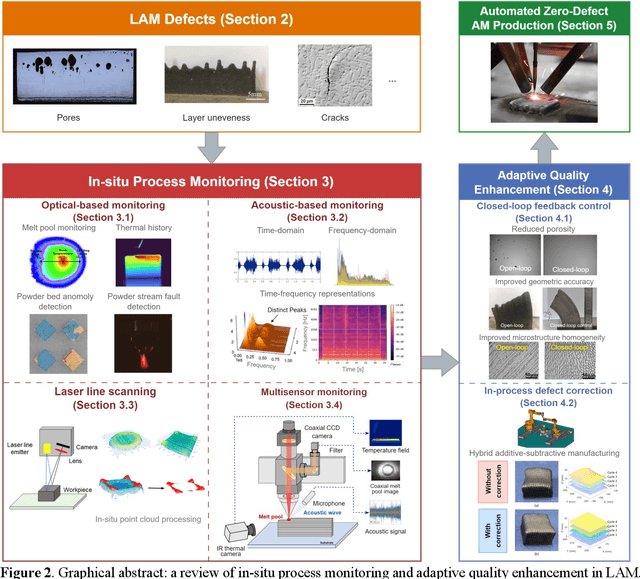

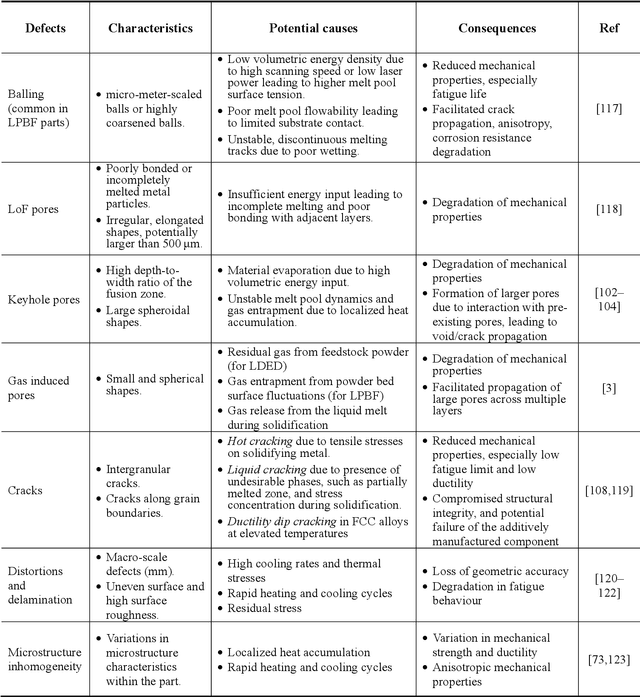

Abstract: Laser Additive Manufacturing (LAM) presents unparalleled opportunities for fabricating complex, high-performance structures and components with unique material properties. Despite these advancements, achieving consistent part quality and process repeatability remains challenging. This paper provides a comprehensive review of various state-of-the-art in-situ process monitoring techniques, including optical-based monitoring, acoustic-based sensing, laser line scanning, and operando X-ray monitoring. These techniques are evaluated for their capabilities and limitations in detecting defects within Laser Powder Bed Fusion (LPBF) and Laser Directed Energy Deposition (LDED) processes. Furthermore, the review discusses emerging multisensor monitoring and machine learning (ML)-assisted defect detection methods, benchmarking ML models tailored for in-situ defect detection. The paper also discusses in-situ adaptive defect remediation strategies that advance LAM towards zero-defect autonomous operations, focusing on real-time closed-loop feedback control and defect correction methods. Research gaps such as the need for standardization, improved reliability and sensitivity, and decision-making strategies beyond early stopping are highlighted. Future directions are proposed, with an emphasis on multimodal sensor fusion for multiscale defect prediction and fault diagnosis, ultimately enabling self-adaptation in LAM processes. This paper aims to equip researchers and industry professionals with a holistic understanding of the current capabilities, limitations, and future directions in in-situ process monitoring and adaptive quality enhancement in LAM.

Multimodal sensor fusion for real-time location-dependent defect detection in laser-directed energy deposition

May 23, 2023

Abstract: Real-time defect detection is crucial in laser-directed energy deposition (L-DED) additive manufacturing (AM). The traditional in-situ monitoring approach utilizes a single sensor (i.e., acoustic, visual, or thermal sensor) to capture the complex dynamic behavior of the process, which is insufficient for defect detection with high accuracy and robustness. This paper proposes a novel multimodal sensor fusion method for real-time location-dependent defect detection in the robotic L-DED process. The multimodal fusion sources include a microphone sensor capturing the laser-material interaction sound and a visible spectrum CCD camera capturing the coaxial melt pool images. A hybrid convolutional neural network (CNN) is proposed to fuse acoustic and visual data. The key novelty in this study is that the traditional manual feature extraction procedures are no longer required, and the raw melt pool images and acoustic signals are fused directly by the hybrid CNN model, which achieved the highest defect prediction accuracy (98.5%) without the thermal sensing modality. Moreover, unlike previous region-based quality prediction, the proposed hybrid CNN can detect the onset of defect occurrences. The defect prediction outcomes are synchronized and registered with in-situ acquired robot tool-center-point (TCP) data, which enables localized defect identification. The proposed multimodal sensor fusion method offers a robust solution for in-situ defect detection.
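The registration of defect predictions with robot TCP data described in the abstract above can be pictured as a timestamp lookup. The sketch below assumes that each prediction and each TCP sample carries a timestamp on a shared clock, and that a prediction is assigned the position of the nearest TCP sample in time; the paper's actual synchronization scheme is not specified here, and the names `nearest_tcp`, `localize_defects`, and the `"defect"` label are hypothetical.

```python
from bisect import bisect_left


def nearest_tcp(tcp_log, t):
    """Return the TCP position whose timestamp is closest to t.

    tcp_log is a non-empty list of (timestamp, (x, y, z)) sorted by timestamp.
    """
    times = [ts for ts, _ in tcp_log]
    i = bisect_left(times, t)
    # Only the samples just before and just after t can be the nearest.
    candidates = tcp_log[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda rec: abs(rec[0] - t))[1]


def localize_defects(predictions, tcp_log):
    """Map each prediction labeled 'defect' to a TCP position."""
    return [
        nearest_tcp(tcp_log, t)
        for t, label in predictions
        if label == "defect"
    ]


# Hypothetical logs: timestamps in seconds, positions in millimetres.
tcp_log = [
    (0.0, (0.0, 0.0, 0.0)),
    (0.1, (1.0, 0.0, 0.0)),
    (0.2, (2.0, 0.0, 0.0)),
]
predictions = [(0.01, "ok"), (0.12, "defect"), (0.19, "defect")]

print(localize_defects(predictions, tcp_log))
```

Nearest-sample lookup is the simplest choice; interpolating between the two neighboring TCP samples would give finer positions when the robot controller logs at a lower rate than the model produces predictions.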
