Xiling Yao

In-situ process monitoring and adaptive quality enhancement in laser additive manufacturing: a critical review

Apr 21, 2024

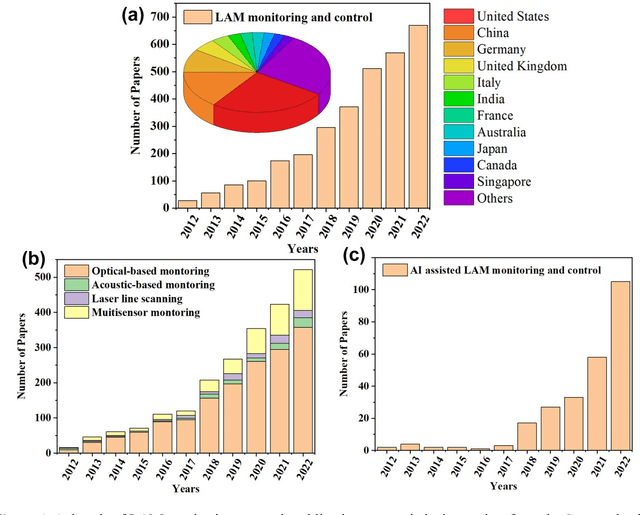

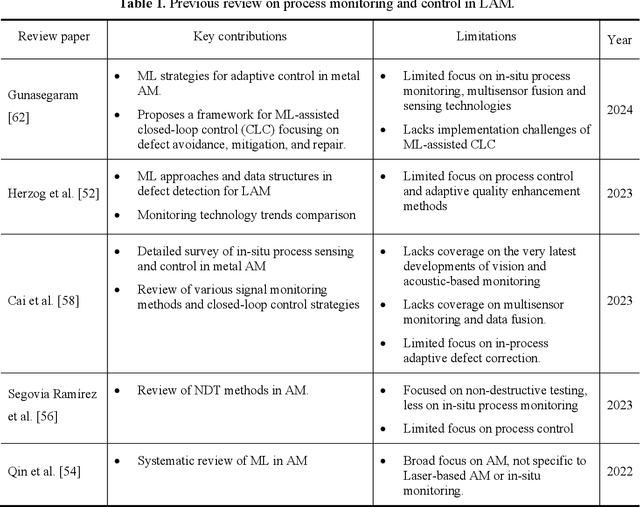

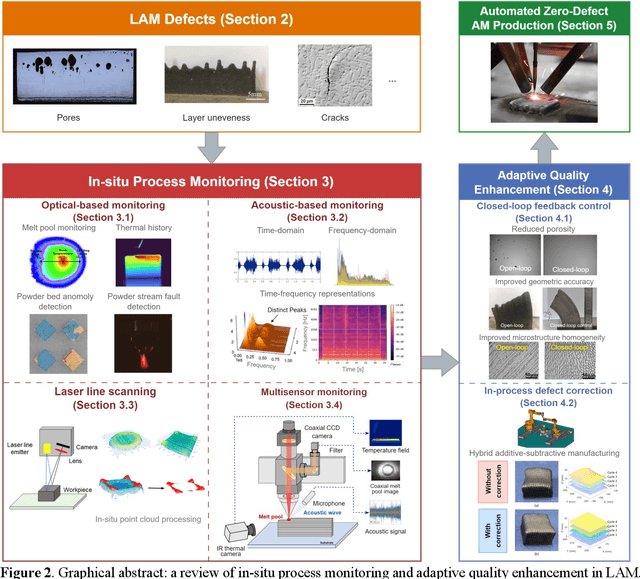

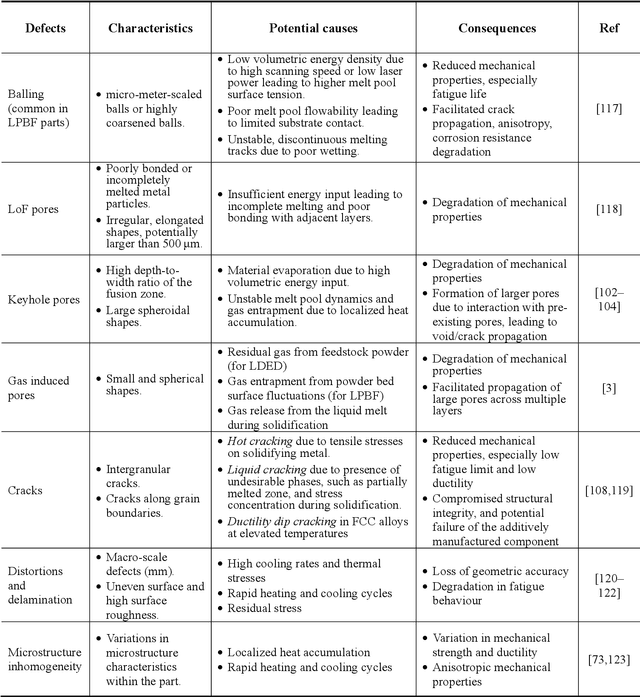

Abstract: Laser Additive Manufacturing (LAM) presents unparalleled opportunities for fabricating complex, high-performance structures and components with unique material properties. Despite these advancements, achieving consistent part quality and process repeatability remains challenging. This paper provides a comprehensive review of various state-of-the-art in-situ process monitoring techniques, including optical-based monitoring, acoustic-based sensing, laser line scanning, and operando X-ray monitoring. These techniques are evaluated for their capabilities and limitations in detecting defects within Laser Powder Bed Fusion (LPBF) and Laser Directed Energy Deposition (LDED) processes. Furthermore, the review discusses emerging multisensor monitoring and machine learning (ML)-assisted defect detection methods, benchmarking ML models tailored for in-situ defect detection. The paper also discusses in-situ adaptive defect remediation strategies that advance LAM towards zero-defect autonomous operations, focusing on real-time closed-loop feedback control and defect correction methods. Research gaps such as the need for standardization, improved reliability and sensitivity, and decision-making strategies beyond early stopping are highlighted. Future directions are proposed, with an emphasis on multimodal sensor fusion for multiscale defect prediction and fault diagnosis, ultimately enabling self-adaptation in LAM processes. This paper aims to equip researchers and industry professionals with a holistic understanding of the current capabilities, limitations, and future directions in in-situ process monitoring and adaptive quality enhancement in LAM.

Multimodal sensor fusion for real-time location-dependent defect detection in laser-directed energy deposition

May 23, 2023

Abstract: Real-time defect detection is crucial in laser-directed energy deposition (L-DED) additive manufacturing (AM). The traditional in-situ monitoring approach uses a single sensor (e.g., an acoustic, visual, or thermal sensor) to capture the complex process dynamics, which is insufficient for accurate and robust defect detection. This paper proposes a novel multimodal sensor fusion method for real-time location-dependent defect detection in the robotic L-DED process. The multimodal fusion sources include a microphone capturing the laser-material interaction sound and a visible-spectrum CCD camera capturing coaxial melt pool images. A hybrid convolutional neural network (CNN) is proposed to fuse the acoustic and visual data. The key novelty of this study is that the traditional manual feature extraction procedures are no longer required: the raw melt pool images and acoustic signals are fused directly by the hybrid CNN model, which achieved the highest defect prediction accuracy (98.5%) without the thermal sensing modality. Moreover, unlike previous region-based quality prediction, the proposed hybrid CNN can detect the onset of defect occurrences. The defect prediction outcomes are synchronized and registered with in-situ acquired robot tool-center-point (TCP) data, which enables localized defect identification. The proposed multimodal sensor fusion method offers a robust solution for in-situ defect detection.
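The registration of defect predictions with TCP data described in the abstract above could look roughly like the following sketch. It is not the authors' implementation: it assumes both streams carry timestamps on a shared clock, and the function names, the linear interpolation, the sample values, and the labels `"defect-free"` and `"crack"` are all illustrative assumptions.

```python
from bisect import bisect_left

def interpolate_tcp(tcp_log, t):
    """Linearly interpolate the TCP position at time t.

    tcp_log: list of (timestamp_s, (x, y, z)) sorted by timestamp.
    Times outside the log are clamped to the first or last sample.
    """
    times = [ts for ts, _ in tcp_log]
    i = bisect_left(times, t)
    if i == 0:
        return tcp_log[0][1]
    if i == len(tcp_log):
        return tcp_log[-1][1]
    (t0, p0), (t1, p1) = tcp_log[i - 1], tcp_log[i]
    w = (t - t0) / (t1 - t0)  # fraction of the way from sample i-1 to sample i
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))

def register_predictions(tcp_log, predictions):
    """Attach an interpolated TCP position to each (timestamp_s, label) prediction."""
    return [(interpolate_tcp(tcp_log, t), label) for t, label in predictions]

# Hypothetical data: a TCP log in millimetres and two classifier outputs.
tcp_log = [
    (0.0, (0.0, 0.0, 0.0)),
    (1.0, (10.0, 0.0, 0.0)),
    (2.0, (10.0, 5.0, 0.0)),
]
predictions = [(0.5, "defect-free"), (1.5, "crack")]

located = register_predictions(tcp_log, predictions)
for position, label in located:
    print(position, label)
```

Each prediction ends up paired with a position along the toolpath, which is the minimum needed to mark a defect at a location rather than only at a time.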

Multisensor fusion-based digital twin in additive manufacturing for in-situ quality monitoring and defect correction

Apr 12, 2023

Abstract: Early detection and correction of defects are critical in additive manufacturing (AM) to avoid build failures. In this paper, we present a multisensor fusion-based digital twin for in-situ quality monitoring and defect correction in a robotic laser directed energy deposition process. Multisensor fusion sources consist of an acoustic sensor, an infrared thermal camera, a coaxial vision camera, and a laser line scanner. The key novelty and contribution of this work is a spatiotemporal data fusion method that synchronizes and registers the multisensor features within the part's 3D volume. The fused dataset can be used to predict location-specific quality using machine learning. On-the-fly identification of regions requiring material addition or removal is feasible. Robot toolpaths and auto-tuned process parameters are generated for defect correction. In contrast to traditional single-sensor-based monitoring, multisensor fusion allows for a more in-depth understanding of underlying process physics, such as pore formation and laser-material interactions. The proposed methods pave the way for self-adaptive AM with higher efficiency, less waste, and cleaner production.
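One simple way to picture registering multisensor features within a part's 3D volume is to bin position-tagged features into a voxel grid and average them per sensor. The sketch below illustrates only that idea, under stated assumptions: the abstract does not say a voxel grid is used, and the voxel size, the feature names (`acoustic_rms`, `melt_pool_temp_C`), and the sample values are hypothetical.

```python
from collections import defaultdict

def voxel_index(point, origin, voxel_size):
    """Map an (x, y, z) point to the integer index of the voxel containing it."""
    return tuple(int((p - o) // voxel_size) for p, o in zip(point, origin))

def fuse_into_voxels(samples, origin=(0.0, 0.0, 0.0), voxel_size=1.0):
    """Average features per voxel and per sensor.

    samples: iterable of (position_xyz, feature_name, value), where each
    position is assumed to be already synchronized with the robot pose.
    Returns {voxel_index: {feature_name: mean_value}}.
    """
    sums = defaultdict(lambda: defaultdict(lambda: [0.0, 0]))
    for position, feature, value in samples:
        acc = sums[voxel_index(position, origin, voxel_size)][feature]
        acc[0] += value  # running total
        acc[1] += 1      # sample count
    return {
        voxel: {name: total / count for name, (total, count) in features.items()}
        for voxel, features in sums.items()
    }

# Hypothetical position-tagged features from two sensors (positions in mm).
samples = [
    ((0.2, 0.1, 0.0), "acoustic_rms", 0.4),
    ((0.8, 0.4, 0.0), "acoustic_rms", 0.6),
    ((0.5, 0.5, 0.0), "melt_pool_temp_C", 1650.0),
    ((1.3, 0.2, 0.0), "melt_pool_temp_C", 1700.0),
]

fused = fuse_into_voxels(samples)
for voxel, features in sorted(fused.items()):
    print(voxel, features)
```

The resulting per-voxel feature dictionary is the kind of location-indexed table a machine learning model could consume to predict quality at each location.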

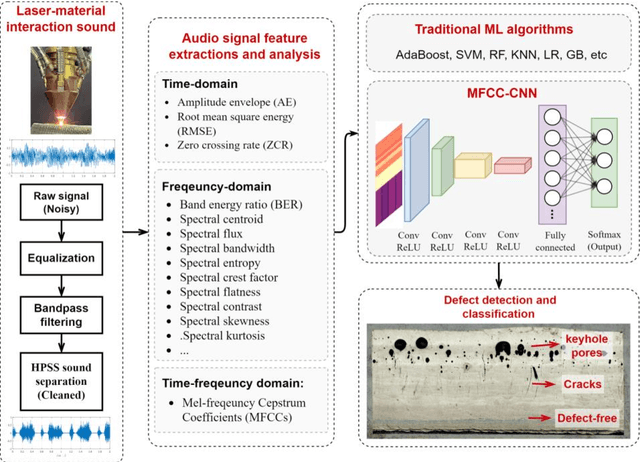

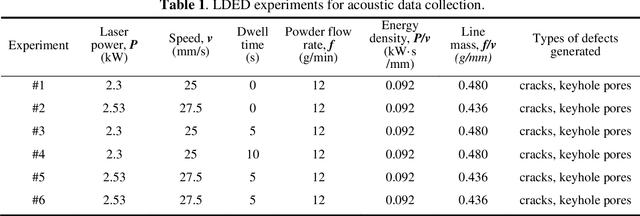

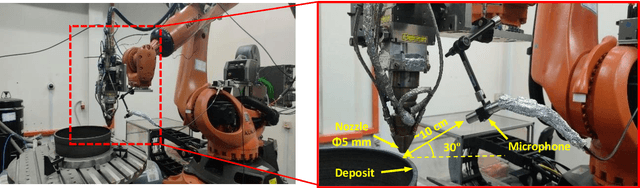

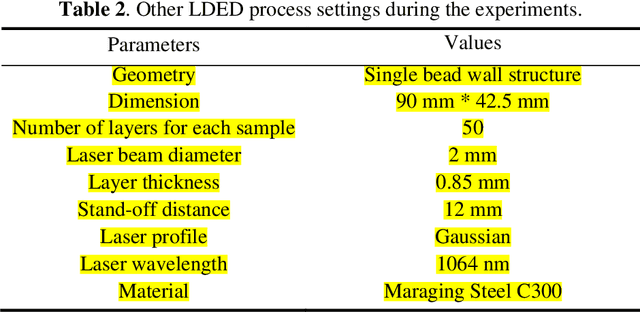

In-situ crack and keyhole pore detection in laser directed energy deposition through acoustic signal and deep learning

Apr 10, 2023

Abstract: Cracks and keyhole pores are detrimental defects in alloys produced by laser directed energy deposition (LDED). Laser-material interaction sound may hold information about underlying complex physical events such as crack propagation and pore formation. However, due to the noisy environment and intricate signal content, acoustic-based monitoring in LDED has received little attention. This paper proposes a novel acoustic-based in-situ defect detection strategy in LDED. The key contribution of this study is an in-situ acoustic signal denoising, feature extraction, and sound classification pipeline that incorporates convolutional neural networks (CNN) for online defect prediction. Microscope images are used to identify the locations of cracks and keyhole pores within a part. The defect locations are spatiotemporally registered with the acoustic signal. Various acoustic features corresponding to defect-free regions, cracks, and keyhole pores are extracted and analysed in time-domain, frequency-domain, and time-frequency representations. The CNN model is trained to predict defect occurrences using the Mel-Frequency Cepstral Coefficients (MFCCs) of the laser-material interaction sound. The CNN model is compared to various classic machine learning models trained on the denoised acoustic dataset and the raw acoustic dataset. The validation results show that the CNN model trained on the denoised dataset outperforms the others, with the highest overall accuracy (89%), keyhole pore prediction accuracy (93%), and AUC-ROC score (98%). Furthermore, the trained CNN model can be deployed into an in-house developed software platform for online quality monitoring. The proposed strategy is the first study to use acoustic signals with deep learning for in-situ defect detection in the LDED process.
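For readers unfamiliar with MFCCs, the sketch below computes them for a single audio frame using the textbook recipe: window, power spectrum, triangular mel filterbank, log, then DCT-II. It is a generic illustration, not the paper's pipeline: the frame length, sample rate, filter count, number of coefficients, and the unnormalised DCT are assumptions, and audio libraries differ in these details. A naive DFT keeps the example dependency-free at the cost of speed.

```python
import cmath
import math

def hz_to_mel(f):
    """Common mel-scale formula (O'Shaughnessy variant)."""
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def power_spectrum(frame):
    """One-sided power spectrum of a Hamming-windowed frame (naive O(N^2) DFT)."""
    n = len(frame)
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    spectrum = []
    for k in range(n // 2 + 1):
        s = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(windowed))
        spectrum.append(abs(s) ** 2 / n)
    return spectrum

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters whose edges are equally spaced on the mel scale."""
    top = hz_to_mel(sample_rate / 2)
    edges_hz = [mel_to_hz(top * i / (n_filters + 1)) for i in range(n_filters + 2)]
    bins = [int(math.floor((n_fft + 1) * f / sample_rate)) for f in edges_hz]
    bank = []
    for j in range(1, n_filters + 1):
        lo, mid, hi = bins[j - 1], bins[j], bins[j + 1]
        row = [0.0] * (n_fft // 2 + 1)
        for k in range(lo, mid):   # rising slope
            row[k] = (k - lo) / (mid - lo)
        for k in range(mid, hi):   # falling slope
            row[k] = (hi - k) / (hi - mid)
        bank.append(row)
    return bank

def mfcc(frame, sample_rate, n_filters=20, n_coeffs=13):
    """MFCCs of one frame: log mel-filterbank energies followed by a DCT-II."""
    spec = power_spectrum(frame)
    bank = mel_filterbank(n_filters, len(frame), sample_rate)
    # Small constant avoids log(0) for filters that capture no energy.
    log_energy = [math.log(sum(w * p for w, p in zip(row, spec)) + 1e-10)
                  for row in bank]
    return [sum(e * math.cos(math.pi * c * (j + 0.5) / n_filters)
                for j, e in enumerate(log_energy))
            for c in range(n_coeffs)]

# Hypothetical input: a 256-sample frame of a 1 kHz tone sampled at 16 kHz.
sample_rate = 16000
frame = [math.sin(2 * math.pi * 1000 * i / sample_rate) for i in range(256)]
coeffs = mfcc(frame, sample_rate)
print(len(coeffs))
```

Stacking such coefficient vectors over consecutive frames gives a 2D time-by-coefficient array, which is the usual form in which MFCCs are passed to a CNN.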
